MLOps Pipeline Optimization: A Complete Guide

Getting a machine learning (ML) model from a data scientist's laptop into a live production environment is often a slow, manual, and frustrating process. Teams work in silos, hand-offs are messy, and technical debt piles up, slowing innovation to a crawl. An MLOps pipeline is designed to solve this by automating the entire workflow. But just having a pipeline isn't enough. To truly accelerate your AI initiatives, you need to focus on MLOps pipeline optimization. This means making your automated processes as efficient and reliable as possible. We’ll show you how to identify bottlenecks and streamline each stage for faster deployments and better models.

Key takeaways

- Optimize the entire ML lifecycle, not just one part: A successful MLOps pipeline is a continuous system. Focus on streamlining every stage, from data preparation to production monitoring, to build a reliable and repeatable process that accelerates development.

- Use automation to build speed and reliability: Implement CI/CD for your models and data, automate testing and validation, and set up triggers for model retraining. This reduces manual errors and frees up your team to focus on building better models, not just maintaining them.

- Track key metrics to guide your strategy: To know if your optimizations are working, you need data. Monitor KPIs like deployment frequency and lead time for changes to pinpoint bottlenecks and create a feedback loop for continuous improvement.

What is an MLOps pipeline (and why does optimization matter)?

Think of an MLOps pipeline as the automated assembly line for your ML models. It’s the end-to-end process that takes your model from a concept on a data scientist's laptop to a fully functional, monitored application in production. Without a solid pipeline, you’re left with a manual, error-prone process that slows down innovation and creates a ton of headaches for your team. A well-structured pipeline, on the other hand, brings order to the chaos.

At its core, an MLOps pipeline automates the ML lifecycle, covering everything from data preparation and validation to model training, deployment, and ongoing monitoring. If you’re familiar with DevOps, the concept will feel similar. MLOps applies many of the same CI/CD principles to ML, treating models and data as first-class citizens in your software development process. The goal is to create a repeatable, reliable, and efficient system for getting models into the hands of users.

So, why is optimization so critical? An unoptimized pipeline is like an assembly line with constant bottlenecks. It might work, but it’s slow, costly, and fragile. Optimizing your pipeline is about making it faster, more reliable, and scalable. It helps you minimize the "technical debt" that accumulates from manual workarounds and inconsistent processes. By streamlining each step—from how you manage data to how you deploy models—you reduce the risk of errors, accelerate your time-to-market, and free up your team to focus on building better models instead of just keeping the lights on. It’s the difference between having a cool AI prototype and running a successful, production-grade AI initiative.


The core components of an MLOps pipeline

An MLOps pipeline is a structured process that takes your model from a raw idea all the way to a production-ready tool that delivers real value. By automating the entire ML lifecycle, you create a system that is faster, more efficient, and far more reliable than manual workflows. This structure is what allows teams to move from slow, one-off projects to a continuous cycle of innovation and improvement.

The pipeline is generally broken down into a few key stages, each with its own set of tasks and goals. It starts with getting your data in order, moves into the creative phase of building and training your model, and then pushes that model out into the world where it can be used. The final, and arguably most important, stage is to watch how it performs and create a feedback loop for continuous improvement. Understanding these core components is the first step to optimizing your entire process. A comprehensive solution can manage these interconnected stages, ensuring that each part of your pipeline works in harmony with the others to drive success.

1. Data preparation and management

Everything in ML starts with data. This initial stage is all about gathering raw data from various sources and getting it ready for your model. It’s not the most glamorous part of the process, but it’s the most critical—your model will only ever be as good as the data it’s trained on. This phase involves cleaning the data to remove errors or inconsistencies, transforming it into a usable format, and labeling it so the model can understand what it’s looking at. Proper data management also includes versioning, so you can track changes and ensure your experiments are reproducible. Getting this foundation right prevents major headaches down the line.

2. Model development and training

Once your data is clean and ready, you can move on to the development and training phase. This is where your data scientists get to work their magic. They experiment with different algorithms, select the best features from your dataset, and train the model to recognize patterns. This stage is highly iterative; it involves training a model, evaluating its performance, tweaking its parameters, and training it again until it meets your desired accuracy. The goal is to produce a validated model that is ready for the next step: deployment. The entire MLOps process is designed to make this experimental phase as efficient and repeatable as possible.


3. Model deployment and serving

A perfectly trained model sitting on a developer's laptop doesn't provide any business value. The deployment and serving stage is where your model gets put to work. Deployment involves integrating the trained model into a live production environment, like a mobile app or a company website, so it can start making predictions on new, real-world data. "Serving" is the process of making those predictions available to end-users quickly and reliably. This component is all about operationalizing your model, ensuring it's scalable, stable, and can handle the demands of a production workload. This is the step that turns your AI project into a tangible business asset.

4. Monitoring and feedback loop

The work isn’t over once a model is deployed. The world is constantly changing, and so is the data that flows into your model. Continuous monitoring is essential for tracking your model's performance over time and watching for issues like "model drift," which happens when a model's accuracy degrades because the new data it's seeing is different from its training data. By setting up a strong monitoring and feedback loop, you can catch these issues early. The insights you gather here feed back into the beginning of the pipeline, signaling when it's time to retrain the model with fresh data and start the cycle all over again.

How to optimize each part of your pipeline

Optimizing your MLOps pipeline isn't about one big fix. It’s about making targeted improvements at every stage, from the moment you touch your data to long after your model is live. A truly efficient pipeline is a well-oiled machine where each component works seamlessly with the next. By breaking the process down, you can identify specific bottlenecks and apply the right strategies to create a faster, more reliable, and scalable system. This approach turns optimization from a daunting task into a series of manageable steps, each one adding value and strengthening your overall AI initiative. Let's look at how you can refine each core part of your pipeline.

Manage data quality and versioning

Your model is only as good as the data it’s trained on, which is why optimization starts here. The key is to treat your data and features with the same rigor as your code. This means implementing robust version control for all your ML assets, including datasets, schemas, and feature engineering scripts. Versioning allows your team to reproduce experiments, audit changes, and quickly roll back to a previous state if something goes wrong. A solid MLOps architecture includes dedicated steps for data preparation and validation, ensuring that only high-quality, consistent data enters your training workflow. This prevents the classic "garbage in, garbage out" problem and builds a trustworthy foundation for your entire pipeline.
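To make "treat your data like code" concrete, here is a minimal sketch of two checks a data-preparation step might run: validating each incoming batch against a declared schema, and computing a deterministic fingerprint that can serve as the dataset's version ID. The schema format, the `TYPE_MAP` table, and the function names are hypothetical illustrations, not part of any specific data-versioning tool; dedicated tools add storage, lineage, and much richer checks.

```python
import hashlib
import json

# Hypothetical schema format: column name -> expected type name.
TYPE_MAP = {"int": (int,), "float": (int, float), "str": (str,)}


def validate_rows(rows, schema):
    """Return a list of problems; an empty list means the batch passed."""
    problems = []
    for i, row in enumerate(rows):
        for col, type_name in schema.items():
            if col not in row:
                problems.append(f"row {i}: missing column '{col}'")
            elif not isinstance(row[col], TYPE_MAP[type_name]):
                problems.append(f"row {i}: column '{col}' is not {type_name}")
    return problems


def fingerprint(rows, schema):
    """Deterministic SHA-256 over schema + rows: identical data yields an identical ID."""
    payload = json.dumps({"schema": schema, "rows": rows}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


schema = {"user_id": "int", "spend": "float", "country": "str"}
batch = [
    {"user_id": 1, "spend": 12.5, "country": "US"},
    {"user_id": 2, "spend": 3, "country": "DE"},
]

problems = validate_rows(batch, schema)
if problems:
    raise ValueError(problems)  # stop bad data before it reaches training
version_id = fingerprint(batch, schema)
print(f"dataset version {version_id[:12]}")  # log this next to the experiment run
```

Because keys are sorted before hashing, the same rows always produce the same ID regardless of column order, so an experiment logged with `version_id` can be traced back to exactly the data it used.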

Develop and train models efficiently

Once your data is in order, the focus shifts to building and training your model without wasting time or resources. The goal here is automation. A mature MLOps process automates the training pipeline so it can be triggered by events like a new code commit or the arrival of a new batch of data. This eliminates manual hand-offs and reduces the risk of human error. By automating the repetitive tasks of training and validation, you free up your data scientists to concentrate on what they do best: experimenting with different architectures, tuning hyperparameters, and creating more powerful models. This efficiency accelerates the development cycle and gets better models into production faster.
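The event-driven trigger described above can be sketched as a small dispatcher that decides whether an incoming event should start a training run. The event types, the `TRAINING_PATHS` prefixes, and the `MIN_NEW_ROWS` threshold are hypothetical values chosen for illustration; in practice this logic usually lives in your CI system or workflow orchestrator.

```python
from dataclasses import dataclass, field

# Hypothetical settings: which code paths affect the model, and how much
# new data justifies a fresh training run.
TRAINING_PATHS = ("features/", "training/")
MIN_NEW_ROWS = 10_000


@dataclass
class PipelineEvent:
    kind: str                                   # "code_commit" or "data_arrival"
    changed_paths: list = field(default_factory=list)
    new_rows: int = 0


def should_train(event):
    if event.kind == "code_commit":
        # Only commits that touch feature or training code need a retrain.
        return any(path.startswith(TRAINING_PATHS) for path in event.changed_paths)
    if event.kind == "data_arrival":
        return event.new_rows >= MIN_NEW_ROWS
    return False


def handle(event):
    if not should_train(event):
        return "skipped"
    # Placeholder: a real pipeline would submit a job to its orchestrator here.
    return f"training started ({event.kind})"


print(handle(PipelineEvent("code_commit", changed_paths=["training/train.py"])))
print(handle(PipelineEvent("data_arrival", new_rows=500)))
```

Filtering events this way keeps the automation from burning compute on commits (like documentation changes) that cannot affect the model.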

Streamline model deployment and serving

A trained model only creates business value once it is running in production. The deployment stage is where your model goes live and starts doing its job. Optimization here is about making this transition smooth, repeatable, and safe. An effective pipeline automates the release itself: it packages the model, tests it in a staging environment, and deploys it to production with minimal manual intervention. This often involves strategies like canary releases or A/B testing, which roll a new model out gradually. A clear, automated path to production not only increases speed and efficiency but also makes the entire process more reliable and less stressful for your team.
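A canary release can be sketched in a few lines: hash each user ID into one of 100 buckets so a stable slice of users sees the new ("candidate") model, then widen that slice step by step while the candidate stays healthy. The `ROLLOUT_STEPS` schedule, the error-rate comparison, and the `tolerance` value are hypothetical choices for illustration; serving platforms and service meshes typically provide their own traffic-splitting mechanisms.

```python
import hashlib

# Hypothetical rollout schedule: percent of traffic sent to the new model at each stage.
ROLLOUT_STEPS = [1, 5, 25, 50, 100]


def route(user_id, canary_percent):
    """Pick a model version for this user.

    Hashing the user ID (instead of choosing randomly per request) keeps each
    user on the same version for the whole rollout.
    """
    bucket = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 100
    return "candidate" if bucket < canary_percent else "stable"


def next_canary_percent(current, candidate_error, stable_error, tolerance=0.01):
    """Advance the rollout if the candidate is healthy; otherwise roll back to 0%."""
    if candidate_error > stable_error + tolerance:
        return 0
    later = [step for step in ROLLOUT_STEPS if step > current]
    return later[0] if later else current


print(route("user-42", canary_percent=5))
print(next_canary_percent(5, candidate_error=0.021, stable_error=0.020))
```

Rolling back is just setting the percentage to zero, which is what makes gradual rollouts so much safer than a one-shot swap.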

Improve monitoring and feedback loops

Deployment isn't the finish line. Once a model is in production, you need to watch it closely to ensure it continues to perform as expected. Continuous monitoring is critical to detect issues like model drift, which happens when a model's accuracy degrades over time as real-world data changes. By tracking key performance metrics, data drift, and system health, you can catch problems early. This monitoring data creates a vital feedback loop. When performance dips below a certain threshold, it can automatically trigger an alert or even kick off a retraining pipeline with new data, ensuring your model stays relevant and effective over its entire lifespan.
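One common way to quantify data drift for a single feature is the Population Stability Index (PSI), which compares how production values are distributed across bins relative to the training data. The sketch below computes PSI with equal-width bins taken from the training sample and flags a feature when the score crosses 0.25. That threshold is a widely used rule of thumb rather than a standard, and the function names are hypothetical; tune bins and thresholds for your own data.

```python
import math

PSI_ALERT = 0.25  # common rule of thumb for "significant" drift, not a standard


def psi(expected, actual, bins=10):
    """Population Stability Index of `actual` (production) vs `expected` (training)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Bin edges come from the training data; out-of-range values land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_check(feature, train_values, live_values):
    score = psi(train_values, live_values)
    if score >= PSI_ALERT:
        return f"ALERT {feature}: PSI={score:.2f}, trigger retraining"
    return f"ok {feature}: PSI={score:.2f}"


train = [i / 100 for i in range(1000)]
live = [x + 5 for x in train]  # simulated shift in production data
print(drift_check("spend", train, live))
```

In a real pipeline, an alert like this would be wired to your monitoring system or to the retraining trigger rather than printed.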

Find the right tools for MLOps optimization

Choosing the right tools is a critical step in optimizing your MLOps pipeline, but the sheer number of options can feel overwhelming. The best stack for you depends entirely on your team’s specific needs, your existing infrastructure, and what you’re trying to achieve. Think of it less like finding a single magic bullet and more like assembling the perfect toolkit for your unique projects. Some teams prefer to piece together individual open-source components for maximum flexibility, while others need a comprehensive solution that handles everything. Let's walk through the main categories of tools so you can find the right fit for your team.

Open-source frameworks and platforms

Open-source tools are a popular choice for teams that want granular control over their MLOps pipeline. Frameworks like MLflow for experiment tracking or Kubeflow for orchestration give you powerful building blocks to create a custom environment. The biggest advantage here is flexibility—you can pick and choose components to fit your exact workflow. These tools often have strong community support, which means you can find plenty of documentation and help from other developers. The trade-off is that they require more in-house expertise to integrate, manage, and maintain. This approach is best for teams that have the engineering resources to build and support a bespoke MLOps stack.

Specialized MLOps tools

Instead of a single platform, you can also assemble a pipeline using specialized tools that excel at one particular job. For example, you might use a dedicated tool for data versioning, another for feature storage, and a third for production model monitoring. This approach allows you to select the best-in-class solution for each stage of the ML lifecycle. A robust MLOps architecture often combines these specialized tools to create a powerful, customized pipeline. This is a great middle-ground option if you want more capability than a single open-source framework offers but don’t want to commit to a fully managed platform.

Comprehensive MLOps solutions

If your goal is to move fast and focus on building models rather than managing infrastructure, a comprehensive MLOps solution might be your best bet. These platforms manage the entire stack for you—from the underlying compute resources to pre-built project components. They streamline the whole ML lifecycle by integrating best-in-class open-source elements into a single, cohesive environment. This approach helps you get to production faster and reduces the engineering overhead needed to maintain your pipeline. For businesses looking to accelerate their AI initiatives, a managed solution provides a clear path to deploying and scaling projects efficiently.

Measure your optimization success

Optimizing your MLOps pipeline is a great goal, but how do you know if your efforts are actually working? You can’t rely on gut feelings. To truly understand the impact of your changes, you need to measure them. Tracking the right metrics gives you concrete proof of what’s improving, what isn’t, and where to focus your attention next. This data-driven approach not only helps you refine your processes but also makes it easier to demonstrate the value of your work to the rest of the organization. By establishing a clear measurement framework, you turn optimization from a guessing game into a deliberate strategy for building more efficient and reliable AI systems.

Define your key performance indicators (KPIs)

Before you get lost in the weeds of specific metrics, it’s helpful to start with a few high-level Key Performance Indicators (KPIs). These are the vital signs of your MLOps health. A great place to start is with the four key metrics popularized in the book Accelerate, which have become a standard for measuring software delivery performance. They carry over naturally to ML systems and include:

- Deployment frequency: How often are you successfully deploying models to production? Higher frequency often indicates a more agile and automated process.

- Lead time for changes: How long does it take to go from a code commit to a successful deployment? A shorter lead time means you can deliver value faster.

- Change failure rate: What percentage of your deployments result in a failure that requires a fix? A low rate points to a stable and reliable pipeline.

- Mean time to restore (MTTR): When a failure occurs, how long does it take to recover? This measures your team’s ability to respond to and resolve issues quickly.
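Once you record a few timestamps per deployment, all four KPIs can be computed directly. The sketch below uses a hypothetical `Deployment` record and a made-up four-deployment history. One simplification to note: it treats the deployment time as the start of an outage, whereas a real incident tracker would record when the failure actually began.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean, median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a failure needing a fix?
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def _hours(delta):
    return delta.total_seconds() / 3600


def delivery_metrics(deploys, period_days):
    lead_times = [_hours(d.deployed_at - d.committed_at) for d in deploys]
    failures = [d for d in deploys if d.failed]
    # Simplification: treat the deploy time as the start of the outage.
    restore_times = [_hours(d.restored_at - d.deployed_at) for d in failures if d.restored_at]
    return {
        "deploys_per_week": len(deploys) * 7 / period_days,
        "median_lead_time_hours": median(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        "mttr_hours": mean(restore_times) if restore_times else 0.0,
    }


# Made-up two-week history, for illustration only.
t0 = datetime(2024, 1, 1)
h, d = timedelta(hours=1), timedelta(days=1)
history = [
    Deployment(t0, t0 + 2 * h),
    Deployment(t0 + d, t0 + d + 4 * h, failed=True, restored_at=t0 + d + 5 * h),
    Deployment(t0 + 2 * d, t0 + 2 * d + 6 * h),
    Deployment(t0 + 3 * d, t0 + 3 * d + 8 * h, failed=True, restored_at=t0 + 3 * d + 11 * h),
]
metrics = delivery_metrics(history, period_days=14)
print(metrics)
```

Tracking these numbers over time, rather than as one-off snapshots, is what reveals whether an optimization actually moved the needle.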

Set metrics for each pipeline stage

While KPIs give you a bird's-eye view, you also need more granular metrics to diagnose issues within specific parts of your pipeline. By tracking metrics at each stage, you can pinpoint bottlenecks and opportunities for improvement. Think about organizing your metrics into a few key categories to get a complete picture of the MLOps lifecycle.

Start with model performance, tracking classics like accuracy, precision, and recall. But don't stop there; also monitor for performance degradation and data drift over time. Next, look at operational metrics, such as deployment time and mean time to detection (MTTD), which tells you how quickly you can spot a problem. Finally, measure resource efficiency by tracking compute utilization and cost per prediction to ensure your pipeline is not only effective but also financially sustainable.
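As a small illustration of the model-performance and resource-efficiency categories, the sketch below computes accuracy, precision, and recall for a binary classifier, plus cost per prediction. The function names and the sample labels are hypothetical; libraries such as scikit-learn provide well-tested versions of the classification metrics.

```python
def model_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for a binary classifier (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def cost_per_prediction(compute_cost_usd, predictions_served):
    """Resource-efficiency metric: spend divided by the predictions it paid for."""
    return compute_cost_usd / predictions_served if predictions_served else float("inf")


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
print(model_metrics(y_true, y_pred))
print(cost_per_prediction(compute_cost_usd=12.0, predictions_served=48_000))
```

Logging these per model version and per day lets you spot gradual degradation that a single evaluation run would miss.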

Create a strategy for continuous improvement

Your metrics are only useful if you act on them. The ultimate goal is to create a feedback loop where data from your pipeline informs an ongoing strategy for improvement. This isn't a one-time fix; it's a commitment to getting better over time. A cornerstone of this strategy is continuous monitoring. This is critical for detecting subtle issues like model drift, where a model’s performance slowly degrades as it encounters new, real-world data it wasn’t trained on.

Use your metrics to identify the biggest bottlenecks and then target them with automation. For example, if your lead time for changes is high because of slow manual testing, that’s a clear signal to automate your validation processes. By consistently monitoring your KPIs and pipeline metrics, you can make informed decisions that lead to a more resilient, efficient, and scalable MLOps practice.


Tackle common MLOps optimization challenges

Optimizing your MLOps pipeline goes beyond just tweaking algorithms or adding more computing power. The most common hurdles are often human and process-related. Getting your pipeline to run smoothly means getting your teams, your code, and your infrastructure to work together in a scalable and responsible way. Let's walk through some of the biggest challenges you'll face and how you can get ahead of them.

Improve cross-functional collaboration

One of the biggest roadblocks to an efficient MLOps pipeline is a lack of communication between teams. When data scientists, ML engineers, and IT operations work in separate silos, it creates friction, delays, and misunderstandings. The key is to foster a culture where everyone is working toward shared goals. Effective collaboration is vital for the success of the entire ML lifecycle.

Start by establishing clear lines of communication and shared project management tools. Regular stand-ups or check-in meetings where all stakeholders are present can prevent small issues from becoming major blockers. When everyone understands their role and how it connects to the larger project, you can move from data to delivery much faster.

Manage your technical debt

Technical debt in ML is the implied cost of rework caused by choosing an easy, limited solution now instead of using a better approach that would take longer. It can build up from outdated models, messy code, or inefficient processes. While it might seem faster to take shortcuts in the short term, this debt will eventually slow you down and make your systems fragile.

The goal of MLOps is to streamline the ML lifecycle and minimize this "technical debt." By addressing these issues early and building with best practices from the start, you can avoid major complications down the road. This means regularly refactoring code, documenting processes, and retiring models that are no longer performing well. It’s a continuous effort, but it pays off in creating a more robust and maintainable system.

Address compliance and ethics

As you build and deploy models, you're often working with vast amounts of data, some of which may be sensitive. Ensuring your pipeline is secure and compliant with regulations like GDPR or HIPAA isn't just a good practice—it's a requirement. A data breach or a non-compliant model can have serious legal and financial consequences.

Building a successful MLOps pipeline means integrating governance and security from day one. This involves creating robust frameworks for managing data access, ensuring model fairness, and maintaining audit trails for every decision the model makes. By embedding these ethical and compliance checks directly into your automated pipeline, you can manage data responsibly without slowing down your development cycle.

Scale your infrastructure and processes

What works for one model with a small dataset might completely fall apart when you try to scale to ten models or a million users. A common challenge is building an infrastructure that can grow with your business needs. Without a scalable foundation, you'll hit a ceiling that limits your ability to innovate and deploy new projects.

Design your pipeline for flexibility and scalability from the outset. Automation is your best friend here, as it reduces manual effort and ensures consistency as you grow. By using a comprehensive solution like Cake to manage your compute infrastructure and platform elements, you can focus on building great models instead of maintaining the underlying systems. This allows your team to adapt quickly to changing demands and scale your ML projects efficiently.

Automate your MLOps pipeline

Once you’ve optimized the individual parts of your pipeline, the next step is to connect them with automation. Think of it as the secret to making your MLOps process not just efficient, but also reliable and scalable. Automation reduces the need for manual hand-offs between teams, which is often where errors and delays happen. By automating your workflow, you create a repeatable system that can consistently test, validate, and deploy models with minimal human intervention. This frees up your data scientists and engineers to focus on more strategic work, like developing new models, instead of getting bogged down in routine operational tasks.

An automated pipeline is a game-changer for consistency. Every model, every piece of code, and every dataset goes through the exact same process, ensuring that your production environment is stable and predictable. This is where you move from simply having a process to having a true MLOps engine that drives business value. The goal is to build a system that automatically pushes new models through the pipeline, from code check-in all the way to production deployment and monitoring. With a platform like Cake managing the underlying infrastructure, your team can focus on defining these automation rules rather than building the complex systems that run them.


Automate testing and validation

Manual testing is a major bottleneck in any development cycle, and MLOps is no exception. Automating your testing and validation process ensures that every component of your pipeline is rigorously checked before it moves forward. This isn’t just about testing the model’s code; it’s about validating the data, the model itself, and the infrastructure it runs on. You should implement automated checks for data quality and schema, ensuring new data doesn’t break your pipeline. For the model, you can automate performance validation, check for biases, and ensure it isn’t becoming stale. These MLOps principles are fundamental to building trust in your system and catching issues long before they impact your users.
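A model validation gate can be as simple as a function that returns the reasons a candidate model should not be promoted. The sketch below checks an absolute accuracy floor, a maximum regression against the current production model, and a basic bias check comparing accuracy across groups. All thresholds and the `validation_gate` name are hypothetical; real fairness evaluation usually involves more metrics than an accuracy gap.

```python
def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def validation_gate(y_true, y_pred, groups, baseline_accuracy,
                    min_accuracy=0.80, max_regression=0.01, max_group_gap=0.10):
    """Return reasons to block promotion; an empty list means the model may proceed."""
    failures = []
    acc = accuracy(y_true, y_pred)
    if acc < min_accuracy:
        failures.append(f"accuracy {acc:.3f} is below the {min_accuracy} floor")
    if acc < baseline_accuracy - max_regression:
        failures.append(f"accuracy regressed vs. production ({baseline_accuracy:.3f})")
    # Simple bias check: compare accuracy across groups (e.g. regions or segments).
    per_group = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        per_group[g] = accuracy([y_true[i] for i in idx], [y_pred[i] for i in idx])
    gap = max(per_group.values()) - min(per_group.values())
    if gap > max_group_gap:
        failures.append(f"accuracy gap of {gap:.3f} between groups {per_group}")
    return failures


failures = validation_gate(
    y_true=[1, 0, 1, 0], y_pred=[1, 0, 0, 1],
    groups=["a", "a", "b", "b"], baseline_accuracy=0.90,
)
for reason in failures:
    print("blocked:", reason)
```

Returning a list of reasons, rather than a bare pass/fail, gives the team an actionable message when the pipeline stops a model.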

Implement continuous integration and deployment (CI/CD)

If you have a background in software development, you’re likely familiar with CI/CD. In MLOps, the concept is similar but expanded to include data and models. Continuous Integration (CI) automatically builds and tests every code change your team merges; in MLOps, that also covers data validation scripts and model training code. Continuous Deployment (CD) takes over once tests pass, automatically deploying the new pipeline components or even a fully retrained model into production. Automating this flow from development to deployment dramatically speeds up how quickly you can deliver value and react to new information.
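The core idea of an ML-aware CI/CD run, where code, data, and model checks all gate deployment, can be sketched as a fail-fast stage runner. The stage names and the lambda stand-ins are hypothetical placeholders; in practice each stage would be a job in your CI system that calls real tests.

```python
def run_pipeline(stages):
    """Run (name, check) stages in order and stop at the first failure.

    Deployment is just the last stage, so it only runs if every earlier
    check (code, data, and model) has passed.
    """
    log = []
    for name, check in stages:
        passed = check()
        log.append(f"{name}: {'passed' if passed else 'FAILED'}")
        if not passed:
            return False, log
    return True, log


# Hypothetical stand-ins for real checks; each returns True on success.
stages = [
    ("unit tests", lambda: True),
    ("data validation", lambda: True),
    ("training smoke test", lambda: True),   # e.g. train briefly on a small sample
    ("model evaluation", lambda: False),     # simulate a model that misses its target
    ("deploy to production", lambda: True),
]

ok, log = run_pipeline(stages)
print("\n".join(log))
```

Here the failing evaluation stage stops the run, so the deploy stage never executes.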

Set up automated retraining triggers

Models in the real world aren't static; their performance can degrade as data patterns shift. This is why Continuous Training (CT) is a unique and critical part of MLOps. Instead of waiting for someone to notice a problem, you can set up automated triggers to retrain your model proactively. These triggers can be based on a simple schedule, like retraining every month. A more advanced approach is to trigger retraining when a certain amount of new data has been collected or, even better, when your monitoring system detects a significant drop in model performance. This ensures your models stay fresh, accurate, and relevant without requiring constant manual oversight from your team.
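The three trigger types described above (schedule, new data volume, and performance drop) can be combined into one decision function. This is a minimal sketch with hypothetical thresholds: a 30-day maximum model age, 50,000 new rows, and a 5-point accuracy drop. It checks the most urgent condition first so the logged reason reflects why retraining really started.

```python
from datetime import datetime, timedelta


def retraining_reason(last_trained, now, new_rows, live_accuracy, baseline_accuracy,
                      max_age=timedelta(days=30), min_new_rows=50_000, max_drop=0.05):
    """Return why the model should be retrained, or None if no trigger has fired.

    Checks run from most to least urgent: a measured performance drop,
    then a large enough batch of new data, then the fixed schedule.
    """
    if baseline_accuracy - live_accuracy > max_drop:
        return "performance drop"
    if new_rows >= min_new_rows:
        return "new data"
    if now - last_trained >= max_age:
        return "schedule"
    return None


last = datetime(2024, 1, 1)
reason = retraining_reason(last, datetime(2024, 1, 10), new_rows=1_000,
                           live_accuracy=0.90, baseline_accuracy=0.91)
if reason:
    print(f"starting retraining pipeline: {reason}")
```

Note that the performance-drop trigger depends on having ground-truth labels for production predictions; when labels arrive late, drift metrics are often used as a proxy.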

Build a scalable and efficient MLOps pipeline

Building an MLOps pipeline that works for a small proof-of-concept is one thing; creating one that can handle real-world demands is another challenge entirely. A pipeline that isn’t built for scale will eventually buckle under pressure, leading to slow performance, high costs, and frustrated teams. Imagine your model taking too long to generate predictions for users, or your infrastructure costs spiraling out of control during a traffic spike. These are the exact problems a scalable pipeline is designed to prevent.

To avoid these roadblocks, you need to design for scalability and efficiency from day one. This means making smart choices about your infrastructure, training methods, and deployment strategies. It’s about creating a system that is not just robust, but also flexible enough to adapt to changing needs. By focusing on these core areas, you can create a powerful MLOps foundation that not only supports your current projects but also grows alongside your business. This ensures your AI initiatives can deliver consistent, reliable value over the long term, without requiring a complete overhaul every time you launch a new feature or your user base expands.

Leverage cloud resources

Trying to manage your own physical hardware for ML is a full-time job in itself. Instead of getting bogged down with server maintenance and infrastructure management, you can use cloud resources to do the heavy lifting. Cloud platforms provide the flexible, on-demand computing power you need for every stage of the ML lifecycle, from data processing to model training and deployment. A robust MLOps architecture built on the cloud allows your team to focus on what they do best: building and refining models. It gives you access to powerful tools and scalable infrastructure without the massive upfront investment, making it easier to get your projects into production faster.

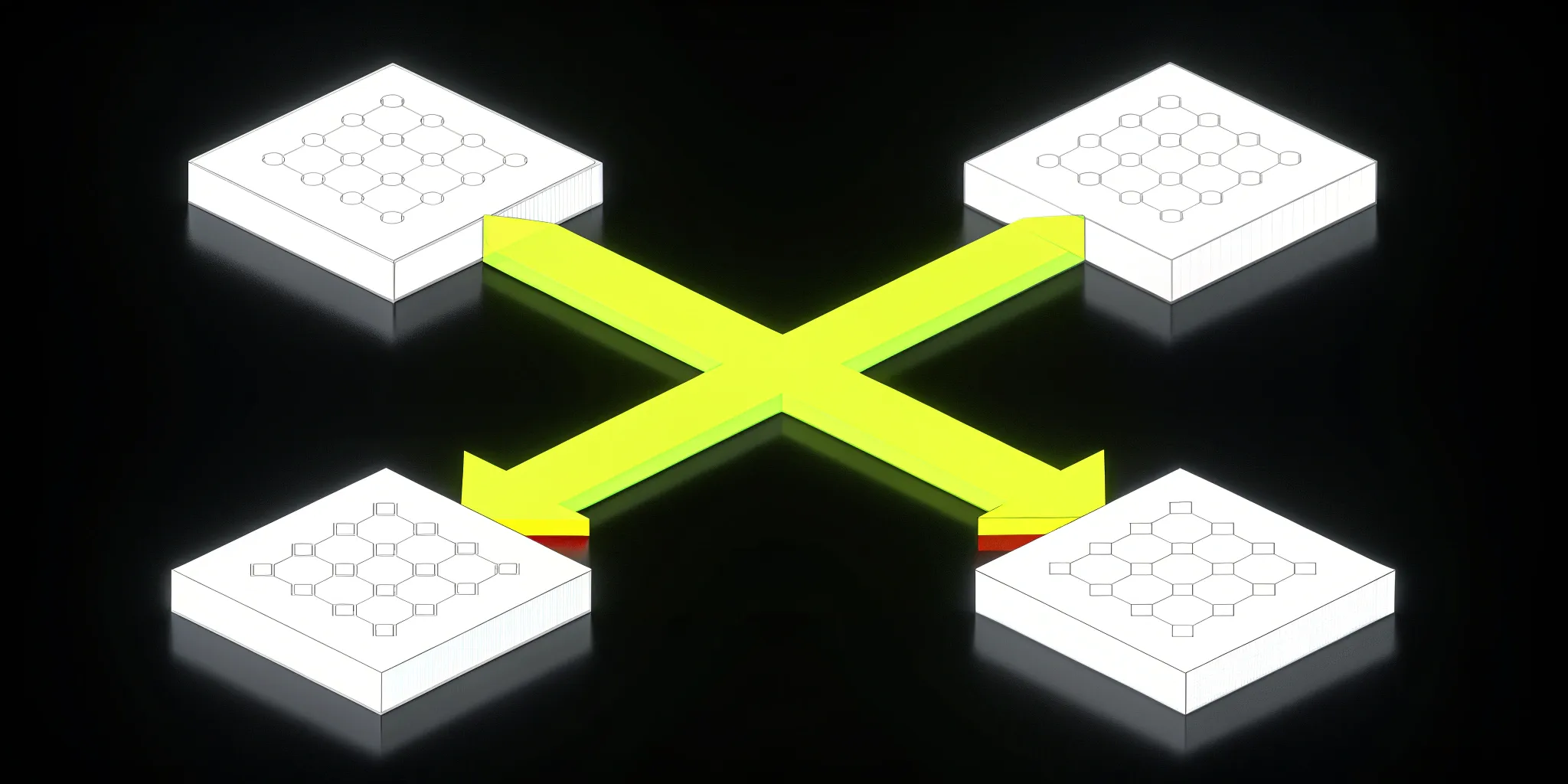

Use distributed training techniques

When you're working with massive datasets or incredibly complex models, training on a single machine can take days or even weeks. Distributed training is a technique that speeds this process up by splitting the workload across multiple machines. Think of it as a "divide and conquer" approach for your model training. This method is one of the core MLOps best practices for achieving efficiency at scale. By parallelizing the training process, you can significantly reduce the time it takes to develop and iterate on your models. This allows your team to experiment more quickly, fine-tune models faster, and ultimately deliver better results in a fraction of the time.
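The most common form of distributed training is data parallelism: each worker computes gradients on its own shard of the data, the gradients are averaged (an "all-reduce"), and every worker applies the same update. The toy sketch below simulates that loop sequentially for a one-variable linear regression so the mechanics are visible. Frameworks such as PyTorch's DistributedDataParallel implement this pattern across real GPUs and machines; the function names and hyperparameters here are illustrative assumptions.

```python
def shard_gradient(w, b, shard):
    """Mean-squared-error gradient of y ≈ w*x + b over one worker's shard."""
    n = len(shard)
    dw = sum(2 * (w * x + b - y) * x for x, y in shard) / n
    db = sum(2 * (w * x + b - y) for x, y in shard) / n
    return dw, db


def train_data_parallel(data, n_workers=4, lr=0.1, steps=500):
    # Split the dataset into one shard per worker (round-robin).
    shards = [data[i::n_workers] for i in range(n_workers)]
    w, b = 0.0, 0.0
    for _ in range(steps):
        # Each worker computes a gradient on its own shard. Simulated sequentially
        # here; a real setup runs these on separate GPUs or machines.
        grads = [shard_gradient(w, b, s) for s in shards]
        # "All-reduce": average the gradients so every worker applies the same update.
        dw = sum(g[0] for g in grads) / n_workers
        db = sum(g[1] for g in grads) / n_workers
        w -= lr * dw
        b -= lr * db
    return w, b


# Toy data generated from y = 3x + 1 (40 points, so 4 equal shards of 10).
data = [(i / 10, 3 * (i / 10) + 1) for i in range(40)]
w, b = train_data_parallel(data)
print(f"w={w:.3f}, b={b:.3f}")
```

With equal-sized shards, the averaged gradient equals the full-batch gradient, so the parallel run follows the same path as single-machine training while each worker touches only a fraction of the data.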

Build a scalable inference infrastructure

Once your model is trained, it needs a reliable home where it can serve predictions—this is called inference. Your inference infrastructure must be able to handle fluctuating demand, whether it's a handful of requests per hour or millions per second. Building a scalable system ensures your application remains fast and responsive for users, even during peak traffic. A well-designed MLOps pipeline includes an inference setup that can automatically scale resources up or down based on real-time needs. This not only provides a better user experience but also helps manage costs by ensuring you only pay for the compute power you actually use.
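At the heart of autoscaling is a simple sizing rule: divide the current load (plus some headroom) by what one replica can serve, round up, and clamp to configured limits. Kubernetes' Horizontal Pod Autoscaler applies a similar proportional rule based on observed metrics. The sketch below assumes you have measured `capacity_per_replica` by load-testing your own model server; the default limits and the 20% headroom are hypothetical.

```python
import math


def desired_replicas(requests_per_sec, capacity_per_replica,
                     min_replicas=1, max_replicas=50, headroom=0.2):
    """Replicas needed for the current load plus spare headroom, clamped to limits.

    capacity_per_replica is the request rate one model server can handle,
    measured by load-testing your own service.
    """
    needed = math.ceil(requests_per_sec * (1 + headroom) / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))


for rps in (0, 1_050, 100_000):
    print(rps, desired_replicas(rps, capacity_per_replica=100))
```

The minimum keeps a warm replica ready for the first request, while the maximum caps spend during an unexpected spike.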

What's next for MLOps pipeline optimization?

As MLOps matures, the focus is shifting from simply getting models into production to building truly intelligent, resilient, and automated systems. The future of optimization isn't about a single tool or trick; it's about evolving your entire approach to be more dynamic and proactive. The goal is to create a system that not only works today but can also adapt to whatever comes next.

One of the biggest trends is a deeper commitment to automation. We're moving toward fully automated data, model, and code pipelines that run in response to events like new data arrivals or code commits. This reduces manual effort and speeds up the cycle from idea to deployment. Hand-in-hand with automation is the growing importance of continuous monitoring. It’s no longer enough to just deploy a model; you have to constantly watch its performance to detect issues like model drift, which happens when a model's accuracy degrades over time as real-world data changes.

This all rests on having a robust MLOps architecture that supports seamless collaboration between your data, engineering, and operations teams. A solid foundation makes everything from data preparation to model monitoring more efficient. To measure the effectiveness of these efforts, many teams are tracking key metrics like deployment frequency and lead time for changes. By focusing on these areas, you can build a pipeline that is not just optimized for today's needs but is also prepared for future challenges and opportunities.


Frequently asked questions

What’s the most important first step to take when optimizing an MLOps pipeline?

Before you dive into tools or code, the best first step is to get a clear picture of your current process. Map out how a model currently moves from an idea to production, even if it's a messy, manual workflow. This helps you identify the most painful bottlenecks. At the same time, define what success looks like by setting a few key metrics, like how long it takes to deploy a change. This gives you a baseline, so you can prove that your optimization efforts are actually making a difference.

How is MLOps really different from the DevOps I already know?

Think of it this way: DevOps focuses on automating the lifecycle of code. MLOps does that too, but it adds two more complex, moving parts to the equation: data and models. Unlike application code, a model's performance can degrade over time as it encounters new real-world data—a problem we call "model drift." This means MLOps requires a continuous feedback loop for monitoring and retraining that simply doesn't exist in traditional DevOps.

Do I need a huge engineering team to build and manage an effective MLOps pipeline?

Not at all. While you can certainly build a complex, custom pipeline with a large team, it's not a requirement for getting started. The goal of many MLOps tools, especially comprehensive platforms, is to handle the heavy lifting of infrastructure and integration for you. This allows a smaller, more focused team to concentrate on building and improving models instead of spending all their time managing the underlying systems.

You mentioned "model drift" a few times. Why is it such a big deal?

Model drift is a big deal because it's a silent killer of AI value. It happens when the world changes, and the data your model sees in production no longer matches the data it was trained on. Imagine a model trained to predict customer behavior before a major market shift—its predictions will become less and less accurate. If you aren't constantly monitoring for drift, you could be making important business decisions based on faulty information without even realizing it.

How do I decide between using individual open-source tools and a comprehensive platform?

The choice comes down to a trade-off between control and speed. Using open-source tools gives you complete control to build a highly customized system, but it requires significant engineering resources to integrate, manage, and maintain all the different parts. A comprehensive platform handles that integration and maintenance for you, providing a streamlined, production-ready environment so you can move much faster. It's a great choice if your main goal is to accelerate your AI initiatives and focus on delivering business value.

About Author

Cake Team
