Your Guide to the Top Open-Source MLOps Tools

Machine learning is a team sport, but it often feels like your data scientists and engineers are playing on different fields. A brilliant model is useless if it can't be deployed, and a deployment pipeline is worthless without a solid model. MLOps provides the shared playbook, but you still need the right equipment to win. The challenge is finding MLOps tools that everyone can actually use. This guide cuts through the noise, highlighting top open-source MLOps options for building a unified workflow that keeps your entire team aligned from experiment to production.

Key takeaways

- MLOps bridges the gap from lab to live: It’s the set of practices that turns an experimental model into a reliable, production-ready application. Without it, even the best models fail to deliver consistent value to your users.

- Choose tools based on your reality, not hype: The best MLOps stack is one that fits your specific project needs, team skills, and existing tech. A thoughtful evaluation of these factors is more important than picking the most popular tool.

- Focus on AI, not just infrastructure: Building a custom open-source stack means you're responsible for integration, maintenance, and security. A managed platform like Cake handles the underlying complexity, freeing up your team to focus on creating value with AI instead of managing tools.

What is MLOps and why is everyone talking about it?

Building a powerful ML model is an exciting first step, but it's only half the battle. The real challenge often lies in getting that model out of the lab and into a live environment where it can deliver real value. This is where MLOps comes in. It provides the framework and practices needed to bridge the gap between development and operations, turning promising models into reliable, production-ready applications that consistently perform for your users.

Breaking down the basics of MLOps

So, what exactly is MLOps? Think of it as the operational backbone for ML. MLOps (short for machine learning operations) is a set of practices designed to deploy and maintain ML models reliably and efficiently. If you know DevOps principles from software development, this will sound familiar: MLOps applies that same focus on automation, collaboration, and iteration to the entire ML lifecycle. It’s all about getting data scientists, ML engineers, and operations teams on the same page to move models from a lab environment into live production smoothly and without friction.


How MLOps can streamline your ML workflow

Building a great model is one thing, but getting it to work consistently for your users is a whole different challenge. This is where MLOps becomes essential. Without a solid MLOps strategy, deploying models can be slow and chaotic, and keeping them running effectively is even harder. MLOps provides the structure needed to manage the entire ML lifecycle, from preparing data to deploying and monitoring the model. It helps you tackle the common MLOps challenges like model drift and integration issues head-on. By implementing MLOps, you can get your models to market faster, improve team collaboration, and ensure your models perform as expected over the long term.

How MLOps extends DevOps

If you’re familiar with DevOps, MLOps will feel like a natural next step. MLOps extends the principles of automation and collaboration from DevOps and applies them to the unique lifecycle of a machine learning model. While DevOps focuses on the software development pipeline, MLOps accounts for the added complexities of ML, where you’re managing not just code, but also data and models. This means creating reproducible workflows that can handle everything from data ingestion and validation to model training, deployment, and ongoing monitoring. It’s about building a bridge between what data scientists create and what operations teams can reliably manage in a live environment.

Continuous training and monitoring

Software code is generally static until a developer updates it, but ML models are different. Their performance can degrade over time as they encounter new, real-world data—a problem known as model drift. MLOps addresses this by incorporating continuous training (CT) and monitoring into the workflow. Instead of a one-and-done deployment, MLOps establishes automated pipelines that constantly monitor a model’s accuracy and retrain it on fresh data when performance dips, ensuring the model stays relevant and effective long after its initial launch.
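
To make the retraining trigger concrete, here is a minimal Python sketch. It assumes you eventually receive ground-truth labels for production predictions, and the `RetrainTrigger` class, its 5-point tolerance, and the window size are hypothetical choices for illustration, not any particular tool's API. A real pipeline would hand the "retrain" signal to an orchestrator instead of just returning a boolean.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class RetrainTrigger:
    """Hypothetical rolling-accuracy monitor that flags when a model should be retrained."""
    baseline_accuracy: float  # accuracy measured when the model was deployed
    tolerance: float = 0.05   # how far accuracy may drop before retraining
    window_size: int = 100    # number of recent labeled predictions to consider
    _window: deque = field(default_factory=deque, init=False)

    def record(self, prediction, label) -> None:
        # Store 1 for a correct prediction and 0 for a miss; drop the oldest entry when full.
        self._window.append(1 if prediction == label else 0)
        if len(self._window) > self.window_size:
            self._window.popleft()

    def rolling_accuracy(self) -> float:
        return sum(self._window) / len(self._window) if self._window else 1.0

    def should_retrain(self) -> bool:
        # Wait for a full window so a few early errors don't trigger a retrain.
        if len(self._window) < self.window_size:
            return False
        return self.rolling_accuracy() < self.baseline_accuracy - self.tolerance


trigger = RetrainTrigger(baseline_accuracy=0.90, window_size=10)
for prediction, label in [(1, 1)] * 7 + [(1, 0)] * 3:
    trigger.record(prediction, label)
print(trigger.rolling_accuracy(), trigger.should_retrain())  # 0.7 True
```

Requiring a full window before the check fires keeps a handful of early misses from kicking off an expensive retraining job.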

Model fairness and explainability

Beyond just performance, MLOps also helps operationalize responsible AI. It’s not enough for a model to be accurate; it also needs to be fair, transparent, and explainable, especially when its decisions impact people. An MLOps framework provides the structure to integrate automated checks for bias and fairness directly into the deployment pipeline. It also facilitates the use of tools that help you interpret why a model made a specific decision, which is crucial for building trust with users and meeting regulatory requirements.
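
One common automated fairness check is demographic parity: comparing how often the model predicts the positive outcome for each group. The sketch below is a hypothetical pipeline gate that blocks promotion when the gap exceeds `max_gap`. The 0.1 threshold is an illustrative assumption, and demographic parity is only one of several fairness definitions you might choose.

```python
def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate (predictions are 0/1) between any two groups."""
    rates = {}
    for group in set(groups):
        group_preds = [p for p, g in zip(predictions, groups) if g == group]
        rates[group] = sum(group_preds) / len(group_preds)
    return max(rates.values()) - min(rates.values())


def fairness_gate(predictions, groups, max_gap=0.1):
    """Hypothetical deployment step: True means the model may be promoted."""
    return demographic_parity_difference(predictions, groups) <= max_gap


preds = [1, 1, 0, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(demographic_parity_difference(preds, groups))  # 0.25
print(fairness_gate(preds, groups, max_gap=0.1))     # False
```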

The growing importance of MLOps in numbers

MLOps is quickly shifting from a "nice-to-have" to a core business necessity. The industry is taking note, with Gartner predicting that by 2026, 70% of organizations will have operationalized AI models using MLOps practices. This rapid adoption isn't just about following a trend; it’s a response to a critical need. Businesses are realizing that without a solid operational framework, their investments in AI often fail to deliver returns. By streamlining the path to production, MLOps ensures that powerful models actually make it into the hands of users. This is why managed platforms like Cake are becoming so valuable—they provide a production-ready MLOps stack, allowing teams to focus on building great AI instead of getting stuck on infrastructure.

Your checklist for choosing an open-source MLOps tool

Choosing the right open-source MLOps tools can feel like a monumental task. The landscape is packed with options, each promising to solve a different piece of the ML puzzle. While having choices is great, it can also lead to analysis paralysis. How do you know which tool is right for your project, your team, and your long-term goals? Making the wrong choice can lead to tangled workflows, integration headaches, and a lot of wasted time. But getting it right can completely transform your process, helping you move from idea to production-ready model faster and more reliably.

Think of this as your guide to sorting through the options. Instead of just picking the most popular tool, it’s about finding the one that fits your specific needs. A tool that works wonders for a small research team might not be the right fit for a large enterprise deploying models at scale. You need to consider the entire ML lifecycle, from initial data exploration to long-term model maintenance in a production environment. The key is to look for a set of tools that not only have the right features but also work well together and with your existing systems. Below are the essential criteria to consider as you evaluate your options and build a tech stack that truly supports your AI initiatives.

Track your experiments and control versions

One of the first things you'll need is a solid way to track your experiments. When you're training dozens or even hundreds of models, it's easy to lose track of which parameters, code versions, and datasets produced which results. A good MLOps tool provides a framework for logging everything automatically. This is crucial for maintaining version control and ensuring reproducibility in your workflows. Think of it as a detailed lab notebook for your ML projects. It allows you to compare runs, share findings with your team, and roll back to a previous version of a model if needed. This systematic approach prevents you from losing valuable work and ensures your results can be verified and built upon in the future.
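
Dedicated trackers such as MLflow handle this for you (MLflow, for instance, exposes `mlflow.log_param` and `mlflow.log_metric`). The standard-library sketch below only illustrates what a useful run record captures: parameters, metrics, the code version, and a fingerprint of the training data. The function names and the JSON-lines file format are assumptions made for this example.

```python
import hashlib
import json
import time


def log_run(log_path, params, metrics, data_bytes, code_version):
    """Append one experiment record to a JSON-lines file and return it."""
    record = {
        "timestamp": time.time(),
        "code_version": code_version,  # e.g. the Git commit SHA of the training code
        "data_sha256": hashlib.sha256(data_bytes).hexdigest(),  # fingerprint of the exact training data
        "params": params,
        "metrics": metrics,
    }
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def best_run(log_path, metric):
    """Load every logged run and return the one with the highest value for `metric`."""
    with open(log_path, encoding="utf-8") as f:
        runs = [json.loads(line) for line in f if line.strip()]
    return max(runs, key=lambda run: run["metrics"][metric])
```

Because each record stores a hash of the data alongside the commit, two runs with the same parameters but different datasets remain distinguishable.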

Make sure it simplifies model deployment

Building a great model is only half the battle; you also need an effective way to get it into the hands of users. This is where model deployment comes in. Look for a tool that simplifies the process of pushing your models to a production environment. Powerful tools for deploying ML models often run on platforms like Kubernetes and should offer features that help you manage the model once it's live. This includes capabilities like performance monitoring to catch issues early, auto-scaling to handle traffic spikes, and A/B testing to compare different model versions. These features are critical for ensuring your models perform reliably and deliver real value in a live setting.
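
A/B testing a new model version usually starts with splitting traffic. A common approach is to hash a stable identifier so each user consistently sees the same model. This is a minimal sketch of that idea; the variant names and the 10% default share are hypothetical, and serving platforms typically let you configure the split instead of writing it yourself.

```python
import hashlib


def assign_variant(user_id: str, treatment_share: float = 0.1) -> str:
    """Deterministically send roughly `treatment_share` of users to model_b, the rest to model_a."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    # Interpret the first 8 hex digits (32 bits) as a number in [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "model_b" if bucket < treatment_share else "model_a"
```

Hashing rather than random sampling means a returning user always lands in the same bucket, which keeps the comparison between models clean.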

Check for seamless integration with your stack

A new tool should feel like a missing puzzle piece, not a wrench thrown into your workflow. Before you commit to an MLOps solution, it's crucial to consider its compatibility with your existing technology stack. Does it connect easily with your data sources, your code repositories, and your cloud infrastructure? A tool that integrates seamlessly with the systems you already use will significantly improve your team's productivity. You want to spend your time building models, not troubleshooting compatibility issues. Look for tools with well-documented APIs and a wide range of pre-built integrations to make the setup process as smooth as possible.


Can it scale with your project?

Your project's needs will likely grow over time. You'll work with larger datasets, build more complex models, and serve more users. The MLOps tools you choose today should be able to handle the demands of tomorrow. Scalability is about ensuring your system can grow with you without a drop in performance. Tools designed to orchestrate complex pipelines, especially in containerized environments like Kubernetes, are often built with scalability in mind. When evaluating a tool, ask yourself if it can efficiently manage increasing workloads. A solution that can't scale will eventually become a bottleneck, slowing down your entire operation.

Prioritize a clean, user-friendly interface

Even the most powerful tool is ineffective if your team finds it difficult to use. A clean, intuitive, and user-friendly interface can make all the difference in how quickly your team adopts a new tool and how effectively they use it. A good UI simplifies complex processes, making it easier to track experiments, visualize results, and monitor production models. This significantly reduces the learning curve for new users and empowers everyone on the team, regardless of their technical depth. When a tool is easy to use, it encourages good practices and helps maintain a clear, organized workflow across all your projects.

Find strong community support and documentation

When you use open-source software, you're not just adopting a tool; you're joining a community. Before you choose a tool, investigate the strength of its community and the quality of its documentation. Is there an active forum or Slack channel where users help each other? Are the official docs clear, comprehensive, and up-to-date? This support system is your lifeline when you run into problems or have questions. Strong community support and clear documentation are vital for troubleshooting issues and making the most of a tool's capabilities. A vibrant community is often a sign of a healthy, well-maintained project you can rely on for the long term.


A breakdown of the best open-source MLOps tools

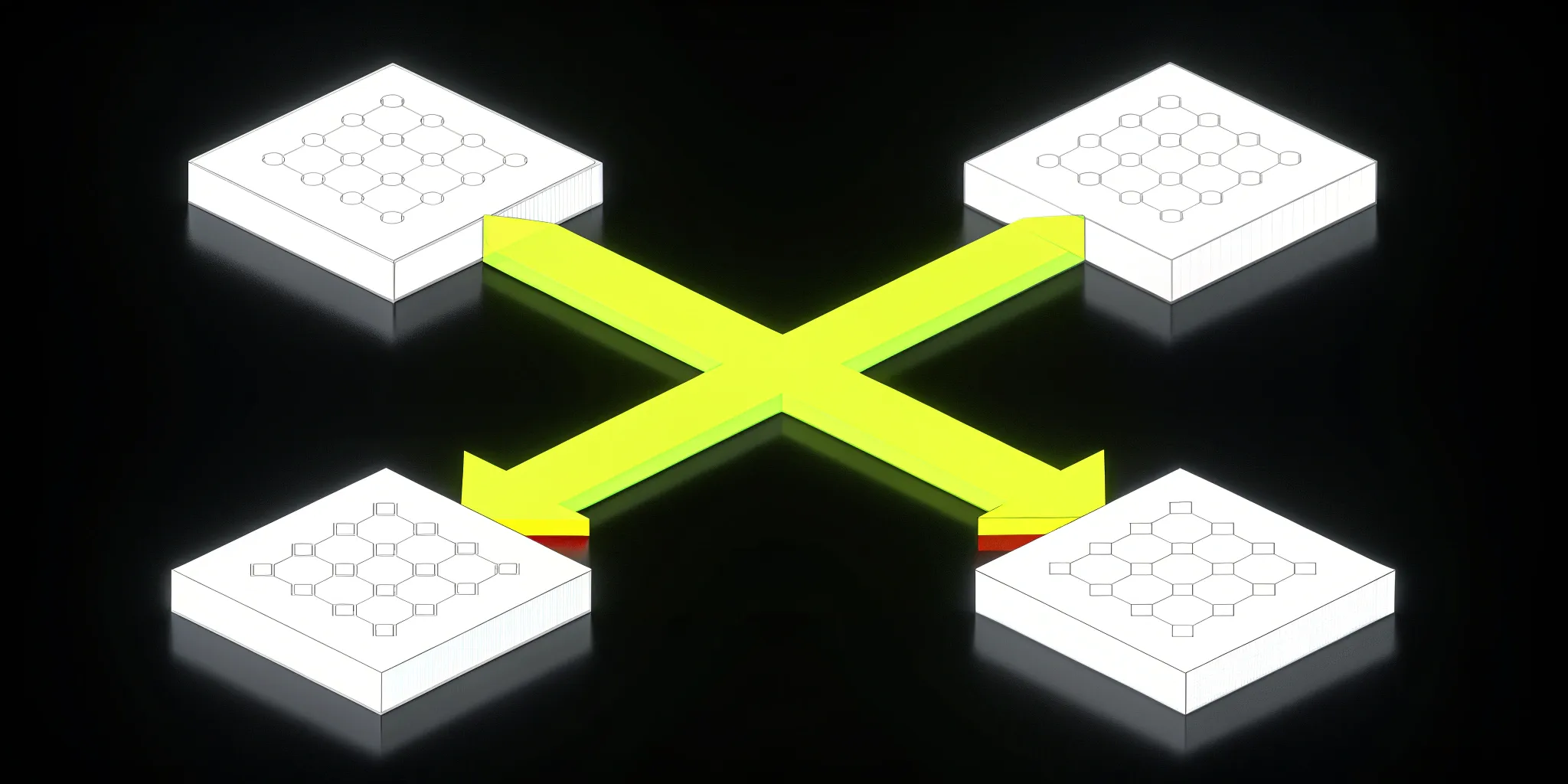

Building a powerful MLOps stack doesn't mean starting from scratch. The open-source community offers a rich ecosystem of tools designed to handle every stage of the ML lifecycle. The challenge isn't finding a tool; it's choosing the right one from a sea of options and making sure it works with everything else. Getting these different components to communicate effectively is often where projects slow down. This is why many teams turn to solutions like Cake that manage the entire stack, simplifying integration and accelerating deployment.

To help you find what you need, we’ve broken down some of the best open-source MLOps tools by their primary function. Think of this as a guide to the essential building blocks for a production-ready ML workflow. Whether you need to version your data, orchestrate complex pipelines, or monitor models in production, there’s a tool here for you. As you review these options, consider how each piece fits into your larger strategy and what it would take to integrate and maintain them.

Managing and versioning your data

Your ML models are only as good as the data they’re trained on, and managing that data is a critical first step. Data versioning allows you to track changes to your datasets just like you track changes to your code. This is essential for reproducibility and collaboration. If a model’s performance suddenly drops, you can easily trace it back to a specific data version.

One of the most popular tools in this space is DVC (Data Version Control). It integrates directly with Git to manage large files, datasets, and models without bogging down your code repository. DVC makes it simple to create reproducible pipelines and switch between different versions of your data, which is a lifesaver for any serious ML project.

DVC

If your team already lives and breathes Git, DVC is a natural fit. It extends the Git commands you already know to handle large datasets, models, and files without cluttering your code repository. Instead of storing massive files directly in Git, DVC saves small pointer files that link to your data in remote storage like S3 or Google Cloud. This keeps your repository lightweight and fast while ensuring every experiment is fully versioned and reproducible. It’s a straightforward approach that helps teams maintain a single source of truth for both their code and their data, making it much easier to track changes and collaborate effectively.
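
The pointer-file idea is easy to see in miniature. The sketch below is a simplified imitation, not DVC's actual file format or cache layout: it stores each version of a file in a content-addressed cache (named by its SHA-256 hash) and writes a tiny `.ptr` file that you would commit to Git. `track_file`, `checkout`, and the `.ptr` suffix are hypothetical names; DVC itself also handles syncing the cache with remote storage.

```python
import hashlib
import json
import shutil
from pathlib import Path


def track_file(data_path: Path, cache_dir: Path) -> Path:
    """Copy a data file into a content-addressed cache and write a small pointer file beside it."""
    digest = hashlib.sha256(data_path.read_bytes()).hexdigest()
    cache_dir.mkdir(parents=True, exist_ok=True)
    # The cached copy is named by its hash, so identical content is only stored once.
    shutil.copyfile(data_path, cache_dir / digest)
    pointer = data_path.with_name(data_path.name + ".ptr")
    pointer.write_text(json.dumps({"path": data_path.name, "sha256": digest}))
    return pointer  # this small file is what you would commit to Git


def checkout(pointer: Path, cache_dir: Path) -> None:
    """Restore the data file a pointer refers to from the cache."""
    meta = json.loads(pointer.read_text())
    shutil.copyfile(cache_dir / meta["sha256"], pointer.parent / meta["path"])
```

Checking out an older Git commit restores the old pointer; `checkout` then copies the matching bytes back out of the cache.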

Pachyderm

For teams that need robust, automated data pipelines, Pachyderm is a powerful choice. Built on Kubernetes, it excels at creating data-driven workflows where pipelines automatically trigger when new data is committed. This means you can build complex, multi-stage processing jobs that are both reproducible and efficient. Pachyderm versions your data in repositories, similar to Git, and provides a full lineage of how your data was transformed at every step. Its language-agnostic design allows you to use any tool or library you want within your pipelines, offering great flexibility for teams with diverse tech stacks.

LakeFS

LakeFS brings the power of Git-like version control directly to your data lake. It allows you to perform operations like branching, committing, and merging on your data, which is a game-changer for data quality and governance. You can create an isolated branch to experiment with new data transformations, test a new model, or ingest a new data source without putting your production data at risk. Once you’ve validated your changes, you can safely merge them back into the main branch. This makes it possible to manage massive datasets with the same level of control and confidence you have with your source code.
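
Branching a data lake can be cheap because a branch only needs its own view of which object version lives at each path, not a copy of the data. The toy class below models that idea in memory. It is an illustration, not lakeFS's API, and its naive merge ignores the conflicts and deletions a real system must handle.

```python
class ToyDataRepo:
    """Toy model of Git-style branches over a data lake (not lakeFS's actual API)."""

    def __init__(self):
        # Each branch maps an object path to the version of that object it currently sees.
        self.branches = {"main": {}}

    def create_branch(self, name, source="main"):
        # Branching copies only the path -> version mapping, not the stored objects.
        self.branches[name] = dict(self.branches[source])

    def write(self, branch, path, version):
        self.branches[branch][path] = version

    def read(self, branch, path):
        return self.branches[branch].get(path)

    def merge(self, source, target="main"):
        # Naive merge: source entries overwrite target entries; no conflict or delete handling.
        self.branches[target].update(self.branches[source])


repo = ToyDataRepo()
repo.write("main", "events/2024.parquet", "v1")
repo.create_branch("new-cleaning-job")
repo.write("new-cleaning-job", "events/2024.parquet", "v2")
print(repo.read("main", "events/2024.parquet"))  # v1 (production data untouched)
repo.merge("new-cleaning-job")
print(repo.read("main", "events/2024.parquet"))  # v2
```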

Developing and deploying your models

Once your data is in order, you need frameworks that streamline the journey from experiment to production. These tools help you track experiments, package models, and deploy them as scalable applications. MLflow is an excellent open-source platform that covers the entire lifecycle, from tracking experiment metrics to managing and deploying models.

For organizing your code into clean, reproducible data pipelines, Kedro is a fantastic choice. It helps standardize your project structure, making your work easier for others to understand and build upon. When you’re ready to serve your model, BentoML simplifies the process of packaging it into a production-ready artifact. These frameworks for model development help bridge the gap between research and real-world application.

Experiment tracking with MLflow, Comet ML, and Weights & Biases

When you're iterating quickly, it's easy to lose track of which combination of data, code, and parameters produced your best results. Experiment tracking tools solve this by creating a detailed log of every run. MLflow is a popular open-source platform that helps you manage the entire ML lifecycle, from logging experiments to packaging models for deployment. Other great options include Comet ML, which excels at comparing and visualizing model performance, and Weights & Biases, known for its user-friendly dashboard that simplifies tracking experiments and versioning models. These tools ensure your work is reproducible, collaborative, and easy to review.

Containerization with Docker and Seldon Core

The classic "it worked on my machine" problem can bring an ML project to a grinding halt. Containerization tools like Docker solve this by packaging your model, its dependencies, and all necessary configurations into a single, portable container. This ensures your model runs consistently across any environment, from a developer's laptop to a production server. Taking this a step further, Seldon Core is an open-source framework that helps you serve your containerized models at scale on Kubernetes. It turns your models into production-ready microservices and adds critical features like advanced metrics, request logging, and A/B testing, making it easier to deploy models efficiently.

Model serving with Hugging Face Inference Endpoints

Once your model is trained and containerized, you need a way to serve it so applications can send it data and get predictions back. Setting up and managing the infrastructure for this can be complex and costly. Hugging Face Inference Endpoints offers a streamlined solution by providing a managed service to deploy your models. It handles the underlying infrastructure, automatically scales to meet demand, and offers a cost-effective, pay-as-you-go pricing model. This allows your team to focus on building great models instead of worrying about server management, making it a powerful option for getting your models into production quickly.

Orchestrating your ML workflows

As your projects grow, you’ll need to manage increasingly complex, multi-step workflows. Workflow orchestration platforms automate and schedule these tasks, ensuring your pipelines run reliably and efficiently. For teams working with Kubernetes, Kubeflow is a powerful, go-to solution. It’s designed to make deploying, scaling, and managing ML workflows on Kubernetes straightforward.

Another strong contender is Argo Workflows, a Kubernetes-native workflow engine that uses YAML to define your pipelines. It’s highly flexible and comes with a user-friendly interface for visualizing your workflows. Both of these platforms act as the conductor for your ML orchestra, making sure every component performs its part at the right time.
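
Both Kubeflow Pipelines and Argo Workflows let you describe a pipeline as a directed acyclic graph (DAG) of steps. The core scheduling idea, running each step only after its upstream steps finish, can be sketched with Python's standard `graphlib` module (Python 3.9+). The step names and the toy "training" step are placeholders; a real orchestrator adds retries, parallelism, and distributed execution on top.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run each task after its upstream tasks; `dependencies` maps a task name to the names it needs."""
    order = list(TopologicalSorter(dependencies).static_order())
    results = {}
    for name in order:
        # Pass each upstream result to the task as a keyword argument named after that task.
        upstream = {dep: results[dep] for dep in dependencies.get(name, ())}
        results[name] = tasks[name](**upstream)
    return order, results


tasks = {
    "ingest": lambda: [1, 2, 3, 4],
    "validate": lambda ingest: [x for x in ingest if x > 1],
    "train": lambda validate: sum(validate) / len(validate),  # placeholder for real training
}
dependencies = {"validate": {"ingest"}, "train": {"validate"}}
order, results = run_pipeline(tasks, dependencies)
print(order)             # ['ingest', 'validate', 'train']
print(results["train"])  # 3.0
```

`TopologicalSorter` raises `graphlib.CycleError` if the dependencies contain a loop, the same guarantee a DAG-based orchestrator gives you before a run starts.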

Prefect

If you're looking for a tool that brings clarity to complex data projects, Prefect is worth a look. It’s an open-source tool designed to orchestrate and monitor workflows, especially end-to-end machine learning pipelines. It provides a flexible and powerful way to manage all the moving parts, allowing your team to focus on building and deploying models instead of getting bogged down by the intricacies of orchestration. With Prefect, you get a clear view of what’s running, what’s failed, and why, which is incredibly helpful for troubleshooting and maintaining reliability as your projects scale.

Metaflow

Metaflow was built from the ground up with data scientists in mind. It's a strong workflow management tool that lets them concentrate on building models without getting tangled up in the engineering details. It automatically tracks experiments and data, which simplifies managing the entire machine learning lifecycle from the first line of code to final deployment. By handling much of the infrastructure side of things, Metaflow empowers data scientists to develop and iterate on their models more quickly and independently, creating a smoother path from research to production.

Monitoring and maintaining model performance

Deploying a model is just the beginning. To ensure it continues to perform well over time, you need robust monitoring and maintenance tools. These solutions track everything from system health to model accuracy, alerting you to potential issues before they impact your users. Prometheus is an industry-standard toolkit for monitoring system metrics and triggering alerts, giving you a clear view of your infrastructure’s health.

For a closer look at your model’s behavior, Evidently is an excellent tool. It specializes in evaluating and monitoring model performance, helping you detect problems like data drift or concept drift. By using tools like these to keep a close eye on your models in production, you can maintain model quality and make data-driven decisions about when to retrain.
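
Drift detectors compare a feature's production distribution against a reference distribution. As an illustration of one such statistic, here is the population stability index (PSI) for a single numeric feature, written by hand rather than taken from any library. The binning scheme and the `1e-6` floor are assumptions of this sketch; a commonly cited rule of thumb treats PSI below 0.1 as little shift and above 0.25 as significant, but thresholds should be tuned to your data.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI of one numeric feature: reference sample (e.g. training data) vs. production sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference feature is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Bin edges come from the reference range; outliers are clamped into the end bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids division by zero and log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(100)]
print(population_stability_index(reference, reference))  # 0.0
```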

Fiddler

When you need to understand exactly how your models are behaving in production, Fiddler is a powerful tool for AI observability. Its standout feature is advanced model explainability, which helps you get to the 'why' behind every prediction—a critical step for building trust and transparency. Fiddler also excels at root cause analysis, allowing your team to pinpoint exactly which features are impacting model performance so you can fix issues proactively. Beyond diagnostics, Fiddler is built for collaboration. It provides a centralized dashboard where data scientists, ML engineers, and operations teams can all see the same performance data. This shared view breaks down communication silos and helps everyone make better, more informed decisions, leading to more robust and dependable AI applications.

Keeping your team in sync

The right tools can help your team stay aligned by providing a central place to manage code, data, and experiments. MLReef is built specifically for this purpose, offering a collaborative platform where teams can manage the entire ML lifecycle together. It includes repositories for data and scripts, along with experiment tracking.

Another great tool for creating reproducible pipelines in a team setting is ZenML. It helps you standardize your workflow so that experiments are easily tracked and compared. This ensures that everyone on the team is working from the same playbook, which reduces errors and makes it easier to build upon each other’s work.


Storing and serving features with feature stores

As your models become more complex, you'll find that different teams often need access to the same processed data, or "features." A feature store acts as a central hub to store, manage, and share these features across your organization. This prevents duplicate work and ensures consistency, so everyone is building models from the same reliable data. Think of it as a shared library for your model's most important ingredients, making collaboration smoother and model development faster. According to DataCamp, feature stores are a key component for managing data in ML pipelines and creating a single source of truth for your models.
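
A key job of a feature store is keeping training and serving consistent: when you build training data, each example should only see feature values that existed at that moment. This in-memory sketch shows the difference between a point-in-time ("as of") lookup for training and a latest-value lookup for serving. `ToyFeatureStore` and its methods are hypothetical; Feast and Featureform provide their own APIs and storage backends for this.

```python
from bisect import bisect_right


class ToyFeatureStore:
    """Hypothetical in-memory store of timestamped feature values per entity."""

    def __init__(self):
        self._rows = {}  # (entity_id, feature) -> list of (timestamp, value), sorted by time

    def write(self, entity_id, feature, timestamp, value):
        rows = self._rows.setdefault((entity_id, feature), [])
        rows.append((timestamp, value))
        rows.sort(key=lambda row: row[0])  # keep rows ordered by time

    def get_as_of(self, entity_id, feature, timestamp):
        """Latest value at or before `timestamp`, so training examples never see future data."""
        rows = self._rows.get((entity_id, feature), [])
        i = bisect_right([t for t, _ in rows], timestamp)
        return rows[i - 1][1] if i else None

    def get_latest(self, entity_id, feature):
        """The value an online store would serve at prediction time."""
        rows = self._rows.get((entity_id, feature), [])
        return rows[-1][1] if rows else None


store = ToyFeatureStore()
store.write("customer_42", "orders_last_7d", timestamp=1, value=3)
store.write("customer_42", "orders_last_7d", timestamp=5, value=8)
print(store.get_as_of("customer_42", "orders_last_7d", timestamp=3))  # 3
print(store.get_latest("customer_42", "orders_last_7d"))              # 8
```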

Feast

Feast is a popular open-source feature store that provides a central place to store and serve features for your ML models. It's designed to bridge the gap between data engineering and data science, creating a single source of truth for feature data. This makes it easier to reuse features across different projects and ensures that your models are trained and served with consistent data.

Featureform

Featureform offers a virtual feature store that helps data scientists define, manage, and serve their features more effectively. It’s built to improve teamwork by creating a standardized way to handle features, which enhances reliability and makes it easier to track where your data is coming from. This is especially useful for teams looking to bring more structure and governance to their ML workflows.

Testing and validating your models

A model that works well in the lab might fail spectacularly in the real world if it isn't properly tested. Model validation is a critical step to ensure your models are robust, fair, and perform as expected on new, unseen data. This involves a series of checks to evaluate everything from data quality to model behavior. Without this step, you risk deploying a model that makes inaccurate predictions or, worse, reinforces harmful biases. It's your quality control process for building trustworthy AI and maintaining user confidence in your applications.
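
In practice, validation is often packaged as a suite of checks that must all pass before a model can ship. The sketch below is a hypothetical, hand-rolled version of that pattern with three data checks and one accuracy threshold (0.8, an arbitrary example value); tools like Deepchecks provide far more extensive ready-made checks.

```python
def run_validation_suite(predict, rows, labels, min_accuracy=0.8):
    """Run simple pre-deployment checks and return a dict of check name -> passed."""
    checks = {
        "non_empty": len(rows) > 0,
        "no_missing_values": all(v is not None for row in rows for v in row),
        "labels_match_rows": len(rows) == len(labels),
    }
    if not all(checks.values()):
        # Skip the model check when the data itself is unusable.
        checks["accuracy_above_threshold"] = False
        return checks
    correct = sum(predict(row) == label for row, label in zip(rows, labels))
    checks["accuracy_above_threshold"] = correct / len(labels) >= min_accuracy
    return checks


def predict(row):
    # Hypothetical model: predicts 1 when the first feature exceeds 0.5.
    return int(row[0] > 0.5)


checks = run_validation_suite(predict, [[0.9], [0.1], [0.7], [0.2]], [1, 0, 1, 0])
print(all(checks.values()))  # True
```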

Deepchecks

Deepchecks is an open-source tool designed to check the quality of your data and models from start to finish. It helps you build custom checks and monitor your models in production, giving you a comprehensive view of your model's health. It’s a great way to catch potential issues early, before they become major problems for your users.

TruEra

For a deeper level of analysis, TruEra provides an advanced platform that improves model quality and performance. It uses automated testing to explain how your models work and helps you find the root causes of any performance issues. This is particularly valuable for teams that need to ensure their models are not only accurate but also fair and transparent.

Powering predictions with runtime engines

Once your model is trained and validated, you need a runtime engine to put it to work. These engines are the powerhouses that serve your model's predictions to users in real time. They need to be fast, scalable, and reliable to handle incoming requests without breaking a sweat. Choosing the right runtime engine is crucial for ensuring your application can handle its workload, whether you're serving a handful of users or millions. This is a core piece of the operational puzzle that keeps your AI running smoothly.

Ray

Ray is a flexible framework designed to scale AI and Python applications. It simplifies the process of taking your ML projects from a single machine to a large cluster, making it easier to manage and optimize performance. If you're building applications that need to handle a lot of parallel processing, Ray is an excellent choice for your stack.

Nuclio

Nuclio is a high-performance serverless framework built for tasks that use a lot of data and computing power. It works well with many common data science tools and can be deployed across different devices and clouds. Its focus on speed makes it ideal for real-time applications where low latency is a top priority.

Specialized tools for large language models (LLMs)

Working with large language models (LLMs) introduces a unique set of challenges, especially when it comes to managing the complex data they rely on. Traditional tools often aren't equipped to handle things like vector embeddings, which are numerical representations of text that LLMs use to understand meaning. As a result, a new category of specialized tools has emerged to help developers build, deploy, and manage applications powered by LLMs. These tools are essential for anyone serious about building production-grade LLM applications.

Qdrant

Qdrant is an open-source vector database designed to store, search, and manage vector embeddings. It's built for speed and accuracy, making it a great choice for applications like semantic search or recommendation engines that need to find the most relevant information quickly. It’s also cloud-native, so it fits well into modern, scalable architectures.
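
At its core, vector search means ranking stored embeddings by their similarity to a query embedding. The brute-force sketch below uses cosine similarity over made-up three-dimensional vectors; real embeddings have hundreds of dimensions, and Qdrant uses approximate nearest-neighbor indexes (such as HNSW) so it doesn't have to score every vector on each query.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query, index, k=2):
    """Brute-force search: score every stored vector against the query and keep the best k."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical toy embeddings for three support articles.
index = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.0],
    "return an item": [0.8, 0.2, 0.1],
}
print(top_k([1.0, 0.0, 0.0], index))  # ['refund policy', 'return an item']
```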

LangChain

LangChain is a powerful framework for building applications that use large language models. It provides a set of tools and components that simplify the process of creating, deploying, and monitoring these complex applications. If you're looking to build anything from a sophisticated chatbot to a complex reasoning engine, LangChain gives you the building blocks you need to get started.

Tools for automation and optimization

Building a great model often involves a lot of repetitive tasks and fine-tuning. Tools for automation and optimization are designed to handle this heavy lifting for you, freeing up your team to focus on more strategic work. These tools can automatically build and tune models, test different parameters, and help ensure your models are fair and easy to understand. As highlighted in the awesome-mlops GitHub repository, these tools are essential for creating efficient and responsible AI systems. Integrating them all can be a job in itself, which is why a managed platform like Cake, which handles the entire stack, can be a game-changer.

AutoML

Automated Machine Learning (AutoML) tools are designed to automate the end-to-end process of applying machine learning to real-world problems. They handle tasks like feature engineering, model selection, and hyperparameter tuning automatically, allowing you to build high-performing models with minimal manual effort. This can significantly speed up your development cycle and make ML more accessible to your entire team.

Hyperparameter tuning

Hyperparameter tuning is the process of finding the optimal settings for your model to achieve the best performance. Doing this manually can be incredibly time-consuming and tedious. Tools for hyperparameter tuning automate this process by systematically testing different combinations of settings and identifying the ones that produce the most accurate results, saving you hours of guesswork.
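
The simplest automated strategy is grid search: try every combination of candidate values and keep the best. In this sketch, `toy_objective` is a hypothetical stand-in for training a model and returning its validation score; dedicated tuning tools add smarter strategies such as random or Bayesian search and run trials in parallel.

```python
from itertools import product


def grid_search(train_and_score, grid):
    """Try every combination of values in `grid` and return (best_params, best_score)."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = train_and_score(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_objective(learning_rate, max_depth):
    # Stand-in for "train a model, return its validation score"; peaks at lr=0.1, depth=4.
    return -((learning_rate - 0.1) ** 2) - (max_depth - 4) ** 2


best, score = grid_search(toy_objective, {"learning_rate": [0.01, 0.1, 1.0], "max_depth": [2, 4, 8]})
print(best)  # {'learning_rate': 0.1, 'max_depth': 4}
```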

Model fairness and interpretability

As AI becomes more integrated into our lives, ensuring that models are fair and their decisions are understandable is more important than ever. Tools for model fairness and interpretability help you analyze your models for potential biases and explain how they arrive at their predictions. This is crucial for building trust with your users and meeting regulatory requirements for responsible AI.
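
One simple, model-agnostic interpretability technique is permutation importance: shuffle one feature's values and measure how much accuracy drops. The sketch below assumes a hypothetical toy model that only looks at its first feature, so shuffling the second feature should change nothing. Libraries offer richer methods (SHAP values, for example), and fairness still needs its own separate checks.

```python
import random


def accuracy(predict, rows, labels):
    return sum(predict(row) == label for row, label in zip(rows, labels)) / len(labels)


def permutation_importance(predict, rows, labels, feature_index, seed=0):
    """Accuracy drop after shuffling one feature column; a bigger drop means more reliance on it."""
    rng = random.Random(seed)  # seeded so the result is reproducible
    baseline = accuracy(predict, rows, labels)
    column = [row[feature_index] for row in rows]
    rng.shuffle(column)
    shuffled_rows = []
    for row, value in zip(rows, column):
        new_row = list(row)
        new_row[feature_index] = value
        shuffled_rows.append(new_row)
    return baseline - accuracy(predict, shuffled_rows, labels)


def predict(row):
    # Hypothetical model that only uses the first feature.
    return int(row[0] > 0.5)


rows = [[i / 20, (i * 7) % 5] for i in range(20)]
labels = [predict(row) for row in rows]
print(permutation_importance(predict, rows, labels, feature_index=1))  # 0.0
print(permutation_importance(predict, rows, labels, feature_index=0))
```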

How to pick the right MLOps tools for your project

Picking the right open-source MLOps tools can feel like a huge decision, and it is. But it’s less about finding a single "best" tool and more about finding the right combination of tools for your specific situation. The tools you choose will shape your entire ML workflow, from initial experiments to production monitoring. Getting this right from the start saves you from massive headaches and costly re-work down the line. Think of it as building a custom toolkit rather than buying a pre-packaged set.

Before you get lost in feature comparisons and product demos, take a step back. The best approach is to start with a clear understanding of your own environment. A tool that’s perfect for a massive enterprise might be total overkill for your startup, and a framework that works for a team of seasoned data scientists might be too complex for a team that’s just getting started. By focusing on four key areas: 1) your project’s specific needs, 2) your team’s current skills, 3) your plans for future growth, and 4) your existing tech stack, you can create a clear scorecard for making the right choice and build a stack that truly works for you.
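
To turn the four areas into an actual scorecard, you can weight each one by how much it matters to your team and rate each candidate tool against it. The weights, ratings, and tool names below are entirely hypothetical; the point is the arithmetic, a weighted average, which makes trade-offs explicit.

```python
def weighted_score(ratings, weights):
    """Weighted average of per-criterion ratings (1 = poor fit, 5 = great fit)."""
    total = sum(weights.values())
    return sum(ratings[criterion] * w for criterion, w in weights.items()) / total


# Hypothetical weights: how much each of the four areas matters to your team.
weights = {"project_needs": 4, "team_skills": 3, "future_growth": 2, "tech_stack_fit": 1}

# Hypothetical ratings for two candidate tools.
candidates = {
    "Tool A": {"project_needs": 5, "team_skills": 2, "future_growth": 4, "tech_stack_fit": 3},
    "Tool B": {"project_needs": 4, "team_skills": 5, "future_growth": 3, "tech_stack_fit": 4},
}
scores = {name: weighted_score(r, weights) for name, r in candidates.items()}
print(scores)                       # {'Tool A': 3.7, 'Tool B': 4.1}
print(max(scores, key=scores.get))  # Tool B
```

Here Tool A fits the project best on paper, but Tool B wins once team skills are weighted in, which is exactly the kind of trade-off the scorecard is meant to surface.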

Start by assessing your project's needs

First things first: what are you actually trying to build? Before you even look at a single tool, map out the specific requirements of your project. Are you running a few small-scale experiments or deploying a complex, real-time model that will serve thousands of users? Be honest about the scale and complexity you’re dealing with. Make a list of your must-haves. Do you need robust data versioning, automated model retraining, or advanced performance monitoring? Getting clear on these needs will give you a practical checklist to measure potential tools against, helping you cut through the noise and focus on what really matters for your project to succeed.

Consider your team's current skills

A powerful tool is useless if no one on your team knows how to use it. Open-source tools are incredibly flexible, but that flexibility often comes with a steeper learning curve and requires more hands-on expertise for setup and maintenance. Take a realistic look at your team’s skills. Do you have engineers who are comfortable diving into documentation, configuring systems, and troubleshooting integration issues? Or is your team stretched thin and in need of a solution that’s more straightforward out of the box? Choosing a tool that aligns with your team’s current capabilities will ensure they can adopt it quickly and use it effectively.

Think ahead and plan for growth

The model you’re building today might be just the beginning. When selecting your tools, think about where your project will be in one, three, or even five years. Will your data volume grow? Will you need to support more models in production? Choosing a tool that can scale with you is critical. Look for solutions that are flexible and won't lock you into a rigid system that can’t adapt to future demands. You want a toolset that supports your growth, not one that holds you back when it’s time to expand your AI initiatives. This foresight prevents you from having to rip and replace your entire stack down the road.

Make sure it plays well with your tech stack

Your MLOps tools don’t operate in a bubble. They need to integrate smoothly with the systems you already have in place, like your cloud provider, databases, and CI/CD pipelines. A tool might look great on its own, but if it doesn’t play well with your existing technology stack, you’re signing up for a world of integration pain. Before committing to a tool, verify its compatibility. Check for pre-built integrations, well-documented APIs, and an active community that can help with custom connections. A seamless integration is key to creating an efficient, automated workflow instead of a clunky, disconnected process.

Be aware of common tool pitfalls and community feedback

Before you commit, do some digging beyond the official feature list. Spend time reading through community forums, GitHub issues, and user reviews to get an unfiltered look at the tool. Are users frequently running into the same bugs? Are there complaints about poor documentation or a lack of support? These are red flags that can signal future integration headaches and a lot of wasted time. On the other hand, a vibrant community with active discussions and responsive maintainers is a great sign. Strong support and clear documentation are your safety net, ensuring you can troubleshoot issues and rely on the tool for the long haul.
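The criteria in this section can be combined into a simple scorecard. The sketch below shows one way to do it. It assumes you rate each candidate from 1 to 5 on each criterion and treat your must-have features as a hard filter. The weights, tool names, and feature labels are hypothetical placeholders, not recommendations.

```python
from dataclasses import dataclass, field

# Hypothetical weights (they sum to 1.0); tune them to your own priorities.
WEIGHTS = {
    "project_fit": 0.30,
    "team_skills": 0.25,
    "scalability": 0.20,
    "stack_integration": 0.15,
    "community_health": 0.10,
}


@dataclass
class Candidate:
    name: str
    scores: dict  # criterion -> rating from 1 (poor) to 5 (excellent)
    features: set = field(default_factory=set)


def weighted_score(candidate, weights=WEIGHTS):
    """Weighted average of a candidate's 1-5 ratings."""
    return sum(weight * candidate.scores[criterion] for criterion, weight in weights.items())


def rank(candidates, must_haves, weights=WEIGHTS):
    """Drop tools that lack any must-have feature, then sort best-first."""
    eligible = [c for c in candidates if must_haves <= c.features]
    return sorted(eligible, key=lambda c: weighted_score(c, weights), reverse=True)


def uniform(rating):
    """Example helper: the same rating on every criterion."""
    return {criterion: rating for criterion in WEIGHTS}


candidates = [
    Candidate("tool-a", uniform(4), {"data_versioning", "monitoring"}),
    Candidate("tool-b", uniform(5), {"data_versioning"}),
    Candidate("tool-c", uniform(3), {"data_versioning", "monitoring", "retraining"}),
]
must_haves = {"data_versioning", "monitoring"}

for c in rank(candidates, must_haves):
    print(f"{c.name}: {weighted_score(c):.2f}")
```

In this example, tool-b has the highest ratings but is filtered out because it lacks a must-have. That is the reason to keep hard requirements separate from weighted preferences.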

How to get your new MLOps tools up and running

Choosing the right open-source tools is a great first step, but the real magic happens during implementation. Bringing new tools into your workflow can feel like a huge undertaking, but with a thoughtful approach, you can set your team up for success without derailing your current projects. The key is to focus on people and processes just as much as you focus on the technology itself. Let’s walk through a few practical steps to make your MLOps adoption smooth and effective.

Getting past the initial learning curve

Let's be honest: adopting MLOps tools involves more than just installing software. It requires a shift in how your teams work together. Much like the move to DevOps, a successful MLOps strategy is built on a new mindset that values collaboration, automation, and continuous improvement. Encourage your data scientists, ML engineers, and IT operations teams to communicate early and often. Provide training resources and create space for experimentation. The goal is to build a shared understanding of the entire ML lifecycle, breaking down silos so everyone is working toward the same objective: delivering reliable models, faster.

Handling common integration issues

One of the trickiest parts of building an open-source MLOps stack is getting all the different tools to play nicely together. You might select a best-in-class tool for data versioning and another for model monitoring, but they don’t always connect seamlessly out of the box. These integration gaps can lead to complex deployment pipelines and data management headaches. Before you commit to a set of tools, map out how they will connect to each other and to your existing systems. Proactively addressing integration helps you avoid bottlenecks and build a cohesive, functional workflow from the start.

Keeping your data clean and reliable

Your MLOps pipeline is only as reliable as the data flowing through it. Poor data quality is one of the quickest ways to derail an ML project, leading to inaccurate models and untrustworthy results. It's a challenge that's part technical and part communication. Establish clear processes for data validation, cleaning, and versioning early on. Automate data quality checks at every stage of your pipeline to catch issues before they impact your models. This ensures that your team can trust the data they're using and that your models are built on a solid foundation.
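The sketch below is a rough illustration of an automated quality check. It validates rows against a small schema (a type plus an optional range) and fails the pipeline step when too many rows are bad. The schema format, column names, and threshold are assumptions for the example. Dedicated libraries such as Great Expectations or pandera cover far more cases.

```python
def check_row(row, schema):
    """Return the problems found in one row (an empty list means the row is clean).

    `schema` maps column -> (expected_type, min_value, max_value); None skips a bound.
    """
    problems = []
    for column, (expected_type, low, high) in schema.items():
        value = row.get(column)
        if value is None:
            problems.append(f"missing '{column}'")
        elif not isinstance(value, expected_type):
            problems.append(f"'{column}' is not {expected_type.__name__}")
        elif (low is not None and value < low) or (high is not None and value > high):
            problems.append(f"'{column}'={value!r} out of range")
    return problems


def quality_gate(rows, schema, max_bad_fraction=0.0):
    """Raise ValueError if too many rows fail; otherwise return {row_index: problems}."""
    report = {}
    for index, row in enumerate(rows):
        problems = check_row(row, schema)
        if problems:
            report[index] = problems
    bad_fraction = len(report) / len(rows) if rows else 0.0
    if bad_fraction > max_bad_fraction:
        raise ValueError(f"{len(report)} of {len(rows)} rows failed validation: {report}")
    return report


# Hypothetical schema and sample batch.
SCHEMA = {"age": (int, 0, 120), "income": (float, 0.0, None)}
batch = [
    {"age": 34, "income": 52000.0},
    {"age": -1, "income": 48000.0},  # out of range
    {"age": 29},                     # missing income
]
```

In practice, "every stage" means running a check like this at ingestion, after feature engineering, and before training. The `max_bad_fraction` parameter lets you decide whether a single bad row should stop the pipeline.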

Staying secure and compliant

When you're managing your own MLOps stack, security is your responsibility. Protecting sensitive data and ensuring your models are safe from unauthorized access is critical, especially if you operate in a regulated industry. Don't treat security as an afterthought. Instead, build it directly into your MLOps workflow. This means implementing access controls for your data and models, regularly scanning your tools for vulnerabilities, and ensuring your deployment processes meet compliance standards. A proactive security posture protects your assets and builds trust with your users.
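In practice, access controls usually come from your cloud provider's IAM or your identity provider. The underlying idea is simple: map roles to permitted actions and deny anything not explicitly granted. The roles and actions below are hypothetical examples in a minimal role-based access control sketch.

```python
from enum import Enum, auto


class Action(Enum):
    READ_DATA = auto()
    TRAIN_MODEL = auto()
    DEPLOY_MODEL = auto()
    DELETE_MODEL = auto()


# Hypothetical role-to-permission mapping that grants each role only what it needs.
ROLE_PERMISSIONS = {
    "data_scientist": {Action.READ_DATA, Action.TRAIN_MODEL},
    "ml_engineer": {Action.READ_DATA, Action.TRAIN_MODEL, Action.DEPLOY_MODEL},
    "admin": set(Action),
}


def is_allowed(roles, action):
    """True if any of the user's roles grants `action`; unknown roles grant nothing."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)


def require(roles, action):
    """Raise PermissionError unless the action is allowed (deny by default)."""
    if not is_allowed(roles, action):
        raise PermissionError(f"roles {sorted(roles)} may not perform {action.name}")


require({"ml_engineer"}, Action.DEPLOY_MODEL)  # allowed, so no error is raised
```

Note the default behavior: a role that isn't in the mapping gets no permissions, so configuration mistakes fail closed rather than open.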

Try a phased rollout to start

It can be tempting to overhaul your entire workflow at once, but a "big bang" approach often leads to frustration and resistance. A much smoother path is a phased rollout. Begin by implementing your new MLOps tools on a single, low-risk project. This creates a pilot program where your team can learn the new processes, work out any kinks, and score an early win. Success builds momentum and makes it easier to get buy-in for wider adoption. This gradual approach allows your team to adapt and grow their skills without feeling overwhelmed by the change.

Open source vs. commercial MLOps tools: which is right for you?

Deciding between open-source and commercial MLOps tools is one of those big, strategic choices every AI team faces. It’s not just about the price tag. On one hand, you have the incredible flexibility of open-source software. On the other, the plug-and-play convenience of a commercial platform. The right answer really depends on your team’s budget, skills, and what you’re trying to build long-term.

With open-source, you get to be the architect, building a custom stack that fits your workflow perfectly. This is powerful, but it also means your team is responsible for integrating, maintaining, and fixing everything. Commercial tools offer a more all-in-one experience, where everything is designed to work together smoothly, usually with a support team on standby. The trade-off is often less customization and the potential for vendor lock-in. To make the best call, you need to look at the whole picture, from the true costs to your plans for the future.

Look beyond the price tag at the true costs

It’s easy to look at open-source tools and think "free is for me." But the sticker price doesn't tell the whole story. To get a real sense of the investment, you have to consider the total cost of ownership. While you won't pay for a license, you'll need to account for several potential hidden costs, like hosting the tools on your own servers and the engineering hours it takes to set everything up and keep it running.

If something breaks, the downtime can have its own business costs, too. Commercial tools wrap these expenses into a clear subscription fee, which can make budgeting much more predictable and let your engineers focus on building models instead of managing infrastructure.
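A quick way to compare options is to add up the cost categories above for one year. The sketch below does that arithmetic. Every figure in the example is a placeholder chosen to show the calculation, not an estimate of what any tool or platform actually costs.

```python
def annual_tco(license_fees=0.0, hosting=0.0, engineer_hours=0.0,
               hourly_rate=0.0, downtime_hours=0.0, downtime_cost_per_hour=0.0):
    """Rough yearly total cost of ownership, in a single currency."""
    labor = engineer_hours * hourly_rate
    downtime = downtime_hours * downtime_cost_per_hour
    return license_fees + hosting + labor + downtime


# Placeholder inputs only; substitute your own numbers.
self_hosted = annual_tco(hosting=24_000, engineer_hours=800, hourly_rate=100,
                         downtime_hours=10, downtime_cost_per_hour=2_000)
managed = annual_tco(license_fees=60_000, engineer_hours=150, hourly_rate=100,
                     downtime_hours=2, downtime_cost_per_hour=2_000)
print(f"self-hosted: {self_hosted:,.0f}  managed: {managed:,.0f}")
```

With different inputs the comparison can easily flip, so it's worth running with your own numbers. Engineering hours are often the hardest figure to estimate honestly.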

What kind of support will you need?

Imagine one of your key models goes down in the middle of the night. Who do you call? With a commercial platform, you likely have a support contract and a team you can reach out to for help. With open-source, your support system is the community forums and your own team’s problem-solving skills.

You're also on the hook for all the ongoing maintenance, from security patches to updates. Some open-source MLOps tools even have proprietary parts, which can make maintenance tricky. You have to be realistic about whether your team has the time and expertise to handle that responsibility.

How much customization do you really need?

The biggest advantage of open-source is the freedom to build your MLOps stack exactly how you want it. If you have a very specific workflow, you can assemble a custom solution by picking and choosing the best tools for each job. This gives you ultimate control, but it also requires deep technical know-how to get all the pieces working together smoothly.

Commercial tools offer a more streamlined experience. They’re built to handle common use cases right away, letting you get started faster, even if it means giving up some of that custom-fit feeling. It’s a classic trade-off between total flexibility and immediate usability.

Consider the long-term picture

The MLOps stack you choose today needs to support you as your projects and team grow. An open-source approach means committing to managing and maintaining that stack in-house for the long haul. You need to ask if your team has the technical expertise for setup and maintenance, not just now, but as your models become more complex and your operations scale.

A commercial solution can offer a more predictable path for growth, as the vendor is responsible for updates and scaling the platform. However, you also risk getting locked into one vendor's ecosystem. Think about your long-term vision and pick the option that sets you up for sustainable success.

Major cloud platforms: AWS SageMaker, Azure ML, and Google Vertex AI

The major cloud providers offer a compelling alternative to building your own stack from scratch. These platforms bundle many of the MLOps capabilities we've discussed into a single, integrated environment. For example, AWS SageMaker is a popular, complete solution that covers everything from training and experiment tracking to deploying and monitoring models. Similarly, Microsoft's Azure ML provides a comprehensive toolkit for the entire ML lifecycle, while Google's Vertex AI offers a unified platform that's particularly strong for projects involving large language models. The core benefit of these platforms is that they are managed services. They handle the complex infrastructure, which means your team can spend less time wrestling with tools and more time focused on building valuable AI applications.

How to handle common open source MLOps challenges

Choosing the right open-source tools is a great first step, but making them work together effectively is where the real challenge begins. From getting your team on board to keeping your data secure, navigating the open-source landscape requires a thoughtful strategy. The good news is that these hurdles are well known, and with the right approach, you can clear them. Let's walk through some of the most common challenges and how you can solve them.

Getting your team on board faster

A new tool stack is only effective if your team actually uses it. Getting everyone on board requires more than just installing software; it demands a cultural shift. Much like the move to DevOps, successful MLOps is built on a new mindset that values collaboration and continuous improvement.

Start by hosting workshops to explain the "why" behind the new tools and processes. When your data scientists, engineers, and operations specialists understand the shared goals, they're more likely to embrace new workflows. Encourage open communication channels and create opportunities for cross-functional pairing. The goal is to break down silos and build a unified team that sees the MLOps platform as a shared asset for creating better models, faster.

Tips for a smoother integration process

Stitching together various open-source tools can sometimes feel like building Frankenstein's monster: powerful, but clunky and hard to manage. Common MLOps challenges, such as data management issues and complex deployments, often stem from poor integration. A structured approach is your best defense.

Before you even think about specific tools, map out your entire ML lifecycle, from data ingestion to model monitoring. This blueprint will help you identify the critical connection points. When evaluating tools, prioritize those with robust APIs and well-documented integration capabilities. Start by connecting just two or three essential tools, test them thoroughly, and then gradually build out your stack. This methodical process prevents you from getting overwhelmed and ensures each component works seamlessly with the others.
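One lightweight way to create that blueprint is to write the lifecycle down as a dependency graph, where each stage lists the stages whose output it consumes. Every edge is then an integration point to test. A topological sort gives a sensible order for standing tools up one connection at a time. The stage names are hypothetical, and the sketch uses Python's standard-library `graphlib` module (Python 3.9+).

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical lifecycle: stage -> stages whose output it consumes.
LIFECYCLE = {
    "ingestion": set(),
    "validation": {"ingestion"},
    "versioning": {"validation"},
    "training": {"versioning"},
    "tracking": {"training"},
    "registry": {"training", "tracking"},
    "deployment": {"registry"},
    "monitoring": {"deployment"},
}


def handoffs(graph):
    """Every (upstream, downstream) pair is a connection point to test."""
    return sorted((up, down) for down, ups in graph.items() for up in ups)


def build_order(graph):
    """Stages in dependency order; a circular dependency raises ValueError."""
    try:
        return list(TopologicalSorter(graph).static_order())
    except CycleError as err:
        # CycleError stores the detected cycle as its second argument.
        raise ValueError(f"lifecycle map has a cycle: {err.args[1]}") from err


if __name__ == "__main__":
    print(" -> ".join(build_order(LIFECYCLE)))
    for up, down in handoffs(LIFECYCLE):
        print(f"test integration: {up} -> {down}")
```

In a real stack, monitoring often feeds back into training through retraining. Modeling that feedback as a separate trigger rather than as an edge keeps the graph acyclic, and the cycle check catches any accidental loops.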

Ensuring your MLOps tools can scale

The tools that work perfectly for a small pilot project can easily buckle under the pressure of production-level data. As your models become more complex and your user base grows, your MLOps stack needs to grow with you. Scalability isn't an afterthought; it's a foundational requirement you should plan for from day one.

When choosing tools, look for features designed for growth, like support for distributed computing. Dig into the documentation and community forums to see how others are using the tool at scale. The most important step is to stress-test your setup. Run load tests that simulate higher data volumes and user traffic so you find bottlenecks before they affect real-world performance. This proactive approach ensures your infrastructure is ready for success.
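For a first stress test, you can call a prediction function at increasing concurrency and watch how latency changes. This is a minimal sketch. `fake_predict` is a hypothetical stand-in for your real model call, and threads suit I/O-bound calls such as HTTP requests. Purpose-built tools such as Locust or k6 are a better fit for testing a deployed endpoint.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def fake_predict(features):
    """Hypothetical stand-in for a real model call; sleeps ~1 ms to mimic work."""
    time.sleep(0.001)
    return sum(features) > 0


def timed_call(fn, payload):
    """Wall-clock seconds for one call (excludes time spent waiting for a free thread)."""
    start = time.perf_counter()
    fn(payload)
    return time.perf_counter() - start


def load_test(fn, payload, concurrency, requests):
    """Send `requests` calls using `concurrency` threads; return latency stats in ms."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda _: timed_call(fn, payload), range(requests)))
    ms = sorted(seconds * 1000 for seconds in latencies)
    return {
        "p50_ms": statistics.median(ms),
        "p95_ms": statistics.quantiles(ms, n=20)[-1],  # needs at least 2 samples
        "max_ms": ms[-1],
    }


if __name__ == "__main__":
    for workers in (1, 4, 16):
        stats = load_test(fake_predict, [0.1, 0.2], concurrency=workers, requests=200)
        print(workers, {k: round(v, 2) for k, v in stats.items()})
```

With a real endpoint, rising p95 latency as concurrency grows usually points to queuing or resource contention on the serving side. That is the kind of bottleneck you want to find before launch.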

A practical guide to security and compliance

When you use open-source software, security is a shared responsibility. You can't assume a tool is secure just because it's popular. Security concerns are a major hurdle in MLOps, and protecting sensitive data and intellectual property has to be a top priority.

Start by implementing strict access controls to ensure only authorized team members can interact with your models and datasets. Use automated scanning tools to regularly check your open-source dependencies for known vulnerabilities, and patch them quickly. For compliance, build automated checks into your CI/CD pipeline that flag workflows which may not meet the requirements of regulations such as GDPR or HIPAA. By embedding security and compliance into your daily operations, you can protect your assets without slowing down innovation.
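The sketch below shows what a CI gate combining both ideas might look like. It fails the build on high-severity dependency findings and on model metadata that leaves out required policy fields. Both JSON formats, the field names, and the severity policy are hypothetical. Real scanners such as pip-audit or Trivy have their own output formats, so you would adapt the parsing to whichever one you use. Passing a check like this supports compliance work but does not establish compliance on its own.

```python
import json
import sys

# Hypothetical policy: severities that block a merge, and metadata every model must declare.
BLOCKING_SEVERITIES = {"critical", "high"}
REQUIRED_MODEL_FIELDS = {"owner", "uses_personal_data", "approved_by"}


def blocking_vulns(findings):
    """Scanner findings whose severity should fail the build."""
    return [f for f in findings if f.get("severity", "").lower() in BLOCKING_SEVERITIES]


def missing_fields(model_card):
    """Required metadata keys that the model card does not declare."""
    return sorted(REQUIRED_MODEL_FIELDS - set(model_card))


def gate(findings, model_card):
    """Return failure messages; an empty list means the gate passes."""
    failures = [f"{v['package']} {v['version']}: {v['severity']} vulnerability {v['id']}"
                for v in blocking_vulns(findings)]
    failures += [f"model card missing '{name}'" for name in missing_fields(model_card)]
    return failures


def main(findings_path, card_path):
    """CI entry point: print failures and return a process exit code."""
    with open(findings_path, encoding="utf-8") as fh:
        findings = json.load(fh)
    with open(card_path, encoding="utf-8") as fh:
        card = json.load(fh)
    failures = gate(findings, card)
    for message in failures:
        print(message, file=sys.stderr)
    return 1 if failures else 0


if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(main(sys.argv[1], sys.argv[2]))
```

Because `main` returns a non-zero exit code on any failure, most CI systems will mark the job as failed without extra configuration.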

What's next for open-source MLOps?

The world of MLOps is always moving forward, pushed by new tech and a growing need for ML solutions that are more efficient, scalable, and easy to use. If you want to stay current, you have to keep an eye on the trends shaping the future of the field. For teams building their own stacks, this means getting ready for more powerful tools and workflows that are better connected. The main goal is to make the ML lifecycle, from preparing data to monitoring models, run more smoothly and with more automation. As open-source tools get more advanced, they bring amazing opportunities, but also new challenges. Knowing where the industry is headed can help you build a stack that not only works well today but is also prepared for what’s coming tomorrow.

Keep an eye on these emerging trends

The MLOps landscape is shifting toward greater integration and accessibility. We're seeing a significant move toward using Kubernetes to manage complex ML workflows, as its container orchestration capabilities are ideal for scaling demanding AI workloads. At the same time, the rise of more user-friendly tools is making ML more democratic, allowing more people on your team to contribute without needing deep technical expertise. Another key trend is the convergence of DataOps and MLOps, which focuses on streamlining data management to ensure your models are always fed high-quality, reliable data. This integration is fundamental for building robust and dependable ML systems from the ground up.

How AI is changing the MLOps game

It might sound a little meta, but AI is set to become one of the biggest drivers of improvement within MLOps itself. AI will be key to automating various aspects of the ML lifecycle, from training and deployment to monitoring and retraining. Just imagine models that can learn and adapt in real time with very little human input. AI-powered tools will also offer much smarter model management, giving you deeper insights into performance and making it easier to know when a model needs an update. This improved automation and intelligence will also help teams work together better, giving data scientists, engineers, and business leaders a clearer, more unified view of the entire process.
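Today, the usual building block for knowing when a model needs an update is a statistical drift check, whether a person or an automated system acts on the result. The sketch below computes the Population Stability Index (PSI) between a reference sample and live data for one feature, and it flags retraining above a threshold. The bin edges, the sample data, and the 0.25 threshold are assumptions. PSI cut-offs of about 0.1 and 0.25 are widely used rules of thumb, not standards.

```python
import bisect
import math


def bin_fractions(values, edges, eps=1e-6):
    """Share of `values` in each bin defined by sorted `edges`.

    Values below the first edge or above the last are counted in the end bins;
    `eps` stops empty bins from producing log(0) in the PSI formula.
    """
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), n_bins - 1)] += 1
    return [max(count / len(values), eps) for count in counts]


def psi(reference, live, edges):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    expected = bin_fractions(reference, edges)
    actual = bin_fractions(live, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def needs_retraining(reference, live, edges, threshold=0.25):
    """Flag a feature whose live distribution has drifted past `threshold`."""
    return psi(reference, live, edges) > threshold


# Hypothetical feature values: training-time reference vs. a shifted live sample.
reference = [0.1 * i for i in range(100)]  # roughly uniform on [0, 10)
live = [x + 4 for x in reference]          # same shape, shifted upward
edges = [0, 2, 4, 6, 8, 10]
print(round(psi(reference, live, edges), 2), needs_retraining(reference, live, edges))
```

Running a check like this per feature on a schedule, and alerting or retraining when it trips, is the manual version of the automation this section anticipates.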

Building your MLOps expertise

Diving into the world of MLOps can feel like a big undertaking, but it’s one of the most valuable skills you can develop in the AI space. The key is to approach it as a continuous learning process rather than a mountain you have to climb all at once. You don’t need to master every tool overnight. Instead, focus on understanding the core principles that turn a standalone model into a reliable, production-ready system. For teams just starting out, leveraging a managed platform like Cake can be a smart move. It allows you to focus on MLOps concepts and workflows without getting bogged down by the complexities of setting up and maintaining the underlying infrastructure yourself.

A recommended learning path for getting started

The best way to get started with MLOps is by building a solid foundation. Before you jump into specific tools, make sure you have a good grasp of machine learning fundamentals and core DevOps principles. MLOps is essentially the application of those DevOps practices to the ML lifecycle, designed to deploy and maintain models reliably. Once you have the basics down, focus on key MLOps concepts like experiment tracking, data and model versioning, and building automated CI/CD pipelines for ML. The final and most important step is hands-on practice. Pick a small project and try to apply what you’ve learned. This practical experience is where the concepts truly click, helping you understand how to choose the right tools for your specific needs.
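If you want to see how experiment tracking and data versioning fit together before adopting a dedicated tool like MLflow or DVC, here is a small standard-library sketch. It derives a dataset version from a hash of the file's contents. It then appends each run's parameters and metrics, tagged with that version, to a JSON Lines log. The function names and log format are illustrative, not any tool's API.

```python
import hashlib
import json
import time


def dataset_version(path, chunk_size=1 << 20):
    """Short content hash of a file: identical bytes always give the same ID."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()[:12]


def log_run(log_path, params, metrics, data_path):
    """Append one experiment record, tied to the exact data version, as a JSON line."""
    record = {
        "timestamp": time.time(),
        "data_version": dataset_version(data_path),
        "params": params,
        "metrics": metrics,
    }
    with open(log_path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(record) + "\n")
    return record


def load_runs(log_path):
    """Read back every logged run, oldest first."""
    with open(log_path, encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]
```

The version is derived from the file's bytes, so any edit to the data produces a new ID. That lets you trace every logged metric back to the exact dataset that produced it, which makes a good small first project.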

Helpful community resources and where to find them

You don’t have to learn MLOps in a vacuum. The open-source community is incredibly active and supportive, offering a wealth of resources for learners at every level. A great place to start is with curated learning paths, like the ones you can find on GitHub, which gather articles, tutorials, and project ideas into a structured format. Websites like MadeWithML also offer excellent hands-on tutorials for beginners. When you run into specific problems, online communities on platforms like Reddit (check out the r/mlops subreddit) are fantastic for asking questions and learning from the experiences of others. Engaging with these community-contributed resources is one of the best ways to stay current and get practical advice as you build your skills.

How Cake makes MLOps feel simple

While building your stack with open-source tools gives you incredible flexibility, it can also feel like you’re trying to assemble a puzzle without the box. You have to make sure every piece fits, works with the others, and is secure, all while trying to actually build and deploy your models. This is where a managed approach can be a game-changer. Instead of spending your time on infrastructure management, you can focus on what you do best: creating value with AI.

Our all-in-one approach to MLOps

Cake simplifies the ML lifecycle by bringing everything you need into one managed environment. Think of it as a pre-built foundation for your AI projects. It integrates essential open-source tools for experiment tracking, model management, and data preparation, so you don't have to build it all from scratch. This all-in-one approach provides a cohesive platform that supports your workflow from the first line of code to full-scale deployment. By handling the infrastructure and tool integration, Cake lets your team move faster and build more efficiently, turning complex MLOps processes into a streamlined, manageable path to production.

Why managing your MLOps tools with Cake just makes sense

The biggest benefit of using a unified AI development platform is how it streamlines your team’s workflow. When everyone is working with the same integrated toolset, collaboration between data scientists and engineers becomes much smoother. There’s less friction, fewer handoffs, and a single source of truth for every stage of the project. This integrated environment reduces the complexity of managing a dozen different tools, which saves a ton of time and helps maintain consistency. Ultimately, this means you can get your MLOps blueprint right from the start, leading to faster, more reliable deployments and a stronger foundation for your AI initiatives.

Frequently asked questions

Why do I need MLOps if I can already build a working model?

Building a model that performs well in a controlled lab environment is a fantastic achievement, but it's very different from running that model in the real world. MLOps provides the operational framework to turn your model into a reliable, living product. It handles the entire lifecycle, ensuring your model can be deployed smoothly, monitored for performance drops, and retrained with new data, all while consistently delivering value to your users.

Building a custom stack with open-source tools sounds like a lot of work. Is it worth it?

It certainly can be, but it's a significant commitment. The main benefit of a custom open-source stack is the complete control and flexibility to tailor every component to your exact needs. However, this freedom comes with the responsibility of integrating, maintaining, and securing all those individual tools yourself. This requires a lot of engineering time and expertise, which can distract your team from their main goal of building great AI applications.

What is the most common mistake teams make when first implementing MLOps?

A frequent misstep is focusing too much on the tools and not enough on the people and processes. MLOps isn't just about installing new software; it's about changing how your teams work together. If your data scientists, engineers, and operations teams aren't communicating and collaborating effectively, even the best tools will fail to deliver. Success depends on building a shared culture of ownership over the entire ML lifecycle.

How do all these different open-source tools actually connect and work together?

This is one of the biggest challenges of the do-it-yourself approach. Getting separate tools for data versioning, workflow orchestration, and model monitoring to communicate seamlessly often requires a great deal of custom engineering work. Teams have to build and maintain the "glue" that holds the stack together, which can be complex and brittle. This integration friction is a primary reason why many AI projects slow down or stall before reaching production.

How is a managed platform like Cake different from just using the open-source tools myself?

Think of it as the difference between building a car from individual parts versus getting a fully assembled, high-performance vehicle. While you could source all the open-source components yourself, Cake provides a managed platform where these essential tools are already integrated, secured, and optimized to work together. We handle the underlying infrastructure and maintenance, which frees your team from that burden and allows them to focus entirely on creating and deploying models faster.

About Author

Cake Team
