Cake for Voice Agents

Cake’s AI Voice Agent solution helps you rapidly build, deploy, and scale high-performance voice bots without vendor lock-in, ballooning costs, or black-box limitations.

Overview

Voice interfaces are back, but this time, they’re powered by LLMs. Whether it’s customer support, sales, workflow automation, or helpdesk triage, voice agents offer high efficiency and intuitive UX. The challenge is delivering low-latency performance while orchestrating models, tools, and APIs across a real-time stack. This orchestration requires tightly integrated components across speech, inference, memory, and action.

Cake provides a composable voice agent stack with everything you need: low-latency model serving (via vLLM), real-time ASR/TTS, agent orchestration with LangGraph or Pipecat, and full integration with CRMs, databases, and telephony providers. Stream responses with millisecond latency, retrieve real-time data, and act on it all with observability and compliance built in.

With Cake, your voice agents don’t just talk; they act, retrieve, and scale across your enterprise systems.

Key benefits

- Pre-integrated components: Start with a ready-to-go stack including orchestration, LLMs, telephony, speech-to-text, and observability, so you can focus on building, not plumbing.

- Rapid deployment and scaling: Deploy voice agents into your own VPC with autoscaling, policy controls, and built-in security. No need to build and maintain custom cloud infrastructure.

- Real-time monitoring and rapid iteration: Track performance, identify bottlenecks, and optimize conversational flows with Cake-managed open-source observability tools. No black boxes.

- Built-in AI/ML optimization: Go beyond simple automation by layering in Retrieval-Augmented Generation (RAG), custom models, and analytics, all managed through Cake.

RAPID PROTOTYPING


Build and iterate with speed and full control

- Plug-and-play support for top-tier STT, TTS, and real-time transport providers like Deepgram, ElevenLabs, Cartesia, and Daily.co.

- Flexible orchestration with the Cake Voice Builder for rapid voice agent assembly.

- Version-controlled prompts and configs with Langfuse for traceable iteration.

- Dynamic model switching using the LiteLLM proxy to toggle between OpenAI, Gemini, Anthropic, and self-hosted models.

- Built-in observability with OpenTelemetry (OTEL)-compatible traces, time-to-first-byte (TTFB) spans, A/B testing via ClickHouse, and Grafana dashboards.
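A TTFB span captures how long a caller waits before the first chunk of a streamed response arrives, which is the delay they actually hear. The sketch below illustrates the measurement with the Python standard library only. It assumes a streaming response can be consumed as an iterator of text chunks; `fake_llm_stream` and `measure_stream` are hypothetical names, and in a real deployment the timing would be attached to an OpenTelemetry span rather than returned in a dict.

```python
import time
from typing import Iterable, Iterator


def fake_llm_stream(tokens: list[str], delay_s: float = 0.01) -> Iterator[str]:
    """Hypothetical stand-in for a streaming LLM or TTS response."""
    for token in tokens:
        time.sleep(delay_s)  # simulate per-chunk inference/network delay
        yield token


def measure_stream(stream: Iterable[str]) -> dict:
    """Consume a stream, recording time-to-first-byte (TTFB) and total duration."""
    start = time.perf_counter()
    ttfb = None
    chunks = []
    for chunk in stream:
        if ttfb is None:
            # First chunk arrived: this is the latency the caller perceives.
            ttfb = time.perf_counter() - start
        chunks.append(chunk)
    total = time.perf_counter() - start
    # ttfb stays None if the stream produced nothing.
    return {"ttfb_s": ttfb, "total_s": total, "text": "".join(chunks)}


if __name__ == "__main__":
    result = measure_stream(fake_llm_stream(["Hello", ", ", "how can I help?"]))
    print(f"TTFB: {result['ttfb_s'] * 1000:.1f} ms, total: {result['total_s'] * 1000:.1f} ms")
    print(result["text"])
```

Tracking TTFB separately from total duration matters for voice: a response can take seconds to finish, but the agent sounds responsive as long as speech starts quickly.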

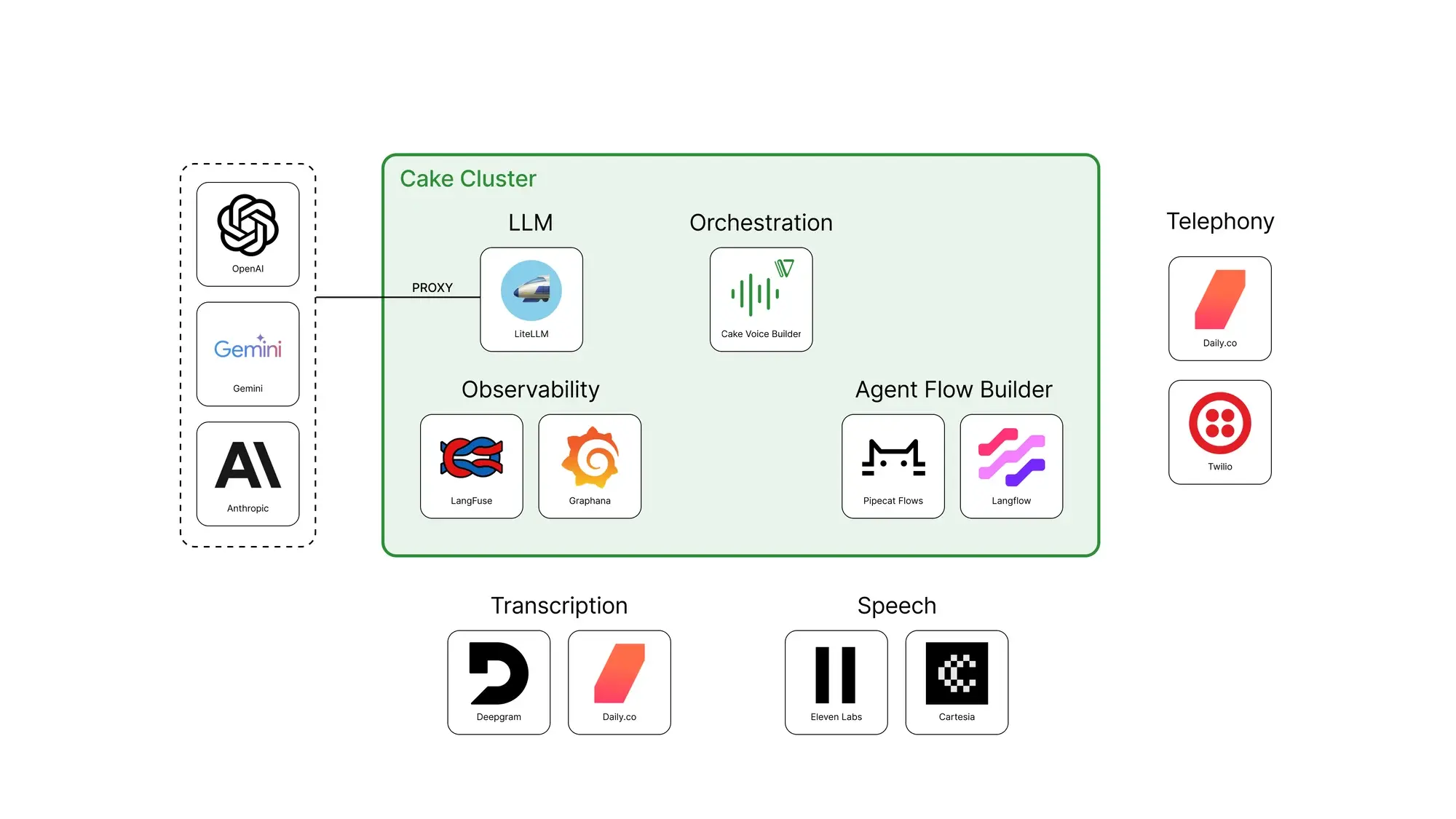

MORE AGENTS, LESS OVERHEAD


Scale fast without breaking the bank

- Efficient scaling with Ray for parallelized execution across hundreds of agents, at a fraction of typical voice SaaS costs.

- LiteLLM integration distributes API calls across multiple services, with failover protection for high availability.

- Custom observability tools backed by Langfuse and ClickHouse support A/B testing for performance optimization.

- Independent voice components such as Deepgram, ElevenLabs, and Cartesia deliver high-quality speech experiences at scale.

- Vendor-agnostic architecture lets you run large-scale deployments without lock-in or brittle dependencies.
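Distributing calls across services with failover can be pictured as a router that rotates which provider it tries first and falls back to the next one on error. The sketch below shows that pattern in plain Python. It is not LiteLLM's API (LiteLLM ships its own router with retry and fallback settings); `Provider`, `FailoverRouter`, `ProviderError`, `flaky`, and `healthy` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from itertools import count
from typing import Callable


class ProviderError(Exception):
    """Raised by a (hypothetical) provider call that fails or times out."""


@dataclass
class Provider:
    name: str
    call: Callable[[str], str]  # prompt -> completion text


class FailoverRouter:
    """Round-robin load distribution with ordered failover (illustrative only)."""

    def __init__(self, providers: list[Provider]):
        if not providers:
            raise ValueError("at least one provider is required")
        self.providers = providers
        self._counter = count()

    def complete(self, prompt: str) -> tuple[str, str]:
        n = len(self.providers)
        start = next(self._counter) % n  # rotate the first choice to spread load
        errors = []
        for offset in range(n):
            provider = self.providers[(start + offset) % n]
            try:
                return provider.name, provider.call(prompt)
            except ProviderError as exc:
                # Record the failure and fall through to the next provider.
                errors.append(f"{provider.name}: {exc}")
        raise ProviderError("all providers failed: " + "; ".join(errors))


def flaky(prompt: str) -> str:
    raise ProviderError("503 Service Unavailable")


def healthy(prompt: str) -> str:
    return f"echo: {prompt}"


if __name__ == "__main__":
    router = FailoverRouter([Provider("primary", flaky), Provider("backup", healthy)])
    for _ in range(2):
        print(router.complete("Where is my order?"))
```

Rotating the starting provider spreads normal traffic, while the inner loop keeps a single provider outage from reaching the caller; only when every provider fails does the error surface.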

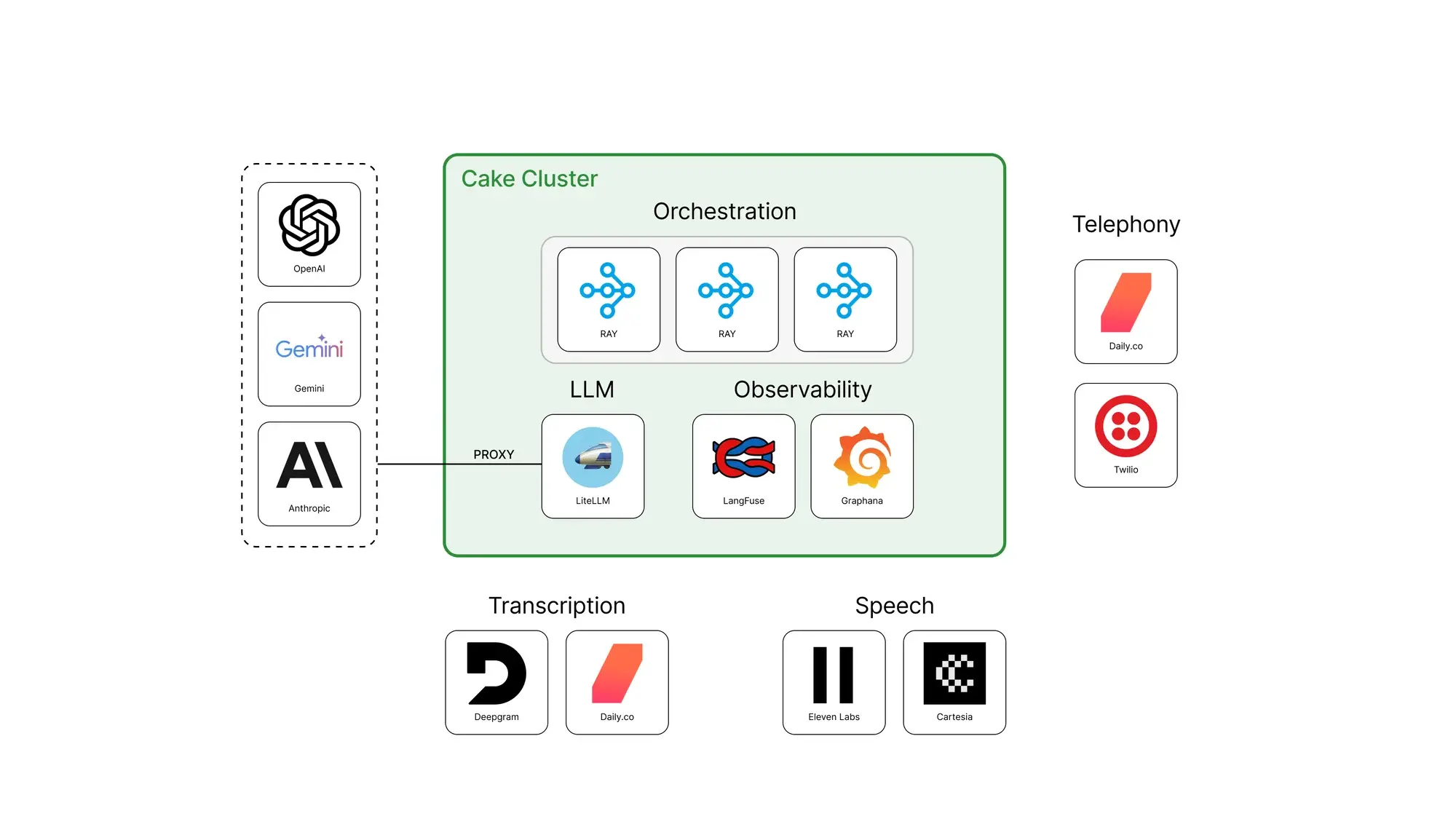

SMARTER RETRIEVAL, CLEANER ARCHITECTURE


Stop the egress. Bring voice to your data, not vice versa.

- Bring-your-own vector store: use Milvus, Weaviate, pgvector, and more, and keep it running in your own environment.

- End-to-end control with zero data egress, no vendor lock-in, and no reliance on walled gardens or black-box infrastructure.

- Better voice experiences powered by real-time retrieval, secure context handling, and seamless orchestration.

THE CAKE DIFFERENCE


From brittle voice bots to scalable, agentic architecture

Traditional IVR / voice bot

Rigid, scripted, and frustrating: Menu trees and keyword-based bots that often confuse more than they help.

- Predefined flows that can’t adapt mid-conversation

- Fails on ambiguous or multi-turn queries

- Requires constant manual scripting to update

- Poor handoff to human agents and no learning over time

Result:

High abandonment, low satisfaction, and high maintenance costs

Voice Agents with Cake

Conversational, adaptive, and action-oriented: Use Cake to build voice agents that understand intent, take action, and improve over time.

- Agents can retrieve, reason, and act across systems

- Handles follow-ups, clarifications, and goal completion

- Easily integrates with APIs, databases, and back-office tools

- Full observability, evaluations, and language model flexibility

Result:

Faster resolution, better CX, and continuous improvement

EXAMPLE USE CASES


Real-world voice workflows, powered by AI


Customer support automation

Deploy AI voice agents that handle high call volumes while maintaining high-quality service and reducing costs.


Outbound sales

Automate repetitive outreach tasks to boost productivity, increase conversion rates, and free up human teams for higher-value work.


Virtual receptionists

Provide 24/7 phone coverage without the expense of round-the-clock staff, improving responsiveness and customer satisfaction.


Order processing & status updates

Automate inbound calls for order placement, tracking, and status updates in industries such as retail, food delivery, or logistics.


Appointment assistant

Send proactive reminders, reschedule appointments, or confirm bookings without human intervention, reducing no-shows and cancellations.


Internal helpdesk automation

Handle routine employee requests such as password resets, benefits inquiries, or system troubleshooting without tying up internal teams.

"Our partnership with Cake has been a clear strategic choice – we're achieving the impact of two to three technical hires with the equivalent investment of half an FTE."

Scott Stafford

Chief Enterprise Architect at Ping

"With Cake we are conservatively saving at least half a million dollars purely on headcount."

CEO

InsureTech Company

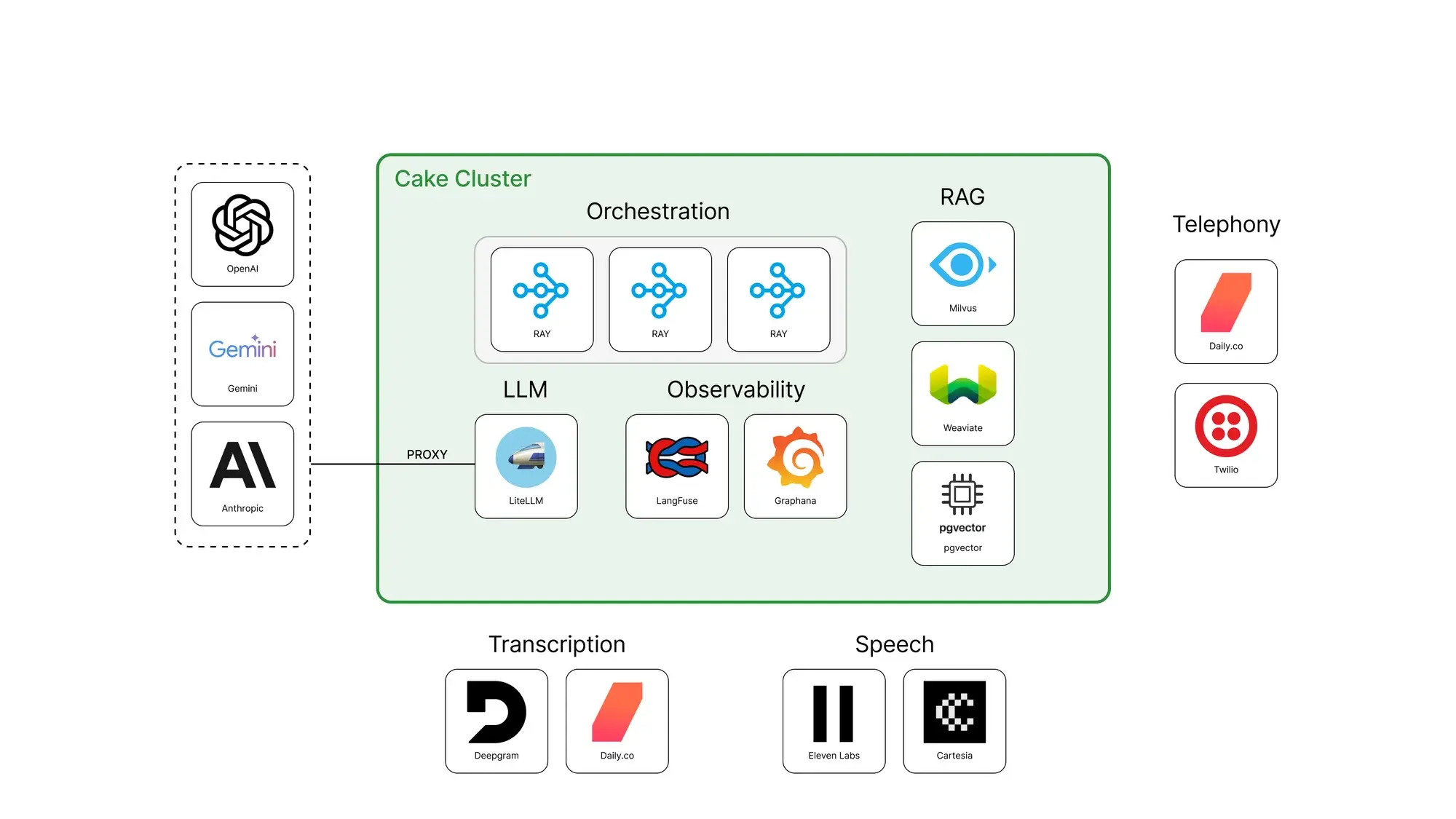

COMPONENTS


Tools for your Cake-powered voice agent stack

LangGraph

Agent Frameworks & Orchestration

LangGraph is a framework for building stateful, multi-agent applications with precise, graph-based control flow. Cake helps you deploy and scale LangGraph workflows with built-in state persistence, distributed execution, and observability.

LiveKit

Streaming

LiveKit delivers real-time audio and video streaming through its open-source WebRTC platform. Cake makes it easy to integrate LiveKit into multi-agent AI workflows with built-in communication handling, orchestration, and secure infrastructure management.

Pipecat

Data Ingestion & Movement

Agent Frameworks & Orchestration

Pipecat is an open-source Python framework for building real-time voice and multimodal agents that can invoke live APIs, trigger tools, and respond to streaming data. Cake provides the infrastructure to run Pipecat in production, handling execution, scaling, and security so you can build dynamic, tool-using agents that integrate with other components.

Milvus

Vector Databases

Milvus is an open-source vector database built for high-speed similarity search. Cake integrates Milvus into AI stacks for recommendation engines, RAG applications, and real-time embedding search.
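Similarity search ranks stored embedding vectors by how close they are to a query vector, which is how a voice agent finds the documents relevant to a caller's question. The brute-force sketch below, in plain Python, shows what such a query computes using cosine similarity. The document IDs and three-dimensional vectors are made-up toy data (real embedding models emit hundreds of dimensions), and `cosine_similarity` and `top_k` are hypothetical helpers, not the Milvus client API. Milvus avoids scanning every vector by building approximate nearest-neighbor indexes such as HNSW or IVF, so this illustrates the scoring, not how Milvus executes it.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0  # treat zero vectors as dissimilar


def top_k(query: list[float], corpus: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Brute-force search: score every stored vector and return the k best matches."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings" (hypothetical data for illustration).
corpus = {
    "refund_policy": [0.9, 0.1, 0.0],
    "store_hours": [0.0, 0.2, 0.95],
    "shipping_times": [0.7, 0.6, 0.1],
}

if __name__ == "__main__":
    query = [0.8, 0.3, 0.0]  # stands in for an embedded question like "Can I get my money back?"
    for doc_id, score in top_k(query, corpus):
        print(f"{doc_id}: {score:.3f}")
```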

Langflow

Agent Frameworks & Orchestration

Langflow is a visual drag-and-drop interface for building LangChain apps, enabling rapid prototyping of LLM workflows.

Langfuse

LLM Observability

Langfuse is an open-source observability and analytics platform for LLM apps, capturing traces, user feedback, and performance metrics.

Frequently asked questions

What is Cake’s AI Voice Agent solution?

Cake’s AI Voice Agent solution enables businesses to build, deploy, and scale AI-powered voice bots in their own cloud environment, which offers better control, lower costs, and built-in observability compared to traditional managed voice platforms.

How is Cake’s voice AI different from other providers?

Most voice AI platforms lock you into expensive, opaque ecosystems. Cake gives you full control over your stack, data, and costs while helping you move faster with modern tools and expert support.

Can I deploy Cake’s voice agents in my own cloud?

Yes. Cake’s voice agents are fully deployable in your own VPC, giving you better data security, lower latency, and no egress fees.

What kind of cost savings can I expect?

Customers using Cake for voice AI typically save significantly on both infrastructure costs and platform fees compared to traditional SaaS models, especially at scale.

Does Cake help with observability and performance tuning?

Absolutely. Cake includes dynamic observability tools (such as Langfuse and Grafana) and expert guidance to help you monitor, measure, and improve your voice agents over time.

Learn more about Cake and voice agents

How to Build an AI Voice Agent Users Actually Like

Learn how to build an AI voice agent step by step, from planning and design to launch, with practical tips for creating a natural, user-friendly...

Open Source Voice AI: Tools, Models & How-Tos

Voice interaction is no longer a novelty; it's an expectation. The real question isn't if you should add voice to your product, but how you can do it...

AI Voice Agent Services for Businesses: A 2025 Guide

See how AI voice agent services for businesses handle support, bookings, and more, improving customer experience and efficiency across every industry.
