Cake for Fine Tuning

Customize LLMs and other foundation models for your domain using open-source fine-tuning pipelines on Cake. Save on compute, preserve privacy, and get production-ready faster without giving up control.

Overview

Generic foundation models are powerful, but they’re not tailored to your business. Fine-tuning closes that gap by adapting base models to your industry, tone, workflows, or use case. The challenge is doing it cost-effectively, securely, and reproducibly.

Cake provides a cloud-agnostic fine-tuning stack built entirely on open source. Use Hugging Face models and tokenizers, run experiments with PyTorch and MLflow, and orchestrate workflows with Kubeflow Pipelines. You can fine-tune LLMs or vision models using your own private datasets, with full observability, lineage, and governance support.

Because Cake is modular and composable, you can bring in the latest open-source fine-tuning tools, such as the PEFT library and methods like LoRA or QLoRA, without waiting for a platform update. And by running in your environment, you cut compute costs and avoid sharing sensitive data with third-party APIs.

Key benefits

- Fine-tune securely and privately: Keep data in your environment while adapting open-source models to your needs.

- Reduce compute and licensing costs: Use optimized workflows and control your infrastructure footprint.

- Integrate the latest fine-tuning tools: Stay current with new methods like LoRA, QLoRA, and PEFT.

- Track experiments and improve performance: Version datasets, configs, and results with full traceability.

- Deploy anywhere: Run fine-tuned models across clouds, regions, or edge environments without retooling.

THE CAKE DIFFERENCE


Fine-tuning with Cake leads to better results across the board

Base model (out-of-the-box)

Generic, inconsistent, and off-brand: Vanilla models are trained on broad internet data, not your product, customers, or voice.

- Responses are often vague, overly verbose, or hallucinated

- Doesn’t understand your terminology, structure, or edge cases

- Lacks alignment with your brand tone or compliance needs

- Requires heavy prompt engineering to get decent output

Result: Okay for a demo, but risky and unreliable in production

Fine-tuned model with Cake

Smarter, faster, and tailored to your business: Use Cake to fine-tune open models with your real data for domain-specific performance.

- Adapts to your tone, structure, and business logic

- Improves accuracy, reduces hallucinations, and cuts prompt complexity

- Securely trains in your own cloud with full compliance and observability

- Accelerates time to ROI by reducing manual intervention

Result: Higher quality outputs, better user experience, and faster time to value

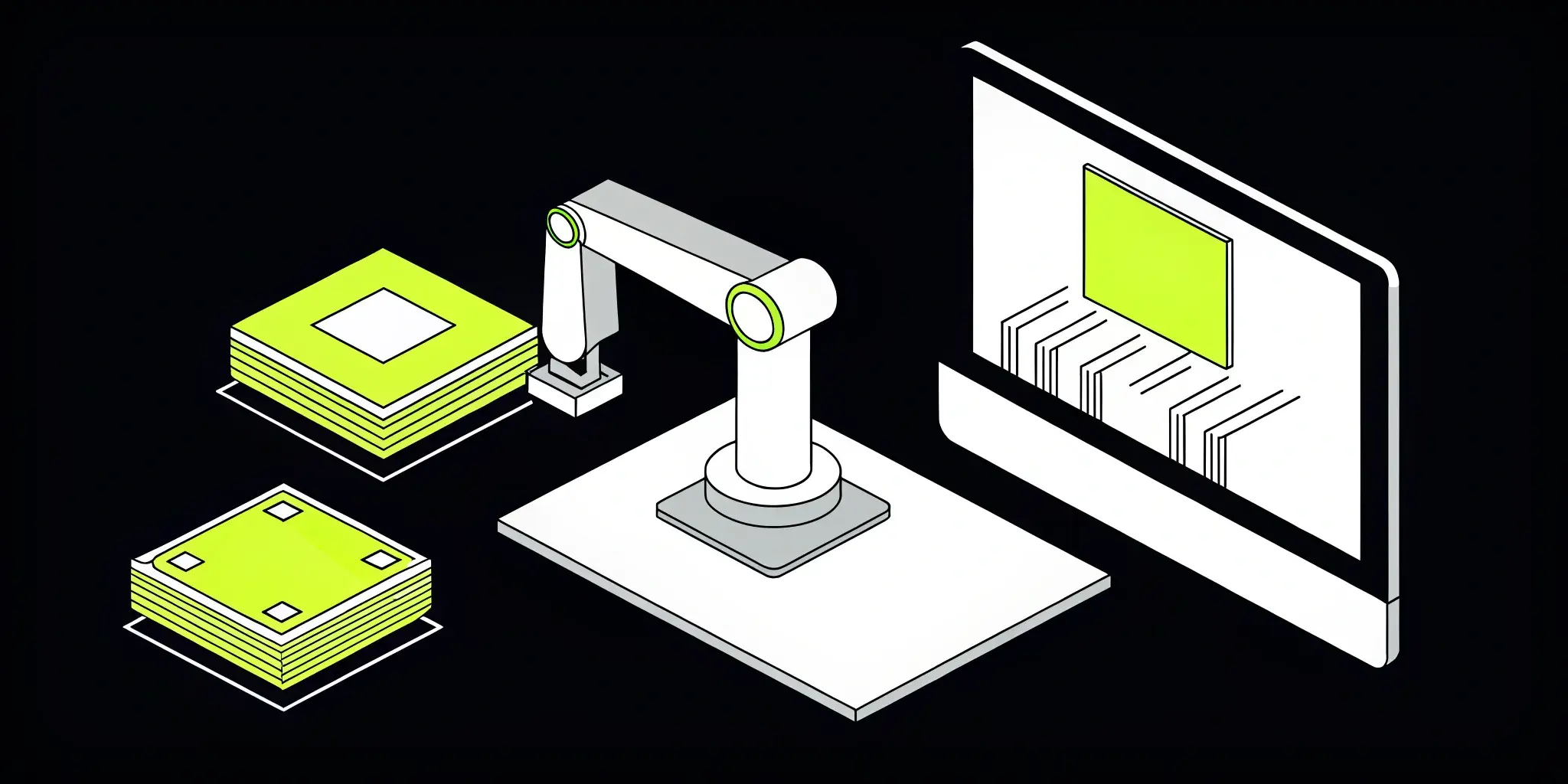

EXAMPLE USE CASES


How teams use Cake's fine-tuning infrastructure to customize foundation models for targeted performance


Domain-specific LLMs

Train a general-purpose model on legal, medical, or financial data for better relevance and terminology.


Instruction and task tuning

Fine-tune models to follow your internal formats, policies, or step-by-step procedures.


Multi-modal adaptation

Customize vision-language or audio-text models to work with your specific inputs or annotation structure.


Improving tone & voice for customer-facing AI

Fine-tune LLMs to match your brand’s tone, formality, or regional language preferences to ensure consistent customer experiences across channels.


Adapting models to handle company-specific jargon

Train models to understand internal acronyms, product names, and workflows, improving performance on support, search, and agent tasks.


Enhancing performance on non-English or low-resource languages

Fine-tune multilingual models to improve understanding and generation in target languages not well covered by default LLM training.

CUSTOMER SERVICE

Fine-tune your AI to speak your language

LLMs don’t come out of the box ready for customer interactions. See how teams use Cake to fine-tune models for brand voice, support workflows, and personalized service.

BLOG

Run production-ready fine-tuning in hours, not weeks

See how Cake helps teams run reproducible fine-tuning experiments, track model changes, and deploy with full observability and governance.

"Our partnership with Cake has been a clear strategic choice – we're achieving the impact of two to three technical hires with the equivalent investment of half an FTE."

Scott Stafford

Chief Enterprise Architect at Ping

"With Cake we are conservatively saving at least half a million dollars purely on headcount."

CEO

InsureTech Company

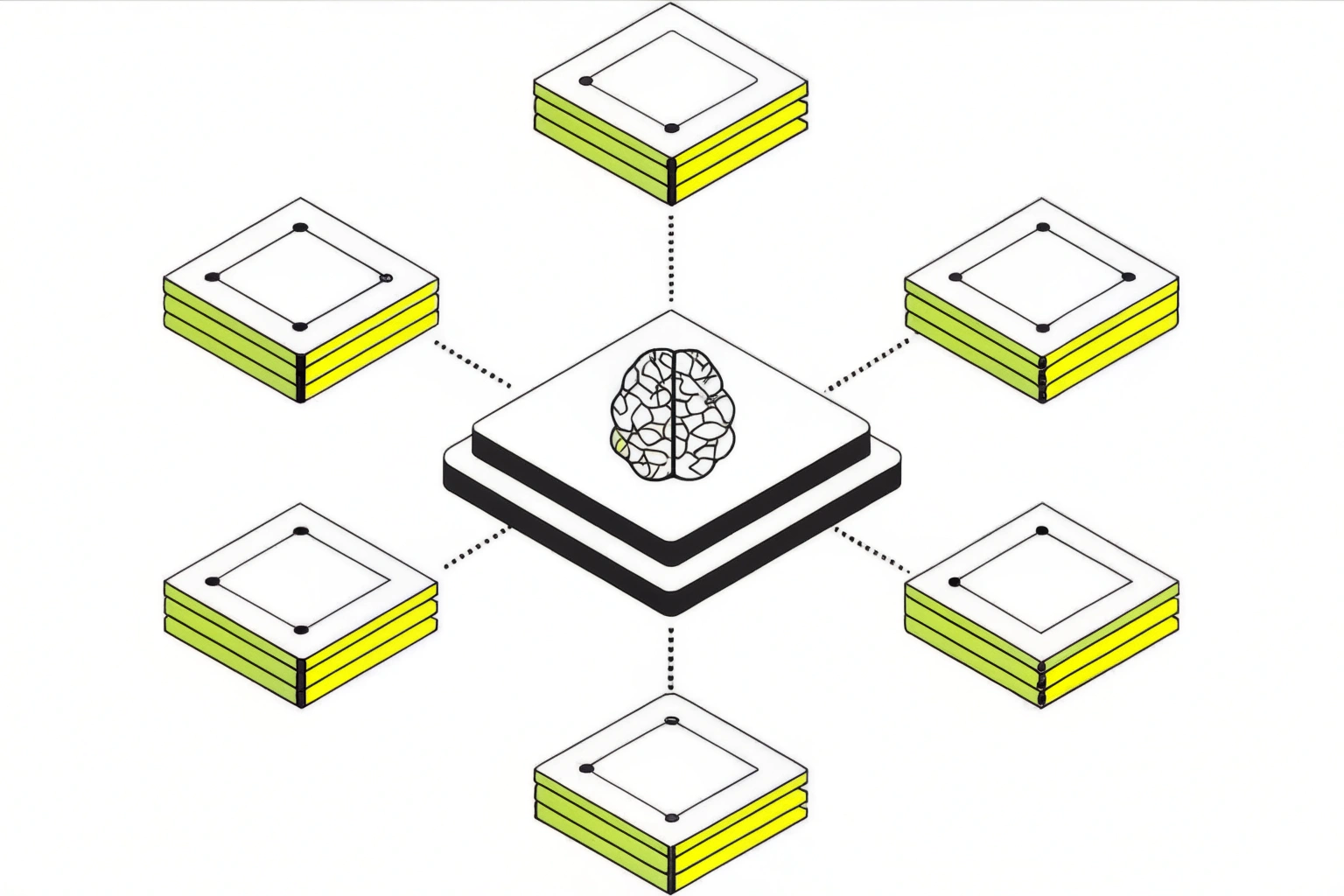

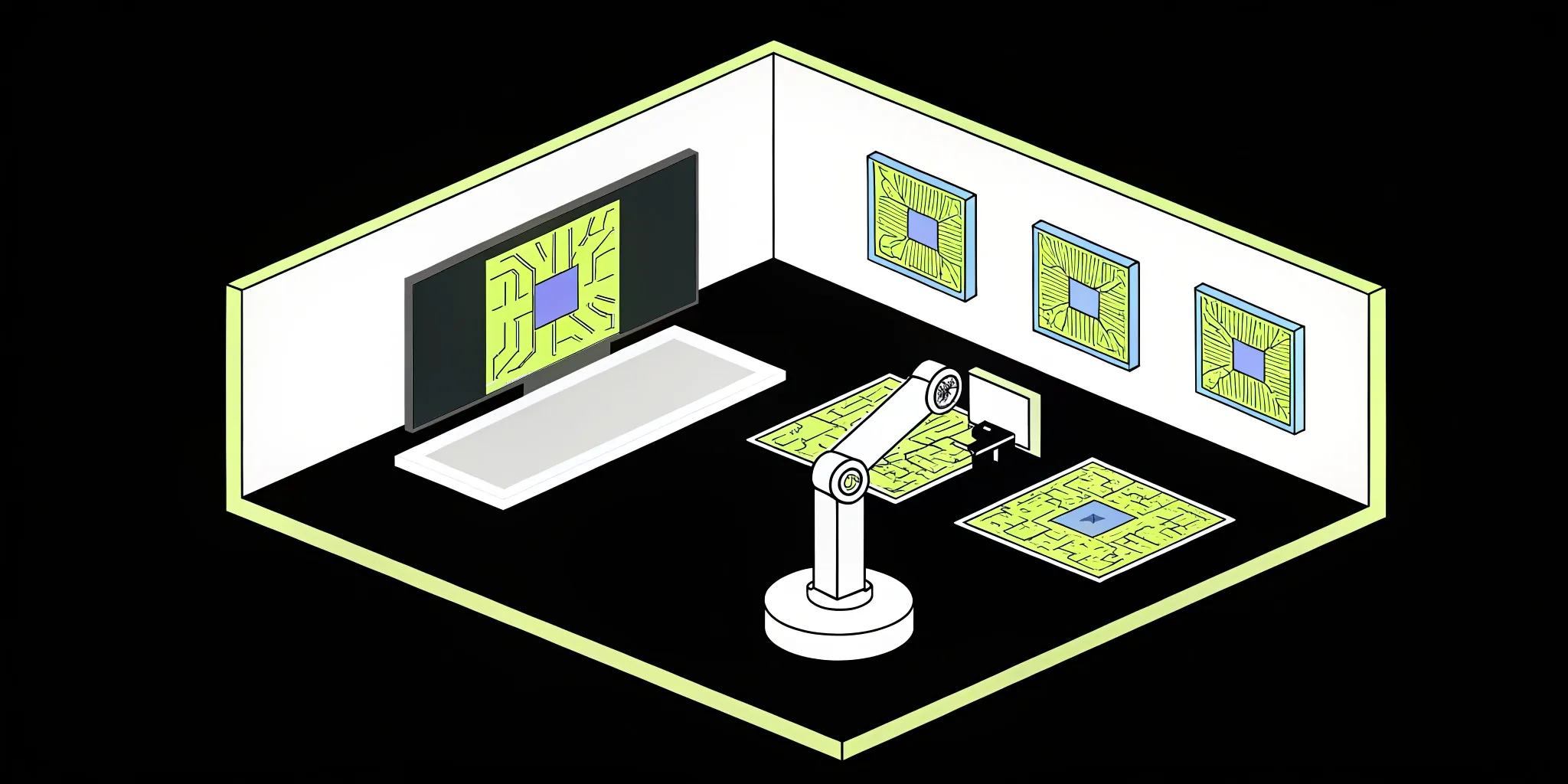

COMPONENTS


Tools that power Cake's fine-tuning stack

Deepchecks

Model Evaluation Tools

Automate data validation and monitor ML pipeline quality with Deepchecks, fully managed and integrated by Cake.

DeepSpeed

Model Training & Optimization

Accelerate large-scale model training and optimize compute costs with Cake’s DeepSpeed orchestration.

TRL

Fine-Tuning Techniques

Set up RLHF workflows and reward modeling for LLMs with Cake’s integrated TRL pipelines and performance tracking.

Unsloth

Model Training & Optimization

Fine-tune LLMs ultra-fast with Unsloth and Cake’s resource-optimized, version-controlled infrastructure.

Label Studio

Data Labeling & Annotation

Label Studio is an open-source data labeling tool for supervised machine learning projects. Cake connects Label Studio to AI pipelines for scalable annotation, human feedback, and active learning governance.

Promptfoo

LLM Observability

LLM Optimization

Promptfoo is an open-source testing and evaluation framework for prompts and LLM apps, helping teams benchmark, compare, and improve outputs.


QLoRA

Model Training & Optimization

ML Model Libraries

QLoRA is a method for fine-tuning large language models efficiently by combining quantization and low-rank adapters. It reduces hardware requirements while largely preserving model performance.
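To make the savings from low-rank adapters and quantization concrete, here is a rough back-of-the-envelope sketch. The layer size (4096 x 4096), adapter rank (16), and model size (7 billion parameters) are illustrative assumptions, not Cake defaults, and the memory estimate covers weights only: it ignores activations, optimizer state, and quantization overhead.

```python
def dense_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the full weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a low-rank adapter.

    The adapter replaces the full update with two small matrices,
    B (d_out x rank) and A (rank x d_in).
    """
    return rank * (d_out + d_in)


def weight_memory_gib(num_params: int, bits_per_param: int) -> float:
    """Approximate memory needed to hold the weights alone, in GiB."""
    return num_params * bits_per_param / 8 / 2**30


# Illustrative (assumed) sizes for one projection layer and one base model.
hidden = 4096
rank = 16
base_model_params = 7_000_000_000

full = dense_params(hidden, hidden)
adapter = lora_params(hidden, hidden, rank)
ratio = adapter / full

fp16_gib = weight_memory_gib(base_model_params, 16)
int4_gib = weight_memory_gib(base_model_params, 4)

print(f"Full update per layer:   {full:,} parameters")
print(f"Adapter update per layer: {adapter:,} parameters ({ratio:.2%})")
print(f"Base weights at 16-bit:  {fp16_gib:.2f} GiB")
print(f"Base weights at 4-bit:   {int4_gib:.2f} GiB")
```

With these assumed numbers, the adapter trains 131,072 parameters per layer instead of 16,777,216 (about 0.78%), and storing the frozen base weights in 4-bit rather than 16-bit cuts weight memory from roughly 13 GiB to roughly 3.3 GiB. Actual savings depend on the model, the layers you adapt, and the training setup.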

Frequently asked questions

What is fine-tuning in machine learning?

Fine-tuning is the process of adapting a pretrained model to your specific domain, data, or task. It allows you to improve accuracy, alignment, and performance without training a model from scratch.

How does Cake support fine-tuning workflows?

Cake provides a modular, cloud-agnostic stack for fine-tuning. You can use open-source tools like Hugging Face and PyTorch, apply methods like QLoRA, run experiments with MLflow, and orchestrate jobs using Kubeflow Pipelines, all within your own environment.

Can I fine-tune both language and vision models with Cake?

Yes. Cake supports fine-tuning across modalities, including LLMs and computer vision models. You can use your own datasets, apply tools like LoRA or PEFT, and deploy models securely on the infrastructure of your choice.

How do I track experiments and results?

Cake integrates with MLflow to track hyperparameters, datasets, configurations, and results. You get full lineage and versioning to support reproducibility, auditing, and performance comparison over time.

Is Cake secure and compliant for regulated industries?

Yes. All fine-tuning jobs run in your environment, with no data egress. Cake includes support for SOC 2, HIPAA, and custom access controls, making it suitable for healthcare, finance, and other high-stakes domains.

