Cake for Agentic RAG

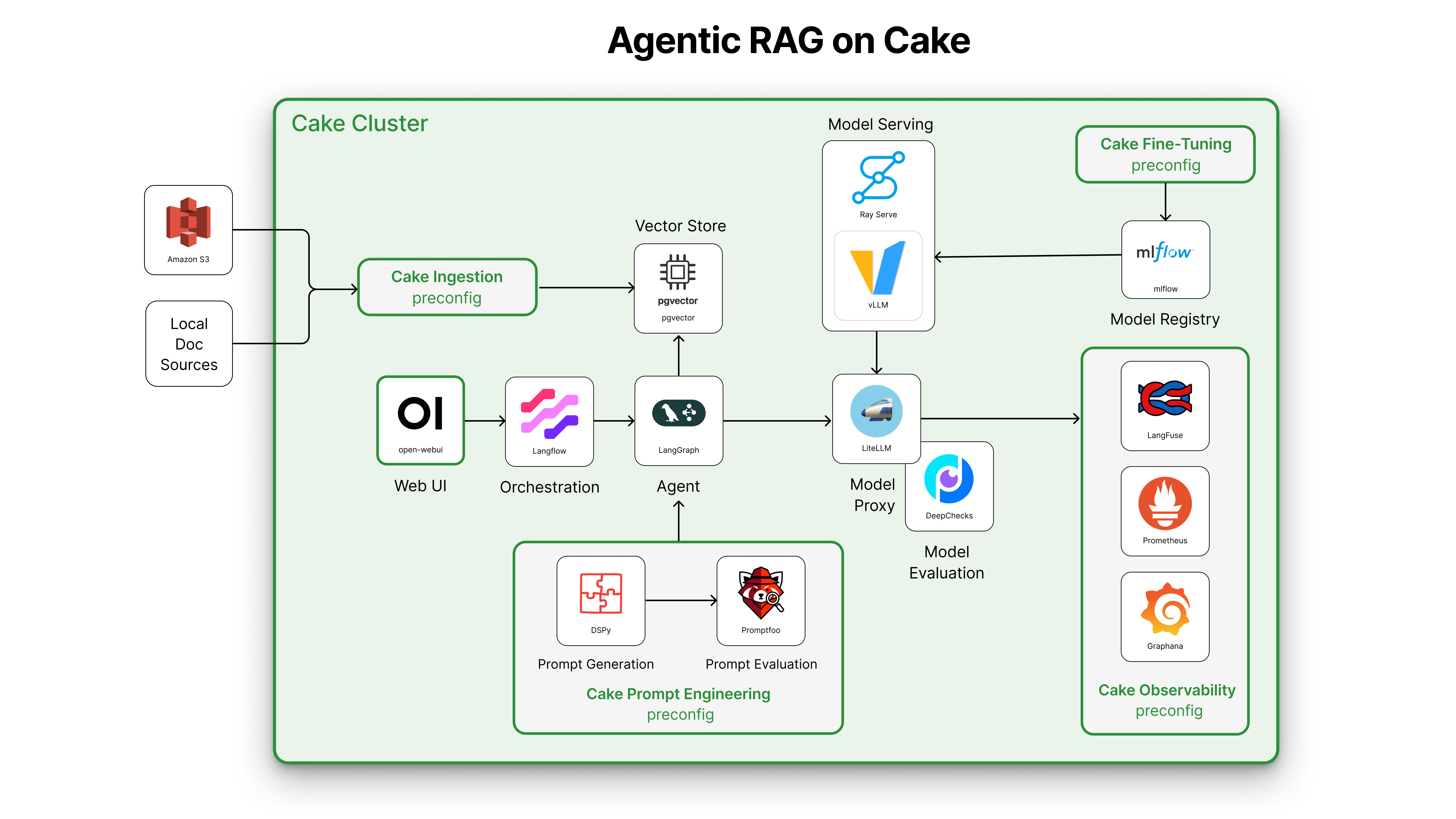

Move from prototype to production faster with pre-vetted configurations of the latest open-source tools. Build dynamic, multi-step agent workflows that retrieve, interpret, and act on enterprise data, with orchestration, prompt optimization, model routing, and full-stack observability on a cloud-agnostic, modular stack.

Overview

Retrieval-augmented generation (RAG) gives AI the ability to pull in live, relevant information from your enterprise knowledge before generating a response. Instead of relying only on static training data, RAG-powered agents can answer with accuracy, context, and up-to-the-minute insight. This is critical for tasks like customer support, research, and decision-making.

But building agentic RAG systems is notoriously complex. From vector search and orchestration to observability and fine-grained access control, each layer introduces new integration challenges. Most teams spend weeks (if not months) just stitching components together, delaying launches and driving up costs.

Cake changes that. With pre-validated, production-ready configurations of the latest open-source tools, AI developers can go from prototype to production in days. You keep your own models, prompts, and vector stores. Cake delivers the glue logic, observability, and security scaffolding so you can move fast without cutting corners.

Key benefits

- Ship faster: Go from notebook to a deployed agentic system without stitching together infrastructure by hand.

- Use open-source tooling: Mix and match LLMs, routers, and chunkers without vendor constraints.

- Scale securely: Build workflows that grow with your data, teams, and compliance needs.

- Debug with visibility: Track agent behavior, data flow, and model decisions with full observability.

- Build on a proven RAG foundation: Start from pre-vetted configurations of cutting-edge tools, reducing setup time and avoiding costly integration pitfalls.

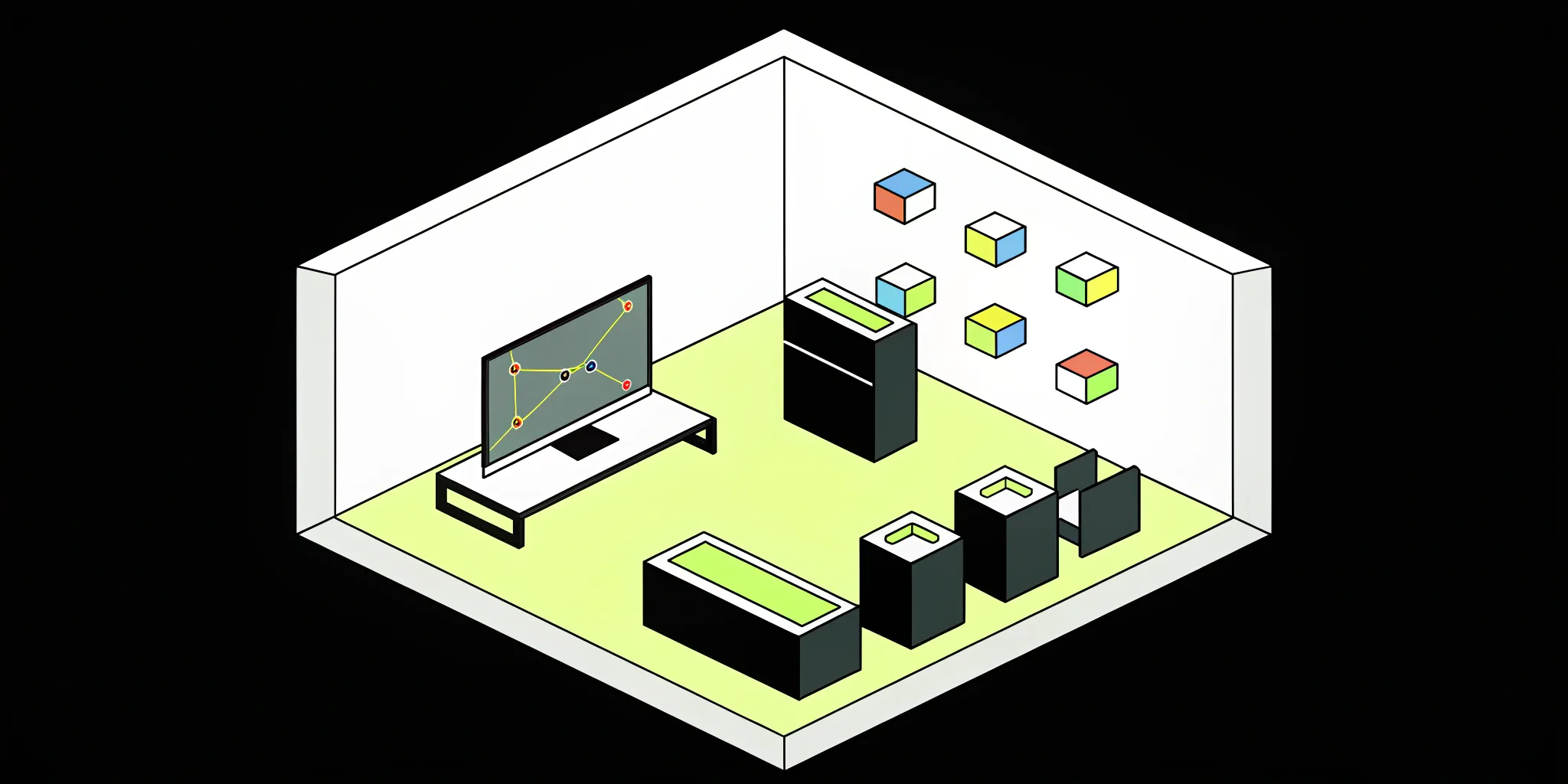

HOW IT WORKS


Accelerate to production with Cake's pre-built configurations

- Connect your data: Ingest unstructured documents from sources like S3 or local drives using Cake's prebuilt ingestion pipelines.

- Spin up intelligent agents: Build multi-step RAG workflows with LangGraph and Langflow, powered by your own vector store and orchestrated through a simple web UI.

- Optimize your prompts: Use DSPy for prompt generation and Promptfoo for automated evaluation, both fully pre-integrated and configurable.

- Serve and route models: Serve models with vLLM and route requests through LiteLLM, with built-in model tracking and fine-tuning workflows managed in MLflow.
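Ingestion pipelines for RAG commonly split each document into overlapping chunks before embedding them. The sketch below is a minimal, hypothetical illustration of fixed-size character chunking with overlap; it is not Cake's ingestion pipeline, and the `chunk_text` function and its `size` and `overlap` parameters are assumptions chosen for illustration.

```python
def chunk_text(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows; consecutive windows share `overlap` chars.

    Hypothetical helper for illustration only, not part of any Cake API.
    """
    if size <= 0:
        raise ValueError("size must be positive")
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    step = size - overlap  # how far each new window advances
    chunks: list[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        # Stop once a window reaches the end of the text to avoid a redundant tail chunk.
        if start + size >= len(text):
            break
    return chunks
```

For example, `chunk_text("abcdefghij", size=4, overlap=2)` returns `['abcd', 'cdef', 'efgh', 'ghij']`. Each chunk repeats the last two characters of the previous one, so text that straddles a boundary has a better chance of appearing whole in at least one chunk. Production chunkers usually split on tokens, sentences, or document structure rather than raw characters.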

HOW IT WORKS


From lookup answers to agents that actually get work done

Naive RAG

Good for demos, brittle in production: A basic retrieval-augmented generation loop with a static prompt and shallow retrieval.

- Relies on simple query-to-response flow

- Single-turn generation without memory or reasoning

- Weak retrieval logic often returns irrelevant chunks

- Hard to scale, evaluate, or debug

Result: Works in a notebook, fails in the real world

Agentic RAG with Cake

Built for reasoning, context, and multi-step tasks: Use Cake to deploy agents that retrieve, plan, and adapt in real time.

- Agents combine RAG with tools, workflows, and memory

- Supports multi-turn, multi-source reasoning

- Built-in evals, tracing, and observability

- Easily extend with function calling, vector filtering, and reranking

Result: Scalable, production-grade AI agents that actually deliver value
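The contrast between a single retrieval pass and a multi-step agent can be sketched in plain Python. This is a toy illustration of an iterative retrieve, check, and refine loop, not Cake's implementation: the keyword-overlap retriever stands in for vector search, and the `is_sufficient` and `refine` callbacks are hypothetical placeholders for steps a real agent would delegate to an LLM. The per-step `trace` list hints at the kind of record that observability tooling captures.

```python
from typing import Callable

Docs = dict[str, str]


def keyword_retrieve(query: str, docs: Docs, k: int = 1,
                     exclude: frozenset[str] = frozenset()) -> list[str]:
    """Toy retriever: rank unseen documents by how many words they share with the query."""
    q = set(query.lower().split())
    scored = [
        (len(q & set(text.lower().split())), doc_id)
        for doc_id, text in docs.items()
        if doc_id not in exclude
    ]
    # Highest overlap first; ties broken by document id for deterministic output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def agentic_retrieve(
    question: str,
    docs: Docs,
    is_sufficient: Callable[[list[str]], bool],  # hypothetical stand-in for an LLM judgment
    refine: Callable[[str, list[str]], str],     # hypothetical stand-in for LLM query rewriting
    k: int = 1,
    max_steps: int = 3,
) -> tuple[list[str], list[dict]]:
    """Retrieve, check the evidence, and refine the query until it suffices or steps run out."""
    query = question
    evidence: list[str] = []
    trace: list[dict] = []  # one record per step, for debugging and observability
    for step in range(max_steps):
        hits = keyword_retrieve(query, docs, k=k, exclude=frozenset(evidence))
        evidence.extend(hits)
        done = is_sufficient(evidence)
        trace.append({"step": step, "query": query, "hits": hits, "sufficient": done})
        if done or not hits:  # stop when satisfied or when nothing new was found
            break
        query = refine(query, evidence)
    return evidence, trace


if __name__ == "__main__":
    docs = {
        "a": "billing service is owned by the payments team",
        "b": "payments team is managed by dana",
        "c": "office hours are nine to five",
    }
    question = "who manages the team that owns the billing service"

    # Naive RAG: one shallow pass finds the owning team but not its manager.
    print(keyword_retrieve(question, docs))

    # Agentic loop: keep following the newest evidence until a manager is found.
    evidence, trace = agentic_retrieve(
        question,
        docs,
        is_sufficient=lambda ev: any("managed by" in docs[d] for d in ev),
        refine=lambda q, ev: docs[ev[-1]],
    )
    print(evidence)
    for record in trace:
        print(record)
```

Run as a script, the naive pass returns only `['a']`, which names the team but not its manager, while the loop takes a second step and collects `['a', 'b']`. Keeping the judgment and rewriting steps as injected callbacks is what lets a real system swap in LLM calls, tools, or rerankers without changing the control flow.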

EXAMPLE USE CASES


Turn data into action with Agentic RAG


Intelligent support agents

Retrieve relevant context, route queries across tools, and provide multi-turn assistance grounded in real data.


Research copilots

Use long-context models to iteratively retrieve, read, and synthesize information across multiple queries.


Enterprise task automation

Enable agents to retrieve internal data, reason over it, and take action using custom toolchains.


Autonomous report generation

Build agents that can retrieve internal data, run analysis, and compile human-readable reports without manual prompting.


Multi-step reasoning across tools

Enable agents to sequence multiple retrieval and execution steps, like querying a vector DB, calling a function, then refining the answer.


Secure RAG for regulated environments

Deploy retrieval workflows that respect row-level permissions, data residency rules, and auditability requirements.
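One common way to honor row-level permissions and data residency rules in RAG is to filter candidate chunks against the requesting user's entitlements before they reach ranking or the LLM prompt, logging each decision for audit. The sketch below assumes each chunk carries hypothetical `allowed_groups` and `region` metadata; it illustrates the pattern only and is not how Cake enforces access control.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # hypothetical ACL field: groups permitted to read this chunk
    region: str                     # hypothetical field: where the source data is stored


def authorized_chunks(
    chunks: list[Chunk],
    user_groups: frozenset[str],
    allowed_regions: frozenset[str],
    audit_log: list[tuple[str, str]],
) -> list[Chunk]:
    """Keep only chunks the user may see, recording an allow/deny entry for every chunk."""
    visible: list[Chunk] = []
    for chunk in chunks:
        # Permission check (shared group) and residency check (approved region) must both pass.
        permitted = bool(chunk.allowed_groups & user_groups) and chunk.region in allowed_regions
        audit_log.append((chunk.doc_id, "allow" if permitted else "deny"))
        if permitted:
            visible.append(chunk)
    return visible
```

Filtering before ranking, rather than after generation, means unauthorized text never enters the model's context, and the audit log records why each chunk was or was not used.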

VIDEO

What it really takes to productionize RAG

During his presentation at Data Council 2025, Cake co-founder & CTO Skyler Thomas breaks down what it takes to move RAG from prototype to production in enterprise environments, including:

- Why most RAG projects stall before reaching production

- How to structure evaluation, orchestration, and observability

- The open-source patterns behind scalable agentic systems

- How Cake simplifies the path to enterprise-grade deployment

BLOG

Why 90% of Agentic RAG Projects Fail (and How Cake Changes That)

Most teams get stuck in the “60% demo trap,” i.e., a prototype that works in a notebook but breaks in production. This blog breaks down where naive RAG architectures fall short and how Cake helps you build agentic systems that are observable, scalable, and goal-driven.

"Our partnership with Cake has been a clear strategic choice – we're achieving the impact of two to three technical hires with the equivalent investment of half an FTE."

Scott Stafford

Chief Enterprise Architect at Ping

"With Cake we are conservatively saving at least half a million dollars purely on headcount."

CEO

InsureTech Company

COMPONENTS


Tools that power Cake's Agentic RAG stack

LangGraph

Agent Frameworks & Orchestration

LangGraph is a framework for building stateful, multi-agent applications with precise, graph-based control flow. Cake helps you deploy and scale LangGraph workflows with built-in state persistence, distributed execution, and observability.

LangChain

Agent Frameworks & Orchestration

LangChain is a framework for developing LLM-powered applications using tools, chains, and agent workflows.

Weaviate

Vector Databases

Weaviate is an open-source vector database and search engine built for AI-powered semantic search. Cake integrates Weaviate to support scalable retrieval, recommendation, and question answering systems.

Langflow

Agent Frameworks & Orchestration

Langflow is a visual drag-and-drop interface for building LangChain apps, enabling rapid prototyping of LLM workflows.

DSPy

LLM Optimization

DSPy is a framework for optimizing LLM pipelines using declarative programming, enabling dynamic tool selection, self-refinement, and multi-step reasoning.

Promptfoo

LLM Observability, LLM Optimization

Promptfoo is an open-source testing and evaluation framework for prompts and LLM apps, helping teams benchmark, compare, and improve outputs.
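To make the evaluation idea concrete, here is a minimal, hypothetical harness in the spirit of prompt-testing tools: each prompt variant is scored by the fraction of test cases whose output contains an expected string. It does not use Promptfoo's actual configuration format or API, and the `variants` callables are assumptions standing in for calls to an LLM with different prompts.

```python
from typing import Callable


def evaluate(
    variants: dict[str, Callable[[str], str]],  # variant name -> hypothetical generate function
    cases: list[tuple[str, str]],               # (input, expected substring) pairs
) -> dict[str, float]:
    """Return, per variant, the fraction of cases whose output contains the expected text."""
    scores: dict[str, float] = {}
    for name, generate in variants.items():
        # Case-insensitive "contains" check on each generated output.
        passed = sum(expected.lower() in generate(prompt).lower() for prompt, expected in cases)
        scores[name] = passed / len(cases) if cases else 0.0
    return scores
```

Comparing scores across variants on a fixed test set is the basic loop behind benchmarking prompts; real tools add richer assertion types, model-graded checks, and reporting on top of it.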

Frequently asked questions

What makes Cake ideal for building Agentic RAG systems?

Cake gives you a production-ready, cloud-agnostic stack that supports long-context LLMs, vector databases, chunkers, routers, and agent frameworks without the glue code. You get observability, orchestration, and evaluation out of the box.

How does Cake speed up Agentic RAG development?

With Cake, you don’t have to manually integrate every component in your stack. Modular blueprints, built-in orchestration, and open-source compatibility let teams go from prototype to production up to 70% faster.

Can I use my preferred LLMs, retrievers, and agent frameworks with Cake?

Yes. Cake is designed to be composable and open. You can mix and match any LLM, embedding model, vector store, or routing logic, so your stack stays flexible and future-proof.

How does Cake help me scale Agentic RAG securely?

Cake runs in your own cloud, integrates with enterprise auth and audit systems, and meets compliance standards like SOC 2 and HIPAA. You stay in control of your data, compute, and governance from day one.

What kind of observability does Cake provide for Agentic RAG?

You get full visibility into each agent’s behavior, including routing decisions, tool calls, memory states, and prompt flows. This makes it easier to debug, optimize, and continuously improve your system.

Learn more about Agentic RAG

13 Open Source RAG Frameworks for Agentic AI

Find the best open source RAG framework for building agentic AI systems. Compare top tools, features, and tips to choose the right solution for your...

9 Most Popular Vector Databases: How to Choose

Find the most popular vector databases for AI projects. Compare features, scalability, and use cases to choose the right vector database for your...

19 Top RAG Tools for Your Enterprise AI Stack

A standard large language model is like a brilliant new hire—they aced the exams but have zero on-the-job experience with your company. They have a...
