The Main Goals of MLOps (And What They Aren't)

Your team builds incredible AI models, but getting them deployed and managed effectively is a whole other game. This is the core challenge MLOps solves, and it's more than just DevOps for machine learning. A great way to test your understanding is a common quiz question: which of the following is not one of the main goals of MLOps?

- Streamline moving models into production

- Make machine learning scale inside organizations

- Focus on code development and deployment

- Ensure deployed models are well maintained, perform as expected, and do not have any adverse effects on the business

The answer is "focus on code development and deployment," and it highlights a critical distinction. True MLOps automation covers the entire model lifecycle, from data to deployment to robust monitoring, not just the code.

Key takeaways

- Adopt MLOps for smarter AI delivery: Implement MLOps to refine the entire lifecycle of your ML models—from development to production—making your AI projects more efficient and dependable.

- Establish core MLOps capabilities: Systematically build essential practices, including rigorous data management, automated deployment workflows, continuous performance monitoring, and effective cross-team collaboration, for successful AI.

- Overcome obstacles and prioritize responsible AI: Proactively manage common MLOps hurdles, including data quality and infrastructure scaling, while embedding ethical considerations like fairness and transparency into your AI systems.

What is MLOps and why should you care?

So, you're hearing a lot about MLOps, and you're probably wondering what all the buzz is about. Think of MLOps as the essential framework that helps you take your brilliant ML ideas from the lab and make them work reliably in the real world. It’s a set of practices that brings together ML, DevOps (those trusty practices from software development), and Data Engineering. The main goal? To make deploying and maintaining your ML models smoother and more efficient. This involves managing the entire ML model lifecycle, from gathering and preparing data all the way to deployment, and keeping an eye on how the model performs.

Now, why should this matter to you and your business? Well, if you've ever tried to get an AI project off the ground, you know it’s not always a walk in the park. MLOps steps in to tackle some of the biggest headaches. Commonly cited MLOps benefits include "faster releases, automated testing, agile approaches, better integration, and less technical debt." It’s all about creating a more streamlined workflow, getting your data scientists and operations teams to work together seamlessly, and ultimately making your ML projects more successful.

Beyond just making things run smoother, MLOps is crucial for making sure your ML models are dependable, can grow with your needs, and actually help you achieve your business objectives. It’s not just a technical nice-to-have; it’s a strategic must-do if you're serious about getting tangible results from your AI efforts.

The real goals of MLOps

When we talk about MLOps, it’s easy to get lost in the technical details of automation and pipelines. But at its core, MLOps is about achieving clear business goals. It’s the bridge that takes your machine learning models from a promising experiment to a reliable, value-generating part of your operations. The main objective is to make sure your ML models are developed, tested, and used in a consistent, reliable, and efficient way. This means creating a repeatable process that you can trust, whether you’re deploying your first model or your hundredth. It’s about building a foundation for scalable AI that doesn’t just work once, but continues to perform and adapt over time, driving real results for your organization.

Ensuring model reliability and accuracy

The single most important goal of MLOps is to ensure your ML models consistently give accurate results. A model that performs perfectly in a controlled lab environment is only useful if it maintains that performance in the messy, unpredictable real world. MLOps establishes the processes for rigorous testing, validation, and versioning to make sure that what you deploy is robust and dependable. But the work doesn’t stop at deployment. A crucial part of the framework is continuous monitoring, which keeps models accurate and reliable for as long as they are in use. This proactive approach helps you catch issues like data drift before they impact your business, ensuring your AI continues to be an asset, not a liability.

What MLOps is not

With all the buzz around MLOps, it’s just as important to understand what it isn’t. First, it’s not simply DevOps with a new name. While MLOps borrows heavily from DevOps principles like automation and continuous integration, it’s a distinct practice tailored to the unique challenges of machine learning. Unlike traditional software, ML systems have complexities related to data, model retraining, and performance monitoring that require specialized tools and workflows. Think of it this way: MLOps starts from DevOps principles and extends them to cover data and models as well as code. It addresses the entire lifecycle, from data ingestion and model training to deployment and governance, recognizing that ML is not a one-and-done software release but a continuous, iterative process.

It's more than just code development

A common misconception is that MLOps is just about pushing model code into production. In reality, the model's code is often a small part of the overall system. A successful MLOps strategy encompasses the entire machine learning lifecycle, including data pipeline management, feature engineering, model versioning, and regulatory compliance. That breadth is what makes machine learning models work well, scale with demand, and stay easy to maintain once they are in production. This holistic approach is why many teams turn to integrated platforms like Cake, which manage the entire stack and simplify the complexities of building a production-ready, scalable AI system from the ground up.

The key stages of the MLOps lifecycle

Think of MLOps as a well-oiled machine with several interconnected parts, all working together to get your AI initiatives running smoothly and efficiently. It’s not just one thing; it’s a collection of practices and tools that cover the entire lifecycle of an ML model. Each piece plays a crucial role, from the initial handling of your data to keeping your models in top shape long after they’ve been launched into the real world.

Understanding these components will help you see how MLOps can transform your ML projects from experimental ideas into tangible, impactful business solutions. When these pieces are in sync, you can expect more reliable models, faster development cycles, and a much clearer path to getting value from your AI investments. At Cake, we see firsthand how a solid MLOps framework helps businesses accelerate their AI projects by managing the entire stack effectively. Let's explore these key pieces one by one, so you can see how they fit together to build a robust MLOps practice.

Get your data ready for modeling

Your data is the absolute foundation of any ML model. Without high-quality, well-organized data, even the most sophisticated algorithms will struggle to deliver accurate results. MLOps brings a necessary discipline to this foundational step. It’s about establishing "a repeatable, automated workflow" for how you collect, clean, transform, and version your datasets. This isn't just a one-time task at the beginning; MLOps teams also continuously "monitor the data" to catch issues like data drift or degradation early on. By ensuring your data is always analysis-ready and reliable through strong data governance, you're setting your models up for success from the very start and building a trustworthy AI system.
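To make the idea of a repeatable, versioned data step concrete, here is a minimal Python sketch. It assumes tabular records held as dicts; the field names (`customer_id`, `age`, `spend`) and the cleaning rules are hypothetical. Because `clean` is a pure function and `fingerprint` hashes its output, rerunning the step on the same raw data always yields the same dataset version.

```python
import hashlib
import json

# Hypothetical raw records; field names and values are illustrative only.
RAW = [
    {"customer_id": "42", "age": " 37 ", "spend": "120.50"},
    {"customer_id": "7", "age": "", "spend": "80"},
    {"customer_id": "42", "age": "37", "spend": "120.50"},  # duplicate id
]


def clean(records):
    """Deterministic cleaning: strip whitespace, cast types, drop rows with a
    missing age, and de-duplicate on customer_id (first occurrence wins)."""
    seen = set()
    out = []
    for r in records:
        age = r["age"].strip()
        if not age or r["customer_id"] in seen:
            continue
        seen.add(r["customer_id"])
        out.append({
            "customer_id": int(r["customer_id"]),
            "age": int(age),
            "spend": float(r["spend"]),
        })
    # Sort by id so the cleaned dataset has one canonical row order.
    return sorted(out, key=lambda row: row["customer_id"])


def fingerprint(records):
    """Short content hash of a cleaned dataset, usable as its version id."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


cleaned = clean(RAW)
print(cleaned)  # [{'customer_id': 42, 'age': 37, 'spend': 120.5}]
print("dataset version:", fingerprint(cleaned))
```

Any change to the raw data or to the cleaning rules produces a different fingerprint, which is what lets you tie a trained model back to the exact data it saw.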

Time to build and test your models

Building effective ML models is rarely a straight shot; it’s an iterative journey filled with experimentation. Your team will likely try out different algorithms, feature engineering techniques, and various settings to find what works best for your specific problem. MLOps supports this creative and analytical process by providing tools and frameworks for tracking these experiments, managing code, and facilitating effective team collaboration. While a key goal is to “automate the deployment of ML models,” the path to achieving this involves a significant amount of learning and refinement. MLOps helps streamline this experimentation phase, allowing your team to iterate faster and discover the most performant models more efficiently.

Unit, integration, and data quality testing

Once you have a model that looks promising, it’s time to put it through its paces with rigorous testing. In the world of MLOps, this goes far beyond just checking for bugs in your code. A comprehensive testing strategy ensures every part of your system is solid. This includes unit tests for individual code components, integration tests to see how different parts of your pipeline work together, and, most importantly, data quality tests. You need to continuously validate your data for things like accuracy, completeness, and drift to ensure your model’s predictions remain reliable. After all, your model is only as good as the data it’s trained on. Automating these checks is essential, as it allows you to catch issues early and maintain a trustworthy AI system over time.
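Data quality checks like these can live alongside ordinary unit tests. The sketch below assumes batches arrive as lists of dicts; the column names, the 5% missing-value budget, and the valid ranges are hypothetical choices. Dedicated libraries such as Great Expectations or pandera offer richer versions of the same idea.

```python
def check_batch(rows, required=("age", "spend"), max_missing_rate=0.05,
                ranges=None):
    """Return human-readable failures for a batch of dict rows.
    An empty list means the batch passed. All thresholds are illustrative."""
    if ranges is None:
        ranges = {"age": (0, 120), "spend": (0.0, 1_000_000.0)}
    if not rows:
        return ["batch is empty"]
    failures = []
    # Completeness: share of rows where a required column is missing.
    for col in required:
        missing = sum(1 for r in rows if r.get(col) is None)
        rate = missing / len(rows)
        if rate > max_missing_rate:
            failures.append(
                f"{col}: missing rate {rate:.0%} exceeds {max_missing_rate:.0%}")
    # Validity: present values must fall inside the allowed range.
    for col, (lo, hi) in ranges.items():
        bad = [r[col] for r in rows
               if r.get(col) is not None and not lo <= r[col] <= hi]
        if bad:
            failures.append(f"{col}: {len(bad)} value(s) outside [{lo}, {hi}]")
    return failures


def test_check_batch():
    """A pytest-style unit test; pytest would discover it by name."""
    good = [{"age": 30, "spend": 10.0}, {"age": 45, "spend": 0.0}]
    bad = [{"age": 30, "spend": 10.0},
           {"age": None, "spend": 5.0},
           {"age": 150, "spend": 1.0}]
    assert check_batch(good) == []
    assert check_batch(bad) == [
        "age: missing rate 33% exceeds 5%",
        "age: 1 value(s) outside [0, 120]",
    ]


test_check_batch()
```

Running the same checks in CI (on sample data) and in production (on each incoming batch) keeps the definition of "good data" in one place.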

Automate your deployment process

Once you've developed a promising model, the next significant step is getting it into production, where it can start delivering real value. MLOps truly shines here by automating the deployment process. Instead of relying on manual, often error-prone handoffs, you can build robust CI/CD (Continuous Integration/Continuous Delivery) pipelines specifically designed for ML. These pipelines coordinate the steps of an ML project, deliver improved models continuously, and give ML and other teams a shared, predictable path to production. Automation lets you deploy new models more quickly and consistently, and roll back to a previous version swiftly if something doesn’t go as planned. This is a core principle of modern software delivery.
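As a minimal sketch of one step such a pipeline might run after training, here is a promotion gate in Python. The metric name (`auc`), the latency budget, and the metric dictionaries are hypothetical; a real job would read them from evaluation artifacts. Returning a non-zero exit code is the conventional way to make a CI system fail the job and stop the deployment.

```python
def should_promote(candidate, production, metric="auc", min_gain=0.0,
                   max_latency_ms=50.0):
    """Promote only if the candidate's metric is at least production's plus
    min_gain and its latency fits the budget."""
    if candidate["latency_ms"] > max_latency_ms:
        return False, "latency budget exceeded"
    if candidate[metric] < production[metric] + min_gain:
        return False, f"{metric} did not improve"
    return True, "ok"


def main(candidate, production):
    ok, reason = should_promote(candidate, production)
    print(f"promote={ok} ({reason})")
    # CI systems treat a non-zero exit status as a failed job.
    return 0 if ok else 1


# Hypothetical evaluation results for the current and candidate models.
production_metrics = {"auc": 0.81, "latency_ms": 20.0}
candidate_metrics = {"auc": 0.83, "latency_ms": 22.0}
exit_code = main(candidate_metrics, production_metrics)
```

In a pipeline script you would finish with `raise SystemExit(exit_code)` so the CI runner sees the result.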

Safe deployment strategies

Pushing a new model into production can feel like a high-stakes gamble. Will it perform as well as it did in testing? Will it introduce unexpected errors? This is where safe deployment strategies come in. Instead of a risky, all-at-once launch, these methods allow you to release new models gradually and with control. The core idea is to limit potential negative impact while gathering real-world performance data. By implementing strategies like shadow deployment, A/B testing, and canary testing, you can validate your model's effectiveness and stability before it's fully live. This approach helps you measure the health of your models throughout their lifecycle, ensuring that each new version is a genuine improvement.

Shadow deployment

Imagine being able to test your new model with live production traffic without a single user ever knowing. That’s the magic of shadow deployment. With this strategy, you run the new model in parallel with your existing one. The live model continues to handle all user requests and serve responses, while the new "shadow" model receives the same inputs and makes its own predictions behind the scenes. You can then compare the outputs, performance, and error rates of both models in a real-world environment. It’s the ultimate safety net, allowing you to identify potential issues and validate performance without any risk to the user experience.
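Here is a minimal sketch of the request path in Python, assuming models are plain callables. The threshold-based `live_model` and `shadow_model` are hypothetical stand-ins. For simplicity the shadow runs synchronously here; a production setup would typically mirror traffic asynchronously so the shadow adds no latency.

```python
import logging

logger = logging.getLogger("shadow")


def predict_with_shadow(features, live_model, shadow_model, comparisons):
    """Return the live model's prediction; run the shadow model on the same
    input only to record its output for offline comparison."""
    live_pred = live_model(features)
    record = {"input": features, "live": live_pred}
    try:
        record["shadow"] = shadow_model(features)
    except Exception as exc:  # a broken shadow must never break serving
        logger.warning("shadow model failed: %s", exc)
        record["shadow_error"] = str(exc)
    comparisons.append(record)
    return live_pred  # users only ever see the live model's output


# Hypothetical models: flag transactions above different thresholds.
def live_model(x):
    return x["amount"] > 100


def shadow_model(x):
    return x["amount"] > 80


comparisons = []
for amount in (50, 90, 150):
    predict_with_shadow({"amount": amount}, live_model, shadow_model, comparisons)

disagreements = sum(r["live"] != r.get("shadow") for r in comparisons)
print(f"disagreement rate: {disagreements / len(comparisons):.2f}")
```

The logged `comparisons` are what you analyze afterward: disagreement rates, error counts, and, once outcomes are known, which model was right more often.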

A/B testing

When you need to know which model version drives better business outcomes, A/B testing is your go-to strategy. This method involves routing a portion of your user traffic to the new model (version B) while the rest continues to use the current model (version A). This allows for a direct, statistical comparison of how each model influences key metrics like user engagement, conversion rates, or accuracy under the exact same conditions. It’s a powerful way to make data-driven decisions about which model to fully deploy. This kind of rigorous evaluation serves as one of the final model evaluation gates before a model is promoted across the board.
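Two pieces make an A/B test work: stable assignment of users to variants, and a statistical comparison of the outcome. The sketch below uses hash-based bucketing and a pooled two-proportion z-test on conversion counts; both are common choices rather than the only ones, and the user ids and conversion numbers are hypothetical.

```python
import hashlib
import math


def assign_variant(user_id, treatment_share=0.5):
    """Hash the user id into one of 10,000 buckets so a given user always
    lands in the same variant across requests."""
    bucket = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 10_000
    return "B" if bucket < treatment_share * 10_000 else "A"


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates,
    using the pooled rate for the standard error."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


print(assign_variant("user-123"))  # same answer on every call
# Hypothetical results: 200/5000 conversions on A, 260/5000 on B.
z, p = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Hashing the id, rather than drawing a random number per request, is what keeps each user's experience consistent; the p-value then indicates whether the observed difference is larger than chance would plausibly explain.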

Canary testing

Canary testing is a cautious and methodical approach to rolling out a new model. You start by releasing the new version to a very small subset of users—the "canaries." Your team closely monitors the model's performance and the system's stability for this small group. If everything looks good, you gradually increase the traffic to the new model, expanding the user group in phases. This process minimizes the "blast radius" if an issue arises, as you can quickly roll back the change with minimal user impact. It’s a key practice for ensuring you can deploy ML models in a repeatable and automated way, building confidence with each successful phase of the rollout.
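The rollout logic itself can be small. The following Python sketch assumes error rate is the health signal; the stage percentages and the tolerance are hypothetical values you would tune. Returning `0.0` stands for a rollback to the current model.

```python
# Hypothetical rollout schedule: fraction of traffic sent to the new model.
STAGES = [0.01, 0.05, 0.25, 0.50, 1.00]


def next_canary_share(current_share, canary_error_rate, baseline_error_rate,
                      tolerance=0.002):
    """Advance to the next stage if the canary is healthy; otherwise return
    0.0, meaning all traffic goes back to the current model."""
    if canary_error_rate > baseline_error_rate + tolerance:
        return 0.0
    later = [s for s in STAGES if s > current_share]
    return later[0] if later else current_share  # already fully rolled out


# Simulated observations per stage (hypothetical error rates).
share = STAGES[0]
for observed in (0.010, 0.011, 0.010, 0.011):
    share = next_canary_share(share, observed, baseline_error_rate=0.010)
    print(f"canary now receives {share:.0%} of traffic")
    if share == 0.0:
        break
```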

Keep an eye on your models in production

The work doesn’t stop once your model is live and making predictions. In fact, that’s when a new, crucial phase begins: continuous monitoring. MLOps emphasizes the importance of constantly tracking your model's performance in the real world. Are its predictions still accurate? Has the input data changed in unexpected ways that might affect its output? You need systems in place that monitor both the incoming data and the model's predictions, so you can tell when the model needs to be retrained or redeployed. Gathering this feedback is vital because it shows how your model is truly performing and when interventions are needed. This proactive approach helps your AI projects keep delivering a return on investment over their entire lifecycle. Effective model monitoring is key to long-term success.

Retraining and versioning for better performance

ML models aren't static; they operate in a dynamic world where data patterns can shift and evolve. As the underlying data changes, a model trained on older data can become less accurate over time. Data inconsistencies are among the most prevalent problems, and models can deteriorate without active management. MLOps addresses this by implementing systematic retraining and meticulous versioning. The ML development process is iterative, and this approach also applies to models currently in production. You'll need to retrain your models with fresh data periodically to maintain their accuracy and relevance. Just as importantly, model versioning allows you to keep a clear history of changes, compare the performance of different model iterations, and confidently roll back to a previous, stable version if a newly deployed model underperforms.

MLOps vs. DevOps: what sets them apart?

You may wonder how MLOps fits into DevOps, especially if your team is already familiar with DevOps practices. Think of MLOps as an extension of DevOps, specifically tailored for the world of ML. Both share the same core principles: aiming for speed, frequent iteration, and continuous improvement to deliver higher quality results and better user experiences. If DevOps is about streamlining software delivery, MLOps applies that same thinking to the entire ML lifecycle.

The main difference lies in the unique complexities that ML introduces. While DevOps primarily focuses on the code and infrastructure for software applications, MLOps has to deal with additional layers like data and models. For instance, ML development is often highly experimental. Data scientists might test numerous models before finding the one that performs best, a process that needs careful tracking and management. Additionally, MLOps addresses specific ML challenges such as model drift (where a model's performance degrades over time as new data comes in), ensuring data quality, and the critical need for continuous monitoring and retraining of models.

This means MLOps involves a broader range of activities and often requires closer collaboration between data scientists, ML engineers, and operations teams. Where DevOps focuses on building, testing, and releasing software, MLOps must also construct, train, and fine-tune models on large datasets with carefully tracked parameters. It’s really a combination of practices from Machine Learning, DevOps, and Data Engineering, all working together to make deploying and maintaining ML models in real-world settings smoother and more dependable. So, while DevOps lays a fantastic foundation, MLOps builds upon it to address the specific needs of getting AI initiatives into production and keeping them there successfully.

A simple framework for MLOps success

Getting started with MLOps can feel like a huge undertaking, but it doesn't have to be. The key is to break it down into manageable pieces. By focusing on a few core principles, you can build a strong foundation that supports your AI projects as they grow in complexity. Think of it as building a house; you wouldn't start putting up walls without a solid blueprint and foundation. This framework provides that blueprint, giving you a clear path to follow. It helps you organize your efforts, ensure you're not missing any critical steps, and create a repeatable process for success. This is exactly what we focus on at Cake—providing a comprehensive solution that handles the complexities of the AI stack so you can focus on building and deploying great models. Adopting a structured approach helps demystify MLOps and turns it into a practical, achievable strategy for any team.

The 4 pillars of MLOps

To build a robust MLOps practice, you can focus your efforts on four key areas. These pillars work together to support the entire machine learning lifecycle, from the first experiment to a model that's been running in production for years. By ensuring you have a solid strategy for each one, you create a system that is transparent, efficient, and dependable. Let's look at what each of these pillars involves and why they are so essential for long-term success.

Tracking

Think of tracking as keeping a detailed lab notebook for every model you build. It’s about meticulously recording everything that goes into your model—the exact version of the dataset you used, the code, and all the specific configurations and parameters. This level of detail is crucial for reproducibility. If a model starts behaving unexpectedly, you can look back at its "recipe" to understand what might have gone wrong. Good experiment tracking also makes it easier for your team to collaborate, share findings, and build upon each other's work without starting from scratch every time.
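Dedicated tools handle this for you, but the core "recipe" record is simple enough to sketch with the standard library. This minimal Python example assumes runs are stored as JSON files in a local directory; the commit SHA and data version strings are placeholders, and the layout is not that of any particular tracking tool.

```python
import json
import tempfile
import time
import uuid
from pathlib import Path


def log_run(params, metrics, data_version, code_version, runs_dir):
    """Write one experiment's full 'recipe' and its results to a JSON file."""
    run = {
        "run_id": uuid.uuid4().hex[:8],
        "timestamp": time.time(),
        "code_version": code_version,  # e.g. a Git commit SHA
        "data_version": data_version,  # e.g. a dataset content hash
        "params": params,
        "metrics": metrics,
    }
    out_dir = Path(runs_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    (out_dir / f"{run['run_id']}.json").write_text(json.dumps(run, indent=2))
    return run


def best_run(runs_dir, metric):
    """Load every logged run and return the one with the highest metric."""
    runs = [json.loads(p.read_text()) for p in Path(runs_dir).glob("*.json")]
    return max(runs, key=lambda r: r["metrics"][metric])


with tempfile.TemporaryDirectory() as runs_dir:
    # Placeholder versions; in practice these come from Git and your data step.
    log_run({"lr": 0.1}, {"auc": 0.80}, "a1b2c3", "3f9e1d0", runs_dir)
    log_run({"lr": 0.01}, {"auc": 0.84}, "a1b2c3", "3f9e1d0", runs_dir)
    print(best_run(runs_dir, "auc")["params"])  # {'lr': 0.01}
```

Because each record pins the code version, data version, and parameters together, anyone can rebuild the winning model from its run file.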

Automation

Automation is what transforms your ML workflow from a series of manual, error-prone tasks into a streamlined and efficient pipeline. Just like in traditional software development, automating the build, testing, and deployment processes is a game-changer. For ML, this means automating model retraining whenever new data becomes available or performance dips. By setting up a CI/CD pipeline for your models, you can release updates faster, reduce the risk of human error, and ensure that your models are always ready for production, allowing your team to focus on innovation instead of repetitive manual work.
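The retraining trigger described above can be expressed as a small policy that a scheduler or CI job evaluates. This Python sketch is illustrative: `RetrainPolicy` and its thresholds are hypothetical, and the inputs (count of new labeled rows, baseline and live metric) are assumed to come from your data pipeline and monitoring.

```python
from dataclasses import dataclass


@dataclass
class RetrainPolicy:
    min_new_rows: int = 10_000     # enough fresh labeled data has accumulated
    max_metric_drop: float = 0.02  # or live performance has slipped this far

    def should_retrain(self, new_rows, baseline_metric, live_metric):
        """Return (decision, reasons) so the pipeline can log why it ran."""
        reasons = []
        if new_rows >= self.min_new_rows:
            reasons.append(f"{new_rows} new labeled rows available")
        if baseline_metric - live_metric > self.max_metric_drop:
            reasons.append(
                f"metric fell from {baseline_metric:.3f} to {live_metric:.3f}")
        return bool(reasons), reasons


policy = RetrainPolicy()
print(policy.should_retrain(new_rows=2_500, baseline_metric=0.91, live_metric=0.86))
```

Recording the reasons alongside the decision makes each automated retraining run auditable later.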

Monitoring

Deploying a model is just the beginning. Once it's live, you need to keep a close watch on its performance to make sure it continues to deliver accurate and valuable predictions. The real world is constantly changing, and this can cause a model's performance to degrade over time—a problem known as model drift. Continuous monitoring involves tracking key metrics, watching for shifts in the input data, and setting up alerts for any unusual behavior. This proactive approach allows you to catch issues early and decide when it's time to retrain or update your model, ensuring it remains effective and trustworthy.

Reliability

At the end of the day, an ML model is only useful if your business can depend on it. Reliability means that your model is not only accurate but also consistently available and performing as expected. This involves building a resilient system that can handle failures gracefully and scale to meet demand. When your predictions are a critical part of a business process or customer-facing application, you can't afford downtime or inconsistent results. Building a reliable system from the ground up fosters trust and ensures that your AI initiatives deliver consistent, long-term value.

Your MLOps toolkit: essential tools and tech

Alright, let's talk about the gear you'll need for your MLOps journey. Think of it like this: if MLOps is the roadmap to successful AI, then your tools and technologies are the vehicle, the fuel, and the navigation system all rolled into one. Without the right toolkit, even the best MLOps strategy can stall. The goal here is to equip your teams with what they need to move models from an idea in a data scientist's notebook to a real, value-generating application, and then keep it running smoothly. This means having systems in place for everything from managing your data and code to deploying your models and making sure they're still performing well weeks, months, or even years down the line.

There are lots of options, and it's easy to get overwhelmed. But don't worry, you don't need every shiny new gadget. Instead, focus on building a solid foundation with tools that support the core MLOps principles: automation, reproducibility, collaboration, and continuous monitoring. This may involve a combination of open-source solutions, commercial platforms, or custom-built tools, depending on your team's needs and expertise. The key is to choose technologies that integrate well and support your entire workflow.

For businesses looking to accelerate their AI initiatives, finding a comprehensive solution that manages the compute infrastructure, open-source elements, and integrations can make a world of difference, allowing your team to focus on building great models rather than wrestling with infrastructure. We're talking about tools that help you keep everything organized, automate repetitive tasks, and ensure everyone is on the same page.

Why you need version control

Imagine trying to bake a complex cake, making little tweaks to the recipe each time, but never writing them down. Chaos, right? That's what developing ML models without version control can feel like. Version control is your detailed recipe book for not just your code, but also your data and your models. It’s absolutely essential for tracking changes and understanding how your model evolves. This means if something goes sideways, you can easily roll back to a previous version. More importantly, it ensures that your experiments are reproducible. Anyone on your team should be able to recreate a specific model version and its results, which is fundamental for debugging, auditing, and building trust in your ML systems. It’s the bedrock of a reliable MLOps practice.

Tools like DVC

While Git is fantastic for managing your code, it wasn’t designed to handle the massive datasets and large model files common in machine learning. This is where tools like DVC (Data Version Control) come into play. DVC works on top of Git, allowing you to version your data and models without clogging up your code repository. It essentially creates small pointer files that you track in Git, while the actual large files are stored in a separate location, like cloud storage. This approach gives you the best of both worlds: Git's versioning for your entire project (code, data, and models included), with the large files kept in storage built to hold them. By using a tool like DVC, you make your experiments truly reproducible. A team member can pull a specific version of your project and get the exact same code, data, and model you used, which is a game-changer for debugging and collaboration.
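To show the pointer-file idea (not DVC's actual file format, cache layout, or commands), here is a toy content-addressed store in Python. `add_to_store` copies a data file into a store keyed by its hash and returns the small pointer you would commit to Git; `checkout` uses a pointer to restore that exact version. The file names and contents are hypothetical, and the whole file is read into memory for hashing, which a real tool would avoid for large files.

```python
import hashlib
import json
import shutil
import tempfile
from pathlib import Path


def _blob_path(store_dir, digest):
    # Split the hash into a short subdirectory plus the rest of the name.
    return Path(store_dir) / digest[:2] / digest[2:]


def add_to_store(data_file, store_dir):
    """Copy a file into the store and return a small pointer record."""
    data_file = Path(data_file)
    digest = hashlib.sha256(data_file.read_bytes()).hexdigest()
    blob = _blob_path(store_dir, digest)
    blob.parent.mkdir(parents=True, exist_ok=True)
    shutil.copyfile(data_file, blob)
    return {"path": data_file.name, "sha256": digest,
            "size": data_file.stat().st_size}


def checkout(pointer, store_dir, target_dir):
    """Restore the exact file version that a pointer refers to."""
    dest = Path(target_dir) / pointer["path"]
    shutil.copyfile(_blob_path(store_dir, pointer["sha256"]), dest)
    return dest


with tempfile.TemporaryDirectory() as tmp:
    tmp = Path(tmp)
    data = tmp / "train.csv"
    data.write_text("id,label\n1,0\n2,1\n")
    pointer = add_to_store(data, tmp / "store")
    print(json.dumps(pointer))          # this small record is what goes in Git
    data.write_text("id,label\n1,1\n")  # the working copy changes later...
    restored = checkout(pointer, tmp / "store", tmp)
    print(restored.read_text() == "id,label\n1,0\n2,1\n")  # True
```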

Building CI/CD pipelines for machine learning

CI/CD for ML automates the entire pipeline, from the moment you commit new code or data, through testing, validation, and all the way to deploying your model into production. This automation isn't just about speed; it's about reliability and consistency. By automating these steps, you reduce the chance of human error and ensure that your models are continuously integrated and delivered in a dependable way. This means faster updates, quicker responses to changing data, and a much smoother path from development to real-world impact for your AI projects.

Tools like Jenkins and GitLab CI

When it comes to actually building these automated pipelines, you’ll often run into powerful, open-source tools like Jenkins and GitLab CI. Think of them as the conductors of your MLOps orchestra. They are the engines that automatically trigger a sequence of actions—like running tests on your code, validating your data, and pushing your model into production—whenever a developer commits a change. This automation is what brings the principles of CI/CD to life, ensuring that every step is repeatable, consistent, and less prone to human error. While these tools are foundational, configuring them to handle the specific needs of ML can be complex, which is why many teams turn to comprehensive platforms that streamline these integrations from the start.

How to monitor your models effectively

Once your model is out in the wild, the work isn't over—far from it! Models can degrade over time, a phenomenon often referred to as "model drift." This happens because the real-world data your model sees can change, making its past learnings less relevant. That's where monitoring comes in. It's a critical part of MLOps, involving tracking your model's performance in real-time, looking for signs of drift, and understanding its accuracy. Good monitoring tools will alert you when performance dips, so you can investigate, retrain, or replace the model as needed. This continuous oversight ensures your models remain accurate, fair, and effective, delivering ongoing value instead of becoming stale and unreliable.

Techniques for detecting model drift

Model drift is one of the biggest reasons a perfectly good model can start to fail in production. It happens when the real-world data your model is seeing starts to look different from the data it was trained on. To catch this, you need to monitor for two main types of drift. The first is data drift, where the statistical properties of your input data change—think of a shift in customer demographics or purchasing habits. The second is concept drift, which is more subtle; this is when the relationship between your input data and the outcome you're predicting changes. For example, what once predicted customer churn might no longer be relevant. Detecting these shifts involves using statistical tests to compare data distributions over time and, most importantly, keeping a close eye on your model's core performance metrics like accuracy and precision. A sudden dip is your clearest signal that something is amiss.
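One common statistic for data drift is the Population Stability Index (PSI), which compares how a feature's values are distributed across bins in a reference sample (such as training data) versus recent production data. The standard-library sketch below is one way to compute it; the bin count, the small epsilon that avoids log(0), and the often-quoted rule of thumb (below 0.1 means little shift, above 0.25 a significant one) are conventions, not fixed standards. A two-sample Kolmogorov-Smirnov test, for example `scipy.stats.ks_2samp`, is a frequent alternative. PSI only looks at inputs, so it detects data drift; concept drift shows up in performance metrics once labels arrive.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index of one numeric feature, with equal-width
    bins defined on the reference sample's range."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp so out-of-range production values land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    ref, cur = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical feature samples: training data vs. a shifted production window.
training = [i / 100 for i in range(1000)]
production = [x + 3 for x in training]
print(f"PSI unchanged: {psi(training, training):.3f}")
print(f"PSI shifted:   {psi(training, production):.3f}")
```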

Input monitoring vs. ground-truth evaluation

When it comes to monitoring, you have two main angles of attack: watching what goes in and checking what comes out. Input monitoring is your early warning system. It involves tracking the data being fed into your model in real-time. Are you suddenly seeing more missing values? Has the average value of a key feature shifted? Catching these changes can alert you to potential issues before they have a major impact on your model's performance. On the other hand, ground-truth evaluation is your ultimate report card. This is where you compare your model's predictions against actual, verified outcomes. While this is the most accurate way to measure performance, getting that "ground truth" can sometimes be delayed. A solid MLOps strategy uses both: proactive input monitoring to catch early signals and definitive ground-truth evaluation to confirm long-term accuracy.
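The two angles can be sketched side by side. In this minimal Python example, `input_report` needs only the incoming batch, while `ground_truth_accuracy` joins predictions to labels that arrive later, matched by a request id. The feature name, baseline mean, thresholds, and ids are hypothetical.

```python
def input_report(batch, feature, baseline_mean, max_missing=0.05,
                 max_relative_shift=0.2):
    """Early-warning checks on inputs alone; no labels required.
    Assumes a non-empty batch and a non-zero baseline_mean."""
    values = [row.get(feature) for row in batch]
    present = [v for v in values if v is not None]
    missing_rate = 1 - len(present) / len(values)
    mean = sum(present) / len(present) if present else float("nan")
    shift = abs(mean - baseline_mean) / abs(baseline_mean)
    # NaN comparisons are False, so an all-missing batch alerts via missing_rate.
    alert = missing_rate > max_missing or shift > max_relative_shift
    return {"missing_rate": missing_rate, "mean": mean, "alert": alert}


def ground_truth_accuracy(predictions, labels):
    """Score only predictions whose true outcome has arrived.
    Returns (accuracy, number_scored); accuracy is None if nothing is labeled."""
    scored = [(pred, labels[rid]) for rid, pred in predictions.items()
              if rid in labels]
    if not scored:
        return None, 0
    correct = sum(pred == truth for pred, truth in scored)
    return correct / len(scored), len(scored)


print(input_report([{"amount": 10}, {"amount": None}, {"amount": 30}],
                   "amount", baseline_mean=20.0))
print(ground_truth_accuracy({"r1": 1, "r2": 0, "r3": 1}, {"r1": 1, "r2": 1}))
```

The first function can run on every batch immediately; the second is rerun as labels trickle in, which is why it also reports how many predictions it could actually score.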

Tools like Prometheus and Grafana

The good news is you don't have to build your monitoring infrastructure from the ground up. There are fantastic open-source tools that have become industry standards. Prometheus is a powerful tool for collecting time-series data, which is perfect for tracking your model's performance metrics over time and setting up alerts when those metrics cross a certain threshold. Then, you have Grafana, which is a brilliant visualization tool. It connects to data sources like Prometheus and turns all that raw data into beautiful, intuitive dashboards. This allows your team to see performance trends at a glance, spot anomalies quickly, and share insights easily. At Cake, we integrate these kinds of essential tools into our platform, simplifying the setup so your team can focus on monitoring insights, not infrastructure management.
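Prometheus collects metrics by periodically scraping an HTTP endpoint (conventionally `/metrics`) that returns plain text in its exposition format. In practice you would use the official `prometheus_client` library, whose `Counter`, `Gauge`, and `start_http_server` handle this for you; the standard-library sketch below just shows what such an endpoint returns. The metric names, label, and values are hypothetical.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def render_metrics(model_version, predictions_total, rolling_accuracy):
    """Render two model metrics in Prometheus' text exposition format."""
    labels = f'model_version="{model_version}"'
    lines = [
        "# HELP model_predictions_total Predictions served by the model.",
        "# TYPE model_predictions_total counter",
        f"model_predictions_total{{{labels}}} {predictions_total}",
        "# HELP model_rolling_accuracy Accuracy on recently labeled predictions.",
        "# TYPE model_rolling_accuracy gauge",
        f"model_rolling_accuracy{{{labels}}} {rolling_accuracy}",
    ]
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Hypothetical fixed values; a real service would read live counters.
        body = render_metrics("v3", 1027, 0.91).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Block forever, answering Prometheus scrapes on the given port."""
    HTTPServer(("", port), MetricsHandler).serve_forever()


print(render_metrics("v3", 1027, 0.91))
```

Once Prometheus is scraping these series, an alerting rule built on an expression such as `model_rolling_accuracy < 0.85` (the threshold is hypothetical) could fire when accuracy dips, and Grafana can chart the same series on a dashboard.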

Tools for better team collaboration

Machine learning is rarely a solo sport. It takes a village—data scientists, ML engineers, software developers, operations folks, and business stakeholders all play a part. MLOps thrives on and in turn fosters strong collaboration between these diverse teams. Effective collaboration means having shared tools, clear communication channels, and streamlined workflows that allow everyone to work together efficiently. When your data scientists can easily hand off models to engineers for deployment, and operations can provide quick feedback on performance, the entire ML lifecycle becomes smoother and faster. Tools that support shared workspaces, experiment tracking, and clear documentation are key to making this teamwork a reality and breaking down silos.

Experiment tracking with MLflow or W&B

Since building ML models is an iterative process, you need a way to keep track of every experiment. This is where experiment tracking tools come in. Think of them as a detailed lab notebook for your data science team. Tools like MLflow and Weights & Biases (W&B) help you log everything from the parameters you used and the code you ran to the resulting performance metrics and model artifacts. This systematic approach is crucial because it makes your work reproducible, allowing anyone on your team to understand and replicate your results. It also makes it much easier to compare different model versions side-by-side, so you can confidently choose the best one to move forward with.

Key technical components for your MLOps stack

Putting together an MLOps stack is a bit like assembling a high-performance engine; you need the right components working together in harmony. You don't need every tool under the sun, but you do need a core set of technologies that can handle the specific demands of the ML lifecycle. This includes everything from managing your data and experiments to deploying and monitoring your models in production. The goal is to create a cohesive, automated system that empowers your team to build and operate ML models efficiently and reliably. Each component plays a distinct role in creating a seamless workflow from development to operations.

Choosing and integrating these tools can be a significant undertaking, especially when you want to maintain flexibility with open-source solutions. This is where a managed platform can be a game-changer. At Cake, we specialize in managing this entire stack—from the underlying compute infrastructure to the open-source platform elements and common integrations. By providing a production-ready solution, we help your team bypass the complexities of infrastructure management and focus directly on building and deploying impactful AI, accelerating your path from concept to production.

The role of a model registry

Once you've trained a model you're happy with, where does it go? A model registry is the answer. It acts as a central repository, or a single source of truth, for all your trained models. Think of it as a version-controlled library specifically for your ML models. A good registry doesn't just store the model file; it also records critical metadata. This includes the model's version number, the source code and parameters used to train it, and details about the training data. This level of organization is vital for governance and reproducibility, ensuring you can track a model's entire lineage and confidently deploy the correct version into production.
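The sketch below shows the core mechanics of a registry under simple assumptions: every registration gets an auto-incremented version plus lineage metadata, and deployment code resolves a named alias such as `production` instead of a hard-coded file path. `ModelRegistry` is a hypothetical in-memory class (real registries, such as MLflow's, persist this information in a database), and the URIs, commit hashes, and dataset IDs are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    artifact_uri: str    # where the serialized model file lives
    git_commit: str      # source code that produced it
    params: dict         # hyperparameters used for training
    training_data: str   # identifier of the dataset snapshot used for training


class ModelRegistry:
    """Hypothetical in-memory registry: a single source of truth for model versions."""

    def __init__(self):
        self._versions = {}  # name -> list of ModelVersion, oldest first
        self._aliases = {}   # (name, alias) -> version number

    def register(self, name, artifact_uri, git_commit, params, training_data) -> ModelVersion:
        versions = self._versions.setdefault(name, [])
        mv = ModelVersion(name, len(versions) + 1, artifact_uri, git_commit,
                          dict(params), training_data)
        versions.append(mv)
        return mv

    def get(self, name: str, version: int) -> ModelVersion:
        versions = self._versions.get(name, [])
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name!r} has no version {version}")
        return versions[version - 1]

    def set_alias(self, name: str, alias: str, version: int) -> None:
        self.get(name, version)  # fail fast if the version does not exist
        self._aliases[(name, alias)] = version

    def resolve(self, name: str, alias: str) -> ModelVersion:
        """Deployment asks for e.g. 'production' rather than a hard-coded file path."""
        return self.get(name, self._aliases[(name, alias)])


# Usage (URIs, commits, and dataset IDs are placeholders).
registry = ModelRegistry()
registry.register("churn", "s3://models/churn/1", "a1b2c3d", {"lr": 0.1}, "customers@2024-01")
v2 = registry.register("churn", "s3://models/churn/2", "e4f5a6b", {"lr": 0.01}, "customers@2024-02")
registry.set_alias("churn", "production", v2.version)

prod = registry.resolve("churn", "production")
print(prod.version, prod.git_commit)  # 2 e4f5a6b
```

Because the alias is just a pointer, rolling back means repointing `production` at an earlier version; nothing has to be retrained or rebuilt.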

Why you need a feature store

In machine learning, a "feature" is a specific piece of data used for a prediction, like a customer's purchase history or the average time they spend on your site. Often, different teams end up creating the same features over and over again, which is inefficient and can lead to inconsistencies. A feature store solves this problem by acting as a central, shared library for features. It allows data scientists to define, store, and share curated features across the organization. This not only saves time but also ensures that features are calculated consistently, whether they're being used for training a new model or making real-time predictions in a live application.
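The key property of a feature store is that each feature is defined once and then reused by both the offline (training) path and the online (serving) path. Here is a minimal in-memory sketch of that idea. `FeatureStore` and its methods are hypothetical and are not the API of Feast or any other product, and the customer record is made up.

```python
from statistics import mean
from typing import Callable, Dict, List

FeatureFn = Callable[[dict], float]  # computes one feature from an entity's raw data


class FeatureStore:
    """Hypothetical in-memory feature store (not the Feast API)."""

    def __init__(self):
        self._definitions: Dict[str, FeatureFn] = {}
        self._online: Dict[str, Dict[str, float]] = {}  # entity_id -> latest values

    def register(self, name: str, fn: FeatureFn) -> None:
        if name in self._definitions:
            raise ValueError(f"feature {name!r} already exists; reuse it instead")
        self._definitions[name] = fn

    def training_rows(self, raw_records: Dict[str, dict], names: List[str]) -> Dict[str, Dict[str, float]]:
        """Offline path: compute features for many entities to build a training set."""
        return {
            entity_id: {n: self._definitions[n](raw) for n in names}
            for entity_id, raw in raw_records.items()
        }

    def materialize(self, raw_records: Dict[str, dict], names: List[str]) -> None:
        """Precompute the same features and load them into the online store."""
        for entity_id, row in self.training_rows(raw_records, names).items():
            self._online.setdefault(entity_id, {}).update(row)

    def online_features(self, entity_id: str, names: List[str]) -> Dict[str, float]:
        """Online path: low-latency lookup of the values the model saw in training."""
        row = self._online[entity_id]
        return {n: row[n] for n in names}


store = FeatureStore()
store.register("purchase_count", lambda raw: float(len(raw["purchases"])))
store.register("avg_session_minutes",
               lambda raw: mean(raw["sessions"]) if raw["sessions"] else 0.0)

raw = {"cust_42": {"purchases": [19.99, 5.00, 42.50], "sessions": [3.0, 7.0, 5.0]}}
features = ["purchase_count", "avg_session_minutes"]
train_set = store.training_rows(raw, features)
store.materialize(raw, features)
print(train_set["cust_42"])  # {'purchase_count': 3.0, 'avg_session_minutes': 5.0}
```

Because training and serving both call the same registered function, the two paths cannot quietly compute a feature in different ways.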

Actionable strategies for implementing MLOps

Alright, so you're on board with MLOps and understand its core components. That's a fantastic start! But knowing what MLOps is and actually doing it effectively are two different things. The real magic happens when you translate theory into concrete action. This is where smart strategies come into play, helping you put MLOps to work to accelerate your AI initiatives and achieve tangible results.

Think of these strategies as your MLOps playbook—a set of guiding principles to make your journey smoother and your outcomes more impactful. It’s not just about adopting new tools; it’s about fostering a new mindset and operational rhythm within your teams. At Cake, we specialize in helping businesses like yours manage the entire AI stack, and we see firsthand how a strategic approach to MLOps can transform AI projects from experimental concepts into production-ready solutions that drive real business value. These aren't just abstract ideas; they are practical steps you can begin to implement today. By focusing on collaboration, standardization, automation, reproducibility, and continuous improvement, you're building a robust foundation for AI success. This means less time wrestling with infrastructure and more time innovating.

Let's explore how you can put MLOps into action with strategies designed to make your AI efforts more efficient, reliable, and scalable. This is about making MLOps work for you, streamlining everything from data handling to model deployment and beyond, ensuring your AI investments deliver.

Start with a cross-functional team

One of the first things you'll want to focus on is bringing the right people together. Machine learning projects are complex, touching everything from data sourcing to software deployment. That's why successful MLOps relies heavily on cross-functional teams where data scientists, software engineers, and IT operations folks work hand-in-hand. When these groups collaborate closely, sharing their unique expertise, you break down silos and speed up the entire model lifecycle. Encourage open communication, shared goals, and a collective ownership of the ML models. This teamwork is fundamental to building, training, and fine-tuning models efficiently, ensuring everyone is aligned and contributing their best.

Key roles on an MLOps team

Building a successful MLOps practice isn't about finding one unicorn employee who can do it all. It’s about assembling a team with a diverse set of skills that work in harmony. Think of it like a symphony orchestra; you need different instruments and musicians, each playing their part, to create a beautiful piece of music. Similarly, in MLOps, you need specialists who can handle everything from the raw data to the production infrastructure. Each role is distinct but deeply interconnected, and their collaboration is what turns a promising model into a reliable, value-driving business asset. Let's meet the key players on a typical MLOps team.

MLOps engineer

The MLOps engineer is the essential connector in your AI initiative, acting as the bridge between the world of data science and the realities of IT operations. They take the brilliant models developed by data scientists and figure out how to deploy, manage, and scale them effectively in a live production environment. Their main job is to ensure that a model doesn't just work on a laptop but can be seamlessly integrated into your existing infrastructure. They are the ones who build the automated pipelines and systems that make the entire MLOps lifecycle possible, ensuring your AI is robust, scalable, and reliable.

Data engineer

If your ML model is a high-performance car, the data engineer is the one who builds the pristine, smooth racetrack it runs on. They are responsible for the entire data architecture, focusing on creating efficient and dependable data pipelines. This involves everything from collecting and storing data to cleaning and processing it so it's ready for model training. A data engineer ensures that data scientists have constant access to high-quality, reliable data, which is the absolute bedrock of any successful ML project. Without their work, your models would be running on a bumpy, unreliable dirt road.

Data scientist

Data scientists are the creative heart of the team, responsible for the actual development and training of the machine learning models. They are the experts who dive deep into the data, uncover hidden patterns, and design the complex algorithms that solve specific business problems. Their work is highly experimental, involving testing different approaches to find the most accurate and effective solution. In an MLOps framework, they don't work in a vacuum; they collaborate closely with MLOps engineers to ensure the models they build are not just clever but also practical and ready for the rigors of a production environment.

DevOps engineer

Your DevOps engineer brings a wealth of knowledge from the world of software development and applies it to the unique challenges of machine learning. They are the masters of automation and infrastructure management. Their crucial role involves setting up the CI/CD pipelines that automate the testing and deployment of models, ensuring that new versions can be released quickly and reliably. By applying core DevOps principles to the ML workflow, they help eliminate manual processes, reduce errors, and create a stable, scalable environment where your models can thrive.

Essential MLOps skills

To make MLOps work, your team needs a powerful blend of technical skills that spans several disciplines. This isn't about one person knowing everything, but about the team collectively covering all the bases. Key programming languages like Python and SQL are fundamental for data manipulation and model building. Familiarity with popular machine learning frameworks such as TensorFlow and PyTorch is essential for the data scientists. On the operations side, deep knowledge of DevOps tools like Docker for containerization, Kubernetes for orchestration, and robust CI/CD pipeline tools is non-negotiable. It’s this combination of data, software, and operations expertise that truly powers an effective MLOps engine.

Business stakeholders who benefit from MLOps

The impact of a strong MLOps practice extends far beyond the technical teams. When models are deployed efficiently and perform reliably, everyone wins. IT leaders see a more stable and predictable infrastructure. Data science leaders can demonstrate a clearer return on investment from their team's work. Risk and compliance teams gain confidence from the increased transparency and governance over models in production. And most importantly, company executives can trust that their AI initiatives are not just expensive science projects but are actually delivering tangible, measurable business value. MLOps ensures that your models are accurate, valuable, and aligned with your strategic goals.

Standardize your data and workflows

Consistency is your best friend in MLOps. Think about standardizing your data formats and your development processes. When everyone is on the same page with how data is handled and how models are built, it makes life easier for your engineers during both development and deployment. Adopting standardized MLOps practices means borrowing the best from traditional software development and applying it to ML. This could involve creating templates for data preprocessing, defining clear steps for model validation, or establishing consistent coding standards. It’s all about creating a predictable and understandable workflow that everyone can follow, which ultimately leads to more reliable and maintainable models.

Automate everything you can

If you want to scale your AI efforts and improve efficiency, automation is key. Implementing MLOps means you can automate many stages of the ML lifecycle, especially the deployment of your models. Imagine your models moving from training to production with minimal manual intervention—that’s the power of automated workflows! This not only speeds things up but also reduces the chance of human error and frees up your team to focus on more strategic tasks instead of repetitive manual work. Start by identifying bottlenecks in your current process and look for opportunities to automate tasks like testing, validation, and deployment pipelines for smoother operations.
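One of the first steps worth automating is the go/no-go decision before deployment. The following sketch shows a hypothetical quality gate that a CI/CD job could run after training: it blocks promotion if the candidate misses an accuracy floor, exceeds a latency budget, or fails to keep pace with the model currently in production. The metric names and thresholds are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class GateResult:
    promote: bool
    reasons: List[str] = field(default_factory=list)


def promotion_gate(candidate: dict, production: Optional[dict],
                   min_accuracy: float = 0.80, max_p95_latency_ms: float = 100.0,
                   min_improvement: float = 0.0) -> GateResult:
    """Decide, without a human in the loop, whether a newly trained model may ship.

    All thresholds are illustrative defaults, not recommendations.
    """
    reasons = []
    if candidate["accuracy"] < min_accuracy:
        reasons.append(f"accuracy {candidate['accuracy']:.3f} is below {min_accuracy}")
    if candidate["p95_latency_ms"] > max_p95_latency_ms:
        reasons.append(f"p95 latency {candidate['p95_latency_ms']} ms exceeds {max_p95_latency_ms} ms")
    if production is not None:
        delta = candidate["accuracy"] - production["accuracy"]
        if delta < min_improvement:
            reasons.append(f"change vs. production ({delta:+.3f}) is below required {min_improvement}")
    return GateResult(promote=not reasons, reasons=reasons)


def ci_exit_code(result: GateResult) -> int:
    """A non-zero exit code fails the CI job and therefore the deployment stage."""
    return 0 if result.promote else 1


ok = promotion_gate({"accuracy": 0.86, "p95_latency_ms": 45.0}, {"accuracy": 0.84})
slow = promotion_gate({"accuracy": 0.90, "p95_latency_ms": 250.0}, {"accuracy": 0.84})
print(ok.promote, slow.promote)  # True False
print(slow.reasons)
```

In a pipeline, the job would exit with `ci_exit_code(result)`, so a blocked model stops the deployment automatically instead of waiting for someone to notice.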

Focus on model reproducibility

Imagine trying to fix a problem with a model, or even just understand why it made a certain prediction, if you can't recreate how it was built. That's why making your models reproducible is so important. This means meticulously tracking everything: the code, the data versions, the hyperparameters, and the environment used to train your model. Good MLOps practices capture this information automatically at training time, so it never depends on someone's memory or scattered notes. When you can reliably reproduce a model and its results, you build trust, simplify debugging, and make it much easier to iterate and improve over time. Think of it as keeping a detailed recipe for every model you create.
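A minimal way to keep that recipe is to fingerprint each training run: hash the exact training data, and record the hyperparameters, the code version, and the runtime environment alongside it. The sketch below assumes the data fits in memory as bytes and that a git commit hash is available. `training_fingerprint`, `fingerprint_id`, and `train` are hypothetical helpers, and `train` only stands in for real training to show why fixing the random seed matters.

```python
import hashlib
import json
import platform
import random
import sys


def training_fingerprint(data: bytes, params: dict, code_version: str) -> dict:
    """Everything needed to rebuild a model: exact data, params, code, and environment."""
    return {
        "data_sha256": hashlib.sha256(data).hexdigest(),
        "params": params,
        "code_version": code_version,        # e.g. a git commit hash
        "python": sys.version.split()[0],
        "platform": platform.platform(),
    }


def fingerprint_id(fp: dict) -> str:
    """Stable short ID: identical inputs always produce the same ID."""
    canonical = json.dumps(fp, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def train(params: dict) -> list:
    """Stand-in for real training: a seeded RNG makes the 'weights' repeatable."""
    rng = random.Random(params["seed"])
    return [rng.random() for _ in range(3)]


data = b"customer_id,churned\n1,0\n2,1\n"   # placeholder dataset
params = {"learning_rate": 0.01, "seed": 42}
fp = training_fingerprint(data, params, code_version="a1b2c3d")  # placeholder commit

print(fingerprint_id(fp))                 # store this ID with the model in the registry
print(train(params) == train(params))     # True: same seed, same result
```

Deep learning frameworks keep their own random state, so in practice you would also seed them (for example with `torch.manual_seed` in PyTorch).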

Document everything for transparency and governance

Think of documentation as the story of your model's life. It’s not just about commenting your code; it’s about creating a clear, accessible record of everything that goes into your model—from the data you used and why you chose it, to the experiments you ran and the decisions you made along the way. This detailed record is vital for transparency and AI governance. When you can easily explain how a model works and trace its history, you build trust with stakeholders and make audits much less painful. It also makes collaboration smoother, as new team members can get up to speed quickly. Keeping a thorough log is a non-negotiable part of a mature MLOps practice, ensuring your AI systems are not only effective but also accountable.
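Part of that record can be kept in a structured, machine-readable form, often called a model card, and stored next to the model in version control or the registry. The fields below are an illustrative assumption rather than a standard schema, and the example values are invented.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Structured documentation that travels with a model version (illustrative fields)."""
    model_name: str
    version: int
    intended_use: str
    training_data: str            # which dataset, and which snapshot of it
    data_rationale: str           # why this data was chosen
    evaluation: dict              # headline metrics from validation
    known_limitations: list = field(default_factory=list)
    decision_log: list = field(default_factory=list)

    def log_decision(self, decision: str, why: str) -> None:
        """Append a decision and its reasoning so auditors can trace the model's history."""
        self.decision_log.append({"decision": decision, "why": why})

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


card = ModelCard(
    model_name="churn",
    version=2,
    intended_use="Rank existing customers by churn risk for retention outreach.",
    training_data="customers table, snapshot 2024-02 (placeholder)",
    data_rationale="Most recent full quarter of labeled churn outcomes.",
    evaluation={"val_accuracy": 0.86},   # placeholder value
    known_limitations=["Not validated for customers with under 30 days of history."],
)
card.log_decision("Dropped the zip_code feature", "Acted as a proxy for protected attributes.")
print(card.to_json())
```

Keeping the card as data rather than free text means it can be versioned with the model and checked automatically, for example by refusing to register a model whose card has an empty `intended_use`.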

Create a plan for continuous monitoring

Launching a model into production isn't the finish line; it's just the beginning of its active life. The world changes, data drifts, and model performance can degrade over time. That's why continuous monitoring is a non-negotiable part of MLOps. Your teams need to keep a close eye on both the input data and the model's predictions to spot any issues early. This diligent monitoring helps you decide when a model needs to be retrained with fresh data, updated with new logic, or even rolled back to a previous version if something goes wrong. Setting up alerts for performance drops or data anomalies will keep your AI systems robust and reliable.
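As one concrete example of monitoring input data, the sketch below computes the Population Stability Index (PSI), a common drift score that compares how a feature's values are distributed in production against the training data. It bins values using quantiles of the training sample and raises an alert above a threshold; 0.25 is a widely used rule of thumb, not a universal constant. The function names are hypothetical, the data is synthetic, and dedicated tools such as Evidently or NannyML provide production-grade drift detection.

```python
import logging
import math
from bisect import bisect_right
from typing import List


def quantile_edges(reference: List[float], n_bins: int = 10) -> List[float]:
    """Interior bin edges taken from quantiles of the training (reference) data."""
    ordered = sorted(reference)
    return [ordered[len(ordered) * i // n_bins] for i in range(1, n_bins)]


def bin_shares(values: List[float], edges: List[float], eps: float = 1e-6) -> List[float]:
    """Share of values per bin; eps keeps empty bins from producing log(0)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(reference: List[float], current: List[float], n_bins: int = 10) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    edges = quantile_edges(reference, n_bins)
    expected = bin_shares(reference, edges)
    actual = bin_shares(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_alert(reference: List[float], current: List[float], threshold: float = 0.25) -> bool:
    """Log a warning and return True when the feature has drifted past the threshold."""
    score = psi(reference, current)
    if score > threshold:
        logging.warning("Feature drift detected: PSI=%.3f exceeds %.2f", score, threshold)
        return True
    return False


training_ages = [float(a) for a in range(18, 78)] * 10   # synthetic training sample
production_ages = [a + 15 for a in training_ages]         # simulated shift toward older users
print(drift_alert(training_ages, training_ages))    # False
print(drift_alert(training_ages, production_ages))  # True
```

The same check can run on a schedule for every input feature and for the model's output scores, with the alert feeding whatever paging or retraining workflow your team already uses.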

What are the benefits of adopting MLOps?

You might be wondering whether MLOps is just another tech trend or something that can genuinely make a difference for your AI initiatives. Let me tell you, it’s absolutely the latter. Adopting MLOps isn't about adding more complexity; it's about bringing clarity, efficiency, and reliability to your entire ML lifecycle. Think of it as the set of best practices that helps your team move from exciting AI concepts to real-world impact, smoothly and sustainably.

When you embrace MLOps, you're essentially building a strong foundation for your AI projects. This means your data scientists can focus on what they do best—building innovative models—while your operations team can confidently deploy and manage them. It’s about creating a system where everyone knows their role, processes are streamlined, and the path from an idea to a production-ready model is clear and efficient. The benefits ripple out, touching everything from how quickly you can innovate to how much trust you can place in your AI-driven insights. It’s a strategic move that pays off by making your AI efforts more robust, scalable, and ultimately, more successful.

Improve efficiency and productivity

One of the most immediate benefits you'll notice with MLOps is a significant improvement in efficiency. It’s all about streamlining your ML operations so your team can focus on high-value tasks instead of getting bogged down in repetitive manual work. Implementing an MLOps framework allows you to automate many of the steps involved in model development, testing, and deployment. This means less time spent on the mundane and more time for innovation and refinement.

By following best practices and leveraging MLOps, companies can enhance how their teams operate. Imagine your data scientists being able to iterate on models faster because the deployment pipeline is automated, or your operations team having clear, standardized procedures for monitoring model performance. This isn't just about saving time; it's about creating a more agile and responsive AI development process.

Achieve higher quality, more reliable models

When it comes to ML, the quality and reliability of your models are paramount. MLOps plays a crucial role here by introducing rigor and consistency throughout the model lifecycle. It helps unify all the tasks involved in an ML project, from data preparation and model training to validation and deployment. This unified approach ensures that every model you put into production has gone through a standardized, quality-assured process.

The result? You get models that are not only performant but also dependable. MLOps facilitates the continuous delivery of high-quality models by incorporating practices like automated testing, version control for data and models, and ongoing performance monitoring. This means you can catch issues early, understand how your models behave over time, and retrain or update them proactively. This systematic approach builds trust in your AI systems.

Reduce your time to market

In today's environment, speed matters. The ability to quickly develop and deploy AI solutions can give you a significant competitive edge, and MLOps is a key enabler for this. By streamlining workflows and automating key stages of the ML lifecycle, MLOps dramatically reduces the time it takes to get a model from an idea to production. This means you can respond more rapidly to market changes, customer needs, and new opportunities.

Implementing MLOps allows companies to accelerate their AI projects and see a quicker return on their ML investments. Think about the time saved when deployment processes are automated, or when model retraining can be triggered and executed without extensive manual intervention. This acceleration isn't just about speed for speed's sake; it's about efficiently translating your AI efforts into tangible business value, allowing you to innovate faster.

Better collaboration and governance

AI projects are rarely a solo endeavor; they require close collaboration between data scientists, ML engineers, software developers, and operations teams. MLOps provides a common framework and set of practices that significantly enhance this teamwork. It establishes clear processes for development, testing, and deployment, ensuring everyone is on the same page and working towards shared goals. This shared understanding helps to break down silos and foster a more cohesive and productive environment.

Moreover, MLOps brings better oversight to your ML initiatives. With robust version control, comprehensive monitoring, and clear documentation, you gain greater visibility into your models and their performance. This improved governance makes it easier to track changes, reproduce results, and ensure compliance. Together, closer collaboration and stronger governance lead to higher-quality models.

Common MLOps challenges and how to solve them

Adopting MLOps can truly transform your AI initiatives, but let's be real, it's not always a straightforward path. Like any powerful strategy, it brings a unique set of challenges. The great news is that with a smart approach, these hurdles are definitely beatable. So, let's explore some common obstacles and, more importantly, how you can tackle them to keep your AI projects moving smoothly.

Solving data quality and management issues

Think of data as the fuel for your ML models. If that fuel isn't clean, your engine won't perform its best. A major hurdle in MLOps is maintaining data quality; inconsistencies and errors can easily sneak in, significantly affecting how well your models work and how much you can trust them. Data inconsistencies are a very common issue that can derail even the most promising AI projects.

To get ahead of this, set up strict data validation checks right from the start. You'll want to continuously monitor both your data pipelines and what your models are predicting. This way, your MLOps team can quickly spot any strange patterns, figure out when a model might need a refresh due to changes in data, or even decide if it’s best to roll back to an earlier version. Being proactive about your data is fundamental for reliable AI.
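Validation checks can start very simply: declare what each column should look like and reject batches that break the rules before they reach training or inference. The sketch below is a hypothetical, dependency-free version; dedicated data validation libraries offer far richer checks, and the schema and rows here are invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ColumnRule:
    dtype: type
    nullable: bool = False
    min_value: Optional[float] = None
    max_value: Optional[float] = None


def validate_batch(rows: List[dict], schema: Dict[str, ColumnRule]) -> List[str]:
    """Return human-readable problems; an empty list means the batch passed."""
    errors = []
    for i, row in enumerate(rows):
        missing = schema.keys() - row.keys()
        if missing:
            errors.append(f"row {i}: missing columns {sorted(missing)}")
        for col, rule in schema.items():
            if col not in row:
                continue  # already reported as missing
            value = row[col]
            if value is None:
                if not rule.nullable:
                    errors.append(f"row {i}: {col} is null")
                continue
            if not isinstance(value, rule.dtype):
                errors.append(f"row {i}: {col} expected {rule.dtype.__name__}, "
                              f"got {type(value).__name__}")
                continue
            if rule.min_value is not None and value < rule.min_value:
                errors.append(f"row {i}: {col}={value} below {rule.min_value}")
            if rule.max_value is not None and value > rule.max_value:
                errors.append(f"row {i}: {col}={value} above {rule.max_value}")
    return errors


schema = {
    "customer_id": ColumnRule(str),
    "age": ColumnRule(int, min_value=18, max_value=120),
    "monthly_spend": ColumnRule(float, nullable=True, min_value=0.0),
}
batch = [
    {"customer_id": "c1", "age": 34, "monthly_spend": 52.5},
    {"customer_id": "c2", "age": 230, "monthly_spend": None},
    {"customer_id": "c3", "monthly_spend": -4.0},
]
for problem in validate_batch(batch, schema):
    print(problem)
```

In a pipeline, a non-empty result would stop the run and alert the team rather than silently training on bad data.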

The challenge of model versioning and reproducibility

Imagine trying to bake your favorite cake weeks later without remembering the exact ingredient amounts or oven settings. That’s the kind of tricky spot you're in without solid model versioning and reproducibility. As your models get updated and your datasets change, you absolutely need a clear, organized record of each version, the specific data it was trained on, and the code that brought it to life.

MLOps practices such as experiment tracking, data and model versioning, and automated deployment bring that consistency. Every experiment gets logged, and every model you send out can be traced back to the code and data that produced it and reproduced. This isn't just about being tidy; it's crucial for fixing issues, passing audits, and making sure your models perform reliably over time.

How to scale your machine learning infrastructure

A model that seems like a genius on your development machine might hit a wall when it faces the sheer volume and speed of real-world data and user requests. Making sure your ML models can be deployed and run effectively as demand grows is a common MLOps challenge. This calls for a sturdy infrastructure that can handle more data, frequent model updates, and changing patterns without breaking a sweat.

The answer is to design your MLOps practices with scalability in mind from day one. This means picking the right tools and platforms that can expand with your needs. For instance, solutions like those from Cake manage the entire AI stack, which can greatly simplify the process of scaling your infrastructure. Automating your deployment processes to manage increased loads and constantly watching performance to find and fix any slowdowns are also key.
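To see what automated scaling actually computes, consider Kubernetes' Horizontal Pod Autoscaler (HPA), which sets `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The plain-Python sketch below reimplements that rule for a model-serving deployment, with a tolerance band of 0.1 (HPA's default) so small fluctuations don't cause constant rescaling, plus illustrative min/max bounds. It is a teaching aid, not HPA's actual code; in practice you configure the autoscaler rather than write this yourself.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """HPA-style scaling rule for a model-serving deployment.

    current_metric and target_metric are per-replica averages of the same signal,
    for example requests per second handled by each model server.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: don't flap up and down
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# 4 replicas, each targeted at 100 req/s (illustrative numbers)
print(desired_replicas(4, 150, 100))   # 6  (load spike: scale out)
print(desired_replicas(4, 30, 100))    # 2  (quiet period: scale in)
print(desired_replicas(4, 105, 100))   # 4  (within tolerance: no change)
print(desired_replicas(4, 1000, 100))  # 20 (capped at max_replicas)
```

The upper bound matters as much as the formula: it keeps a traffic spike or a runaway metric from scaling your inference cost without limit.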

Addressing the MLOps skills gap on your team

Putting MLOps into action successfully calls for a special mix of skills. You need team members who really get data science, understand AI principles, and are comfortable with software engineering practices. Finding people who are experts in all these areas at once can be quite a challenge, leading to a skills gap that many organizations encounter. This need for diverse expertise is a well-recognized factor in MLOps adoption.

To tackle this, focus on building teams where data scientists, ML engineers, and IT operations folks can work together seamlessly. It's also a smart move to invest in training and helping your current team members grow their skills. Plus, think about using MLOps platforms and tools that handle some of the more complex bits, letting your team concentrate on creating value instead of getting bogged down in tricky infrastructure details.

Staying on top of compliance and privacy

In the AI world, data is king, but with great data comes significant responsibility. Making sure your MLOps practices line up with all the relevant regulations, industry standards, and privacy rules isn't just a good idea—it's often a must-do. Any slip-ups, like data breaches or not following the rules, can lead to serious fines and really hurt your organization's reputation.

The key here is to integrate data governance and privacy considerations into every single step of your MLOps lifecycle. This means setting up clear rules for how data is handled, putting strong security measures in place, and ensuring your models are transparent and can be audited. Don't treat compliance like an item to check off at the end; build it into the very foundation of your MLOps strategy for secure and trustworthy AI.

Addressing specific security threats

As your AI models become more critical to your business, they also become more attractive targets for some pretty clever attacks. It's not just about protecting your servers anymore; you have to protect the models themselves. A robust MLOps strategy isn't complete without a strong security focus baked in from the very beginning. This means thinking proactively about the unique ways ML systems can be compromised and building defenses against them. Managing the entire AI stack, as we do at Cake, includes implementing security best practices across infrastructure and workflows to help protect your valuable AI assets from these emerging threats. Let's look at a couple of specific security challenges you should be aware of.

Adversarial attacks

Imagine someone slightly altering a photo of a stop sign—so subtly that a human wouldn't notice—but it causes your self-driving car's AI to see a "Speed Limit 80" sign instead. That’s the essence of an adversarial attack. Attackers intentionally manipulate input data to trick your model into making incorrect and potentially harmful predictions. They probe your model to find its weaknesses and then craft these deceptive inputs to exploit them. To defend against this, you need a layered security approach. This includes enforcing secure MLOps practices and, crucially, continuously testing your models with adversarial examples to find and fix vulnerabilities before they can be exploited in the real world.
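To show what testing with adversarial examples involves, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), a classic way to generate them: nudge every input feature by a small amount `eps` in whichever direction increases the model's loss. It uses a two-feature logistic regression so the gradient can be written by hand. The weights and samples are invented, and for a deep network you would get the gradient from your framework's automatic differentiation instead.

```python
import math


def predict_proba(w, b, x):
    """Logistic regression: probability that x belongs to the positive class."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method for logistic regression.

    With binary cross-entropy loss, d(loss)/dx = (p - y) * w, so each feature is
    moved by eps in the direction that increases the loss the most.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


def adversarial_accuracy(w, b, samples, eps, threshold=0.5):
    """Share of correctly classified samples whose prediction survives an FGSM attack."""
    correct = survived = 0
    for x, y in samples:
        if (predict_proba(w, b, x) >= threshold) != bool(y):
            continue  # already misclassified; nothing to attack
        correct += 1
        x_adv = fgsm(w, b, x, y, eps)
        survived += (predict_proba(w, b, x_adv) >= threshold) == bool(y)
    return survived / correct if correct else 0.0


w, b = [2.0, -1.0], 0.0                          # invented model weights
samples = [([0.3, 0.2], 1), ([-0.5, 0.5], 0)]    # invented labeled inputs
for eps in (0.05, 0.2):
    print(eps, adversarial_accuracy(w, b, samples, eps))  # 0.05 1.0, then 0.2 0.5
```

Tracking this score across several `eps` values on every new model version turns adversarial testing into a routine check rather than a one-off audit.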

Reverse engineering

Your trained ML model is a valuable piece of intellectual property. Reverse engineering is when an attacker tries to pick it apart to understand its inner workings: its architecture, its parameters, or even the private data it was trained on. This is a huge risk because if they succeed, they could steal your model, replicate it, or find vulnerabilities to exploit. Some attackers go further and try to plant a backdoor, a hidden trigger (typically introduced by tampering with the training data or the model file) that causes the model to misbehave whenever a specific, secret input appears. To mitigate this, you need to implement strong security measures throughout the model's lifecycle. Think about things like model encryption to protect it at rest and in transit, and strict access controls to ensure only authorized personnel and systems can interact with it.
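Encryption protects a model's confidentiality; a complementary safeguard is an integrity check, so that a tampered or swapped-in artifact (for example, one carrying a backdoor) is never loaded. The sketch below uses Python's standard `hmac` module to tag a model file when it is published and to verify the tag before loading. It assumes the secret key comes from a secrets manager with strict access controls; the key and model bytes shown are placeholders. Verifying before deserializing matters because some formats, such as Python's `pickle`, can execute arbitrary code when loaded.

```python
import hashlib
import hmac


def sign_artifact(model_bytes: bytes, key: bytes) -> str:
    """HMAC-SHA256 tag computed when the model is published to the registry."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def load_verified(model_bytes: bytes, expected_tag: str, key: bytes) -> bytes:
    """Refuse to load an artifact whose bytes no longer match its published tag."""
    actual = sign_artifact(model_bytes, key)
    if not hmac.compare_digest(actual, expected_tag):  # constant-time comparison
        raise ValueError("model artifact failed integrity check; refusing to load")
    return model_bytes  # only now is it safe to hand the bytes to a deserializer


key = b"placeholder-key-from-a-secrets-manager"
model = b"serialized model weights (placeholder)"
tag = sign_artifact(model, key)

load_verified(model, tag, key)  # passes
try:
    load_verified(model + b" tampered", tag, key)
except ValueError as err:
    print(err)  # model artifact failed integrity check; refusing to load
```

Because only holders of the key can produce a valid tag, this also enforces the access-control boundary: an attacker who can write to the model bucket but cannot read the key cannot slip in a replacement.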

The future of MLOps and responsible AI

As we get better at building and deploying ML models with MLOps, it's super important to think about the impact these models have. It's not just about making them work; it's about making them work right, fairly, and responsibly. This means putting ethics at the forefront of our MLOps practices. When we commit to ethical AI, we build systems that are not only powerful but also trustworthy and beneficial for everyone. Looking ahead, the ethical side of MLOps is only going to become more central as we strive to create AI that truly serves society. Let's explore how we can build AI that we can all trust and see what exciting developments are coming our way.

How to address bias in your machine learning models

It's a harsh truth, but our AI models can sometimes reflect the biases present in the data they're trained on. If we're not careful, this can lead to unfair or skewed outcomes for different groups of people. A core part of MLOps ethics is actively working to identify these biases and take steps to lessen their impact. This means setting up ongoing checks to see how your models are performing for various demographics. Think of it as regular health check-ups for your AI, ensuring it’s treating everyone equitably. By continuously monitoring and adjusting, we can build models that are not only smart but also fair, leading to more just outcomes.
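A basic version of those check-ups is to break evaluation metrics down by group. The sketch below reports accuracy and selection rate (the share of positive predictions) for each group, plus the demographic parity difference: the gap between the highest and lowest selection rates. The labels and groups are invented, the 0.2 alert threshold is an arbitrary assumption, and demographic parity is only one of several fairness metrics; which one fits depends on your use case. Libraries such as Fairlearn provide more complete tooling.

```python
from collections import defaultdict


def group_report(y_true, y_pred, groups):
    """Per-group accuracy and selection rate (share of positive predictions)."""
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        s = stats[g]
        s["n"] += 1
        s["correct"] += int(t == p)
        s["positive"] += int(p == 1)
    return {
        g: {"accuracy": s["correct"] / s["n"], "selection_rate": s["positive"] / s["n"]}
        for g, s in stats.items()
    }


def parity_gap(report):
    """Demographic parity difference: highest minus lowest selection rate."""
    rates = [r["selection_rate"] for r in report.values()]
    return max(rates) - min(rates)


y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4
report = group_report(y_true, y_pred, groups)
gap = parity_gap(report)
print(report)  # {'A': {'accuracy': 0.75, 'selection_rate': 0.75}, 'B': {'accuracy': 0.5, 'selection_rate': 0.0}}
if gap > 0.2:  # illustrative threshold
    print(f"Fairness alert: selection-rate gap of {gap:.2f} between groups")
```

Here group B never receives a positive prediction even though half its members are positive, exactly the kind of skew that an overall accuracy of 0.625 would hide.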

The principles of responsible AI

Building AI responsibly goes hand in hand with MLOps. It’s about creating clear rules of the road for how your organization develops and uses AI. This includes being really thoughtful about user privacy (how are you collecting and using data?) and staying up to speed with relevant regulations, as well as with voluntary guidance such as the NIST AI Risk Management Framework. When you establish these ethical guidelines upfront, it helps your team make consistent, responsible choices throughout the model lifecycle. This isn't just about avoiding problems; it's about building trust with your users and showing that you're committed to using AI for good, fostering a positive relationship between technology and society.

What's next for MLOps?

The world of MLOps and AI ethics is always moving forward, and some really positive trends are emerging. We're likely to see AI governance frameworks become even more integrated into the MLOps lifecycle, emphasizing transparency in how models make decisions and who is accountable for their performance. Expect more sophisticated tools that can automatically help detect bias, making it easier to build fairer systems from the ground up. Plus, there's a growing movement towards closer collaboration between the tech folks—data scientists and engineers—and ethicists. This teamwork will be key to ensuring AI develops in a way that truly benefits everyone and aligns with societal values.

AI-driven automation and monitoring

Looking ahead, we're seeing MLOps get even smarter by using AI to manage AI. Today, MLOps already automates the deployment process through CI/CD (Continuous Integration/Continuous Delivery) pipelines built specifically for ML. The next step is to infuse these pipelines with AI that can predict integration issues before they happen, making your deployment process even more resilient. This intelligence is also transforming how we monitor models. Models can degrade over time, a phenomenon often referred to as model drift, and the future is proactive, AI-powered monitoring that can anticipate drift before it hurts accuracy. This shift allows teams to retrain models preemptively, keeping AI systems at peak performance without constant manual oversight.

A greater focus on data quality management

If there's one trend that will define the next phase of MLOps, it's an intense focus on data quality. The old saying "garbage in, garbage out" has never been more true: inconsistencies and errors can easily sneak into your data and significantly affect how well your models work. To counter this, the future of MLOps involves embedding strict data validation checks right from the start and continuously monitoring both your data pipelines and your models' predictions. By creating this constant feedback loop, your team can catch anomalies as they happen, understand when a model needs a refresh, and maintain a high level of trust in your AI systems. This disciplined approach to data quality is a sign of a maturing MLOps practice.

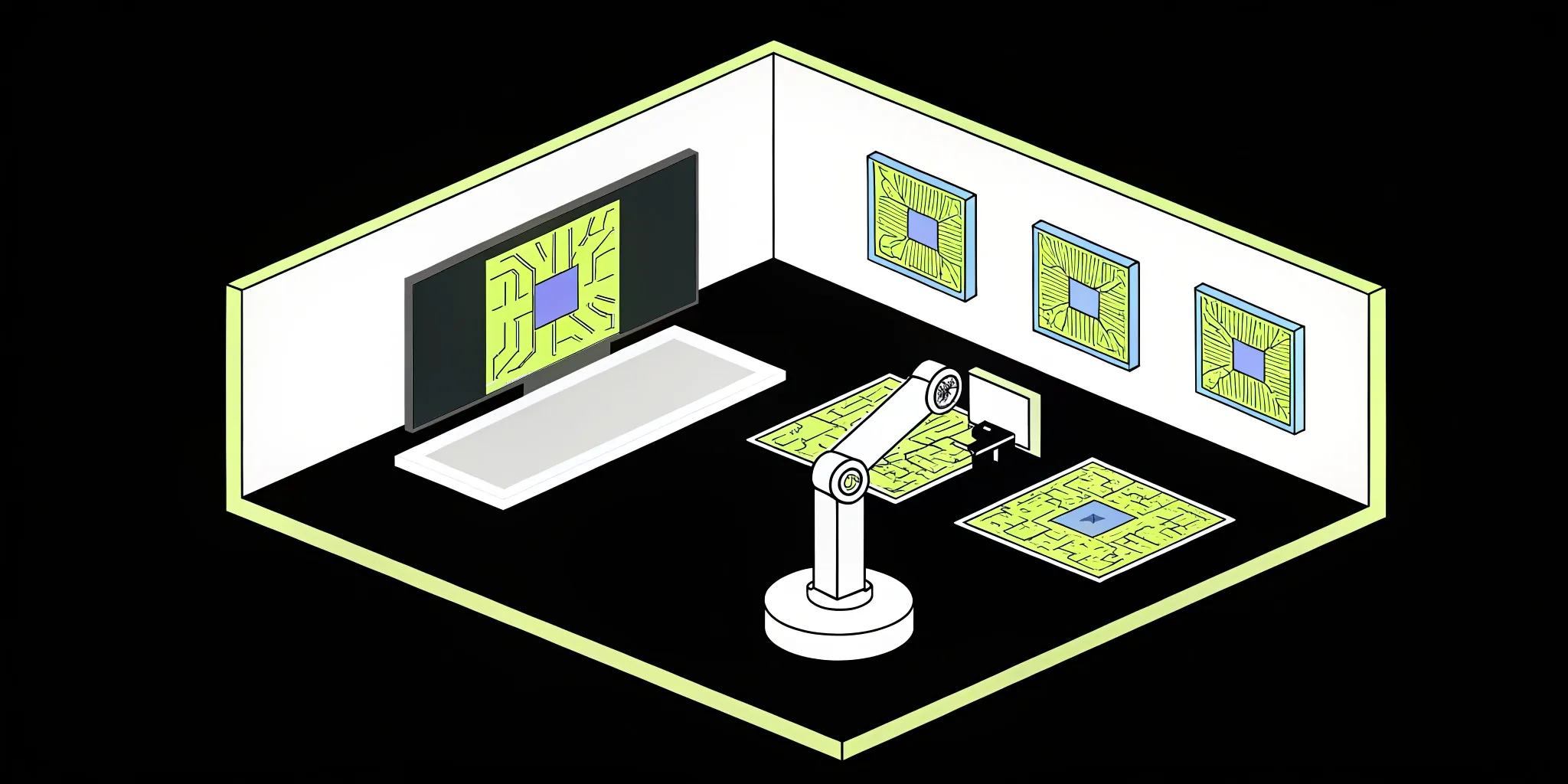

How Cake helps you succeed with MLOps

MLOps isn’t just about training models—it’s about managing the full machine learning lifecycle: from development and experimentation to deployment, monitoring, and iteration. Cake provides a modular, production-ready platform that integrates the entire MLOps stack, helping teams move faster without compromising reliability, governance, or performance.

Whether you're running a small R&D pipeline or scaling to thousands of parallel training jobs, Cake offers a consistent, cloud-neutral environment that supports open-source tools and best practices out of the box.

A look at Cake's MLOps features

- Notebooks: Cake integrates seamlessly with Jupyter and other notebook environments, giving data scientists a familiar interface for exploratory data analysis (EDA) and early-stage model development.

- Ingestion and workflows: Build, manage, and rerun reproducible workflows using tools like Kubeflow Pipelines, directly within Cake’s orchestration layer. Go from ad-hoc experimentation to automated production pipelines.

- Experiment tracking and model registry: Cake ships with integrations for MLflow and similar tools to log experiments, capture metadata, compare runs, and register production-ready models.

- Training frameworks: Bring your own models. Cake supports frameworks like PyTorch, TensorFlow, XGBoost, and more, with hooks for custom training logic and prebuilt templates.

- Parallel compute: Scale training jobs from zero to thousands of cores with Cake’s native support for distributed compute engines like Ray and cluster autoscaling. Optimize for cost-efficiency without sacrificing performance.

- AutoML and hyperparameter tuning: Use tools like Ray Tune to automate hyperparameter search and run large-scale tuning experiments with minimal setup.

- Serving and inference: Deploy models using advanced inference frameworks like KServe with support for A/B testing, canary deployments, autoscaling, and shadow traffic.

- Model server integration: Use high-performance model servers like NVIDIA Triton behind your inference endpoints to maximize throughput and flexibility.

- Monitoring and drift detection: Get built-in observability with integrations for Prometheus, Grafana, and Istio. Monitor latency, throughput, failure rates, and model output. Add drift detection via tools like Evidently or NannyML to keep production models aligned with changing data.

- Feature stores and labeling tools: Integrate with labeling platforms like Label Studio and feature stores like Feast to manage your data pipeline from raw input to engineered feature sets.

- Data sources: Connect directly to data warehouses and object stores—such as Snowflake and S3—for training datasets, feature generation, and inference input.

With Cake you get:

- Cloud-agnostic MLOps: Run on AWS, Azure, GCP, or on-prem with zero vendor lock-in

- Open by default: Built on top of open-source tools with full extensibility

- Compliance-ready: Enterprise-grade security and audit trails built in

- Modular and composable infrastructure: Use the components you need, swap out what you don’t

Cake brings together all the moving parts of machine learning operations into one consistent, scalable platform, so your team can deliver models to production reliably, securely, and fast.


An integrated platform for the entire ML lifecycle

Think about all the stages your model goes through: development, experimentation, deployment, monitoring, and then starting the loop all over again. MLOps is about managing this entire machine learning lifecycle, not just isolated parts of it. Juggling a patchwork of different tools for each step can create friction and slow your team down. This is where an integrated platform becomes so valuable. It brings all these pieces together into a single, cohesive environment, creating a seamless workflow from a data scientist's first experiment to a model running reliably in production. This unified approach helps teams move faster and collaborate more effectively without cutting corners on reliability or governance.

Streamlining infrastructure and open-source tooling

Getting a model from a data scientist's notebook into a real, value-generating application involves a lot of moving parts. Your team needs systems for managing data, versioning code, deploying models, and ensuring they perform well over time. A major benefit of a managed MLOps platform is that it streamlines the underlying infrastructure and integrates the best open-source tools for you. Instead of spending valuable time wrestling with compute resources and configurations, your team gets a production-ready environment right out of the box. At Cake, we manage this entire stack, which allows your team to focus on what they do best: building and improving your models.

Frequently asked questions (FAQs)

We're just starting to explore AI. Is MLOps something we should be thinking about from the get-go?

Absolutely! Even if you're just dipping your toes into AI, incorporating some basic MLOps principles early on can be incredibly helpful. Think of it as building good habits from the start, like how you manage your data or track your experiments. This groundwork will make it much easier to scale your efforts and keep things running smoothly as your AI projects grow more ambitious.

Our software team is pretty good with DevOps. Can't we just use those same practices for our ML projects?

That's a great starting point, as DevOps and MLOps share core ideas like automation and collaboration! However, MLOps adds a few extra layers specifically for ML. You're not just handling code; you're also managing large datasets, the experimental nature of model development, and the need to monitor deployed models for performance degradation as the data they see changes over time. So, while DevOps provides a strong foundation, MLOps tailors those practices for the specific lifecycle of an ML model.

If we could only focus on one thing to kickstart our MLOps efforts, what would you suggest?

If I had to pick just one area to begin with, I’d strongly recommend focusing on solid version control for everything—your data, your code, and your models. Knowing exactly what data and code went into creating each model version, and being able to reliably reproduce it, is fundamental. This makes troubleshooting, collaboration, and building trust in your models so much simpler down the line.
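The idea of tying every model version to the exact data, code, and settings that produced it can be sketched with content hashes. This is a minimal, hypothetical illustration: the `fingerprint`, `ModelVersion`, and `register_version` names are invented for this example, and in practice you would lean on Git for code and on a model registry such as MLflow for the lineage record.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


def fingerprint(content: bytes) -> str:
    """Short, stable content hash: identical inputs always give the same ID."""
    return hashlib.sha256(content).hexdigest()[:12]


@dataclass(frozen=True)
class ModelVersion:
    data_hash: str    # hash of the exact training data snapshot
    code_hash: str    # hash of the training code (a Git commit SHA works too)
    params_hash: str  # hash of the hyperparameters
    version_id: str   # derived from the three hashes above


def register_version(data: bytes, code: bytes, params: dict) -> ModelVersion:
    # sort_keys makes the params hash independent of dict insertion order.
    params_bytes = json.dumps(params, sort_keys=True).encode()
    d, c, p = fingerprint(data), fingerprint(code), fingerprint(params_bytes)
    return ModelVersion(d, c, p, fingerprint(f"{d}:{c}:{p}".encode()))


if __name__ == "__main__":
    data = b"id,label\n1,0\n2,1\n"     # stand-in for a training data snapshot
    code = b"def train(params): ..."  # stand-in for the training script
    v1 = register_version(data, code, {"lr": 0.01, "depth": 6})
    v2 = register_version(data, code, {"depth": 6, "lr": 0.01})  # same params, other order
    v3 = register_version(data + b"3,0\n", code, {"lr": 0.01, "depth": 6})
    print(json.dumps(asdict(v1), indent=2))
    print(v1 == v2, v1.version_id == v3.version_id)  # True False
```

Because the version ID is derived only from its inputs, retraining with the same data, code, and hyperparameters reproduces the same ID, while a change to any one of them yields a new ID and shows exactly which input changed.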

How does putting MLOps into practice actually help my AI projects succeed and deliver real results?

MLOps helps your AI projects deliver real results by making the entire process more efficient, reliable, and scalable. It means you can move your models from an idea to actual use much faster. Plus, with continuous monitoring, you can verify that they're still performing well and adapt them as your business or data evolves. Ultimately, it’s about ensuring your AI investments truly contribute to your business objectives rather than staying stuck in the lab.

What's a common hurdle teams face when they start with MLOps, and how can we prepare for it?

One common hurdle is managing data quality effectively. Your ML models are incredibly dependent on the data they're trained on, and issues like inconsistencies or changes in your data can really impact their performance. To prepare for this, it’s wise to establish clear processes for validating your data right from the beginning and set up systems to continuously monitor its quality and how it might be affecting your model's predictions.
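Validating data from the beginning can start as simply as checking each incoming batch against explicit expectations before it reaches training or inference. The sketch below assumes records arrive as Python dicts and uses a hypothetical schema (`ColumnRule`, `validate_batch`, and the example columns are invented for illustration). Dedicated data validation tools cover far more, such as distribution checks and cross-column rules.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class ColumnRule:
    """Expectations for one column (hypothetical schema format)."""
    name: str
    dtype: type
    min_value: Optional[float] = None
    max_value: Optional[float] = None
    nullable: bool = False


def validate_batch(rows: list[dict[str, Any]], rules: list[ColumnRule]) -> list[str]:
    """Check each row against the rules; an empty result means the batch passed."""
    problems: list[str] = []
    for i, row in enumerate(rows):
        for rule in rules:
            value = row.get(rule.name)
            if value is None:
                if not rule.nullable:
                    problems.append(f"row {i}: '{rule.name}' is missing")
                continue
            if not isinstance(value, rule.dtype):
                problems.append(
                    f"row {i}: '{rule.name}' expected {rule.dtype.__name__}, "
                    f"got {type(value).__name__}"
                )
                continue  # range checks only make sense on the right type
            if rule.min_value is not None and value < rule.min_value:
                problems.append(f"row {i}: '{rule.name}'={value} is below {rule.min_value}")
            if rule.max_value is not None and value > rule.max_value:
                problems.append(f"row {i}: '{rule.name}'={value} is above {rule.max_value}")
    return problems


SCHEMA = [
    ColumnRule("age", int, min_value=0, max_value=120),
    ColumnRule("income", float, min_value=0.0),
    ColumnRule("segment", str, nullable=True),
]

if __name__ == "__main__":
    batch = [
        {"age": 34, "income": 52000.0, "segment": "retail"},
        {"age": -3, "income": 48000.0},  # out-of-range age
        {"age": 41, "income": "n/a"},    # wrong type for income
    ]
    for problem in validate_batch(batch, SCHEMA):
        print(problem)
```

Returning a list of problems, rather than raising on the first one, makes it easy to log every issue in a rejected batch and to alert when the count rises over time.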

About Author

Cake Team
