7 Best Open-Source AI Governance Tools Reviewed

Building an AI model without a governance plan is like constructing a house without a foundation. It might look fine at first, but it won't withstand real-world pressures. As you scale, you need a solid structure to manage data lineage, ensure security, and maintain compliance. AI governance provides that foundation, creating a clear, consistent, and responsible path for your projects to succeed. It turns your AI from a fragile experiment into a robust, production-ready asset. For teams that value transparency and control, open-source solutions are a powerful starting point. Let's walk through the best open-source AI governance tools so you can choose the right materials to build your AI future with confidence.

Key takeaways

- Choose a tool that fits your workflow: Select an open-source solution with essential features like data lineage, access controls, and strong integration capabilities. The right tool should solve your specific compliance and security challenges, not create new ones.

- Focus on people and process: A successful implementation depends on more than just technology. Drive team adoption by establishing clear policies, providing thorough training, and building a culture where responsible AI is a shared priority.

- Measure your effectiveness to stay on track: Define and monitor key performance indicators (KPIs) for compliance, data quality, and model fairness. Use these metrics to prove the value of your governance program and make data-driven decisions for continuous improvement.

Why you need open-source AI governance tools

As you build and scale your AI initiatives, it's easy to focus solely on the models and the data. But without a solid framework to manage everything, you risk running into issues with security, compliance, and performance. This is where AI governance comes in. It’s not about adding red tape; it’s about creating a clear, consistent, and responsible path for your AI projects to succeed.

Using open-source tools for AI governance gives you a powerful and flexible way to build this framework. You get the transparency and community support of open-source software, allowing you to create a system that fits your specific needs. Tools like Deepchecks play a crucial role here by enabling continuous testing and validation of AI models across their lifecycle—ensuring they meet both technical and ethical standards. This approach helps you manage your AI responsibly while maintaining the agility to innovate. It’s about building a foundation of trust and control so you can deploy AI with confidence.

What is AI governance?

AI governance is the set of policies and practices that ensure your models and data are handled according to established rules. It’s about asking the right questions: Do we have the right to use this data? Is our model fair? Can we explain its decisions? Answering these helps you build trustworthy and reliable AI systems. For organizations using LLMs and RAG-based systems, that includes evaluating what the system produces: Ragas is an open-source tool that evaluates retrieval-augmented generation pipelines for accuracy, grounding, and relevance, all critical metrics in any governance framework.

Why choose an open-source solution?

Opting for an open-source solution is a smart way to get started with AI governance without a massive upfront investment. These tools provide a cost-effective entry point for managing metadata, tracking data lineage, and setting up basic governance rules. Because the code is open, you get full transparency into how the tools work, which is critical for building systems that need to be trusted and audited. Open-source observability stacks that include Prometheus and Grafana are especially useful in AI governance, offering detailed metrics and dashboards to monitor model performance, latency, and infrastructure utilization. Beyond the low cost, the open-source community is a huge asset: it brings together resources and developers focused on building responsible AI. This collaborative environment means tools are constantly improving, and you get the flexibility to customize the software to fit your needs.

The key benefits for your organization

Implementing a solid AI governance framework brings benefits that go far beyond checking a compliance box. It ensures your AI systems are effective, fair, and transparent, which builds trust with both customers and internal teams. When you can clearly track data origins and model behavior, you can mitigate bias, reduce security risks, and ensure your AI is operating ethically. Tools like Langfuse add critical observability into your AI stack, enabling you to track usage patterns, latency, errors, and prompt effectiveness in real-time—making governance actionable and ongoing. This ultimately helps you accelerate your projects. With clear rules in place, your teams can innovate faster and with more confidence. They can rely on a standardized, trustworthy process instead of starting from scratch. This is how you move from experimental projects to deploying production-ready open-source AI solutions that drive real business success.

How to choose the right AI governance tool

Picking the right AI governance tool can feel like a huge task, but it doesn't have to be overwhelming. When you know what to look for, you can confidently choose a solution that fits your team's needs, budget, and long-term goals. Think of it as creating a checklist. By focusing on a few key areas—from compliance to cost—you can systematically evaluate your options and find the perfect open-source tool to support your AI initiatives. Let's walk through the five most important factors to consider.

Check for compliance and regulatory needs

First things first: your governance tool must help you stay compliant. With data privacy regulations constantly evolving, you need a solution that can keep up. The responsible use of AI depends on protecting sensitive information according to policies like GDPR, CCPA, and other industry-specific rules. A solid governance tool will help you enforce these policies automatically, track data access, and generate reports for audits. Deepchecks is particularly strong in this area: its automated tests for fairness, drift, and robustness make it easier to meet regulatory and ethical requirements. This isn't just about avoiding fines; it's about building trust with your customers by showing you take their data privacy seriously.

Evaluate integration capabilities

A new tool should make your life easier, not create another silo. That's why integration is critical. Your AI governance tool needs to fit neatly into your existing tech stack. Can it connect to your data warehouses, BI platforms, and machine learning libraries? The goal is to streamline data visualization and analysis by integrating governance metrics into the dashboards your team already uses. Tools like Grafana excel here, allowing you to plug in real-time governance data from Prometheus, Langfuse, and other sources into a unified monitoring dashboard that’s already familiar to your DevOps and ML teams. A seamless integration means less friction, higher adoption rates, and a more holistic view of your AI ecosystem.

Consider scalability and performance

Your AI ambitions are only going to get bigger, so you need a governance tool that can grow with you. Think about your future needs. Will the tool handle an increase in data volume, model complexity, and user requests without slowing down? A scalable solution allows you to adapt your governance framework as your projects evolve. OpenCost, for example, brings financial observability into your governance strategy by helping you understand and manage compute costs across cloud-native AI pipelines, which becomes essential as projects scale. This approach supports both innovation and risk management, giving you the visibility to make decisions based on data, not assumptions. Look for a tool with a flexible architecture that can scale efficiently, ensuring it remains a valuable asset as your organization’s AI maturity increases.

Review community support and documentation

When you choose an open-source tool, you're also choosing its community. A vibrant, active community is one of your greatest resources. It means you'll have access to extensive documentation, tutorials, and forums where you can get help from other users and developers. Promptfoo is an emerging tool with a growing community that’s focused on helping teams build, test, and govern prompts at scale, an increasingly important piece of the governance puzzle in the age of LLMs. Before you decide, spend some time exploring the tool's community hubs, like GitHub or Slack channels, and look for implementation guides and best-practice resources for responsible AI.

Calculate the total cost of ownership

Open source might be free to download, but it's not free to operate. To get a true sense of the investment, you need to calculate the total cost of ownership (TCO). This includes the initial setup and configuration, data migration, and any necessary hardware. You also need to factor in ongoing costs like maintenance, updates, and the time your team will spend on training and support. Tools like Langfuse and OpenCost help quantify the usage and cost of your AI infrastructure, making it easier to manage budgets and avoid over-provisioning as your AI footprint expands.

Creating a well-defined AI governance framework requires resources, so understanding the full financial picture helps you budget effectively and demonstrate the long-term value of the tool to stakeholders.
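To make the TCO math concrete, here's a minimal Python sketch. The cost categories mirror the ones listed above, but every figure, the 36-month horizon, and the names `TcoInputs` and `total_cost_of_ownership` are illustrative assumptions, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class TcoInputs:
    """Hypothetical cost inputs; replace every figure with your own estimates."""
    setup_hours: float                # initial setup and configuration
    migration_hours: float            # one-time data migration
    hardware_cost: float              # one-time hardware or cloud commitment
    monthly_maintenance_hours: float  # maintenance and updates
    monthly_training_hours: float     # training and support
    hourly_rate: float                # blended cost of one engineering hour


def total_cost_of_ownership(inputs: TcoInputs, months: int) -> float:
    """Return one-time costs plus recurring costs over the given horizon."""
    one_time = (inputs.setup_hours + inputs.migration_hours) * inputs.hourly_rate
    one_time += inputs.hardware_cost
    monthly = (
        inputs.monthly_maintenance_hours + inputs.monthly_training_hours
    ) * inputs.hourly_rate
    return one_time + monthly * months


example = TcoInputs(
    setup_hours=120, migration_hours=40, hardware_cost=5_000,
    monthly_maintenance_hours=20, monthly_training_hours=5, hourly_rate=100,
)
print(total_cost_of_ownership(example, months=36))  # 21,000 one-time + 90,000 recurring
```

Even with made-up numbers, the pattern is typical: over a multi-year horizon, recurring team time can outweigh the one-time setup cost.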


7 must-use open-source AI governance tools

Choosing the right tool often feels like the hardest part, but it’s really about matching a tool’s strengths to your specific needs. The open-source community has produced some incredible options, but they each come with their own focus areas and ideal use cases. Some specialize in observability, others in evaluation or cost transparency. As you review these tools, think about your current tech stack, your team’s technical skills, and your long-term compliance goals. The goal isn’t to find one tool that does everything—it’s to assemble the right toolkit that fits your governance strategy.

1. Deepchecks

Deepchecks is built for continuous testing and validation of machine learning models. It helps teams evaluate data integrity, model performance, and fairness over time, making it easier to detect drift, bias, or data quality issues before they impact production. It also includes built-in validations to support regulatory compliance efforts. If your goal is to enforce robust, test-driven governance throughout the model lifecycle, Deepchecks is a foundational choice.

2. Ragas

Ragas is a specialized tool designed for evaluating retrieval-augmented generation (RAG) pipelines. It provides structured metrics for measuring grounding, faithfulness, and relevance—three key aspects of trustworthy LLM applications. If you’re deploying GenAI and care about ensuring outputs are accurate and verifiable, Ragas brings essential governance coverage that most other tools lack.

3. Grafana

Grafana gives you a powerful way to visualize real-time metrics from across your AI systems. While it’s not a governance tool on its own, it becomes critical when paired with other sources like Prometheus, OpenCost, or Langfuse. It allows you to build dashboards that show model performance, latency, usage, and security indicators—giving both technical and non-technical stakeholders a clear view into how AI systems are behaving.

4. OpenCost

OpenCost brings financial observability into your AI governance strategy. It helps you understand, allocate, and control compute spend across Kubernetes clusters, which is especially valuable for large-scale AI deployments. With OpenCost, teams can track spend by namespace, workload, or label (which you can map to a model or project), compare it against their budgets, and optimize for cost efficiency. That makes it an important governance lever for keeping projects sustainable and on track.

5. Prometheus

Prometheus is a widely used monitoring tool that collects and queries time-series data. It’s often used to track infrastructure and model-level metrics such as uptime, latency, error rates, and throughput. Prometheus acts as the metrics store behind governance observability: it scrapes and stores the data that tools like Grafana then turn into actionable insights. It’s ideal for teams that want full visibility into their operational AI stack.
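To give a feel for what model-level metrics look like when Prometheus collects them, here's a minimal sketch that renders a gauge in the Prometheus text exposition format using only the Python standard library. The metric name, labels, and values are made up, and `render_gauge` is a hypothetical helper. In a real service you would normally use an official Prometheus client library rather than formatting the text yourself.

```python
def _escape_label(value: str) -> str:
    """Escape backslashes, double quotes, and newlines in a label value."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_gauge(
    name: str,
    help_text: str,
    samples: list[tuple[dict[str, str], float]],
) -> str:
    """Render one gauge metric family as text exposition lines."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        if labels:
            pairs = ",".join(
                f'{key}="{_escape_label(val)}"' for key, val in sorted(labels.items())
            )
            lines.append(f"{name}{{{pairs}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


print(render_gauge(
    "model_error_rate",
    "Share of failed model requests.",
    [({"model": "churn-v3"}, 0.02), ({"model": "fraud-v1"}, 0.05)],
))
```

Labels such as `model` are what let a dashboard slice the same metric per model or per project.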

6. Promptfoo

Promptfoo is focused on prompt evaluation and versioning for LLM workflows. As prompt engineering becomes a more critical part of GenAI development, having governance over prompt quality, behavior, and output consistency is essential. Promptfoo helps you compare prompts side by side, run regression tests, and track changes—bringing rigor and traceability to one of the most overlooked parts of the model stack.
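The idea of a prompt regression test can be sketched in a few lines of plain Python. This is not Promptfoo's API or configuration format. It only illustrates the concept, and `fake_model`, the prompt versions, and the test cases are all hypothetical stand-ins.

```python
from typing import Callable


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    if "refund" in prompt.lower():
        return "You can request a refund within 30 days."
    return "I'm not sure."


# Hypothetical prompt versions and test cases.
PROMPTS = {
    "v1": "Answer the customer question: {question}",
    "v2": "You are a support agent. Answer briefly: {question}",
}
TEST_CASES = [
    {"question": "How do refunds work?", "must_contain": "30 days"},
    {"question": "How long does shipping take?", "must_contain": "business days"},
]


def pass_rates(
    prompts: dict[str, str],
    cases: list[dict[str, str]],
    model: Callable[[str], str],
) -> dict[str, float]:
    """Per prompt version, the share of cases whose output contains the expected text."""
    rates = {}
    for version, template in prompts.items():
        passed = sum(
            case["must_contain"] in model(template.format(question=case["question"]))
            for case in cases
        )
        rates[version] = passed / len(cases)
    return rates


print(pass_rates(PROMPTS, TEST_CASES, fake_model))
```

Running the same cases against every prompt version on each change is what turns prompt edits into something you can review and audit.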

7. Langfuse

Langfuse is an observability layer purpose-built for LLM applications. It tracks inputs, outputs, prompt tokens, latency, user feedback, and other metrics—giving you a real-time picture of how your GenAI systems are being used and where issues might arise. Langfuse also supports session logging and replay, making it a valuable tool for debugging, auditing, and compliance in production environments.

Bringing it all together with Cake

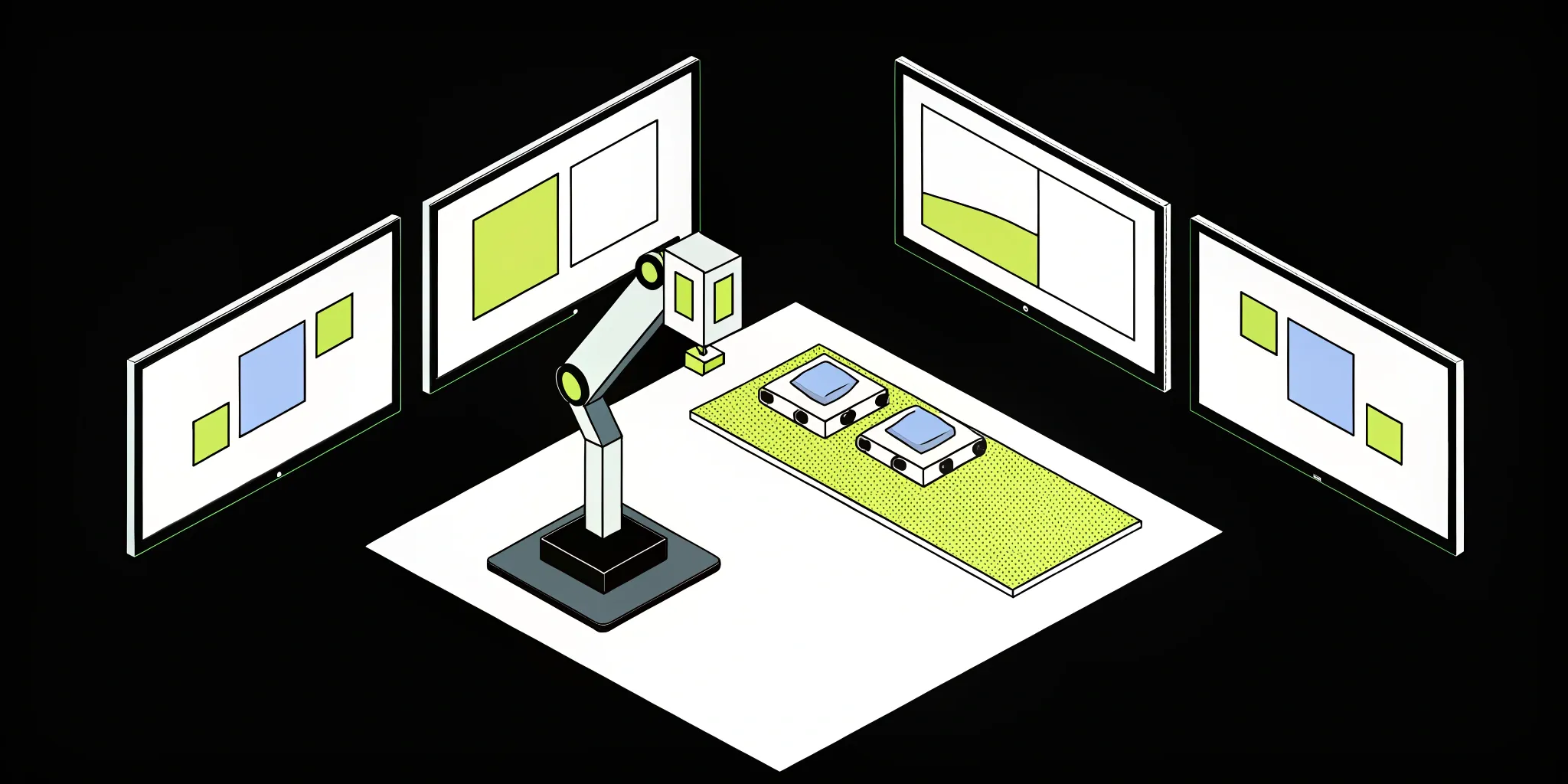

Each of the tools above plays an important role in the governance puzzle—but stitching them together on your own can be time-consuming and fragile. That’s where Cake comes in.

Cake provides a cloud-agnostic AI development platform that integrates the best open-source governance tools into a single, production-ready stack. Instead of spending weeks wiring up Grafana to Prometheus, configuring Langfuse for every model, or building dashboards for Ragas evaluations, Cake gives you a unified platform where all of it works out of the box. You get built-in support for cost visibility, model monitoring, prompt evaluation, and policy enforcement—without the operational overhead.

Whether you’re running fine-tuned LLMs, RAG pipelines, or agentic workflows, Cake ensures that governance isn’t an afterthought. It’s baked into every step of your AI lifecycle, from development to deployment.

Key features for a successful implementation

Once you’ve shortlisted a few open-source AI governance tools, it’s time to look closer at their features. The right tool isn’t just about ticking boxes; it’s about finding a solution that fits into your workflow and actively supports your governance goals. A successful implementation hinges on having the right capabilities to manage your data, secure your systems, and ensure your AI models are trustworthy and compliant.

Think of these features as the foundation of your AI governance house. Without them, things can get messy and unstable pretty quickly. You need strong support for tracking where your data comes from, controlling who has access to it, and making sure it’s high-quality. You also need your governance tool to play nicely with the other software you use and help you keep an eye on compliance. Let’s walk through the essential features you should look for.

Track metadata and data lineage

If you can’t trust your data, you can’t trust your AI. That’s where metadata and data lineage come in. Metadata is simply data about your data—things like where it came from, when it was created, and what it means. Data lineage is the story of your data’s journey, tracking its path from origin to its current state. Good governance tools help you automatically capture this information, giving you a clear view of your data flow. This is crucial for debugging models, ensuring data integrity, and building trust in your AI outputs. When you can trace every piece of data, you can confidently stand behind your results.
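As a rough illustration of lineage tracking, the sketch below stores each dataset's direct sources and walks them to find everything a dataset depends on. `LineageGraph` and the dataset names are hypothetical. Real governance tools capture this information automatically and at much larger scale.

```python
from collections import defaultdict


class LineageGraph:
    """Tiny in-memory lineage store: each dataset maps to its direct sources."""

    def __init__(self) -> None:
        self._parents: dict[str, set[str]] = defaultdict(set)
        self.metadata: dict[str, dict[str, str]] = {}

    def register(self, name: str, sources: tuple[str, ...] = (), **metadata: str) -> None:
        """Record a dataset, its direct sources, and descriptive metadata."""
        self._parents[name].update(sources)
        self.metadata[name] = metadata

    def upstream(self, name: str) -> set[str]:
        """Return every dataset that `name` depends on, directly or indirectly."""
        seen: set[str] = set()
        stack = list(self._parents.get(name, ()))
        while stack:
            current = stack.pop()
            if current not in seen:
                seen.add(current)
                stack.extend(self._parents.get(current, ()))
        return seen


graph = LineageGraph()
graph.register("raw_events", owner="data-eng", origin="app logs")
graph.register("clean_events", sources=("raw_events",), owner="data-eng")
graph.register("training_set", sources=("clean_events", "labels"), owner="ml-team")
print(sorted(graph.upstream("training_set")))
```

When a model misbehaves, a query like `upstream("training_set")` tells you which datasets to inspect first.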

Implement access control and security

Not everyone in your organization should have access to all your data. Strong access control is fundamental to data security and compliance. Your AI governance tool should allow you to manage user roles and permissions effectively, ensuring that team members can only view or modify the data relevant to their jobs. This is especially important for protecting sensitive customer information and adhering to regulations like GDPR. Look for a tool that makes it easy to set up these controls and provides a clear audit trail of who accessed what and when. This isn't just about following rules; it's about building a secure data environment from the ground up.
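Here's a minimal sketch of role-based access checks that also record who tried to do what and when. The roles, permissions, and the `check_access` helper are hypothetical. A real tool would back this with its own identity system and durable audit storage.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; real tools define their own model.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "data_engineer": {"read", "write"},
    "admin": {"read", "write", "grant"},
}

audit_log: list[dict[str, str]] = []


def check_access(user: str, role: str, action: str, dataset: str) -> bool:
    """Return whether the role permits the action, and record the attempt."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "dataset": dataset,
        "decision": "allow" if allowed else "deny",
    })
    return allowed


print(check_access("dana", "analyst", "read", "customer_orders"))
print(check_access("dana", "analyst", "write", "customer_orders"))
print([entry["decision"] for entry in audit_log])
```

Logging denied attempts alongside allowed ones is a deliberate choice: the denials are often what an auditor wants to see.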

Manage your data quality

There’s a classic saying in data science: "garbage in, garbage out." Your AI models are only as good as the data they are trained on. That’s why features for managing data quality are non-negotiable. A solid governance tool will help you ensure your data is accurate, complete, and consistent. It should provide ways to profile your data, identify anomalies, and set up rules to validate new information as it comes in. By maintaining high data quality standards, you can prevent biased outcomes, improve model performance, and make more reliable, data-driven decisions.
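Validation rules like these can be sketched as simple predicates applied to each incoming record. The schema, the rules, and the helper names below are assumptions for illustration only.

```python
from typing import Callable

# Hypothetical validation rules: field name -> predicate that must hold.
RULES: dict[str, Callable[[object], bool]] = {
    "customer_id": lambda v: isinstance(v, str) and v != "",
    "age": lambda v: isinstance(v, int) and 0 <= v <= 120,
    "country": lambda v: isinstance(v, str) and len(v) == 2,
}


def validate(record: dict) -> list[str]:
    """Return the names of fields that are missing or break their rule."""
    return [field for field, rule in RULES.items() if not rule(record.get(field))]


def batch_pass_rate(records: list[dict]) -> float:
    """Share of records that pass every rule (0.0 for an empty batch)."""
    if not records:
        return 0.0
    return sum(1 for record in records if not validate(record)) / len(records)


batch = [
    {"customer_id": "c-1", "age": 34, "country": "DE"},
    {"customer_id": "c-2", "age": 412, "country": "US"},  # out-of-range age
    {"customer_id": "c-3", "country": "FR"},              # missing age
    {"customer_id": "c-4", "age": 51, "country": "BR"},
]
for record in batch:
    print(record["customer_id"], validate(record))
print(batch_pass_rate(batch))
```

A per-batch pass rate like this is also an easy number to chart over time, so a sudden drop shows up before the data reaches a model.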

Look for integration and collaboration features

Your AI governance tool doesn’t operate in a silo. It needs to connect seamlessly with your existing data stack, including cloud data warehouses, business intelligence platforms, and other systems. Many open-source tools require custom coding to get everything connected, so look for solutions with pre-built connectors and robust APIs to make this process easier. Collaboration is just as important. The tool should help your data scientists, engineers, and business analysts work together effectively. Features like shared data catalogs, annotation tools, and integrated communication can make a huge difference in streamlining your AI projects.

Use compliance monitoring tools

Staying compliant with industry regulations and internal policies is a major part of AI governance. Instead of relying on manual checks, find a tool with built-in compliance monitoring. These features can help you track your adherence to rules and automatically flag potential issues. Effective governance platforms often use key performance indicators (KPIs) to measure compliance and manage risk. This could include dashboards that visualize your compliance status or automated alerts for when a dataset falls out of compliance. This proactive approach helps you address problems before they become serious and demonstrates a commitment to responsible AI.
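An automated compliance check can be as simple as comparing each dataset's recorded state against your written policy and emitting alerts. The two policies below (mask PII, review every 90 days) and all names are hypothetical examples, not requirements drawn from any specific regulation.

```python
from dataclasses import dataclass
from datetime import date

MAX_DAYS_BETWEEN_REVIEWS = 90  # hypothetical internal policy


@dataclass
class DatasetRecord:
    name: str
    contains_pii: bool
    pii_masked: bool
    last_reviewed: date


def compliance_alerts(datasets: list[DatasetRecord], today: date) -> list[str]:
    """Return one alert per policy violation found."""
    alerts = []
    for ds in datasets:
        if ds.contains_pii and not ds.pii_masked:
            alerts.append(f"{ds.name}: PII is not masked")
        if (today - ds.last_reviewed).days > MAX_DAYS_BETWEEN_REVIEWS:
            alerts.append(f"{ds.name}: review overdue")
    return alerts


inventory = [
    DatasetRecord("orders", contains_pii=True, pii_masked=True,
                  last_reviewed=date(2025, 6, 1)),
    DatasetRecord("support_tickets", contains_pii=True, pii_masked=False,
                  last_reviewed=date(2025, 1, 15)),
]
for alert in compliance_alerts(inventory, today=date(2025, 6, 30)):
    print(alert)
```

Passing `today` in explicitly keeps the check deterministic, which makes it easy to test and to re-run for a past audit date.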

How to overcome common implementation challenges

Choosing the right tool is just the first step. The real work begins with implementation, and let's be honest, it's not always a smooth ride. But don't worry—many of the common hurdles are predictable and manageable. By anticipating these challenges, you can create a clear plan to address them head-on and set your team up for success from day one. Here’s how to tackle some of the most frequent obstacles.

1. Simplify integration and setup

Many open-source tools feel like a box of parts rather than a finished product. Connecting them to your existing systems, like cloud data warehouses or business intelligence tools, can require a ton of custom coding and effort. This can quickly drain your engineering resources before you even get to the governance part. To avoid this, look for platforms that offer a more unified experience or come with pre-built integrations. This saves your team countless hours, letting them focus on driving value instead of just keeping the lights on.

2. Solve data quality issues

Your AI models are only as good as the data they're trained on. Unfortunately, many open-source tools don't have strong, built-in features to automatically check for errors or missing information. This leaves your team doing manual checks or juggling yet another tool to get the job done. To avoid this, prioritize solutions with integrated data quality management. Features that automatically flag inconsistencies or missing values will ensure your data is reliable and your AI initiatives are built on a solid foundation from the start.

3. Close security and compliance gaps

When you're dealing with sensitive data, security can't be an afterthought. While most tools offer basic access controls, they often lack the advanced features needed for enterprise-level security and compliance. This includes things like data masking, encryption, and automated checks for regulations like GDPR. Make sure you thoroughly evaluate the security posture of any tool you consider. A strong governance tool should help you enforce policies and protect sensitive information, not create new vulnerabilities for you to manage.

4. Encourage team adoption and training

You can have the most powerful tool in the world, but it won't do you any good if your team doesn't use it. Low adoption is a silent project killer. To get everyone on board, you need a plan that goes beyond the initial setup. This means providing thorough training, creating clear documentation, and fostering a culture of cross-functional collaboration between your data, IT, legal, and business teams. When everyone understands the "why" behind the tool and how it helps them, they're far more likely to embrace it.

5. Plan your resource allocation

A successful implementation requires more than just technical know-how; it requires strategic planning. Before you dive in, take the time to map out the resources you'll need—not just budget, but also people's time and expertise. Think about the entire data lifecycle, from cataloging and integration to privacy and access management. A clear resource allocation plan ensures your implementation stays on track and aligns with your broader business goals, turning your AI governance program into a true organizational asset.

Best practices for long-term success

Choosing the right open-source AI governance tool is a huge step, but the work doesn’t stop there. To truly get the most out of your efforts, you need to build a culture of responsible AI that supports your tools and processes. Think of it as creating a sustainable ecosystem for your AI initiatives to thrive in. It’s about embedding good habits into your daily operations so that governance becomes second nature, not an afterthought. These practices will help you build a strong foundation for responsible, effective, and scalable AI for years to come.

Establish clear governance policies

Before you can enforce anything, you need to define the rules. Your first step is to create and document clear, comprehensive AI governance policies. This is your playbook for everything from data quality and privacy to model development and monitoring. Think about how you’ll manage data to train your models, protect sensitive information, and ensure your AI systems are transparent and explainable. Getting everyone on the same page with a written framework prevents confusion and ensures consistency across all your AI projects, making compliance much easier to manage down the line.

Set up monitoring and auditing

Once your policies are in place, you need a way to ensure they’re being followed. This is where continuous monitoring and auditing come in. Setting up systems to track your AI models in real-time helps you detect and prevent issues before they become major problems. Maintaining audit-ready logs is also essential for tracing vulnerabilities or policy violations if they occur. Think of it as a security system for your AI. By keeping a close watch on performance and activity, you can maintain the integrity of your systems and quickly respond to any unexpected behavior, keeping your projects secure and on track.

Prioritize training and documentation

A great AI governance framework is only effective if your team understands and uses it. That’s why training and education are so important. Make sure every stakeholder, from data scientists to project managers, understands the governance policies and knows their specific role within them. Good documentation that is easy to access and understand is a must. When you invest in training, you empower your team to make responsible decisions independently. This not only improves compliance but also fosters a culture of accountability and shared ownership over your company’s AI initiatives.

Collaborate with the community

You don’t have to figure everything out on your own. Engaging with internal stakeholders and the broader open-source community can make your AI governance practices much more effective. Internally, getting feedback from different departments ensures your policies are practical and address real-world needs. Externally, the open-source community is an incredible resource for shared knowledge, best practices, and support. By participating in these conversations, you can learn from others’ experiences, stay on top of new trends, and contribute to a collective effort to build more responsible AI for everyone.

Plan for continuous improvement

The world of AI is constantly evolving, and your governance strategy should, too. Governance is not a one-and-done project; it’s an ongoing process of refinement. You should regularly review and update your practices to adapt to new challenges, regulations, and technologies. Schedule periodic assessments of your framework to see what’s working and what isn’t. This proactive approach will help you stay ahead of the curve and maintain a robust, future-proof AI governance program.


How to measure your governance effectiveness

Putting an AI governance tool in place is a great first step, but how do you know if it’s actually working? Simply having the tool isn’t enough; you need to measure its effectiveness to make sure you’re getting the results you want. This is how you turn your governance framework from a theoretical plan into a practical, value-driving part of your operations. Measuring your success helps you justify the investment, identify areas for improvement, and ensure your AI initiatives stay aligned with your business goals.

A solid measurement strategy gives you clear insights into how well your AI systems are managed and controlled. It involves looking at a few key areas: performance, quality, security, and ongoing optimization. By tracking the right metrics, you can catch potential issues before they become major problems, build trust in your AI systems, and make data-driven decisions to refine your approach over time. Think of it as a feedback loop that keeps your AI governance sharp, relevant, and effective.

Define your key performance indicators

Before you can measure success, you need to define what it looks like. This is where key performance indicators (KPIs) come in. For AI governance, KPIs are measurable values that show you how well you’re managing your AI systems against your ethical, legal, and business standards. Instead of guessing, you’ll have hard numbers to back up your progress.

Your KPIs should be tied directly to your organization's goals. For example, you might track the time it takes to approve a new model for production, the percentage of models that pass fairness audits, or the number of data access requests that are automatically fulfilled. The right KPIs give you a clear, at-a-glance view of your governance health and help you focus your efforts where they matter most.
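Two of the example KPIs above, time to approval and fairness audit pass rate, can be computed from a simple review log. The records and field names in this sketch are invented for illustration.

```python
from statistics import median

# Hypothetical model review records.
reviews = [
    {"model": "churn-v3", "days_to_approval": 6, "passed_fairness_audit": True},
    {"model": "fraud-v1", "days_to_approval": 14, "passed_fairness_audit": False},
    {"model": "rank-v7", "days_to_approval": 9, "passed_fairness_audit": True},
    {"model": "ocr-v2", "days_to_approval": 4, "passed_fairness_audit": True},
]


def governance_kpis(records: list[dict]) -> dict[str, float]:
    """Compute median approval time and fairness audit pass rate."""
    if not records:
        raise ValueError("at least one review record is required")
    days = [record["days_to_approval"] for record in records]
    passed = sum(1 for record in records if record["passed_fairness_audit"])
    return {
        "median_days_to_approval": median(days),
        "fairness_audit_pass_rate": passed / len(records),
    }


print(governance_kpis(reviews))
```

The median is used instead of the mean here so that a single unusually slow approval doesn't distort the headline number.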

Track quality assurance metrics

An AI model can be incredibly accurate but still cause major problems if it’s biased or unfair. That’s why quality assurance metrics are so important. These metrics go beyond simple performance to evaluate the integrity of your AI systems. You should be tracking things like fairness and transparency to identify and reduce biases, ensuring your models treat all user groups equitably.

Tracking these metrics helps you build and maintain trust with your customers and stakeholders. When you can demonstrate that your AI is not only effective but also ethical and transparent, you create a stronger foundation for your AI initiatives. This isn't just about compliance; it's about building responsible AI that reflects your company's values and serves your audience well.
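One common fairness metric is the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The sketch below computes it on made-up data. It is one metric among many, and the right choice depends on your use case.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions within each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for prediction, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += prediction
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Gap between the highest and lowest group selection rate (0.0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up binary predictions for two groups.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(preds, groups))
print(demographic_parity_difference(preds, groups))
```

A value near zero means the groups receive positive predictions at similar rates, while a large gap is a signal to investigate further rather than proof of unfairness on its own.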

Monitor security and compliance

In the world of AI, data is everything, and protecting it is non-negotiable. Security and compliance metrics are essential for safeguarding your AI systems and the sensitive data they handle. These metrics help you monitor for vulnerabilities, potential attacks, and data breaches, which is why a strong governance framework relies on them to keep your operations safe.

Beyond security, you also need to stay on top of regulatory requirements like GDPR or CCPA. Compliance monitoring helps you track how well you’re adhering to these rules, reducing the risk of costly fines and legal trouble. Regularly reviewing security and compliance metrics ensures your AI systems are not only effective and fair but also trustworthy and legally sound.

Develop your optimization strategy

Collecting metrics is only half the battle; the real value comes from using that information to get better. An optimization strategy is your plan for turning insights into action. This means regularly reviewing your KPIs and metrics to spot trends, identify bottlenecks, and find opportunities for improvement. It’s all about creating a cycle of continuous improvement for your AI governance program.

To make this easier, integrate your AI governance metrics into the dashboards and reporting tools your teams already use. This makes the data more visible and accessible. Encourage collaboration between your data science, IT, and legal teams to analyze the findings and decide on the next steps. This teamwork ensures that your optimization efforts are well-rounded and effective.


Frequently asked questions

What's the single most important first step to take when setting up AI governance?

Before you even think about choosing a tool, your first step should be to define and document your governance policies. This means sitting down with your team and creating a clear playbook that outlines your rules for data quality, privacy, security, and model fairness. A tool is only as effective as the strategy behind it, so having this framework in place first ensures you choose a solution that actually supports your goals, rather than trying to fit your goals into a tool.

You mentioned 'total cost of ownership.' What are the real costs of a "free" open-source tool?

While you don't pay a licensing fee for open-source software, there are definitely other costs to consider. The biggest ones are the time and technical expertise required from your team for the initial setup, custom integrations, and ongoing maintenance. You also need to factor in the resources for training your team and potentially migrating data. Calculating this total cost of ownership gives you a much more realistic picture of the investment required.

How can I get my team to actually use a new governance tool without it feeling like a burden?

The key is to frame the tool as a benefit, not a chore. Involve your team in the selection process so they have a say in what you choose. During implementation, focus your training on how the tool solves their specific pain points, like reducing manual data checks or making it easier to find trustworthy datasets. When people see how it makes their work easier and more effective, adoption happens much more naturally.

With so many tools available, how do I choose one that will still be right for us in a few years?

Instead of focusing only on your current needs, look for a tool with a flexible architecture and strong integration capabilities. Your AI projects will inevitably grow more complex, so you need a solution that can scale with you. Pay close attention to the tool's community support and development activity. An active community is a good sign that the tool will continue to evolve and adapt to new challenges.

What if my team doesn't have the engineering resources to manage a complex open-source setup?

That's a very common situation, and it's where a managed platform can be a great fit. If you don't have a dedicated team to handle the setup, integration, and maintenance, piecing together different open-source tools can be a major headache. An integrated solution like Cake handles the entire stack for you, giving you the benefits of a robust governance framework without the heavy operational lift. This lets your team focus on building models instead of managing infrastructure.

About Author

Cake Team
