Cake for Ingestion & ETL

Automated ingestion & transformation pipelines built for modern AI workloads: composable, scalable, and compliant by default.

Overview

Before you can build or deploy AI models, you need clean, structured, and accessible data. Ingestion and ETL (Extract, Transform, Load) are the foundation of every ML and LLM workflow, but traditional pipelines are brittle, expensive to maintain, and often stitched together with fragile integrations. Cake streamlines this process with open-source components and orchestration designed for modern AI workflows.

Cake provides a modular, cloud-agnostic ETL system optimized for AI teams. Whether you’re ingesting tabular data, scraping external sources, or harmonizing structured and unstructured inputs, Cake’s open-source components and orchestration logic make it easy to go from raw data to training-ready datasets.

Unlike legacy ETL tools, Cake was designed with AI-scale workloads and modern security standards in mind. You get out-of-the-box support for key compliance needs, integration with vector stores like pgvector and object stores like AWS S3, and connections to popular ML/AI tools like PyTorch and XGBoost, all on cloud-agnostic infrastructure.

Key benefits

- Accelerate delivery without custom plumbing: Use best-in-class ingestion and ETL components to move faster without reinventing the stack. Prebuilt integrations across the AI/ML ecosystem save hours of manual setup.

- Stay modular and cloud-agnostic: Avoid lock-in and rigid architectures with a modular system that works across clouds and workloads. Deploy your ETL pipelines on any infrastructure (AWS, GCP, Azure, on-prem, or hybrid) while connecting to your existing tools via Cake’s open-source integrations.

- Ensure compliance from day one: Build pipelines that meet enterprise security and audit requirements. Meet frameworks and regulations such as SOC 2 and HIPAA with minimal effort.

THE CAKE DIFFERENCE


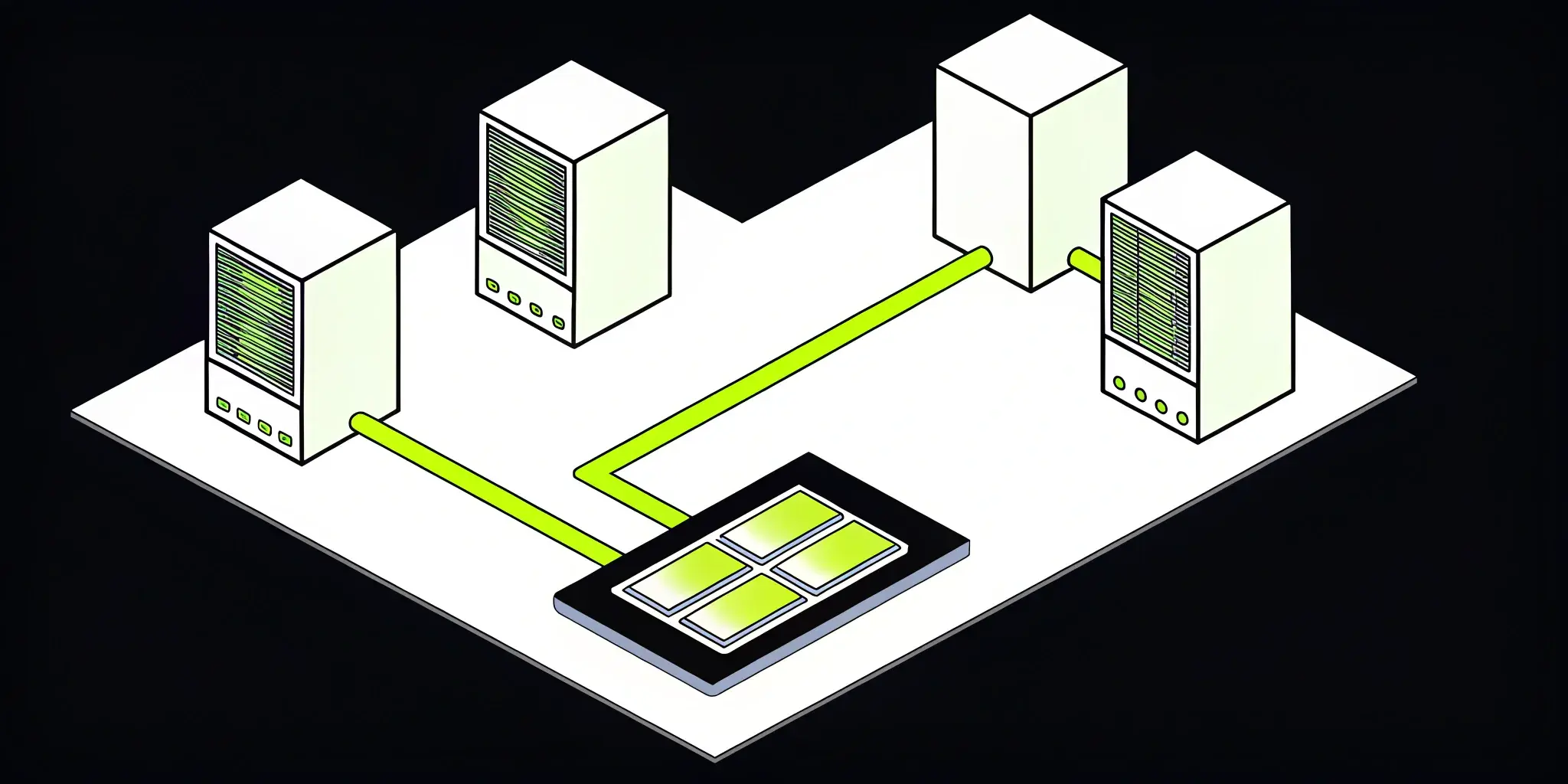

The better way to move your data

In-house pipelines

Total control (until it breaks): Custom Airflow or Spark pipelines offer flexibility, but demand constant engineering upkeep.

- Every connector, retry, and alert is hand-built

- Pipeline logic is hard to reuse across teams or projects

- Changes to schemas or sources cause brittle failures

- Observability, compliance, and access control are all bolt-ons

Result:

Full control, but growing technical debt and slow response to new needs

The Cake approach

Composable ingestion built for AI workflows: Use open-source tools like Airbyte, Apache Beam, and Prefect, pre-integrated and production-ready.

- Pipelines deploy in hours with built-in retries, alerts, and observability

- Easily extend for LLM training, vectorization, or transformation

- Deploy in your own cloud with enterprise-grade access control

- Shared orchestration and logging across all your data workflows

Result:

Fast, reliable, and future-proof ingestion with less engineering effort

EXAMPLE USE CASES


Where teams are using Cake's ingestion & ETL capabilities


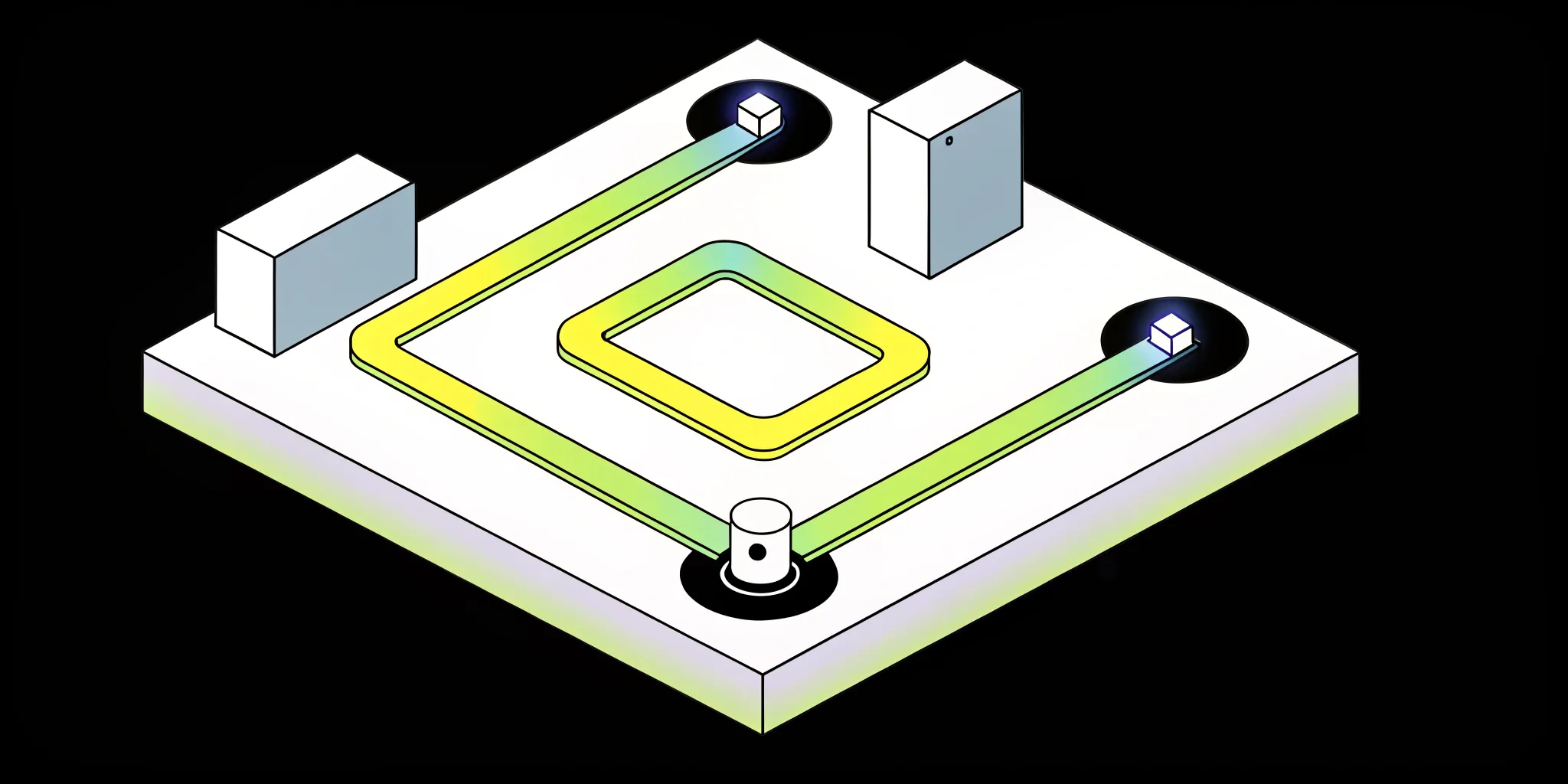

Model training prep

Automate ingestion from cloud buckets, APIs, and tabular stores to create model-ready datasets.


Data harmonization

Combine and normalize structured and unstructured data for unified downstream processing.


Compliance-driven logging

Capture and transform sensitive data with audit-ready lineage and masking tools.


Real-time data streaming

Ingest high-velocity event data from Kafka, Kinesis, or Pub/Sub for real-time analytics and alerting.


Batch processing at scale

Use orchestrated pipelines to process large volumes of historical data on a schedule without pipeline sprawl.


Cross-cloud data unification

Consolidate data from multiple cloud services and SaaS tools into a single lake or warehouse with consistent schema and lineage tracking.

BLOG

Why AI demands a new approach to ETL pipelines

Learn how ETL pipelines for AI can streamline your data processes, ensuring clean, reliable data for better insights and decision-making.

IN DEPTH

Launch customer service agents in days with Cake

Use Cake’s production-ready platform to spin up reliable AI agents, connect to CRMs, and scale securely.

"Our partnership with Cake has been a clear strategic choice – we're achieving the impact of two to three technical hires with the equivalent investment of half an FTE."

Scott Stafford

Chief Enterprise Architect at Ping

"With Cake we are conservatively saving at least half a million dollars purely on headcount."

CEO

InsureTech Company

COMPONENTS


Tools that power Cake's ingestion & ETL stack

Airbyte

Data Ingestion & Movement

Airbyte is an open-source data integration platform used to build and manage ELT pipelines. Cake integrates Airbyte into AI workflows for scalable, governed data ingestion feeding downstream model development.

Airflow

Orchestration & Pipelines

Apache Airflow is an open-source workflow orchestration tool used to programmatically author, schedule, and monitor data pipelines. Cake automates Airflow deployments within AI workflows, ensuring compliance, scalability, and observability.

dbt

Orchestration & Pipelines

dbt (data build tool) is an open-source analytics engineering tool that helps teams transform data in warehouses. Cake connects dbt models to AI workflows, automating feature engineering and transformation within governed pipelines.

Prefect

Orchestration & Pipelines

Prefect is a modern workflow orchestration system for data engineering and ML pipelines. Cake integrates Prefect to automate pipeline execution, monitoring, and governance across AI development environments.

Apache Spark

Distributed Computing Frameworks

Apache Spark is a distributed computing engine for large-scale data processing, analytics, and machine learning.

Langflow

Agent Frameworks & Orchestration

Langflow is a visual drag-and-drop interface for building LangChain apps, enabling rapid prototyping of LLM workflows.

Frequently asked questions

What is data ingestion and ETL?

Data ingestion is the process of collecting data from various sources and moving it into a central location for storage and analysis. ETL (Extract, Transform, Load) goes a step further, transforming that raw data into a structured, usable format before loading it into your target system.
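The following minimal sketch in Python shows the three stages using only the standard library. The sample CSV, its column names, and the `extract`, `transform`, and `load` functions are hypothetical illustrations. An in-memory SQLite database stands in for a real warehouse. This is not how Cake or any of the tools listed on this page implement ETL.

```python
import csv
import io
import sqlite3

# Hypothetical raw source: a CSV export with inconsistent formatting.
RAW_CSV = """customer_id,email,signup_date,spend
1, Alice@Example.com ,2024-01-05,120.50
2,bob@example.com,2024-02-11,
3,carol@example.com,2024-03-02,75
"""


def extract(source: str) -> list[dict[str, str]]:
    """Extract: read raw rows from the source as dictionaries of strings."""
    return list(csv.DictReader(io.StringIO(source)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, str, str, float]]:
    """Transform: trim and lowercase emails, cast types, drop rows missing spend."""
    clean = []
    for row in rows:
        spend = row["spend"].strip()
        if not spend:
            continue  # skip incomplete records rather than loading bad data
        clean.append((
            int(row["customer_id"]),
            row["email"].strip().lower(),
            row["signup_date"].strip(),
            float(spend),
        ))
    return clean


def load(records: list[tuple[int, str, str, float]], conn: sqlite3.Connection) -> None:
    """Load: write the structured records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(customer_id INTEGER PRIMARY KEY, email TEXT, signup_date TEXT, spend REAL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?, ?)", records)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
    load(transform(extract(RAW_CSV)), conn)
    for row in conn.execute("SELECT * FROM customers ORDER BY customer_id"):
        print(row)
```

Running the script prints two cleaned rows. The record with a missing spend value is dropped during the transform step, before anything reaches the target table. That ordering is what distinguishes ETL from loading raw data first and transforming it later (ELT).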

How does Cake support ingestion and ETL workflows?

Cake provides a cloud-agnostic, compliance-ready platform for running ingestion and ETL at scale. It integrates best-in-class open-source tools, orchestrates them with secure, production-grade infrastructure, and simplifies the entire workflow so teams can focus on building value—not managing plumbing.

Can I use my existing ingestion and ETL tools with Cake?

Yes. Cake is designed to work with your preferred open-source or commercial ingestion and ETL components, including tools like Airbyte, Apache Beam, dbt, and more. You can bring your own stack and run it with Cake’s managed orchestration, observability, and security.

What makes Cake different from traditional ETL platforms?

Unlike traditional ETL platforms that lock you into a single vendor or cloud, Cake is cloud-agnostic and modular. You can swap tools, run workloads in your own environment, and adopt the latest open-source innovations—without rewriting pipelines or sacrificing compliance.

Does Cake help with real-time as well as batch ingestion?

Yes. Cake supports both batch and streaming data ingestion, giving you the flexibility to handle scheduled jobs, near-real-time updates, or high-velocity data streams—depending on your business needs.

Learn more about ETL and Cake

10 Best Data Ingestion Tools for Your AI Stack

Find the best data ingestion tools for building seamless data pipelines. Compare features, scalability, and ease of use to choose the right solution...

ETL Pipelines for AI: Streamlining Your Data

Building a powerful AI model without a solid data strategy is like constructing a skyscraper on a weak foundation. It doesn’t matter how impressive...

AI Data Extraction vs. Traditional: Which Is Best?

Your business is sitting on a goldmine of information locked away in unstructured documents like emails, PDFs, and scanned images. Traditional...
