Cake for Inference

Inference is how AI creates outcomes, from accurate predictions to personalized user experiences. Cake reduces the technical complexity by combining model serving, orchestration, and observability into a single platform that scales across models and infrastructure.

Overview

Inference is where AI delivers real impact, turning trained models into predictions, automated decisions, and intelligent applications at scale. Yet moving from development to production is rarely straightforward. Many teams face challenges around latency, cost efficiency, observability, and compliance that make scaling inference complex.

Cake makes inference simple, secure, and scalable. With a composable platform that supports everything from small models to the latest frontier LLMs, you can deploy, route, and optimize inference workloads without being tied to a single vendor. Built on open source and cloud-agnostic infrastructure, Cake ensures your applications stay reliable, cost-effective, and compliant as they grow.

Key benefits

- Accelerated deployment: Teams were able to move models from notebooks to production environments in minutes instead of months.

- Optimized performance: Inference workloads were balanced across GPUs, TPUs, and CPUs to meet latency and cost targets.

- End-to-end visibility: Full observability into inference pipelines made it easy to debug performance, track usage, and meet compliance requirements.

- Future-proof flexibility: Enterprises avoided lock-in by deploying inference across any cloud, leveraging the latest open-source model servers and frameworks.

- Cost savings at scale: Organizations reduced infrastructure overhead by automatically scaling inference workloads up and down to match demand.

THE CAKE DIFFERENCE


From simple model calls to production-grade inference

Vanilla model inference

Simple to start, expensive to scale: Calling an API works for a prototype, but lacks control, efficiency, and visibility.

- High cost per request, no optimization for usage patterns

- Limited visibility into inputs, outputs, and failures

- No fine-grained routing, caching, or fallback logic

- Vendor lock-in with little flexibility across models

Result: Fast to test, but hard to scale securely or affordably

Inference with Cake

Fast, observable, and cost-efficient by design: Cake turns inference into a fully managed, production-ready system.

- Deploy vLLM, TGI, or your own optimized serving stack in your cloud

- Add caching, fallback routing, and model switching with zero vendor lock-in

- Built-in observability: log inputs/outputs, costs, latencies, and errors

- Support for open models, fine-tuned models, and commercial APIs in one pipeline

Result: Reliable, cost-effective inference that scales with your needs
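The caching and fallback-routing ideas above can be sketched in a few lines of framework-free Python. This is a minimal illustration, not Cake's API: the `Backend` and `InferenceRouter` classes, the backend names, and the simulated outage are all hypothetical. In a real deployment each `generate` callable would wrap a request to a vLLM or TGI server or to a commercial API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


# Hypothetical backend: a name plus a callable that turns a prompt into a completion.
@dataclass
class Backend:
    name: str
    generate: Callable[[str], str]


@dataclass
class InferenceRouter:
    """Illustrative router: exact-match cache first, then ordered fallback across backends."""
    backends: List[Backend]
    cache: Dict[str, Tuple[str, str]] = field(default_factory=dict)
    log: List[dict] = field(default_factory=list)

    def complete(self, prompt: str) -> Tuple[str, str]:
        # Serve repeated prompts from the cache instead of paying for another model call.
        if prompt in self.cache:
            backend_name, output = self.cache[prompt]
            self.log.append({"prompt": prompt, "backend": backend_name, "cached": True})
            return backend_name, output

        errors = []
        for backend in self.backends:
            try:
                output = backend.generate(prompt)
            except Exception as exc:  # record the failure, then try the next backend
                errors.append(f"{backend.name}: {exc}")
                self.log.append({"prompt": prompt, "backend": backend.name, "error": str(exc)})
                continue
            self.cache[prompt] = (backend.name, output)
            self.log.append({"prompt": prompt, "backend": backend.name, "cached": False})
            return backend.name, output

        raise RuntimeError("all backends failed: " + "; ".join(errors))


def flaky_primary(prompt: str) -> str:
    # Simulates an outage on the primary model endpoint.
    raise TimeoutError("primary timed out")


def stable_fallback(prompt: str) -> str:
    return f"echo: {prompt}"


router = InferenceRouter([Backend("primary-llm", flaky_primary),
                          Backend("fallback-llm", stable_fallback)])
print(router.complete("hello"))  # ('fallback-llm', 'echo: hello')
print(router.complete("hello"))  # same tuple, served from the cache without a backend call
```

An exact-match cache only helps with repeated prompts; production systems often add expiry, size limits, or semantic caching, which this sketch leaves out. The `log` list stands in for the inputs, outputs, and errors that an observability tool would capture.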

EXAMPLE USE CASES

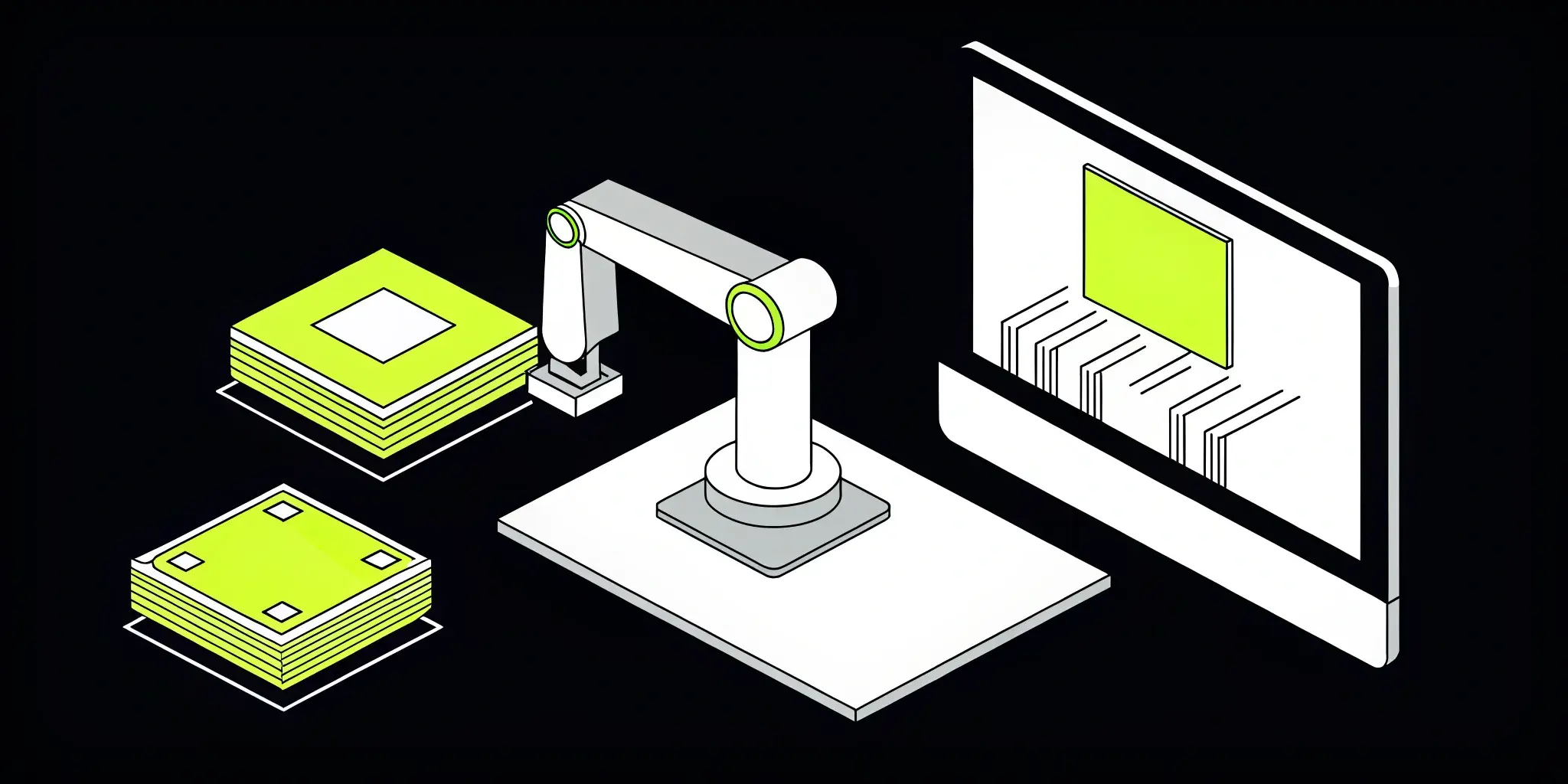

![]()

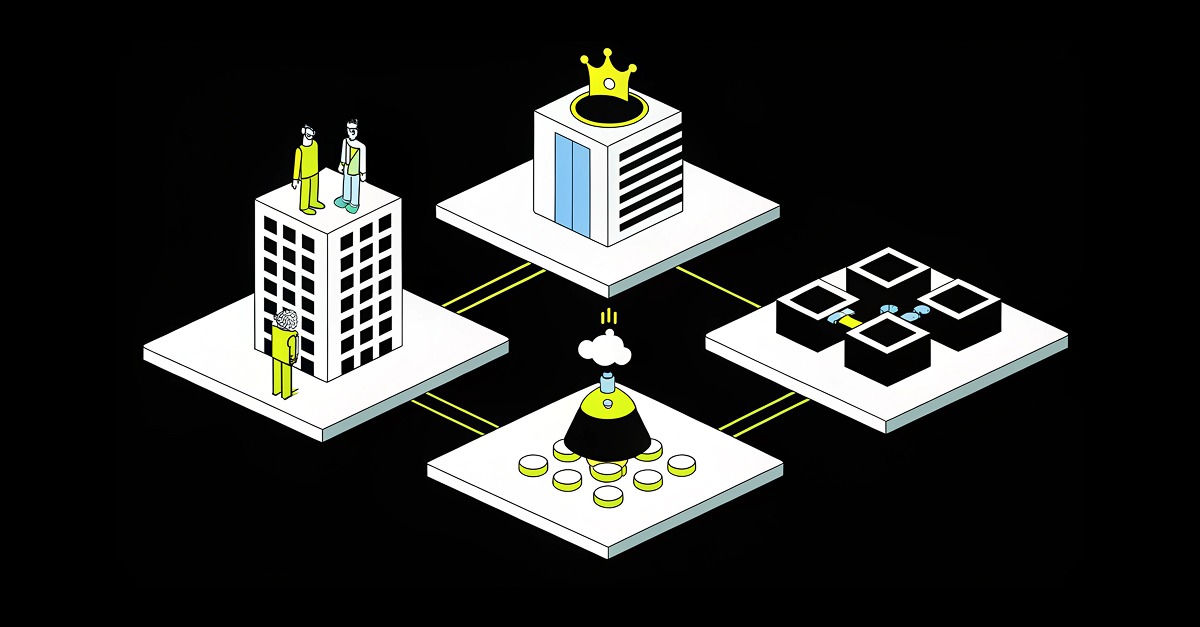

How teams run inference securely with Cake


Customer-facing AI

Power chatbots, recommendation engines, and personalization systems that serve millions of users in real time.


Operational automation

Deploy computer vision, fraud detection, or anomaly detection models into mission-critical business workflows.


Research to production

Take fine-tuned or experimental models from lab environments into compliant, production-grade infrastructure with minimal effort.


Real-time analytics

Enable fast, on-demand predictions to support decision-making in areas like trading, logistics, and supply chain optimization.


Edge inference

Run models close to the data source on devices, sensors, or edge servers to minimize latency and bandwidth costs.


Multi-model routing

Dynamically serve, A/B test, or ensemble multiple models to improve accuracy and optimize cost-performance tradeoffs.
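One common way to implement a weighted A/B split is to hash a stable identifier, such as a user ID, into a point in [0, 1) and map it onto cumulative traffic weights, so each user consistently sees the same model. The sketch below assumes hypothetical variant names (`model-a`, `model-b`) and a 90/10 split; it illustrates the technique rather than how Cake routes traffic internally.

```python
import hashlib
from typing import Dict


def assign_variant(user_id: str, weights: Dict[str, float]) -> str:
    """Deterministically map a user to a model variant according to traffic weights.

    Hashing the user ID (instead of picking randomly per request) keeps each user
    on the same model for the whole experiment, which keeps A/B comparisons clean.
    """
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")

    # Map the first 8 bytes of a SHA-256 digest to a point in [0, 1].
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    point = int.from_bytes(digest[:8], "big") / 2**64

    ordered = sorted(weights.items())
    cumulative = 0.0
    for variant, weight in ordered:
        cumulative += weight / total
        if point < cumulative:
            return variant
    # Floating-point rounding can leave point at the top edge; use the last live variant.
    return [v for v, w in ordered if w > 0][-1]


# Hypothetical split: 90% of users stay on the current model, 10% try a candidate.
weights = {"model-a": 0.9, "model-b": 0.1}
counts = {"model-a": 0, "model-b": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", weights)] += 1
print(counts)  # close to a 9,000 / 1,000 split
```

Because assignment depends only on the ID and the weights, shifting traffic gradually (for example from 90/10 to 50/50) is a configuration change rather than a code change.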

IN DEPTH

Launch customer service agents in days with Cake

Use Cake’s production-ready platform to spin up reliable AI agents, connect to CRMs, and scale securely.

ANOMALY DETECTION

Spot issues before they're issues

Detect anomalies in data streams, applications, and infrastructure with real-time AI monitoring.

"Our partnership with Cake has been a clear strategic choice – we're achieving the impact of two to three technical hires with the equivalent investment of half an FTE."

Scott Stafford

Chief Enterprise Architect at Ping

"With Cake we are conservatively saving at least half a million dollars purely on headcount."

CEO

InsureTech Company

COMPONENTS

![]()

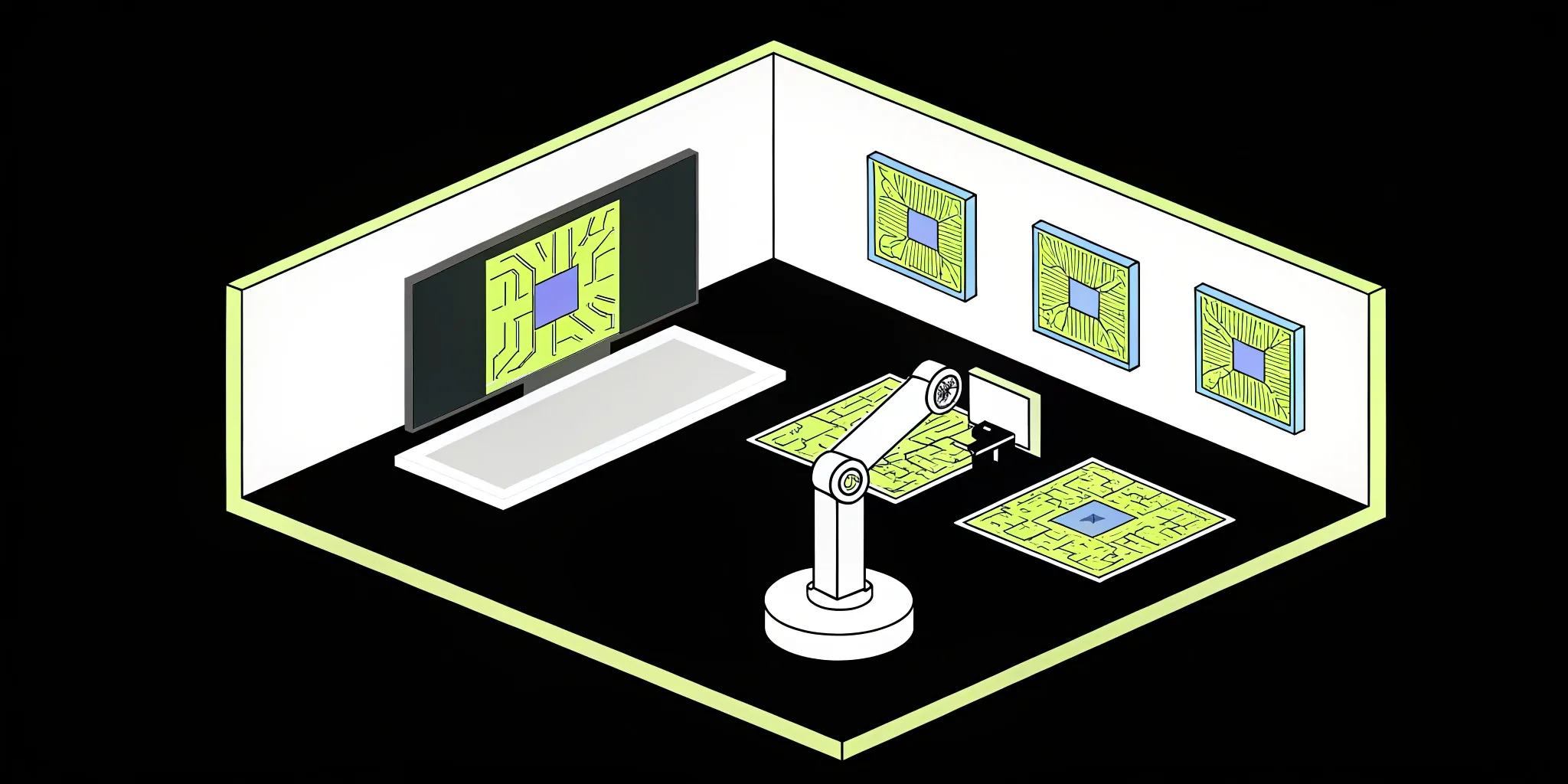

Tools that power Cake's inference stack

Ray Serve

Inference Servers

Ray Serve is a scalable model serving library built on Ray, designed to deploy, scale, and manage machine learning models in production.

NVIDIA Triton Inference Server

Inference Servers

Triton is NVIDIA’s open-source server for running high-performance inference across multiple models, backends, and hardware accelerators.

Ollama

Inference Servers

Ollama is a local runtime for large language models, allowing developers to run and customize open-source LLMs on their own machines.

vLLM

Inference Servers

vLLM is an open-source library for high-throughput, low-latency LLM serving that uses PagedAttention to manage GPU memory efficiently.

Langfuse

LLM Observability

Langfuse is an open-source observability and analytics platform for LLM apps, capturing traces, user feedback, and performance metrics.

LiteLLM

Observability & Monitoring, Inference Servers, Data Catalogs & Lineage

LiteLLM provides a lightweight wrapper for calling OpenAI, Anthropic, Mistral, and other LLMs through a common interface. It supports token metering, caching, and governance tools.

Frequently asked questions

What is inference in AI?

Inference is the process of using a trained machine learning or AI model to generate predictions or outputs from new input data. It’s how models deliver real-world value after training.

How does Cake support AI inference?

Cake provides a managed, cloud-agnostic platform that integrates open-source model servers, routing tools, and observability frameworks to make inference fast, secure, and reliable.

Can I run inference on large language models with Cake?

Yes. Cake supports frontier-scale LLMs and provides the infrastructure to deploy, optimize, and observe them in production.

What are the cost considerations for inference?

Inference costs typically come from compute usage, scaling demands, and infrastructure overhead. Cake helps reduce costs by optimizing resource allocation and autoscaling workloads.
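A common back-of-the-envelope rule for demand-based autoscaling is Little's law: the number of requests in flight equals the arrival rate times the average latency. Dividing that by how many concurrent requests one replica can handle gives a replica target. The sketch below is illustrative only; the function name, the 20% headroom, and the replica bounds are assumptions, not Cake's autoscaler settings.

```python
import math


def target_replicas(requests_per_second: float,
                    avg_latency_s: float,
                    max_concurrency_per_replica: int,
                    headroom: float = 0.2,
                    min_replicas: int = 1,
                    max_replicas: int = 32) -> int:
    """Estimate how many model-server replicas the current load needs.

    Little's law: requests in flight = arrival rate x average time in system.
    """
    in_flight = requests_per_second * avg_latency_s
    # Add headroom so short bursts do not immediately saturate every replica.
    needed = math.ceil(in_flight * (1 + headroom) / max_concurrency_per_replica)
    # Clamp to configured bounds: keep a floor when idle, cap spend at peak.
    return max(min_replicas, min(max_replicas, needed))


print(target_replicas(50, 0.8, 8))    # 40 in flight -> ceil(48 / 8) = 6
print(target_replicas(0.5, 0.8, 8))   # quiet period -> floor of 1 replica
print(target_replicas(2000, 0.8, 8))  # capped at 32
```

Setting `min_replicas=0` allows scale-to-zero, which saves the most during idle periods at the price of a cold start on the next request.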

Why choose Cake for inference over cloud-native services?

Cloud-native AI services often create vendor lock-in and limit flexibility. Cake gives enterprises control and portability with a secure stack built on open-source components.

Learn more about Cake

The Best Open Source AI: A Complete Guide

Find the best open source AI tools for 2025, from top LLMs to training libraries and vector search, to power your next AI project with full control.

Your Guide to the Top Open-Source MLOps Tools

Find the best open-source MLOps tools for your team. Compare top options for experiment tracking, deployment, and monitoring to build a reliable ML...

13 Open Source RAG Frameworks for Agentic AI

Find the best open source RAG framework for building agentic AI systems. Compare top tools, features, and tips to choose the right solution for your...
