Cake for AIOps

Automate monitoring, diagnostics, and remediation using LLM-powered AIOps pipelines built on open-source infrastructure. Reduce costs and resolve incidents faster with a composable, cloud-agnostic stack.

Overview

Legacy operations stacks can barely keep up with modern infrastructure, let alone modern data. Logs, metrics, and alerts pour in faster than teams can triage, and manual responses slow everything down. AIOps bridges that gap, bringing intelligence and automation to incident detection, diagnosis, and resolution.

Cake provides a full AIOps stack built on open-source components and designed for real-world infrastructure. Use LLMs to interpret logs, correlate events, and trigger actions. Connect to observability tools like Prometheus and Grafana, orchestrate workflows with Kubeflow Pipelines, and monitor system and model health using open-source monitoring tools like Evidently or NannyML.

With Cake, you can integrate the latest AIOps innovations into your workflows without being locked into an opaque vendor product. And because everything is modular and cloud agnostic, you reduce costs, improve flexibility, and maintain control over critical operational logic.

Key benefits

- Automate root-cause analysis: Use LLMs to summarize logs, correlate alerts, and reduce time-to-resolution.

- Reduce costs and complexity: Replace brittle custom scripts and siloed dashboards with integrated, reusable pipelines.

- Integrate open-source observability: Connect to tools like Prometheus, Grafana, and LLM-based detectors out of the box.

- Stay modular and cloud agnostic: Deploy anywhere and evolve your AIOps stack without lock-in.

- Ensure compliance and traceability: Capture logs, actions, and incident lineage for review and audits.

THE CAKE DIFFERENCE


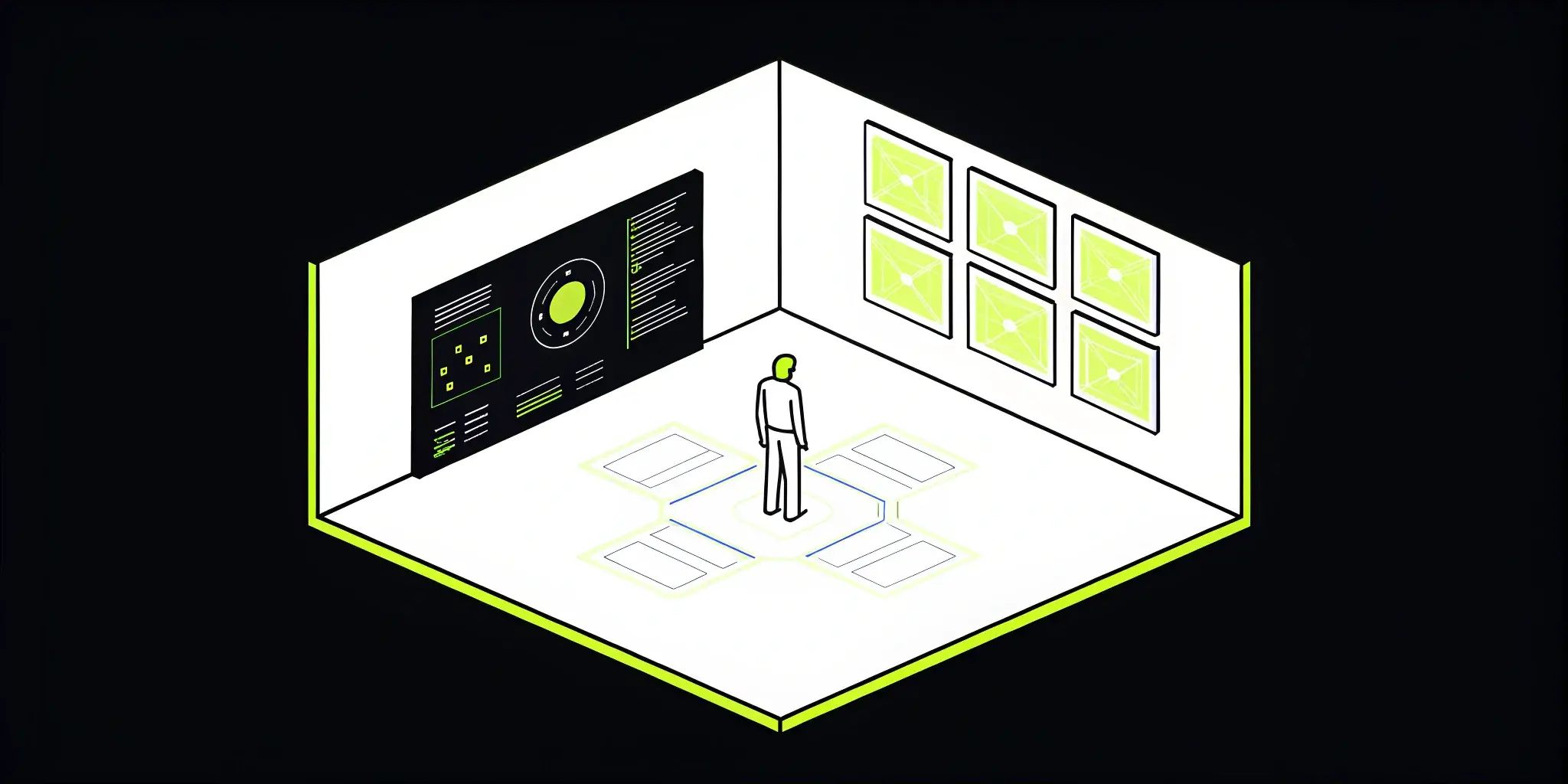

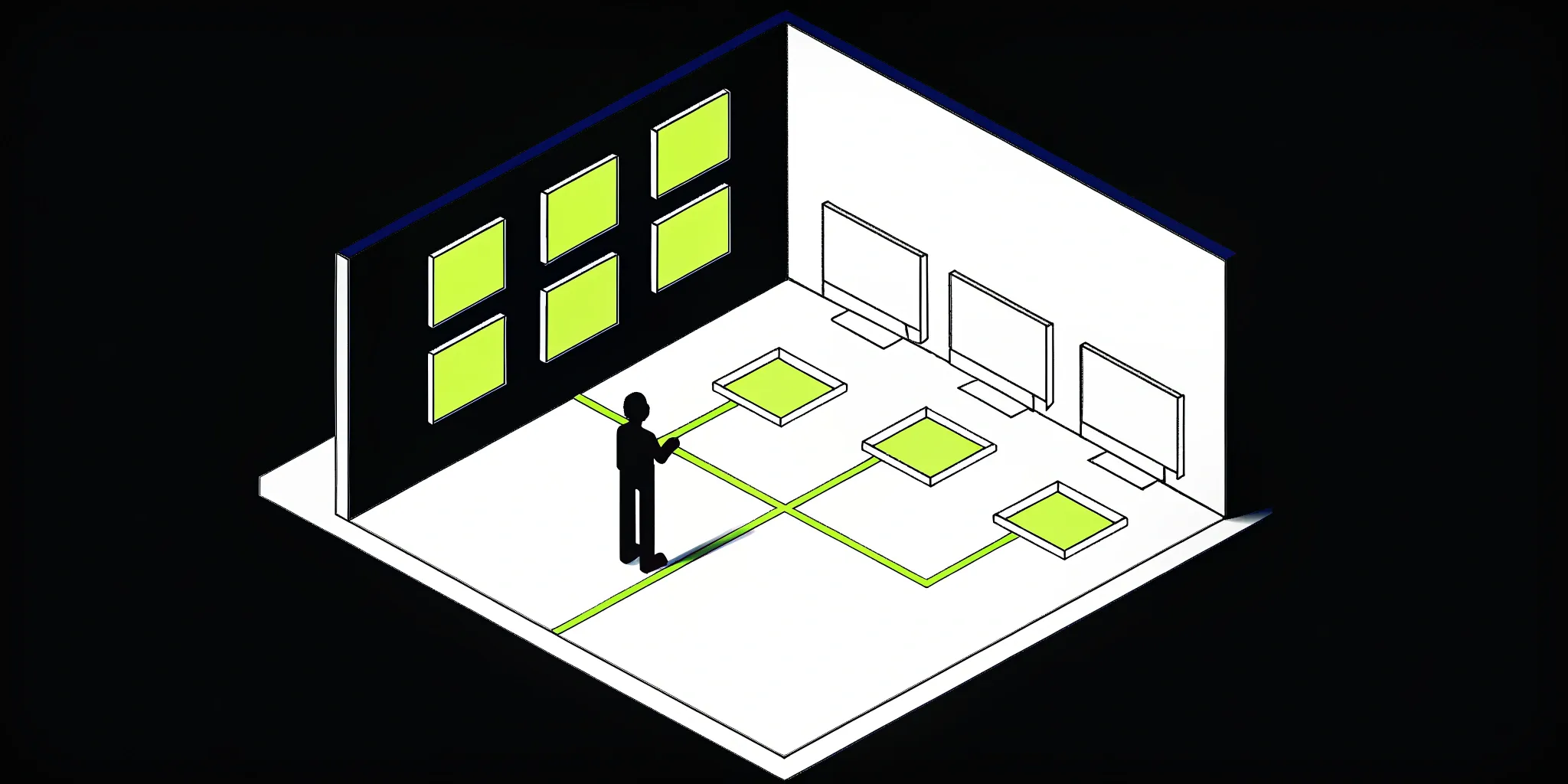

From alerts to intelligent action

Traditional monitoring tools

Siloed alerts and dashboards with no context: Legacy tools generate endless alerts without insight or automation.

- Can only report metrics, not explain root causes

- No reasoning or correlation across systems

- Teams waste time triaging false positives

- Zero ability to take action or adapt dynamically

Result:

Alert fatigue, slow incident response, and high operational overhead

AIOps with Cake

Proactive agents that observe, reason, and act: Cake lets you deploy AI agents that monitor, analyze, and take real-time actions.

- Understand patterns and context across logs, metrics, and traces

- Correlate signals to surface root causes automatically

- Take action through APIs, scripts, or playbooks

- Built-in observability, audit logs, and continuous learning

Result:

Faster resolution, reduced noise, and more resilient systems

EXAMPLE USE CASES

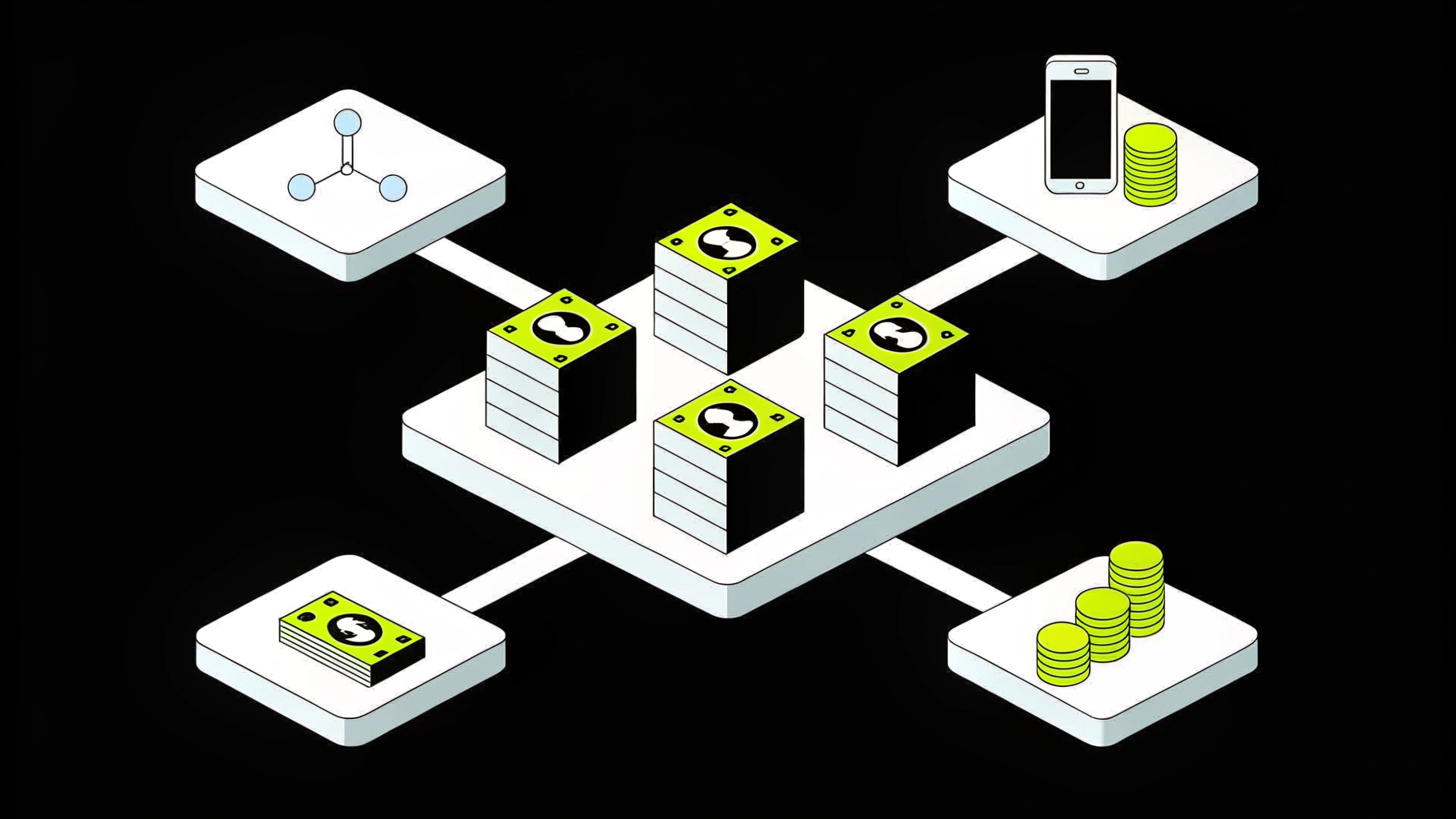


How teams use Cake’s AIOps infrastructure to streamline operations


Intelligent alert routing

Use LLMs to cluster, summarize, and prioritize alerts based on severity and context.
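To make the clustering step concrete, here is a minimal sketch that swaps the LLM for deterministic fingerprinting: volatile tokens such as numbers and hex IDs are normalized so repeats of the same alert collapse into one group, and groups are ranked by worst severity and then by size. The `Alert` class, the `SEVERITY_RANK` table, and the function names are hypothetical; in an LLM-backed pipeline, a model might then summarize each group rather than each raw alert.

```python
import re
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical severity ranking; a higher number means more urgent.
SEVERITY_RANK = {"critical": 3, "warning": 2, "info": 1}


@dataclass
class Alert:
    service: str
    severity: str
    message: str


def fingerprint(alert: Alert) -> tuple[str, str]:
    """Normalize volatile tokens (hex IDs, numbers) so repeated alerts share a key."""
    normalized = re.sub(r"0x[0-9a-f]+|\d+", "<n>", alert.message.lower())
    return (alert.service, normalized)


def prioritize(alerts: list[Alert]) -> list[tuple[tuple[str, str], int, str]]:
    """Return (fingerprint, count, worst_severity) groups, most urgent first."""
    groups: dict[tuple[str, str], list[Alert]] = defaultdict(list)
    for alert in alerts:
        groups[fingerprint(alert)].append(alert)
    ranked = []
    for key, members in groups.items():
        worst = max(members, key=lambda a: SEVERITY_RANK.get(a.severity, 0)).severity
        ranked.append((key, len(members), worst))
    # Sort by worst severity first, then by how many alerts collapsed into the group.
    ranked.sort(key=lambda g: (SEVERITY_RANK.get(g[2], 0), g[1]), reverse=True)
    return ranked


alerts = [
    Alert("api", "warning", "Latency 950ms above threshold"),
    Alert("api", "warning", "Latency 1200ms above threshold"),
    Alert("db", "critical", "Replica 2 lagging"),
]
for group in prioritize(alerts):
    print(group)
```

Here the two latency warnings fold into one group of size 2, but the single critical database alert still ranks first because severity outweighs volume.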


Automated diagnostics

Parse logs and telemetry in real time to identify root causes and suggest remediations.
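The sketch below shows the parsing half of that idea under simple assumptions. It uses a hypothetical one-line log format (`<ISO-8601 timestamp> <LEVEL> <service> <message>`), records each service's first error, and nominates the service that began failing earliest as the first suspect. "Earliest error" is a heuristic stand-in for LLM-based interpretation, not proof of causation.

```python
import re
from datetime import datetime
from typing import Optional

# Hypothetical log format: "<ISO-8601 timestamp> <LEVEL> <service> <message>"
LOG_LINE = re.compile(r"^(?P<ts>\S+) (?P<level>[A-Z]+) (?P<service>\S+) (?P<msg>.*)$")


def first_errors(lines: list[str]) -> dict[str, tuple[datetime, str]]:
    """Map each service to the timestamp and message of its earliest ERROR line."""
    firsts: dict[str, tuple[datetime, str]] = {}
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or match["level"] != "ERROR":
            continue  # skip malformed lines and non-error levels
        ts = datetime.fromisoformat(match["ts"].replace("Z", "+00:00"))
        service = match["service"]
        if service not in firsts or ts < firsts[service][0]:
            firsts[service] = (ts, match["msg"])
    return firsts


def likely_origin(lines: list[str]) -> Optional[str]:
    """Heuristic: the service that started erroring first is the first suspect."""
    firsts = first_errors(lines)
    if not firsts:
        return None
    return min(firsts, key=lambda service: firsts[service][0])


logs = [
    "2024-05-01T12:00:05Z ERROR checkout upstream payments returned 503",
    "2024-05-01T12:00:01Z INFO payments health check ok",
    "2024-05-01T12:00:03Z ERROR payments connection refused by db",
    "not a log line",
]
print(likely_origin(logs))
```

The checkout errors mention payments, and payments failed two seconds earlier, so the sketch points at payments. An LLM step could then read those first error messages and propose a remediation.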


LLM-powered runbooks

Trigger automated actions (e.g., scaling, restarting, or reconfiguring) based on AI-generated insights.
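One common safeguard when model output drives actions is to route every suggestion through an allowlist with a dry-run default and an audit trail. The following minimal sketch assumes the insight has already been reduced to an `(action, target)` pair. `RunbookExecutor`, `restart`, and `scale_up` are hypothetical names, and the handlers only return strings where a real runbook would call an orchestrator or cloud API.

```python
from dataclasses import dataclass, field
from typing import Callable


def restart(target: str) -> str:
    # Hypothetical handler: a real runbook would call an orchestrator API here.
    return f"restarted {target}"


def scale_up(target: str) -> str:
    # Hypothetical handler: a real runbook would adjust replica counts here.
    return f"scaled {target}"


@dataclass
class RunbookExecutor:
    """Executes only allowlisted actions and records every request for audit."""

    handlers: dict[str, Callable[[str], str]]
    dry_run: bool = True  # default to describing actions rather than performing them
    audit_log: list[dict] = field(default_factory=list)

    def execute(self, action: str, target: str) -> str:
        if action not in self.handlers:
            outcome = "rejected: action not allowlisted"
        elif self.dry_run:
            outcome = f"dry-run: would {action} {target}"
        else:
            outcome = self.handlers[action](target)
        # Every request, including rejected ones, is logged for later review.
        self.audit_log.append({"action": action, "target": target, "outcome": outcome})
        return outcome


executor = RunbookExecutor(handlers={"restart": restart, "scale_up": scale_up})
print(executor.execute("restart", "checkout-api"))
print(executor.execute("drop_database", "orders"))
```

Logging rejected requests alongside executed ones is what gives the incident lineage its value during an audit.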


Capacity forecasting for infrastructure scaling

Use historical usage patterns to anticipate when and where to scale cloud resources—preventing outages while optimizing spend.
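As a deliberately simple illustration, the sketch below fits an ordinary least-squares trend line to evenly spaced usage samples and estimates how many periods remain before that line reaches a capacity limit. The function names and sample numbers are hypothetical. Production forecasting would normally also model seasonality and uncertainty, which a straight line ignores.

```python
from typing import Optional


def fit_line(values: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of values against time index 0..n-1; returns (slope, intercept)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    var = sum((x - mean_x) ** 2 for x in range(n))
    slope = cov / var
    return slope, mean_y - slope * mean_x


def periods_until_capacity(values: list[float], capacity: float) -> Optional[float]:
    """Periods after the latest sample until the trend line hits capacity (None if it never does)."""
    slope, intercept = fit_line(values)
    if slope <= 0:
        return None  # flat or shrinking usage: no projected crossing
    crossing = (capacity - intercept) / slope  # time index where the trend reaches capacity
    return max(0.0, crossing - (len(values) - 1))  # already over capacity -> 0.0


# Hypothetical daily CPU utilization (%) for one cluster.
cpu = [50, 55, 60, 65, 70]
print(periods_until_capacity(cpu, capacity=90))
```

With usage growing 5 points a day from 70%, the trend reaches 90% in four more days. That number could feed a scaling decision before the outage occurs rather than after.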


Change impact analysis for safer deployments

Analyze past deployment data to predict the downstream effects of code or config changes before they hit production.
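A very small version of this analysis derives, for each component, the share of past deployments that touched it and were followed by an incident. It then scores a new change by the chance that at least one touched component fails. The record shape (`components`, `caused_incident`), the independence assumption between components, and the fallback `prior` for components with no history are all illustrative assumptions.

```python
from collections import defaultdict


def incident_rates(history: list[dict]) -> dict[str, float]:
    """Fraction of past deployments touching each component that were followed by an incident."""
    touched: dict[str, int] = defaultdict(int)
    failed: dict[str, int] = defaultdict(int)
    for deploy in history:
        for component in deploy["components"]:
            touched[component] += 1
            if deploy["caused_incident"]:
                failed[component] += 1
    return {c: failed[c] / touched[c] for c in touched}


def change_risk(components: list[str], rates: dict[str, float], prior: float = 0.1) -> float:
    """Probability that at least one touched component fails, assuming components fail
    independently. Components with no history use a hypothetical prior rate."""
    survive = 1.0
    for component in components:
        survive *= 1.0 - rates.get(component, prior)
    return 1.0 - survive


# Hypothetical deployment history.
history = [
    {"components": ["auth", "api"], "caused_incident": True},
    {"components": ["api"], "caused_incident": False},
    {"components": ["auth"], "caused_incident": False},
    {"components": ["billing"], "caused_incident": False},
]
rates = incident_rates(history)
print(change_risk(["auth", "api"], rates))
```

A change touching both `auth` and `api` scores 0.75 here, which a pipeline might use to require extra review or a canary rollout before production.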


Ticket triage and resolution acceleration

Automatically classify and route IT tickets based on urgency, scope, and similarity to past issues to reduce response times and backlog.
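Routing by similarity to past issues can be sketched with bag-of-words cosine similarity. A new ticket goes to the team that handled the most similar past ticket, or to a general triage queue when nothing is close enough. In practice, the word counts would likely be replaced by LLM embeddings stored in a vector database such as Milvus. The `route` function, the `min_score` threshold, and the team names below are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts over lowercase alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def route(ticket: str, past: list[tuple[str, str]], min_score: float = 0.2) -> str:
    """Assign the team of the most similar past ticket, or 'triage' if nothing is close."""
    query = vectorize(ticket)
    best_team, best_score = "triage", 0.0
    for text, team in past:
        score = cosine(query, vectorize(text))
        if score > best_score:
            best_team, best_score = team, score
    return best_team if best_score >= min_score else "triage"


# Hypothetical resolved tickets and the teams that handled them.
past_tickets = [
    ("VPN connection drops every few minutes", "network"),
    ("Password reset link expired", "identity"),
    ("Database backup job failed overnight", "data-platform"),
]
print(route("VPN keeps dropping my connection", past_tickets))
print(route("Printer is out of toner", past_tickets))
```

The fallback queue matters: a ticket unlike anything seen before should reach a human rather than being forced onto the nearest team.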

BLOG

How to build a scalable GenAI infrastructure in 48 hours (yes, hours)

Cake CTO and co-founder Skyler Thomas explains how Cake helped a team scale a secure, observable, and composable GenAI infrastructure in just 48 hours. Even better? No glue code or rewrites required.

BLOG

The last mile of machine learning: getting models into production

Learn how to effectively implement machine learning in production with practical tips and strategies for deploying, monitoring, and maintaining your models.

"Our partnership with Cake has been a clear strategic choice – we're achieving the impact of two to three technical hires with the equivalent investment of half an FTE."

Scott Stafford

Chief Enterprise Architect at Ping

"With Cake we are conservatively saving at least half a million dollars purely on headcount."

CEO

InsureTech Company

COMPONENTS


Tools that power Cake's AIOps stack

Milvus

Vector Databases

Milvus is an open-source vector database built for high-speed similarity search. Cake integrates Milvus into AI stacks for recommendation engines, RAG applications, and real-time embedding search.

Airflow

Orchestration & Pipelines

Apache Airflow is an open-source workflow orchestration tool used to programmatically author, schedule, and monitor data pipelines. Cake automates Airflow deployments within AI workflows, ensuring compliance, scalability, and observability.

Ray

Distributed Computing Frameworks

Ray is a distributed execution framework for building scalable AI and Python applications across clusters.

Langflow

Agent Frameworks & Orchestration

Langflow is a visual drag-and-drop interface for building LangChain apps, enabling rapid prototyping of LLM workflows.

Promptfoo

LLM Observability

LLM Optimization

Promptfoo is an open-source testing and evaluation framework for prompts and LLM apps, helping teams benchmark, compare, and improve outputs.

vLLM

Inference Servers

vLLM is an open-source library for high-throughput, low-latency LLM serving, using PagedAttention to manage GPU memory efficiently.

Langfuse

LLM Observability

Langfuse is an open-source observability and analytics platform for LLM apps, capturing traces, user feedback, and performance metrics.

Open WebUI

Visualization & Dashboards

Open WebUI is a user-friendly, open-source interface for managing and interacting with local or remote LLMs and AI agents.

LiteLLM

Observability & Monitoring

Inference Servers

Data Catalogs & Lineage

LiteLLM provides a lightweight wrapper for calling OpenAI, Anthropic, Mistral, and other LLMs through a common interface. It supports token metering, caching, and governance tools.

Frequently asked questions

What is AIOps?

AIOps (Artificial Intelligence for IT Operations) applies AI and machine learning to automate and enhance IT operations. It helps teams detect, diagnose, and resolve issues faster while reducing noise from alerts and improving system reliability.

How does Cake support AIOps?

Cake provides a secure, cloud-agnostic AI infrastructure that integrates leading observability, automation, and analytics tools. This allows you to build and scale AIOps workflows without getting locked into proprietary platforms.

What are the benefits of using Cake for AIOps?

With Cake, teams can accelerate deployment, streamline integrations, and improve the accuracy of incident detection. The platform’s modular architecture ensures you can add or swap components as your needs evolve.

Can Cake integrate with our existing monitoring and logging tools?

Yes. Cake is designed for composability, so you can connect it to your preferred observability stack—whether that’s Prometheus, Grafana, OpenTelemetry, or other tools—while keeping full control over your data.
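As an example of what such a connection involves on the metrics side, the sketch below builds a request for Prometheus's instant-query endpoint (`GET /api/v1/query`) and parses the documented instant-vector response into `(labels, value)` pairs. It uses only the Python standard library. The base URL `http://prometheus:9090` is a hypothetical address, and the helper names are illustrative rather than part of any Cake API.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def instant_query_url(base_url: str, promql: str) -> str:
    """Build a Prometheus HTTP API instant-query URL."""
    params = urlencode({"query": promql})
    return f"{base_url.rstrip('/')}/api/v1/query?{params}"


def parse_vector(body: str) -> list[tuple[dict, float]]:
    """Turn an instant-vector response body into (labels, value) pairs."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise RuntimeError(payload.get("error", "query failed"))
    data = payload["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected a vector result, got {data['resultType']}")
    # Each sample's "value" is [unix_timestamp, "string-encoded number"].
    return [(sample["metric"], float(sample["value"][1])) for sample in data["result"]]


def query(base_url: str, promql: str, timeout: float = 10.0) -> list[tuple[dict, float]]:
    """Run an instant query against a live Prometheus server (requires network access)."""
    with urlopen(instant_query_url(base_url, promql), timeout=timeout) as response:
        return parse_vector(response.read().decode("utf-8"))


# Example against a hypothetical in-cluster address: find scrape targets that are down.
# for labels, value in query("http://prometheus:9090", "up == 0"):
#     print(labels.get("job"), labels.get("instance"))
```

Keeping URL construction and response parsing separate from the network call makes the parsing logic easy to test against recorded responses.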

Is Cake compliant with enterprise security and regulatory requirements?

Absolutely. Cake meets rigorous security standards and can be configured to comply with frameworks such as SOC 2, HIPAA, and GDPR, depending on your operational environment.

How quickly can we get started with AIOps on Cake?

Most teams can go from proof of concept to production in weeks, not months, thanks to Cake’s pre-integrated open-source components and automated deployment workflows.

Learn more about AIOps powered by Cake

MLOps in Retail: A Practical Guide to Applications

Think of a brilliant machine learning (ML) model as a high-performance race car engine. It’s incredibly powerful, but on its own, it can’t get you...

Identify & Overcome AI Pipeline Bottlenecks: A Practical Guide

Your AI pipeline should be a superhighway for data, but too often it feels like a traffic jam during rush hour. A single slowdown, or bottleneck, can...

Save $500K–$1M Annually on Each LLM Project with Cake

Teams running LLM projects on Cake's platform are realizing significant cost advantages, saving between $500K and $1M annually per project. These...
