Linear vs Nonlinear Regression: A Practical Guide

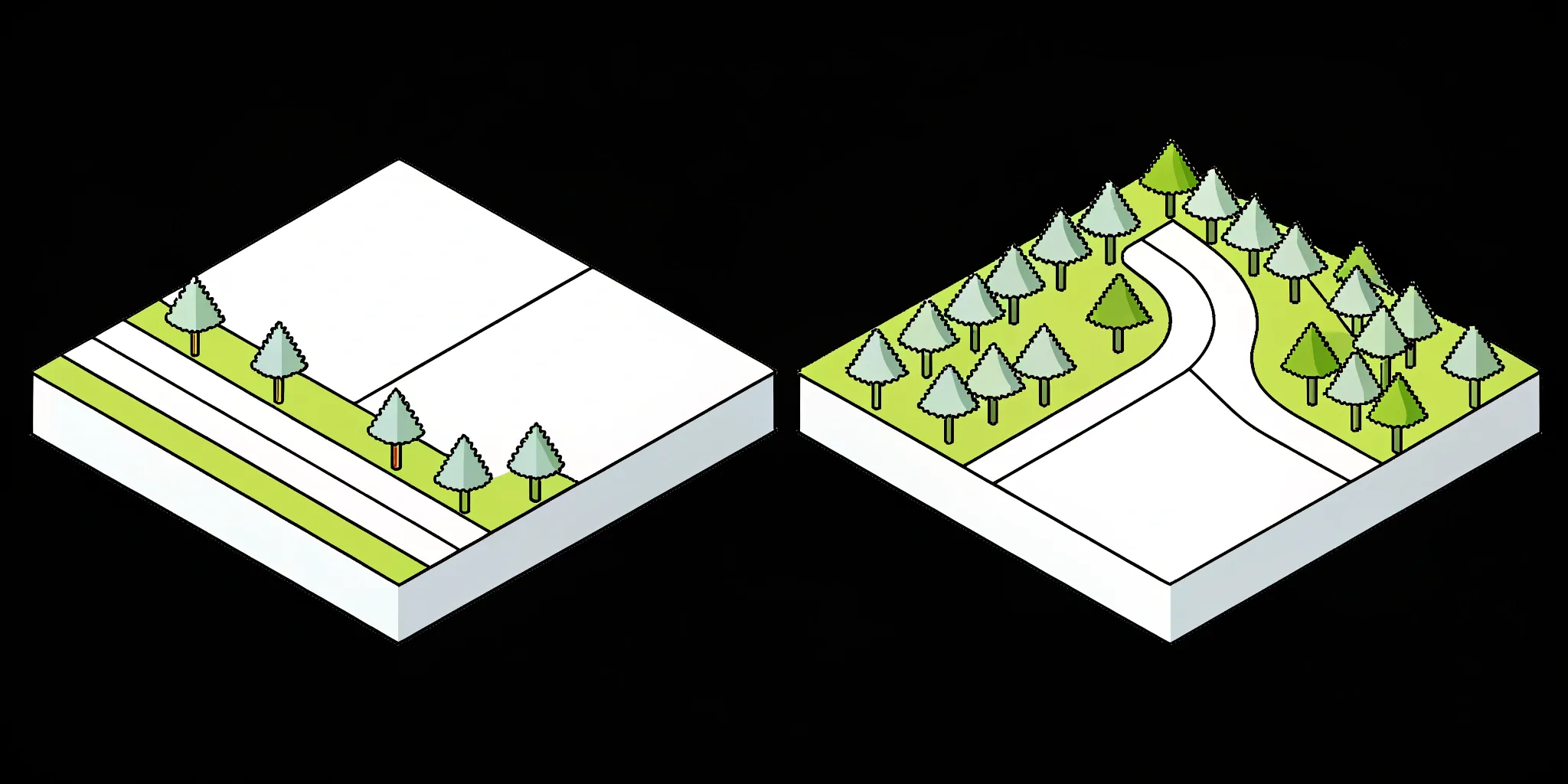

Some data tells a simple, straight-line story. Spend more on ads, sales go up. Easy. But other data has twists and turns, like a plant’s growth that starts slow, shoots up, and then levels off. Machine learning can model both. The trick is knowing which tool to use, and that choice is the heart of the linear vs nonlinear regression question. Linear regression is your go-to for straightforward patterns. For patterns it can't capture, even with transformed variables, you need a nonlinear model. This guide gives you a clear framework for deciding, so you capture the real story in your data without overcomplicating things.

Key takeaways

- Start with linear regression as your baseline: It's the simplest, fastest, and most interpretable option. Only move to a nonlinear model if you can visually confirm a curved relationship in your data that a straight line fails to capture.

- Plot your data to guide your choice: A simple scatter plot is your best tool for understanding the relationship between your variables. After building your model, use validation techniques to confirm it works well on new data, not just the data it was trained on.

- Prioritize interpretability for business decisions: A highly accurate but unexplainable model is hard to act on. A slightly less precise linear model that provides clear, actionable insights is often the more valuable choice for your team.

So what is regression in machine learning?

Think of regression analysis as a way to play detective with your data. It’s a statistical method that helps you understand the relationship between different factors. At its core, it looks at how a main outcome you care about (the dependent variable) changes when other contributing factors (the independent variables) change. For example, you could use regression to determine how much your ice cream sales (the outcome) increase for every degree the temperature rises (the contributing factor).

This process is fundamental to predictive modeling and is a cornerstone of machine learning. By identifying these relationships, you can build models that make forecasts, helping you answer critical business questions and plan for the future. Managing these kinds of AI initiatives can feel complex, but understanding the basic building blocks like regression is the first step. There are two main flavors of regression we'll be focusing on: linear and nonlinear. Each has its own way of looking at the data, and choosing the right one is key to getting accurate and useful results.

Why you should care about regression analysis

So, why should you care about regression? Because it helps you find meaningful patterns in your data and shows which factors have the biggest impact on your results. It’s a powerful tool for moving beyond simple guesswork and making data-driven decisions. Regression is used across countless fields—from finance and economics to biology and engineering—to make predictions and guide strategy. Whether you're trying to predict stock prices, forecast customer demand, or optimize a manufacturing process, regression analysis provides a framework for understanding the forces at play and anticipating future outcomes.

The core concepts you'll need to know

The two main types of regression, linear and nonlinear, approach relationships differently. Linear regression assumes a straight-line relationship between your variables. Think of it as drawing the best-fitting straight line through your data points. Nonlinear regression, on the other hand, is used when the relationship follows a curve. It’s more flexible and can model more complex patterns. Understanding the difference between these models is crucial because picking the wrong one can lead to inaccurate predictions. The right choice always depends on the underlying patterns in your data.

Let's clear up some common myths

It’s a common mistake to think that linear models can only produce straight lines and that you always need a nonlinear model to fit a curve. In reality, both types of models can fit curves to your data. The "linear" in linear regression refers to the way the model’s parameters are structured, not necessarily the shape of the line it draws. You can add terms to a linear model (like squared variables) to help it fit a curve. This is an important distinction to remember as you start building and evaluating your own models.

How does linear regression actually work?

Linear regression is often the first model you learn in machine learning, and for good reason. It’s straightforward, easy to interpret, and provides a solid foundation for understanding more complex models. At its core, linear regression tries to find the best possible straight line to describe the relationship between two or more variables. Think of it as drawing a trend line through a scatter plot of your data. This approach is incredibly useful for making predictions, like forecasting sales based on ad spend or estimating a house price based on its square footage. It’s a powerful tool for finding clear, linear patterns in your data.

Because it's so transparent, it's not just a predictive tool; it's also a great way to understand the strength and direction of relationships within your dataset. For businesses, this means you can confidently answer questions like, "For every extra dollar we spend on marketing, how much additional revenue can we expect?" This clarity makes it an essential part of any data scientist's toolkit and a reliable first step before moving on to more complex algorithms. It sets a performance baseline, helping you judge whether a more sophisticated model is actually providing a meaningful improvement.

Its building blocks and key assumptions

Before you can rely on a linear regression model, you need to make sure your data plays by the rules. The model operates on a few key assumptions about the relationship between your variables. The most important one is linearity: the model assumes the outcome changes at a steady rate as each input (or transformed input) changes. If your data points form a curve and you fit a plain straight line to them, the fit will be poor. It also assumes that your data points are independent of each other and that the errors, meaning the vertical distances from the data points to the line, have a consistent spread across the whole range of the data. Understanding these foundational assumptions is crucial for building a reliable model.

The assumption of independent observations

Another critical rule is that your observations need to be independent. This just means that one data point in your set shouldn't have any influence over another. For example, if you're analyzing customer purchases, the fact that Customer A bought a product shouldn't directly cause Customer B to buy the same product. Each observation should be a unique, standalone event, as if it were drawn using a simple random sample. When this assumption is violated—a common issue in time-series data where today's value depends on yesterday's—it can lead to a problem called autocorrelation. This essentially means your model's errors become predictable, which undermines its reliability and can give you a false sense of confidence in your predictions. Making sure your observations are truly independent is fundamental to building a trustworthy model.

A quick peek at the math involved

You don’t need to be a math whiz to understand how linear regression works. It all comes down to finding the one straight line that best fits the data. The model uses a simple equation you might remember from school: y = (slope * x) + (y-intercept). It calculates the slope and y-intercept for a line that cuts through your data points, getting as close to as many of them as possible. To do this, it uses a method called "least squares," which minimizes the sum of the squared vertical distances between each data point and the line. Squaring keeps positive and negative misses from canceling out and penalizes large misses more heavily. The result is the line with the smallest total squared error on the data you fit it to.
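To make the least squares idea concrete, here is a minimal sketch in Python using NumPy. The `fit_line` helper and the ad spend and sales numbers are made up for illustration; they are not from a real dataset.

```python
import numpy as np

def fit_line(x, y):
    """Return (slope, intercept) of the least-squares line through (x, y)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = x.mean(), y.mean()
    # Slope = co-variation of x and y divided by the variation in x.
    slope = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    # The least-squares line always passes through the point of means.
    intercept = y_mean - slope * x_mean
    return slope, intercept

# Hypothetical data: ad spend (in $1,000s) and sales (in units).
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [12.0, 15.0, 19.0, 20.0, 25.0]

slope, intercept = fit_line(ad_spend, sales)
print(f"sales = {slope:.2f} * ad_spend + {intercept:.2f}")
# prints: sales = 3.10 * ad_spend + 8.90
```

Read the slope as "each extra $1,000 of ad spend goes with about 3.1 more units sold" in this toy dataset.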

Understanding what "linear in its parameters" means

This is where things can get a little confusing, but it's a really important concept. When we say a model is "linear," we're not talking about the final shape it draws. We're talking about how the model's equation is built. A regression model is considered linear in its parameters if the equation is a simple sum of terms. Each term is just a parameter (a value the model learns) multiplied by a variable. No parameter is multiplied or divided by another parameter, used as an exponent, or placed inside a function like a logarithm. This simple structure is what makes the model "linear." You can still model a curve by changing the variables themselves, for instance by including a squared term like x². The model remains linear because the parameter attached to x² is still just a multiplier on a term that gets added to the rest of the equation.
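Here is a small sketch of that idea, assuming NumPy and synthetic, noise-free data. The model draws a curve, yet it is solved with ordinary least squares because it is just a weighted sum of the columns 1, x, and x².

```python
import numpy as np

# Synthetic data that follows a curve: y = 2 + 0.5*x - 0.3*x^2 (no noise, for clarity).
x = np.linspace(-3, 3, 25)
y = 2.0 + 0.5 * x - 0.3 * x**2

# Design matrix: one column per term. The columns are transformed versions of x,
# but the model is still a plain weighted sum: y = b0*1 + b1*x + b2*x^2.
X = np.column_stack([np.ones_like(x), x, x**2])

# Ordinary least squares solves for the weights directly.
coeffs, *_ = np.linalg.lstsq(X, y, rcond=None)
b0, b1, b2 = coeffs
print(f"b0={b0:.2f}, b1={b1:.2f}, b2={b2:.2f}")
```

The fit recovers the three weights used to generate the data, even though a plot of the result would be a parabola rather than a straight line.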

Why its simplicity is a major strength

One of the biggest advantages of linear regression is its simplicity. The results are easy to understand and explain to people who aren't data scientists, which is a huge plus in any business setting. You can clearly state how a change in one variable is expected to affect another. This high level of model interpretability helps build trust with stakeholders and makes it easier to act on the insights you uncover. Plus, linear regression is computationally light, meaning it doesn't require a ton of processing power. You can run it quickly, making it a great choice for initial data exploration or when you need a fast, baseline model.

How to handle its limitations

Linear regression is a fantastic tool, but it has its weaknesses. Its biggest vulnerability is its sensitivity to outliers—data points that are far away from the rest of the group. A single extreme value can pull the entire line of best fit toward it, skewing your results and leading to inaccurate predictions. Imagine one mansion in a neighborhood of average-sized homes; it would throw off the price prediction for everyone else. To handle this, you can use techniques to identify and manage outliers before building your model. This might involve removing them if they're errors or using more robust regression methods that are less influenced by extreme values.
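A quick sketch of the mansion effect, using NumPy and invented home sizes and prices. It fits the same straight line twice, once without and once with a single extreme point.

```python
import numpy as np

# Hypothetical home sizes (sq ft) and prices ($1,000s), invented for illustration.
size = np.array([1000, 1200, 1400, 1600, 1800, 2000], dtype=float)
price = np.array([200, 235, 270, 300, 340, 370], dtype=float)

# np.polyfit with deg=1 returns [slope, intercept].
slope_clean, intercept_clean = np.polyfit(size, price, deg=1)

# Add one mansion that is far from the rest of the neighborhood.
size_out = np.append(size, 6000.0)
price_out = np.append(price, 3000.0)
slope_out, intercept_out = np.polyfit(size_out, price_out, deg=1)

print(f"slope without the mansion: {slope_clean:.3f}")
print(f"slope with the mansion:    {slope_out:.3f}")
```

In this toy example one extra point more than triples the estimated price per square foot, which would distort predictions for every ordinary home.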

How to spot and fix common problems

Building a model is one thing, but trusting its results is another. Before you put your linear regression model to work, you need to do a little detective work to make sure it’s actually a good fit for your data. This means running a few diagnostic checks to spot potential issues. Luckily, there are some straightforward visual tools and techniques that can help you identify problems like non-linearity or other violated assumptions. Think of it as a quick health check for your model to ensure its predictions are reliable and accurate before you base any important decisions on them.

Using residual plots to check for non-linearity

One of the most useful diagnostic tools is the residual plot. Residuals are simply the errors your model makes—the difference between the predicted values and the actual values. When you plot these errors, they should look completely random, like a shapeless cloud of dots scattered around the zero line. If you see any kind of pattern, like a U-shape or a curve, that’s a major red flag. It tells you that your linear model is missing a more complex, curved relationship in the data. A pattern in your residuals means your model is biased, and its predictions won't be consistently accurate across the board.
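You would normally eyeball a residual plot, but the same check can be sketched numerically. The example below assumes NumPy and synthetic data with a deliberately curved relationship; it fits a straight line anyway and averages the residuals over three slices of x.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the sketch is repeatable

# Synthetic curved data: the true relationship is quadratic, plus a little noise.
x = np.linspace(0, 10, 60)
y = 1.0 + 0.5 * x**2 + rng.normal(scale=1.0, size=x.size)

# Fit a straight line anyway and compute residuals (actual minus predicted).
slope, intercept = np.polyfit(x, y, deg=1)
residuals = y - (slope * x + intercept)

# Average the residuals over the low, middle, and high thirds of x.
# For a well-specified model all three averages should hover near zero.
low, mid, high = (chunk.mean() for chunk in np.array_split(residuals, 3))
print(f"mean residual: low={low:.2f}, mid={mid:.2f}, high={high:.2f}")
```

Positive averages at both ends and a negative average in the middle are the numeric version of the U-shape described above.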

Using QQ plots to check for normality

Another key assumption of linear regression is that the residuals are "normally distributed," meaning they follow a classic bell-curve shape. A great way to check this is with a Quantile-Quantile plot, or QQ plot. This chart compares your residuals to what they *should* look like if they were perfectly normally distributed. In a perfect scenario, your data points will form a neat, straight diagonal line on the plot. If you see the points straying from the line, especially in an S-shape or a curve at the ends, it suggests your residuals aren't normal. This can affect the validity of your model's confidence intervals and hypothesis tests.
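The points behind a QQ plot are simple to compute yourself. This sketch uses NumPy plus `NormalDist` from Python's standard library; the `qq_points` and `qq_correlation` helpers are illustrative names, and the two residual samples are synthetic.

```python
import numpy as np
from statistics import NormalDist

def qq_points(residuals):
    """Return (theoretical, observed) quantile pairs for a normal QQ plot."""
    r = np.sort(np.asarray(residuals, dtype=float))
    n = r.size
    # Plotting positions (i - 0.5) / n avoid the endpoints 0 and 1,
    # where the normal quantile is infinite.
    probs = (np.arange(1, n + 1) - 0.5) / n
    theoretical = np.array([NormalDist().inv_cdf(p) for p in probs])
    # Standardize so observed and theoretical quantiles share a scale.
    observed = (r - r.mean()) / r.std(ddof=1)
    return theoretical, observed

def qq_correlation(residuals):
    """Correlation between the two quantile sets; near 1 means points hug the diagonal."""
    theoretical, observed = qq_points(residuals)
    return float(np.corrcoef(theoretical, observed)[0, 1])

rng = np.random.default_rng(1)
normal_resid = rng.normal(size=500)
skewed_resid = rng.exponential(size=500)  # deliberately non-normal

print("normal:", round(qq_correlation(normal_resid), 3))
print("skewed:", round(qq_correlation(skewed_resid), 3))
```

Plotting `theoretical` against `observed` gives the QQ plot itself. The correlation is only a rough one-number summary, so treat it as a companion to the picture rather than a replacement.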

Applying transformations to fix model issues

So what do you do if your diagnostic plots reveal problems? You don't always have to scrap the linear model and start over with something more complex. Often, you can apply a mathematical transformation to one of your variables to fix the issue. For example, if your data shows a curve, taking the logarithm or the square root of a variable can sometimes straighten out the relationship. This allows your simple linear model to capture the pattern effectively. It’s a powerful technique that can improve your model’s accuracy while keeping it easy to interpret, giving you the best of both worlds.
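As a sketch of how a transformation can straighten a curve, the example below (NumPy, synthetic noise-free data) takes the logarithm of an exponentially growing outcome and then fits an ordinary straight line to it.

```python
import numpy as np

# Synthetic data with exponential growth: y = 3 * exp(0.4 * x), no noise for clarity.
x = np.linspace(0, 10, 30)
y = 3.0 * np.exp(0.4 * x)

# Taking the log of y turns the curve into a line: log(y) = log(3) + 0.4 * x.
slope, intercept = np.polyfit(x, np.log(y), deg=1)

growth_rate = slope          # estimate of 0.4
scale = np.exp(intercept)    # estimate of 3.0
print(f"y ~ {scale:.2f} * exp({growth_rate:.2f} * x)")
# prints: y ~ 3.00 * exp(0.40 * x)
```

One caveat: after the transformation, the slope describes change in log(y), so it reads as a growth rate rather than a fixed number of units per step.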

How nonlinear regression tackles complex data

Not every pattern in your data follows a straight line. Think of pricing airline tickets: as the flight date approaches, prices may stay flat for weeks, spike suddenly, drop again during a sale, and then surge at the last minute. A simple line won’t capture that kind of fluctuation.

This is where nonlinear regression comes in. It’s designed to model more intricate, curved relationships that change in ways a straight line can’t capture. Unlike linear regression, which assumes a constant rate of change, nonlinear regression lets the relationship between variables bend and shift, which makes it ideal for modeling real-world systems where change isn’t always predictable or smooth.

With nonlinear models, you can represent a wide variety of behaviors, such as plateaus, spikes, and dips, giving you the flexibility to work with data that doesn’t play by linear rules. Whether you’re forecasting customer behavior, modeling energy consumption, or estimating demand across different conditions, nonlinear regression gives you the tools to build a more accurate and responsive model.

Exploring different nonlinear models

When you step into the world of nonlinear regression, you’ll find it’s not a single model but a whole family of them. Each one is designed to fit a different type of curve. For example, exponential regression suits situations involving rapid growth or decay, like population studies. Other types, like logarithmic models, are useful when the effect of a variable tapers off as its value increases. Polynomial regression, which can model data with multiple bends, often gets grouped with this family too, although it is technically still linear in its parameters.

The key is to visualize your data first. A simple scatter plot can often give you clues about the shape of the relationship. From there, you can start exploring which type of nonlinear model might be the best fit. The goal is to find a model that accurately captures the underlying curved relationships without being overly complex.

The math that creates the curves

So, what actually makes a model "nonlinear"? It all comes down to the math. In linear regression, the equation is a simple sum of terms, where each parameter is a coefficient. Nonlinear models are much more flexible because their equations aren't so restricted. The parameters can show up in all sorts of places—as exponents, inside logarithmic functions, or as divisors.

This mathematical freedom is what allows nonlinear models to fit such a huge variety of curves. They can bend and shape themselves to the data in ways a straight line never could. You don't need to be a math whiz to use them, but it helps to understand that this flexibility is their core strength. It’s what makes them so effective at modeling the complex, real-world phenomena that don't always follow a simple path. For those curious about the specifics, there are great resources that explain the difference between linear and nonlinear equations.

Examples of nonlinear model equations

Seeing a few examples can make the difference between linear and nonlinear equations click. For instance, a polynomial model might look like y = (a * x²) + (b * x) + c. By adding that squared term, you give the model the ability to create a curve with a single bend, useful for modeling things like the relationship between advertising spend and sales, which might see diminishing returns. Even though it draws a curve, each parameter (a, b, and c) is simply multiplied by a term and added up, so this model is still linear in its parameters. An exponential model, on the other hand, could be y = a * (b^x). Here the parameter `b` is raised to the power `x`, so the model is genuinely nonlinear in its parameters. This is the go-to for modeling rapid growth or decay, like a viral marketing campaign. Each of these functions gives you a different tool to capture the unique shape of your data.

The challenge of estimating parameters

With great flexibility comes great responsibility—and a few challenges. Finding the best parameters for a nonlinear model is typically harder than for a linear one. Linear regression often has a straightforward method to find the best-fit line. Nonlinear regression, on the other hand, usually relies on an iterative process. The algorithm starts with a guess for the parameters and then gradually refines them over many steps to minimize the error.

This process can be more computationally intensive and time-consuming. It also means you might need to provide good starting values for the parameters to help the algorithm find the best solution. Because of this complexity, creating nonlinear models requires more careful setup and a deeper understanding of the underlying model. This is where having a robust AI platform can make a huge difference by managing the computational load and streamlining the modeling process.

How to keep model complexity in check

Because nonlinear models are so adaptable, there's a risk of making them too complex. This is known as overfitting, where the model learns the noise in your training data instead of the underlying trend. An overfit model might look perfect on the data it was trained on, but it will perform poorly when it sees new, unseen data.

To manage this, always start with the simplest model that could plausibly fit your data. Visualizing your data on a scatter plot is a great first step. It’s also crucial to use validation techniques, like splitting your data into training and testing sets, to check how well your model generalizes. Picking the right curve can take some trial and error, but focusing on balance is key. You want a model that is complex enough to capture the true relationship but simple enough to be stable and useful.
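Here is a minimal sketch of the train/test idea, assuming NumPy and synthetic data with a gentle quadratic trend. It fits polynomials of increasing degree on 40 points and scores each one on 20 held-out points. The degrees and split sizes are arbitrary choices for illustration.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(42)

# Synthetic data: a quadratic trend plus noise.
x = rng.uniform(-1, 1, size=60)
y = 1.0 + 2.0 * x - 1.5 * x**2 + rng.normal(scale=0.3, size=x.size)

# Hold out the last 20 points; the model never sees them during fitting.
x_train, y_train = x[:40], y[:40]
x_test, y_test = x[40:], y[40:]

def mse(model, xs, ys):
    """Mean squared error of a fitted model on the given points."""
    return float(np.mean((model(xs) - ys) ** 2))

results = {}
for degree in (1, 2, 12):
    model = Polynomial.fit(x_train, y_train, deg=degree)
    results[degree] = (mse(model, x_train, y_train), mse(model, x_test, y_test))
    train_err, test_err = results[degree]
    print(f"degree {degree:2d}: train MSE={train_err:.3f}, test MSE={test_err:.3f}")
```

Training error can only go down as the degree goes up, which is exactly why it can't be trusted on its own. The number to watch is the test error: when it stops improving or gets worse while training error keeps shrinking, the extra flexibility is fitting noise.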

Linear regression vs nonlinear regression: a head-to-head comparison

Choosing between linear and nonlinear regression is like deciding between a ruler and a flexible curve to trace a pattern. Linear regression tries to draw a single straight line through your data, while nonlinear regression can bend and adapt to fit more complex shapes. This fundamental difference has major implications for your AI project, influencing everything from accuracy to how you interpret the results. A linear model is wonderfully straightforward, but it can fall short if the real-world relationship you're modeling isn't a straight line.

On the other hand, a nonlinear model can capture those intricate, real-world patterns but often requires more computational power and can be harder to explain. In this section, we'll compare these two approaches side-by-side. We'll look at how each one sees data patterns, their relative flexibility, and what they demand from your computer. We'll also cover how to make sense of their results and understand their limitations, especially when it comes to common performance metrics. This will give you a clear framework for deciding which model is the right tool for your job.

How they interpret data patterns

Think of linear regression as a tool that's always looking for a steady, proportional relationship. It assumes that as one variable changes, the other changes at a constant rate. This is perfect for many straightforward scenarios, like predicting how temperature affects ice cream sales—as it gets hotter, sales go up in a fairly predictable, straight-line fashion. It’s simple, clean, and effective when the underlying pattern is also simple.

Nonlinear regression is what you reach for when a straight line just won’t do. It’s designed to find curved relationships in your data. For example, the effect of practice time on skill improvement isn't always linear; you might see rapid gains at first, followed by slower progress as you approach mastery. A nonlinear model can accurately capture this kind of diminishing return, providing a much more realistic picture of the data.

Comparing their flexibility and assumptions

The core difference in flexibility comes down to the math behind the models. Linear models are built on a rigid structure where the parameters—the values the model learns from the data—are combined in a simple, additive way. This structure is a key feature, as it makes the model stable and its behavior predictable. It operates under a clear set of rules that don't change.

Nonlinear models are far more flexible because their equations aren't so constrained. Parameters can be multiplied, raised to powers, or nested inside other functions, allowing the model to form a wide variety of curves. This adaptability is powerful, but it also means these models don't adhere to the same key assumptions as their linear counterparts. You have to be mindful of this when you're building and evaluating them to ensure your results are sound.

How they find the best fit

Both linear and nonlinear models have the same goal: to find the parameters that make the model fit the data as closely as possible. However, they take very different paths to get there. Linear regression has a direct, straightforward method for finding the single best solution. Nonlinear regression, on the other hand, uses a more exploratory approach, starting with a guess and refining it over and over until it lands on a solution. This difference in methodology is a major factor in their respective speed and computational requirements.

Solving linear models with ordinary least squares (OLS)

To find the best-fitting line, linear regression typically uses a method called ordinary least squares, or OLS. This sounds technical, but the idea is simple: OLS calculates the one unique line that minimizes the sum of the squared vertical distances between each data point and the line itself. It's an elegant, direct calculation that solves for the best parameters with a formula, with no guessing and no trial and error. Because it's a direct solution, it's also fast and efficient, which is part of why linear regression is so handy for quick predictions like forecasting sales based on ad spend or estimating a house price based on its square footage.

Solving nonlinear models with iterative methods

Finding the best parameters for a nonlinear model is typically harder than for a linear one. Since there's no simple, one-step formula, nonlinear regression usually relies on an iterative process. The algorithm starts with a guess for the parameters and then gradually refines them over many steps to minimize the error. It's like searching for the lowest point in a valley by taking small steps downhill until you can't go any lower. This process requires more computational power and can be sensitive to the initial guess. Managing these more intensive AI initiatives is where a streamlined platform can be a huge help, handling the heavy lifting so your team can focus on the modeling itself.
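To show what "start with a guess and refine it" looks like, here is a bare-bones sketch of one classic iterative method, Gauss-Newton with simple step halving, for the model y = a * exp(b * x). It assumes NumPy and synthetic noise-free data. The `fit_exponential` function is written for illustration only; in practice you would usually reach for a library routine rather than hand-rolling the loop.

```python
import numpy as np

def fit_exponential(x, y, a0, b0, max_iter=100, tol=1e-10):
    """Fit y = a * exp(b * x) by Gauss-Newton. a0 and b0 are the starting guesses."""
    def sse(a, b):
        # Sum of squared errors for a candidate (a, b).
        return float(np.sum((y - a * np.exp(b * x)) ** 2))

    a, b = float(a0), float(b0)
    for iteration in range(1, max_iter + 1):
        residual = y - a * np.exp(b * x)
        # Jacobian: how the prediction changes with a (first column) and b (second).
        J = np.column_stack([np.exp(b * x), a * x * np.exp(b * x)])
        # Solve the linearized least-squares problem for the parameter step.
        step, *_ = np.linalg.lstsq(J, residual, rcond=None)
        if np.linalg.norm(step) < tol:
            break  # the parameters have stopped moving
        # Halve the step until it actually lowers the error (simple damping).
        scale = 1.0
        while sse(a + scale * step[0], b + scale * step[1]) >= sse(a, b) and scale > 1e-6:
            scale /= 2.0
        a, b = a + scale * step[0], b + scale * step[1]
    return a, b, iteration

x = np.linspace(0, 4, 20)
y = 2.0 * np.exp(0.5 * x)  # synthetic data generated with a=2.0, b=0.5

a, b, n_iter = fit_exponential(x, y, a0=1.0, b0=0.3)
print(f"a={a:.4f}, b={b:.4f} after {n_iter} iterations")
```

Notice that each pass through the loop solves a small linear least-squares problem. An iterative fit is essentially a sequence of linear fits, which is why it costs more and why a poor starting guess can send it somewhere unhelpful.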

A practical look at the pros and cons

When you're deciding between a linear and a nonlinear model, there's no single "best" choice—it's all about tradeoffs. Linear regression offers speed and clarity, making it a fantastic starting point for almost any project. Nonlinear regression provides flexibility and power, allowing you to capture complex patterns that a straight line would miss. Understanding the specific advantages and disadvantages of each will help you choose the right tool for your data and your business goals, ensuring you strike the right balance between accuracy and interpretability.

Advantages and disadvantages of linear regression

One of the biggest advantages of linear regression is its simplicity. The results are easy to understand and explain to people who aren't data scientists, which is a huge plus in any business setting. You can clearly state how a change in one variable is expected to affect another. This interpretability, combined with its computational speed, makes it an excellent baseline model. The main disadvantage, of course, is its rigidity. If the true relationship in your data is curved, a linear model will fail to capture it, leading to an inaccurate representation of reality and poor predictive performance.

Advantages and disadvantages of nonlinear regression

Nonlinear models are far more flexible because their equations aren't so constrained. Parameters can be multiplied, raised to powers, or nested inside other functions, allowing the model to form a wide variety of curves. This adaptability is powerful, letting you accurately model complex, real-world systems. However, this flexibility comes at a cost. These models are more computationally expensive, can be prone to overfitting if not handled carefully, and their results are often much harder to interpret. Explaining the precise impact of one variable can be difficult when its effect changes depending on its own value and the values of other variables in the model.

What they demand from your computer

When it comes to computational resources, linear regression is the lightweight champion. The process for finding the best-fitting straight line is mathematically direct and can be solved very efficiently. This makes it an excellent choice for quick analyses, establishing a baseline model, or working on projects where you have limited computing resources. It gets you answers fast without needing a supercomputer.

Nonlinear regression, however, is more computationally intensive. Finding the best curve is often an iterative process, where the algorithm tests many different parameter values again and again to zero in on the optimal fit. This can take much more time and processing power, especially with large datasets. For these demanding tasks, having a robust infrastructure like the one provided by Cake is crucial for managing the workload and getting results in a reasonable timeframe.

How they handle errors differently

One of the most common hurdles with nonlinear models is figuring out how well they're actually performing. For linear regression, we have go-to metrics like R-squared, which tells us what share of the variation in the outcome the model explains. These metrics are reliable because linear models are built on a foundation of well-understood statistical properties.

With nonlinear models, these familiar metrics can be deceptive. R-squared relies on splitting the total variation cleanly into an explained part and an unexplained part, and that split is only guaranteed for linear models fit by least squares. P-values for the parameters are likewise only approximate. This doesn't mean you're flying blind; it just means you need a different toolkit for evaluation. You might rely more on visual diagnostics, like plotting the residuals, or use error measures and statistical tests that are better suited for the specific type of nonlinear model you're working with.
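For reference, here is a short sketch of R-squared next to root mean squared error (RMSE), one common error measure that makes no assumption about the kind of model that produced the predictions. It assumes NumPy; the function names and the four data points are made up for illustration.

```python
import numpy as np

def r_squared(y_true, y_pred):
    """1 - SS_res / SS_tot: the share of variation in y accounted for by the predictions."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return float(1.0 - ss_res / ss_tot)

def rmse(y_true, y_pred):
    """Root mean squared error, expressed in the same units as y."""
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_pred = np.array([2.5, 5.5, 6.5, 9.5])

print(f"R-squared = {r_squared(y_true, y_pred):.2f}")  # prints: R-squared = 0.95
print(f"RMSE      = {rmse(y_true, y_pred):.2f}")       # prints: RMSE      = 0.50
```

RMSE reads as "predictions are typically off by about this much," which stays meaningful whether the model is a straight line or a curve.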

How easy is it to interpret the results?

A huge advantage of linear regression is how easy it is to explain. The results are intuitive, even for people without a background in statistics. You can make clear statements like, "For every additional year of experience, salary increases by an average of $5,000." Each variable has a distinct, quantifiable impact that’s simple to communicate and act on.

Nonlinear regression results are typically much harder to translate into simple terms. Because the relationship between variables isn't constant, you can't make such straightforward statements. The impact of one variable might change depending on the value of another. This trade-off between predictive power and model interpretability is a critical strategic decision. Sometimes, a slightly less accurate model that everyone can understand is more valuable than a highly complex one that nobody can.

How to choose between linear and nonlinear models

Deciding between a linear and nonlinear model isn't about finding the "best" model in a vacuum, but about finding the best model for your specific data and goals. The right choice depends on the underlying patterns in your dataset and what you're trying to predict. A simpler model that accurately captures the trend is usually better than a complex one that's hard to interpret and maintain. Think of it as choosing the right tool for the job: you don’t use a map of one city to navigate another. Let's walk through how to pick the right approach for your project.

When to use a linear regression model

You should reach for linear regression when the relationship between your variables looks like a straight line. If you can plot your data points and see a clear, steady trend—as one variable goes up, the other goes up or down in a consistent way—linear regression is your best starting point. It’s simpler to build, runs faster, and the results are much easier to explain to stakeholders. This is where linear's simplicity pays off. It works beautifully when you're modeling straightforward relationships, like predicting an increase in sales for every dollar spent on advertising. Always start here unless you have a strong reason to believe the relationship is more complex.

Real-world applications: risk assessment and economic forecasting

In fields like finance and economics, regression is the backbone of decision-making. For risk assessment, a linear model is often a good fit for the job. A bank, for instance, can use it to estimate the expected loss on a loan based on clear factors like income and credit score. (When the outcome is a yes-or-no event such as default, the closely related logistic regression is the usual choice.) The relationship is relatively straightforward, and the model's interpretability is crucial for making fair, explainable decisions. Economic forecasting, on the other hand, often deals with more complex dynamics. The relationship between interest rates and consumer spending isn't always a straight line; it can be influenced by dozens of other factors. This is where analysts use nonlinear models to capture these more intricate, curved relationships, allowing them to build more accurate forecasts and guide strategy in a constantly changing environment.

When a nonlinear model is the better choice

If your data plot looks more like a curve than a straight line, it’s time to consider nonlinear regression. This model is far more flexible and can adapt to a wide variety of complex relationships, like the growth of a user base over time or the effect of temperature on a chemical reaction. While a straight line can't capture these nuances, a nonlinear model is designed for them. If you try a linear model first and find that it doesn't fit your data well, or if your domain knowledge tells you the connection between variables isn't linear, then a nonlinear model is likely the better choice for achieving accurate predictions.

Real-world applications: population growth and medical research

Nonlinear models are essential in fields like biology and medicine, where relationships are rarely straightforward. For instance, modeling how populations grow is a classic case. A population doesn’t increase in a straight line forever; it often follows a curve, starting slow, accelerating, and then leveling off as resources become limited. A straight-line model would miss this pattern, but a nonlinear model can follow it closely. Similarly, in medical research, nonlinear regression is used to understand how a drug's concentration in the body changes over time: it rises and then falls. Accurately modeling this curve is critical for determining correct dosages. These applications show how nonlinear regression provides the flexibility to work with intricate, curved relationships that don’t play by linear rules.

A simple framework to help you decide

Here’s a straightforward way to approach this choice. First, always try linear regression. It’s the simplest and most efficient baseline. Plot your data and the resulting regression line to visually inspect the fit. Does the line seem to capture the general trend? If it does, you might be done. If the line is a poor fit and the data points clearly show a curve, it’s time to explore nonlinear regression. A great way to technically validate your model is with cross-validation. This involves splitting your data, building a model on one part, and testing its performance on the other to see how well it generalizes to new data.
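To make the framework concrete, here is a minimal sketch of the baseline-then-compare workflow. It assumes scikit-learn is installed and uses synthetic data invented for illustration. The "curved" candidate is a degree-2 polynomial pipeline, used only as a convenient stand-in for a more flexible model. The plotting step is left out to keep the example short.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Synthetic, illustrative data with a curved (quadratic) trend plus noise.
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(200, 1))
y = 2.0 + 0.5 * X[:, 0] ** 2 + rng.normal(0, 1.0, size=200)

# Step 1: the linear baseline, scored with 5-fold cross-validated R^2.
linear = LinearRegression()
linear_r2 = cross_val_score(linear, X, y, cv=5, scoring="r2").mean()

# Step 2: a more flexible candidate (assumption: a degree-2 polynomial).
curved = make_pipeline(PolynomialFeatures(degree=2), LinearRegression())
curved_r2 = cross_val_score(curved, X, y, cv=5, scoring="r2").mean()

print(f"linear baseline CV R^2: {linear_r2:.3f}")
print(f"curved model CV R^2:    {curved_r2:.3f}")
```

If the flexible model scores only marginally better on held-out folds, the simpler baseline is usually the easier one to defend.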

How your choice impacts model performance

Your model choice has a direct impact on performance and interpretability. Linear models are easy to understand; the coefficients tell you exactly how much the output variable changes for each unit change in an input variable. Nonlinear models, while potentially more accurate for complex data, are often more of a "black box." It can be much harder to pinpoint the exact relationship between variables. Furthermore, standard performance metrics like R-squared and p-values aren't always reliable for nonlinear models. This trade-off between simplicity and flexibility is a core consideration when building any AI solution.

How to measure your model's performance

Building a regression model is one thing, but knowing if it’s actually any good is another. You can’t just build it and hope for the best. Measuring your model's success is how you prove its value and ensure it’s making reliable predictions for your business. This step separates a cool data science experiment from a tool that drives real results. It’s all about checking your work, understanding the model’s strengths and weaknesses, and having the confidence to put it into production. Without proper evaluation, you’re essentially flying blind.

Think of it like this: you wouldn’t launch a new product without testing it first, right? The same principle applies here. By using the right metrics and validation techniques, you can be sure your model is ready for the real world. This process helps you fine-tune its performance, explain its outcomes to stakeholders, and ultimately, trust the insights it provides. It’s a non-negotiable step for any team serious about implementing AI that works. At Cake, we know that a successful AI initiative depends on robust, well-vetted models.

Key metrics you should be tracking

Once your model is trained, you need a way to score its performance. For regression, several key metrics give you a clear picture of how close your model's predictions are to the actual values. The most common ones include Mean Absolute Error (MAE), which tells you the average size of the errors, and Mean Squared Error (MSE), which penalizes larger errors more heavily. You'll also see Root Mean Squared Error (RMSE), which is just the square root of MSE, making it easier to interpret in the original units of your data.

Another crucial metric is the aforementioned R-squared, which measures how much of the variation in the outcome your model can explain. By using these specific metrics, you can evaluate the accuracy and effectiveness of your models in a standardized way.
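These four metrics are simple enough to compute by hand. The sketch below uses NumPy and a tiny set of made-up actual and predicted values, purely for illustration.

```python
import numpy as np

# Made-up actual values and model predictions, for illustration only.
y_true = np.array([3.0, 5.0, 7.5, 10.0])
y_pred = np.array([2.5, 5.0, 8.0, 12.0])

errors = y_true - y_pred

mae = np.mean(np.abs(errors))   # average size of the errors
mse = np.mean(errors ** 2)      # squaring penalizes large errors more heavily
rmse = np.sqrt(mse)             # back in the original units of y

# R-squared: 1 minus (residual variation / total variation around the mean).
ss_res = np.sum(errors ** 2)
ss_tot = np.sum((y_true - y_true.mean()) ** 2)
r2 = 1 - ss_res / ss_tot

print(f"MAE={mae:.3f}  MSE={mse:.3f}  RMSE={rmse:.3f}  R^2={r2:.3f}")
```

Notice how the single large miss (10.0 predicted as 12.0) dominates MSE far more than it dominates MAE.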

Important statistics to report for linear models

For linear models, you have a standard toolkit of statistics that tells you the full story of your model's performance. You should always report the Beta weights (the coefficients), which show you how much the outcome changes for each unit change in an input variable. The t-test results and p-values for each variable tell you if that effect is statistically significant or plausibly due to random chance. Then, the overall F-statistic and its p-value confirm whether your entire model is a better predictor than just guessing the average. Finally, R-squared (R²) gives you a simple percentage that tells you how much of the variation in your outcome is captured by the model. Together, these stats provide a comprehensive report card for your linear model.
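Here is one way these statistics could be computed from scratch with NumPy and SciPy. It is a sketch on synthetic data, not a replacement for a statistics package. The variable names and the toy data are assumptions. The coefficients are unstandardized; standardizing the inputs and outcome first would give standardized Beta weights.

```python
import numpy as np
from scipy import stats

# Toy data (assumption): y depends on x1 but not on x2.
rng = np.random.default_rng(1)
n = 100
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
y = 1.0 + 2.0 * x1 + rng.normal(scale=0.5, size=n)

# Design matrix with an intercept column.
X = np.column_stack([np.ones(n), x1, x2])
p = X.shape[1]        # number of estimated coefficients
df_resid = n - p      # residual degrees of freedom

# Ordinary least squares coefficients.
beta, *_ = np.linalg.lstsq(X, y, rcond=None)
residuals = y - X @ beta
ss_res = residuals @ residuals

# Standard errors, t statistics, and two-sided p-values per coefficient.
sigma2 = ss_res / df_resid
std_err = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))
t_stats = beta / std_err
p_values = 2 * stats.t.sf(np.abs(t_stats), df_resid)

# R-squared and the overall F test (model vs. predicting the mean).
ss_tot = np.sum((y - y.mean()) ** 2)
r_squared = 1 - ss_res / ss_tot
f_stat = (r_squared / (p - 1)) / ((1 - r_squared) / df_resid)
f_p_value = stats.f.sf(f_stat, p - 1, df_resid)

for name, b, t, pv in zip(["intercept", "x1", "x2"], beta, t_stats, p_values):
    print(f"{name:>9}: coef={b:.3f}  t={t:.2f}  p={pv:.4f}")
print(f"R^2={r_squared:.3f}  F={f_stat:.1f}  p(F)={f_p_value:.2e}")
```

In practice, a library such as statsmodels reports the same quantities in a single summary table.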

Why R-squared isn't always reliable for nonlinear models

While R-squared is the go-to metric for linear regression, it can be misleading when you're working with nonlinear models. The statistical assumptions that make R-squared a reliable measure for straight lines often don't apply to curves. As we've noted before, "With nonlinear models, these familiar metrics can be deceptive. The complex relationships they model often violate the assumptions that make metrics like R-squared and p-values meaningful." A high R-squared value for a nonlinear model might look impressive, but it doesn't guarantee a good fit. It could simply mean the model is overfitting—hugging the training data so tightly that it won't perform well on new, unseen data. This makes it a less trustworthy indicator of a nonlinear model's true predictive power.

Using standard error of the regression (S) as an alternative

So, if R-squared is off the table for nonlinear models, what should you use instead? A great alternative is the Standard Error of the Regression (S). This metric measures the typical distance between the observed data points and the model's fitted line. The best part is that S is measured in the same units as your outcome variable, making it very intuitive to understand. For example, if you're predicting house prices in dollars, S will also be in dollars. When you're comparing different models, the rule is simple: a smaller S value is better. According to expert advice, "A smaller 'S' value means your data points are closer to the fitted line, which is better." This makes S a reliable tool for choosing the model that most accurately fits your data.
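S is commonly computed as the square root of the sum of squared residuals divided by the residual degrees of freedom (number of observations minus number of fitted parameters). The sketch below compares S for a straight line and a curve on the same synthetic data. The `saturating` function and all the numbers are hypothetical, chosen only for illustration.

```python
import numpy as np
from scipy.optimize import curve_fit

def saturating(x, a, b):
    """Hypothetical curve: rises quickly, then levels off near `a`."""
    return a * (1 - np.exp(-b * x))

def standard_error_of_regression(y, y_hat, n_params):
    """S = sqrt(SSE / (n - p)), expressed in the same units as y."""
    sse = np.sum((y - y_hat) ** 2)
    return np.sqrt(sse / (len(y) - n_params))

# Synthetic data that follows the curve, with noise of standard deviation 0.2.
rng = np.random.default_rng(2)
x = np.linspace(0, 10, 80)
y = saturating(x, 5.0, 0.8) + rng.normal(0, 0.2, size=x.size)

# Straight-line fit (2 parameters: slope and intercept).
slope, intercept = np.polyfit(x, y, deg=1)
s_linear = standard_error_of_regression(y, slope * x + intercept, 2)

# Nonlinear fit (2 parameters: a and b).
(a_hat, b_hat), _ = curve_fit(saturating, x, y, p0=[1.0, 1.0])
s_curve = standard_error_of_regression(y, saturating(x, a_hat, b_hat), 2)

print(f"S (straight line): {s_linear:.3f}")
print(f"S (curve):         {s_curve:.3f}")
```

Because both values are in the units of `y`, the smaller S can be read directly as "typical miss, in real units."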

Simple ways to validate your model

Validation is about making sure your model performs well on new, unseen data, not just the data it was trained on. A common technique is cross-validation, where you split your data into several parts, train the model on some parts, and test it on the parts it hasn't seen. This gives you a more realistic estimate of its real-world performance. For nonlinear models, fitting usually means finding the curve that makes the "sum of the squares" of the errors as small as possible on the training data. A small training error alone doesn't guarantee the model generalizes; scoring it on held-out data is what shows whether it has learned the trend or is just memorizing the training data.
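Cross-validation works for hand-specified curves too. The following sketch runs 5-fold cross-validation around SciPy's `curve_fit`, using scikit-learn's `KFold` only to generate the splits. The `exponential` function, the starting guess `p0`, and the data are assumptions for illustration.

```python
import numpy as np
from scipy.optimize import curve_fit
from sklearn.model_selection import KFold

def exponential(x, a, b):
    """Hypothetical growth curve used for this example."""
    return a * np.exp(b * x)

# Synthetic data following the curve, plus noise.
rng = np.random.default_rng(3)
x = rng.uniform(0, 5, size=120)
y = 2.0 * np.exp(0.5 * x) + rng.normal(0, 0.3, size=x.size)

kf = KFold(n_splits=5, shuffle=True, random_state=0)
fold_rmse = []
for train_idx, test_idx in kf.split(x):
    # Fit by least squares on the training folds only.
    params, _ = curve_fit(exponential, x[train_idx], y[train_idx], p0=[1.0, 0.1])
    # Score on the held-out fold the fit never saw.
    pred = exponential(x[test_idx], *params)
    fold_rmse.append(np.sqrt(np.mean((y[test_idx] - pred) ** 2)))

cv_rmse = float(np.mean(fold_rmse))
print(f"cross-validated RMSE: {cv_rmse:.3f}")
```

A cross-validated RMSE close to the noise level suggests the curve is capturing the trend rather than the noise.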

How to analyze your model's performance

Looking at a single metric isn't always enough. You need to analyze your model's performance from a few different angles. R-squared, for example, is a relative metric. It’s fantastic for comparing different models trained on the same dataset. It helps you understand how much better your model is at making predictions compared to a very simple model that just predicts the average value for everything. When you evaluate how much better your model fits the data, you get a clear sense of the value it’s adding. Analyzing error plots can also reveal patterns, showing you where your model is struggling and where it excels.

Why statistical significance really matters

Statistical significance helps you determine if the relationships your model has identified are real or if they just occurred by chance. This is especially important when you need to explain why the model is making certain predictions. However, this can get tricky with nonlinear regression. Because there can be many possible "best" fits for a complex curve, common statistical measures like R-squared and p-values (which test the importance of each factor) aren't always reliable. Understanding these limitations is key to interpreting your results correctly and avoiding making business decisions based on random noise. It’s a reminder that complexity requires a more careful and nuanced approach to validation.

Ready to put your model into practice?

Choosing between linear and nonlinear regression is a great first step, but the real work begins when you start applying your model to actual data. Moving from theory to a working model involves a few critical stages that can make or break your project. It’s all about preparing your foundation, being mindful of common pitfalls, and carefully refining your approach. Let’s walk through the essential steps to get your model up and running successfully.

How to get your data ready for modeling

Before you can build an effective model, your data needs to be in top shape. Think of this as the prep work you do before painting a room—it’s not the most glamorous part, but it’s essential for a good result. The goal of regression analysis is to understand how your main variable of interest changes based on other factors. To see those relationships clearly, you need clean, well-structured data. This means handling missing values, correcting errors, and making sure everything is formatted consistently. You might also need to perform feature scaling to ensure one variable doesn’t disproportionately influence the model just because its scale is different. A little effort here saves a lot of headaches later.
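As a rough illustration of that prep work, the sketch below chains missing-value imputation and feature scaling in front of a linear model using a scikit-learn pipeline. The tiny dataset (age and income columns with one missing income) is invented, and median imputation is just one reasonable choice among several.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Invented data: columns are age and income; one income value is missing.
X = np.array([
    [25.0, 40_000.0],
    [32.0, np.nan],
    [47.0, 85_000.0],
    [51.0, 120_000.0],
    [38.0, 62_000.0],
])
y = np.array([1.2, 1.9, 3.1, 4.0, 2.4])

model = make_pipeline(
    SimpleImputer(strategy="median"),  # fill missing values with the column median
    StandardScaler(),                  # rescale each column to mean 0, variance 1
    LinearRegression(),
)
model.fit(X, y)

# Inspect what the model actually sees after the preparation steps.
X_prepared = model[:-1].transform(X)
print(X_prepared.round(2))
```

Keeping the preparation steps inside the pipeline means the same transformations are applied consistently at training and prediction time.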

A guide to fine-tuning your parameters

Getting your model to perform its best often comes down to tuning its hyperparameters. These are the settings you configure before the training process begins, as opposed to the parameters (like slopes and intercepts) that the model learns from the data. For nonlinear models, this can be a bit of an art form. They are often harder to build than linear models because finding the best fit "involve[s] trying out different options until the best fit is found." This iterative process, known as hyperparameter tuning, can involve testing different combinations of settings to see what works best. Techniques like grid search or random search can help automate this process, but it still requires a thoughtful approach to find that sweet spot between accuracy and simplicity.
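Grid search can be sketched with scikit-learn's `GridSearchCV`. The example below tries a few polynomial degrees and regularization strengths and keeps the combination with the best cross-validated score. The pipeline, the grid values, and the synthetic data are all assumptions chosen for illustration.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Synthetic curved data (a noisy sine wave).
rng = np.random.default_rng(4)
X = rng.uniform(-3, 3, size=(150, 1))
y = np.sin(X[:, 0]) + rng.normal(0, 0.1, size=150)

pipeline = Pipeline([
    ("poly", PolynomialFeatures(include_bias=False)),
    ("scale", StandardScaler()),
    ("ridge", Ridge()),
])

# Every combination of these settings is evaluated with 5-fold cross-validation.
param_grid = {
    "poly__degree": [1, 3, 5],
    "ridge__alpha": [0.01, 1.0, 10.0],
}
search = GridSearchCV(pipeline, param_grid, cv=5, scoring="neg_mean_squared_error")
search.fit(X, y)

best = search.best_params_
print("best settings:", best)
print(f"best CV MSE: {-search.best_score_:.4f}")
```

With larger grids, `RandomizedSearchCV` samples combinations instead of trying them all, which often gets close to the best settings at a fraction of the cost.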

What should you do with outliers?

Outliers are data points that are significantly different from the rest of your data. They can be the result of a measurement error or a genuinely rare event. Linear regression, in particular, is very sensitive to them; a single outlier can pull the entire regression line off course. The first step is always to investigate them. Is that extreme value a typo or a real piece of information? Depending on the answer, you might choose to remove it, transform it to be less extreme, or use a more robust regression model that is less affected by outliers. Properly handling outliers ensures your model reflects the true underlying trend in your data, not just the noise.
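To see how much one extreme point can matter, this sketch fits ordinary least squares and scikit-learn's `HuberRegressor` (one example of a more robust model) to the same synthetic data, where a single value has been deliberately corrupted. The data and the size of the outlier are made up.

```python
import numpy as np
from sklearn.linear_model import HuberRegressor, LinearRegression

# Synthetic straight-line data with true slope 3.0.
rng = np.random.default_rng(5)
X = np.linspace(0, 10, 50).reshape(-1, 1)
y = 3.0 * X[:, 0] + 1.0 + rng.normal(0, 0.5, size=50)

# Corrupt a single observation to simulate an extreme outlier.
y[-1] += 100.0

ols = LinearRegression().fit(X, y)
huber = HuberRegressor(max_iter=1000).fit(X, y)

ols_slope = ols.coef_[0]
huber_slope = huber.coef_[0]
print(f"OLS slope:   {ols_slope:.2f}")
print(f"Huber slope: {huber_slope:.2f}")
```

The robust fit down-weights the corrupted point, so its slope stays much closer to the trend in the other 49 observations.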

Helpful tools and resources to get you started

Getting started with regression modeling doesn't mean you have to build everything from the ground up. The machine learning community is incredibly collaborative, and there are tons of powerful tools and resources available to help you implement these models effectively. Whether you're setting up your first project or looking to refine your existing workflows, having the right tools can make all the difference. Think of this as your starter kit for building, testing, and understanding regression models. We'll walk through some essential libraries, development environments, and learning materials that can help you move from theory to practice with confidence.

These resources are designed to simplify complex tasks, from fitting models to visualizing data, so you can focus on what really matters: getting meaningful insights from your data. Instead of getting stuck on the syntax, you can use these tools to streamline your process. For example, pre-built functions can handle the heavy lifting of complex calculations, while comprehensive documentation can guide you through any roadblocks. By leveraging these existing resources, you can accelerate your development cycle and build more robust, reliable models without reinventing the wheel. Let's look at a few key areas where you can find support.

Essential Python libraries for regression

When you're working with regression models, Python is a fantastic choice, largely because of its powerful libraries. For nonlinear regression, a few stand out. Libraries like NumPy, SciPy, and statsmodels are your best friends for handling arrays, fitting models, and running statistical tests. For example, SciPy's optimize module includes least-squares curve-fitting routines for nonlinear models, while statsmodels produces detailed statistical summaries (coefficients, p-values, R-squared) for linear ones. These libraries handle a lot of the complex math for you, so you can concentrate on interpreting the results and refining your approach.

Using scikit-learn for linear regression

For most machine learning tasks in Python, scikit-learn is the first library you'll turn to, and for good reason. It offers a clean, consistent way to use a wide range of models, and its linear regression tool is a perfect example. When you want to find that "best possible straight line" to describe your data, scikit-learn makes it incredibly simple. You can import the LinearRegression model, feed it your data, and it handles all the underlying math to find the optimal slope and intercept. This makes it an ideal tool for building your baseline model, allowing you to quickly implement a solution and see if a simple, straight-line relationship can effectively solve your problem.
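A minimal version of that workflow looks like the sketch below. The ad-spend and sales figures are invented for illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Invented data: ad spend (in $1,000s) and resulting sales (in $1,000s).
ad_spend = np.array([[1.0], [2.0], [3.0], [4.0], [5.0]])  # 2-D: one column per feature
sales = np.array([12.0, 14.1, 15.9, 18.2, 19.8])

# Fit the best straight line by ordinary least squares.
model = LinearRegression().fit(ad_spend, sales)

slope = model.coef_[0]        # change in sales per extra unit of ad spend
intercept = model.intercept_  # predicted sales at zero ad spend
forecast = model.predict(np.array([[6.0]]))[0]

print(f"sales = {intercept:.2f} + {slope:.2f} * ad_spend")
print(f"forecast at ad_spend=6: {forecast:.2f}")
```

Note that scikit-learn expects the inputs as a two-dimensional array, even when there is only one feature.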

Using SciPy's curve_fit for nonlinear regression

When your data has a clear curve that a straight line can't capture, you'll need a different tool. This is where the SciPy library comes in, specifically its powerful curve_fit function. Unlike scikit-learn's approach, curve_fit requires you to first define the mathematical function—the shape of the curve—you think best describes your data. You provide the function and the data, and curve_fit runs an iterative process to find the parameters that make your function fit the data as closely as possible. This gives you immense flexibility to model all sorts of complex relationships, from exponential growth to logarithmic decay, but it does require a bit more upfront thinking about the underlying pattern you're trying to model.
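Here is a small sketch of that process for an S-shaped growth pattern. The `logistic` function, the starting guesses in `p0`, and the synthetic data are all assumptions for illustration. In a real project, the functional form would come from what is known about the underlying process.

```python
import numpy as np
from scipy.optimize import curve_fit

def logistic(x, L, k, x0):
    """S-shaped curve: ceiling L, growth rate k, midpoint x0."""
    return L / (1 + np.exp(-k * (x - x0)))

# Synthetic data: slow start, rapid growth, then leveling off.
rng = np.random.default_rng(6)
x = np.linspace(0, 10, 60)
y = logistic(x, 10.0, 1.0, 5.0) + rng.normal(0, 0.2, size=x.size)

# Reasonable starting guesses help the iterative fit converge.
p0 = [y.max(), 1.0, np.median(x)]
params, covariance = curve_fit(logistic, x, y, p0=p0)

L_hat, k_hat, x0_hat = params
param_std = np.sqrt(np.diag(covariance))  # approximate standard errors

print(f"L={L_hat:.2f}  k={k_hat:.2f}  x0={x0_hat:.2f}")
print("approx. standard errors:", param_std.round(3))
```

Poor starting guesses are a common reason for a fit to fail or settle on a bad solution, so it is worth plotting the initial curve against the data before trusting the result.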

How to set up your development environment

A solid development environment is the foundation of any successful modeling project. It’s where you’ll write code, test ideas, and visualize your results. While many options exist, a platform like MATLAB offers excellent support for nonlinear regression, complete with built-in functions and detailed documentation. This can be a huge help if you prefer a more graphical interface or are already working within the MATLAB ecosystem. Having a robust environment means you spend less time wrestling with setup and configuration and more time actually building and analyzing your models.

Managing the infrastructure for compute-heavy models

Nonlinear models can be real workhorses, but they're also resource-hungry. Unlike linear models that often have a direct, efficient solution, nonlinear regression is more computationally intensive. Finding the best curve relies on an iterative process where the algorithm tests countless parameter values again and again to zero in on the optimal fit. This can be incredibly time-consuming and requires significant processing power, especially with large datasets. This is where managing your infrastructure becomes critical. You don't want your data science team bogged down by slow computations or infrastructure headaches. A platform that handles the entire stack, from compute to integrations, can free up your team to focus on what they do best: building great models. For teams looking to accelerate their AI initiatives, having a solution that manages this complexity is a game-changer.

Where you can go to learn more

Once you have the basics down, you'll probably want to go deeper. There are plenty of online resources to guide you, but a great place to start is with a practical overview. For a clear and thorough introduction to the topic, check out this Medium article on nonlinear regression. It breaks down the concepts with helpful examples and real-world applications, making it a valuable read for both newcomers and anyone looking for a quick refresher. Continuing your education is key to mastering these techniques, and well-written guides like this one can make the learning process much smoother.

How to find documentation and support

Building a model is one thing, but knowing if it's any good is another. Evaluating your model's performance is a critical step you can't afford to skip. You need to understand how well it fits your data and whether it's making accurate predictions. For a detailed guide on this, you can learn more about evaluating goodness-of-fit for nonlinear models. This resource covers different methods and metrics you can use to assess your model's performance, helping you ensure that your final model is both robust and reliable. It’s an invaluable read for making sure your hard work pays off.

Frequently asked questions

Is linear regression only useful for straight lines?

Not at all, and this is a super common point of confusion. The "linear" part of the name actually refers to how the model's parameters are structured, not the shape of the line it can produce. You can add terms to a linear model, like squared variables, to help it fit a curve to your data. The key is that it's less flexible than a true nonlinear model, but it's definitely not limited to perfectly straight-line relationships.
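A quick sketch shows the idea: adding a squared column lets an ordinary linear regression trace a curve, because the model is still linear in its coefficients. The data here is synthetic and noise-free, so the recovered coefficients can be checked by eye.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Noise-free curved data: y = 1 + 2x - 0.5x^2.
x = np.linspace(-3, 3, 40)
y = 1.0 + 2.0 * x - 0.5 * x ** 2

# Two input columns: x and x squared. The model is still a weighted sum.
X = np.column_stack([x, x ** 2])
model = LinearRegression().fit(X, y)

print("coefficients for [x, x^2]:", model.coef_.round(3))
print("intercept:", round(float(model.intercept_), 3))
```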

So, which model is actually better: linear or nonlinear?

This is like asking if a hammer is better than a screwdriver—it completely depends on the job. Neither one is inherently superior. Linear regression is your go-to for its simplicity, speed, and how easy it is to interpret. Nonlinear regression is the right choice when you have a complex, curved relationship that a simpler model just can't capture accurately. The best model is always the one that reliably fits your data without being overly complicated.

What's the very first thing I should do when deciding which model to use?

Always start by visualizing your data. Create a simple scatter plot to see what the relationship between your variables looks like with your own eyes. This one step can save you a ton of time and effort. If the points seem to follow a straight line, start with a linear model. If you see a clear curve, you know you'll likely need to explore nonlinear options.

How can I tell if my model is overfitting the data?

A classic sign of overfitting is when your model performs incredibly well on the data it was trained on but fails miserably when you introduce it to new data. To check for this, always split your data into a training set and a testing set. If your model's accuracy is amazing on the training set but drops significantly on the testing set, it's a red flag that it has learned the noise instead of the actual trend.

Why can't I just use R-squared to evaluate my nonlinear model?

While R-squared is a fantastic metric for linear models, it can be misleading when applied to nonlinear ones. The statistical assumptions that make R-squared a reliable measure for linear regression often don't hold true for more complex, curved models. This means a high R-squared value on a nonlinear model might not actually mean what you think it does. It's better to use a combination of other evaluation methods, like analyzing residual plots, to get a true sense of your model's performance.

About Author

Cake Team
