Observability for Modern AI Stacks

Modern AI infrastructure moves fast, and without the right signals in place, small problems can spiral into major outages. Cake helps teams embed observability into every layer of their stack, using open source tools, automated setup, and centralized insight to stay ahead of failures.

Overview

Most teams don’t realize they’re flying blind until something breaks. In AI environments, that might look like degraded model performance, slow inference, or stuck pipelines with no obvious root cause. Cake brings observability into focus, so you can catch and fix problems before they affect users or outcomes.

With Cake, you can deploy and scale open source observability tools across your entire stack in your own environment. Stream logs, metrics, and traces into a unified view. Monitor GPU workloads, pipelines, and agent behavior with full context. And keep everything secure, compliant, and easy to customize.

Key benefits

- Full-stack visibility for AI infrastructure: Monitor everything from ingestion pipelines to inference endpoints in one place.

- Custom instrumentation with zero boilerplate: Auto-instrument your stack using OpenTelemetry, Prometheus, and more without extra configuration.

- Keep observability data secure and private: Run everything inside your VPC with fine-grained access controls and redaction policies.

- Use the open source tools your team already loves: Plug in tools like Grafana, Jaeger, and Loki without rewriting your stack.

- Shorten incident response time: Trace system behavior back to the source and resolve issues before they hit production.

THE CAKE DIFFERENCE

Observability built for AI, not just infrastructure

Traditional logging & monitoring

Fine for infra, blind to AI behavior: Legacy tools like Datadog, Splunk, or CloudWatch can track system health, but not model performance.

- Focused on infra (CPU, latency, error rates), not model output

- No visibility into prompts, retrievals, or model responses

- Hard to debug LLM failures or hallucinations

- No context on user queries or model decision paths

Result:

You know your system is slow, but not why your model gave the wrong answer

The Cake approach

Purpose-built visibility into every layer of your AI stack: Track prompts, responses, retrievals, costs, evals, and more in real time.

- Trace every request across agents, tools, and model calls

- View full prompt/response pairs, retrieval sources, and ranking logic

- Built-in evals for quality, latency, and cost across agents

- Connect logs to user behavior and business outcomes

Result:

Full transparency, faster debugging, and better performance tuning

EXAMPLE USE CASES

Put observability to work across your stack

Monitor LLM performance in real time

Track response latency, token usage, and context window issues across inference calls to spot degradation before users notice.
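As a rough illustration of these signals, the sketch below records latency and token counts per inference call and flags calls that approach the context window. Every name here (`InferenceRecord`, `timed_call`, `fake_model`, the `CONTEXT_WINDOW` value) is a hypothetical stand-in and not part of Cake or any specific model client.

```python
import time
from dataclasses import dataclass
from statistics import quantiles

CONTEXT_WINDOW = 8192  # hypothetical model limit, in tokens


@dataclass
class InferenceRecord:
    latency_s: float
    prompt_tokens: int
    completion_tokens: int

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens

    @property
    def near_context_limit(self) -> bool:
        # Flag calls that use more than 90% of the context window.
        return self.total_tokens > 0.9 * CONTEXT_WINDOW


def timed_call(model_fn, prompt: str) -> InferenceRecord:
    """Wrap one inference call and capture its latency and token usage."""
    start = time.perf_counter()
    prompt_tokens, completion_tokens = model_fn(prompt)
    latency = time.perf_counter() - start
    return InferenceRecord(latency, prompt_tokens, completion_tokens)


def p95_latency(records) -> float:
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return quantiles([r.latency_s for r in records], n=20)[-1]


def fake_model(prompt: str):
    # Stand-in for a real client: counts whitespace-separated words as
    # "tokens" and pretends the completion is always 50 tokens long.
    return len(prompt.split()), 50
```

In practice the records would be exported as metrics (for example, to Prometheus) instead of being kept in memory, and a rising `p95_latency` or a growing share of `near_context_limit` calls would be the signal to alert on.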

Catch pipeline failures before they cascade

Trace bottlenecks across ingestion, transformation, and model serving to prevent data freshness issues and silent errors.

Detect agent drift and context loss

Surface when agent behaviors change unexpectedly or start returning low-confidence responses, with full visibility into upstream signals.
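One simple way to surface a rise in low-confidence responses is to compare a rolling window against a known baseline rate. The sketch below assumes each agent response carries a numeric confidence score; the class name, thresholds, and window size are illustrative choices, not a description of how Cake detects drift.

```python
from collections import deque


class LowConfidenceMonitor:
    """Flags drift when the recent low-confidence rate exceeds a baseline."""

    def __init__(self, baseline_rate: float, window: int = 100,
                 threshold: float = 0.5, tolerance: float = 2.0):
        self.baseline_rate = baseline_rate  # expected share of low-confidence responses
        self.threshold = threshold          # scores below this count as low confidence
        self.tolerance = tolerance          # allowed multiple of the baseline rate
        self.recent = deque(maxlen=window)  # rolling window of booleans

    def observe(self, confidence: float) -> None:
        self.recent.append(confidence < self.threshold)

    @property
    def low_confidence_rate(self) -> float:
        return sum(self.recent) / len(self.recent) if self.recent else 0.0

    def drifted(self) -> bool:
        # Only judge once the window is full, to avoid noisy early alerts.
        window_full = len(self.recent) == self.recent.maxlen
        return window_full and self.low_confidence_rate > self.tolerance * self.baseline_rate
```

A fixed multiple of the baseline is a deliberately crude test. Dedicated drift tools such as Evidently or NannyML apply statistical methods that are better suited to production use.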

Debug GPU utilization and scaling issues

Correlate workload performance with GPU usage, autoscaling events, and memory constraints to fine-tune system behavior.

Enable secure, auditable observability in regulated environments

Keep all observability data in your own VPC with full control over access, retention, and redaction.
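To make redaction concrete, here is a minimal sketch of a log filter that masks sensitive values before a record reaches any handler. It uses Python's standard `logging.Filter` hook; the two patterns (email addresses and an `sk-` style key format) are assumptions chosen for illustration, and a real policy would be defined by your own compliance requirements.

```python
import logging
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
API_KEY = re.compile(r"\bsk-[A-Za-z0-9]{8,}\b")  # hypothetical key format


def redact(text: str) -> str:
    """Replace emails and key-like strings with fixed placeholders."""
    text = EMAIL.sub("[REDACTED_EMAIL]", text)
    return API_KEY.sub("[REDACTED_KEY]", text)


class RedactingFilter(logging.Filter):
    """Rewrites each log record so only the redacted message is emitted."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Format the message with its args first, then redact the result.
        record.msg = redact(record.getMessage())
        record.args = ()
        return True  # keep the record, now redacted
```

Attaching the filter with `logger.addFilter(RedactingFilter())` applies it to everything that logger emits, so redaction happens before data leaves the process.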

Accelerate root cause analysis across hybrid infrastructure

Unify logs, traces, and metrics from cloud, on-prem, and edge environments to reduce MTTR and eliminate guesswork.

BLOG

The 6 open-source observability tools you should know

Find the top open source tools for observability & tracing to enhance your system's performance. Learn how these tools can provide insights and improve efficiency.

BLOG

A beginner's guide to metrics, logs, and traces

Learn how to use metrics, logs, and traces to understand and improve your system's performance.

"Our partnership with Cake has been a clear strategic choice – we're achieving the impact of two to three technical hires with the equivalent investment of half an FTE."

Scott Stafford

Chief Enterprise Architect at Ping

"With Cake we are conservatively saving at least half a million dollars purely on headcount."

CEO

InsureTech Company

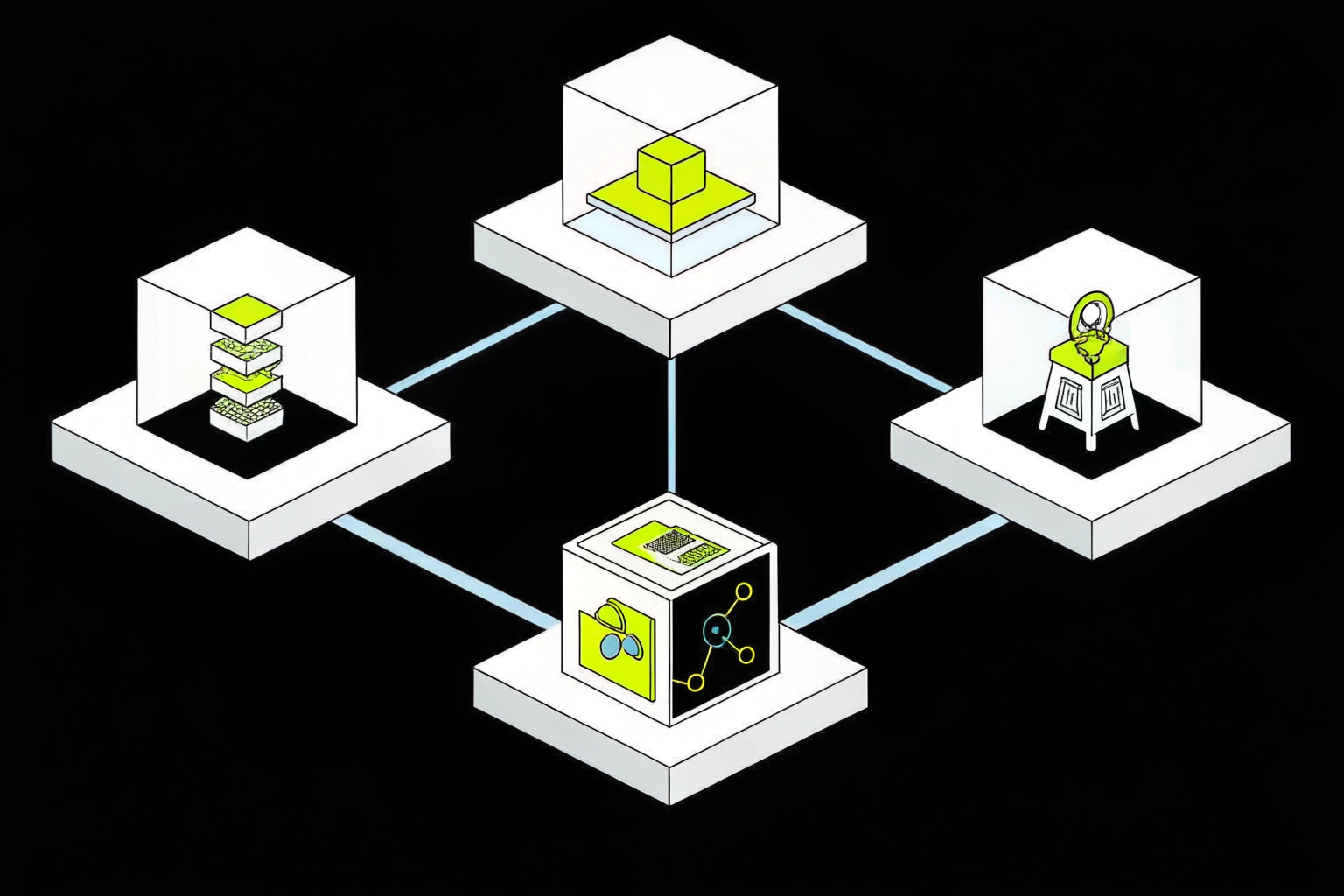

COMPONENTS

Tools powering Cake's observability stack

Deepchecks

Model Evaluation Tools

Automate data validation and monitor ML pipeline quality with Deepchecks, fully managed and integrated by Cake.

Evidently

Model Evaluation Tools

Evidently is an open-source tool for monitoring machine learning models in production. Cake operationalizes Evidently to automate drift detection, performance monitoring, and reporting within AI workflows.

Grafana

Cloud Compute & Storage

Grafana is an open-source analytics and monitoring platform used for observability and dashboarding. Cake integrates Grafana into AI pipelines to visualize model performance, infrastructure metrics, and system health.

NannyML

Model Evaluation Tools

NannyML is an open-source library for monitoring post-deployment data and model performance. Cake integrates NannyML to automate AI drift detection, quality monitoring, and compliance reporting.

Prometheus

Observability & Monitoring

Prometheus is an open-source systems monitoring and alerting toolkit. Cake integrates Prometheus into AI pipelines for real-time monitoring, metric collection, and governance of AI infrastructure.

Langfuse

LLM Observability

Langfuse is an open-source observability and analytics platform for LLM apps, capturing traces, user feedback, and performance metrics.

OpenTelemetry

Observability & Monitoring

Infrastructure & Resource Optimization

Orchestration & Pipelines

OpenTelemetry provides standardized tools for tracing, metrics, and logging in distributed systems. It enables deep visibility into applications, services, and infrastructure.

OpenNMS

Observability & Monitoring

Infrastructure & Resource Optimization

Orchestration & Pipelines

Agent Frameworks & Orchestration

OpenNMS provides comprehensive fault, performance, and topology monitoring for networks of all sizes. It supports SNMP, flow data, and custom integrations.

Frequently asked questions

What is observability in the context of AI infrastructure?

Observability is the ability to understand the internal state of your AI systems based on their external outputs, such as logs, metrics, and traces. It helps teams detect issues, monitor performance, and debug complex pipelines across data, models, and infrastructure.
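The three signals can be seen side by side in a small sketch. The code below handles one request and emits a metric (a counter), a trace (two linked spans sharing a trace ID), and a structured log line that carries the same trace ID. It uses only the Python standard library; `handle_request`, the span fields, and the in-memory `metrics` and `spans` stores are hypothetical stand-ins for what a real setup would send through OpenTelemetry or Prometheus.

```python
import json
import logging
import time
import uuid
from collections import Counter
from contextlib import contextmanager

metrics = Counter()  # metrics: aggregated numeric counts
spans = []           # traces: timed units of work linked by IDs
log = logging.getLogger("demo")  # logs: discrete, timestamped events


@contextmanager
def span(name, trace_id, parent_id=None):
    """Record how long a block of work took and where it sits in the trace."""
    span_id = uuid.uuid4().hex[:16]
    start = time.perf_counter()
    try:
        yield span_id
    finally:
        spans.append({
            "name": name,
            "trace_id": trace_id,
            "span_id": span_id,
            "parent_id": parent_id,
            "duration_s": time.perf_counter() - start,
        })


def handle_request(query: str) -> str:
    trace_id = uuid.uuid4().hex
    with span("handle_request", trace_id) as root_id:
        metrics["requests_total"] += 1
        with span("retrieve", trace_id, parent_id=root_id):
            docs = ["doc-1", "doc-2"]  # placeholder for a retrieval step
        # The log line carries the trace ID so it can be joined to the spans.
        log.info(json.dumps({"event": "retrieved", "trace_id": trace_id,
                             "docs": len(docs)}))
    return trace_id
```

The shared `trace_id` is what lets a single slow or failed request be followed across all three signals.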

How does Cake support observability?

Cake makes it easy to deploy and scale observability tools like Prometheus, Grafana, Jaeger, and OpenTelemetry. These tools can be automatically instrumented and run inside your environment, giving you full visibility and control.

Can I use my own logging and monitoring tools with Cake?

Yes. Cake is built for composability and integrates seamlessly with popular open source tools. You can bring your existing logging, metrics, and tracing stack and Cake will handle the orchestration and scaling.

Is Cake observability secure and compliant for regulated industries?

Yes. Observability tools can be deployed within your private cloud or on-prem infrastructure. You maintain full control over log retention, access policies, and redaction, which makes Cake suitable for finance, healthcare, and other regulated sectors.

What kinds of AI workloads benefit most from observability?

Observability is essential for any AI workload running in production. This includes model inference, agent orchestration, vector search pipelines, and data ingestion workflows. With Cake, you can monitor them all in one place.

Learn more about Cake and observability

Top Open Source Observability Tools: Your Guide

Building and maintaining modern software, particularly for AI initiatives, can feel like you're constantly reacting to problems. An alert fires, and...

Beginner's Guide to Observability: Metrics, Logs, & Traces

Building powerful AI is one thing; keeping it running smoothly is another challenge entirely. Your team's success depends on having systems that are...

What Is Observability? A Complete Guide

At its core, understanding observability is about being able to ask any question about your software’s internal state and get a clear, data-backed...
