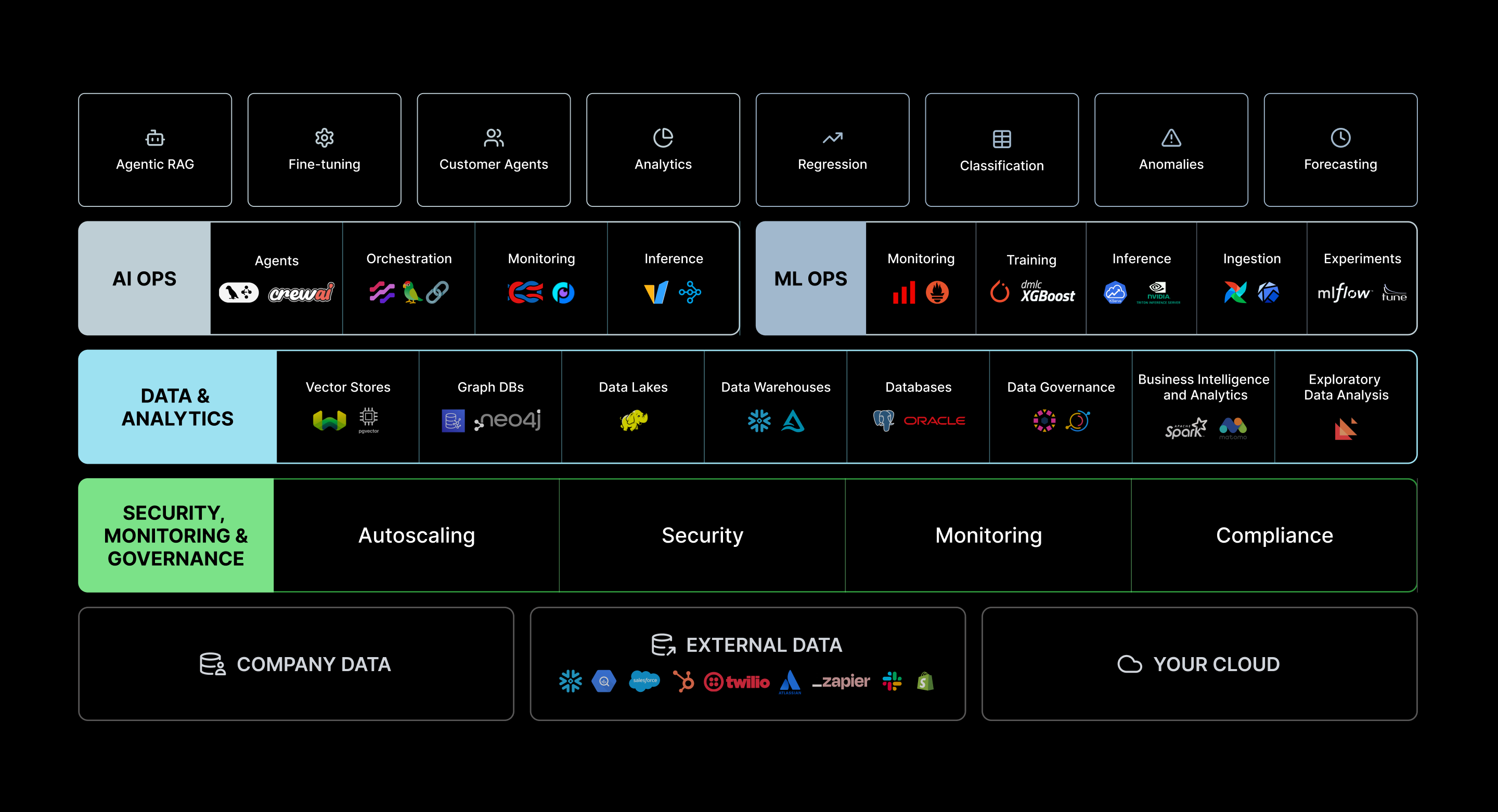

The Cake Platform

Cake brings your entire AI/ML operation onto a single system with best-in-class open-source components so you can build faster, at lower cost, and with less risk.

OVERVIEW

The unified platform to unlock the power of open-source AI for your organization

Faster time to production

Launch AI projects in days instead of months. Spend less time on integrations and more time on innovation and building amazing use cases.

Lower costs

Reduce the in-house resources needed for development and ongoing maintenance. Consolidate your solutions on a single platform instead of several. Let managers set cost controls at the project level.

Reduced risk

Build with a curated list of components and ensure you’re never locked into older versions. Benefit from expert project guidance, vetted integrations, and central oversight. Keep projects secure with pre-built authentication and role-based access control (RBAC).

Improved quality

Work with the latest versions of the best tools available in AI development. Organize all of your AI projects in one central place. Build with tools you’re already familiar with.

CORE

Built for scale, secured by design

Cake's core platform offers robust capabilities designed to enhance scalability, security, and operational efficiency:

Dynamic autoscaling

Seamlessly adjusts resources to match workload demands, optimizing performance and cost-efficiency.
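As an illustration of proportional autoscaling, the sketch below uses the replica formula from the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas * current metric / target metric). It is a minimal sketch, not Cake's implementation; the function name, the default minimum and maximum bounds, and the omission of the HPA's tolerance band and stabilization window are assumptions made for brevity.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Return the replica count that brings the per-replica metric back to its target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Proportional rule from the Kubernetes HPA: scale by observed / target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so a traffic spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target: ceil(4 * 90 / 60) = 6
print(desired_replicas(4, 90.0, 60.0))  # prints 6
```

The same rule scales down when load drops: 4 replicas at 30% against a 60% target yields 2.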

Comprehensive security

Implements robust security measures, including role-based access control and encryption at rest and in transit, to safeguard sensitive data and ensure compliance with standards such as SOC 2 Type 2.

Advanced monitoring

Provides real-time insights into system performance and health, enabling proactive issue detection and resolution.

Infrastructure flexibility

Supports deployment across environments, including Kubernetes clusters and cloud providers such as AWS, to fit diverse operational needs.

GitOps integration

Applies Infrastructure-as-Code practices through integration with code repositories such as GitHub, keeping infrastructure management consistent and version-controlled.

DATA & ANALYTICS

Data in, intelligence out

From ingestion to synthetic generation, Cake’s data layer equips teams with the tools to build, tune, and scale AI/ML systems with security, observability, and performance built in.

Ingestion & ETL pipelines

Uses best-in-class tools such as Airflow, dbt, and Prefect to automate ingestion, transform raw inputs, and scale the workflows that prepare data for models, because no model trains without it.

Data extraction for LLMs

Supports chunking, vectorization, and retrieval using vector databases (Weaviate, Milvus, Qdrant, pgvector), embedding models (BGE), and orchestration frameworks (LangGraph, Langflow) to feed LLMs the context they actually need.
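To illustrate the chunk, vectorize, and retrieve loop described above, here is a minimal, dependency-free sketch. It assumes word-window chunking with overlap, and it swaps in toy term-count vectors and an in-memory list where a real deployment would use an embedding model such as BGE and a vector database such as Qdrant or pgvector. All function names are hypothetical; none of this is Cake's API.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into windows of chunk_size words that overlap by `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query (an in-memory 'vector store')."""
    q = embed(query)
    scored = [(cosine(q, embed(c)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:k]]


docs = [
    "Ray Serve scales model replicas with traffic.",
    "Airflow schedules batch ingestion jobs every night.",
    "pgvector stores embeddings inside Postgres tables.",
]
print(retrieve("where are embeddings stored", docs, k=1))
# prints ['pgvector stores embeddings inside Postgres tables.']
```

Overlapping windows keep text that straddles a chunk boundary retrievable from at least one chunk; production pipelines typically chunk on tokens or sentences rather than whitespace-separated words.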

Analytics & visualization

Equips analysts with business intelligence tools and dashboards through Metabase, Matomo, and Superset, and with analytics engines such as Spark. Cake also includes visualization and exploratory data analysis (EDA) tools such as TensorBoard and AutoViz.

Synthetic dataset creation

When labeled data is scarce, Cake helps generate high-quality synthetic datasets using statistical models and generative adversarial networks (GANs). Cake includes frameworks such as SDV, Mostly.AI, Synthcity, YData, and Faker.
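For a concrete feel of the statistical approach (as opposed to GANs), the sketch below fits a simple model per column and samples new rows: a normal distribution for numeric columns and observed frequencies for categorical ones. It is a deliberately simplified, hypothetical example that ignores correlations between columns, which dedicated synthetic-data libraries are designed to capture, and it does not use the API of any library named above.

```python
import random
import statistics


def fit_and_sample(rows, n, seed=0):
    """Generate n synthetic rows by sampling each column independently from a fitted marginal."""
    rng = random.Random(seed)  # seeded so results are reproducible
    columns = list(rows[0].keys())
    samplers = {}
    for col in columns:
        values = [row[col] for row in rows]
        if all(isinstance(v, (int, float)) for v in values):
            # Numeric column: fit a normal distribution to the observed values.
            mu, sigma = statistics.mean(values), statistics.pstdev(values)
            samplers[col] = lambda mu=mu, sigma=sigma: rng.gauss(mu, sigma)
        else:
            # Categorical column: resample observed categories in proportion to their frequency.
            samplers[col] = lambda values=values: rng.choice(values)
    return [{col: samplers[col]() for col in columns} for _ in range(n)]


real = [
    {"age": 34, "plan": "pro"},
    {"age": 41, "plan": "free"},
    {"age": 29, "plan": "free"},
    {"age": 52, "plan": "enterprise"},
]
for row in fit_and_sample(real, 3, seed=42):
    print(row)
```

Sampling columns independently preserves each column's distribution but not the relationships between them, which is the main gap that copula- and GAN-based generators address.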

AIOPS

Operationalize AI at scale

Cake streamlines the end-to-end lifecycle of generative AI applications—from orchestration to evaluation—backed by scalable infrastructure and built-in observability.

Orchestration & agentic flows

Integrates with model catalogs such as Hugging Face and enables modular workflows using LangChain, LlamaIndex, and Langflow to build and route LLM-powered applications faster. Provides rich tooling for agentic frameworks, including LangGraph, CrewAI, AutoGen, and frameworks from OpenAI and Google.

Flexible inference infrastructure

Supports high-performance serving of large models via frameworks such as vLLM and Ray Serve, with autoscaling and GPU optimization built in.

Prompt & model evaluation

Stop hand-crafting every prompt. Use frameworks such as DSPy to generate and optimize many prompt candidates programmatically. Track, test, and compare those prompts across foundation and fine-tuned models with tools such as Promptfoo and Deepchecks to ensure safe, performant, and cost-effective results.

Tracing & monitoring

Visualize and debug complex generative flows using Langfuse and Arize Phoenix. Use integrated tracing to get full observability into system and model behavior, errors, and latency.

Developer & user interfaces

Quickly deploy rich chat interfaces with Open WebUI, Streamlit, and Vercel. Host secure cloud-based notebooks, and radically accelerate development and data science with vibe-coding tools such as Cursor and Cline.

MLOPS

Your models deserve better infrastructure

Cake gives teams the tools to develop, train, and operate machine learning models with reproducibility, scalability, and observability built in.

Experimentation to deployment

From Jupyter-based prototyping to Kubeflow pipelines, Cake integrates with tools like MLflow and ClearML to track experiments, manage hyperparameters, and promote the best models to production.

Scalable model training

Run distributed training workloads with autoscaling compute across frameworks like PyTorch and XGBoost, powered by Ray for cost-efficient parallelism.

Production-grade serving

Deploy models using inference frameworks such as vLLM, Ray Serve, and NVIDIA Triton. Cake provides built-in support for A/B testing, canary deployments, and autoscaling of your models.
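A canary deployment sends a small share of traffic to a new model version before a full rollout. One common way to split that traffic is to hash a stable request or user ID into 100 buckets, as in this hypothetical sketch; the function name and the "canary"/"stable" labels are assumptions, not part of Cake, Ray Serve, or Triton.

```python
import hashlib


def route(request_id: str, canary_percent: int) -> str:
    """Send roughly canary_percent% of IDs to the canary model, deterministically."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # Hash the ID into one of 100 buckets; the same ID always maps to the same bucket.
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return "canary" if bucket < canary_percent else "stable"


ids = [f"user-{i}" for i in range(10_000)]
canary_share = sum(route(i, 10) == "canary" for i in ids) / len(ids)
print(f"canary share at 10%: {canary_share:.3f}")
```

Because assignment depends only on the ID, each caller sees a consistent model version, and raising `canary_percent` from 10 to 25 only moves callers from stable to canary, never back.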

Observability & drift detection

Monitor model health, latency, and performance with Grafana, Prometheus, and Istio; detect drift in real-world data using tools like Evidently or NannyML before it impacts accuracy.
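One widely used drift statistic is the Population Stability Index (PSI), which compares the binned distribution of a live feature against a reference sample: PSI = sum over bins of (actual - expected) * ln(actual / expected). The sketch below is a minimal, hypothetical implementation with equal-width bins; it is not the API of Evidently or NannyML, which provide their own drift tests.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a live sample, using equal-width bins over the reference range."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to width 1 when the reference is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Live values outside the reference range are clamped into the edge bins.
            idx = min(max(math.floor((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at a tiny proportion so the logarithm stays finite.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(1000)]  # training-time feature values
live_same = list(reference)                 # no drift
live_shifted = [x + 3 for x in reference]   # distribution shifted by 3

print(population_stability_index(reference, live_same))            # prints 0.0
print(population_stability_index(reference, live_shifted) > 0.25)  # prints True
```

A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, though thresholds are usually tuned per feature.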
