Why 90% of Agentic RAG Projects Fail (and How Cake Changes That)

TL;DR

- Most RAG projects stall at 60%: Quick demos are easy to build, but brittle architectures break down before reaching production.

- The last 40% takes real infrastructure: You need evaluation sets, tracing, reranking, ingestion pipelines, and agentic guardrails to go live reliably.

- Cake gets you to production faster: With pre-integrated components and full-stack observability, Cake eliminates the integration tax that kills most RAG efforts.

You’ve probably seen the pattern: someone strings together LangChain, a vector DB, and an LLM and claims they’re doing RAG. Maybe it works well enough in a demo. But it never makes it to production.

Based on our experience working with dozens of enterprise teams, fewer than 10% of RAG projects actually succeed in production. And for Agentic RAG, where multi-step tool use, action-taking, and query transformation come into play, the bar is even higher.

That’s not because RAG is a bad idea. It’s because naive RAG fails. Every time.

What fails: naive RAG and the 60% demo trap

Here’s what a naive RAG architecture looks like:

1. Chunk your documents

2. Embed them and store in a vector DB

3. Retrieve a few chunks on similarity

4. Throw it all into an LLM
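To make the four steps concrete, here is a minimal sketch in Python. It is deliberately toy-sized and makes several assumptions: `embed` is a hypothetical bag-of-words stand-in for a real embedding model, `InMemoryVectorStore` is a hypothetical stand-in for a vector DB, and the LLM call itself is left out (the sketch stops at the assembled prompt). It is not Cake's implementation or any library's API.

```python
import math
import re
from collections import Counter

# Hypothetical, toy-sized naive RAG pipeline for illustration only.


def chunk(text: str, size: int = 40) -> list[str]:
    """Step 1: split a document into fixed-size windows of `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Step 2 (toy stand-in): a lowercase term-count vector.
    A real pipeline would call an embedding model here."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Step 2 (toy stand-in for a vector DB): keeps (vector, chunk) pairs in a list."""

    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def add(self, chunks: list[str]) -> None:
        self.items.extend((embed(c), c) for c in chunks)

    def search(self, query: str, k: int = 3) -> list[str]:
        """Step 3: return the k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_prompt(question: str, chunks: list[str]) -> str:
    """Step 4: stuff every retrieved chunk into one prompt for the LLM."""
    context = "\n\n".join(chunks)
    return f"Answer using only this context:\n\n{context}\n\nQuestion: {question}"
```

Typical use is three lines: `store.add(chunk(doc))`, then `build_prompt(q, store.search(q))`, then send the prompt to a model. Notice what is missing: fixed-size windows cut sentences mid-thought, the top-k cutoff is the only retrieval quality control, and nothing records which chunks were retrieved or why.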

This "works" in early demos, giving you the illusion of progress. But it fails in production because:

- Context windows are limited

- Retrieval quality is inconsistent

- Evaluations are nonexistent

- Prompting is brittle

- Chunking introduces semantic gaps

- You have no idea what broke when things go wrong

Teams hit a wall at 60% quality, then flail for months trying to claw their way to 85%+.

What they need isn’t more prompt tuning. It’s infrastructure. So what does it actually take to go beyond the demo?

The missing infrastructure for real Agentic RAG

To go from prototype to production, you need:

1. Ground truth evaluation sets

- Triples of question / answer / citation

- Stored with lineage and metadata

- Annotated by SMEs and continuously updated

- Synthetic generation and real-user feedback loops

Without evals, you’re flying blind. Most teams fail here.
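A ground-truth set can start as a plain list of records. The sketch below assumes every answer cites a single source chunk ID: it stores the question / answer / citation triple with a free-form metadata dict for lineage (for example, whether an SME wrote it or it was generated synthetically) and scores a retriever by citation recall@k. `EvalExample`, `citation_recall_at_k`, and `failures` are hypothetical names; tools such as Ragas offer richer metrics than this single retrieval check.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical eval-set record and metric, for illustration only.


@dataclass
class EvalExample:
    """One ground-truth triple plus lineage metadata (field names are illustrative)."""
    question: str
    answer: str
    citation: str  # ID of the source chunk or document that supports the answer
    metadata: dict = field(default_factory=dict)  # e.g. origin, annotator, source version


def citation_recall_at_k(
    examples: list[EvalExample],
    retrieve: Callable[[str], list[str]],
    k: int = 5,
) -> float:
    """Fraction of questions whose gold citation appears in the top-k retrieved IDs."""
    if not examples:
        raise ValueError("evaluation set is empty")
    hits = sum(1 for ex in examples if ex.citation in retrieve(ex.question)[:k])
    return hits / len(examples)


def failures(
    examples: list[EvalExample],
    retrieve: Callable[[str], list[str]],
    k: int = 5,
) -> list[EvalExample]:
    """The misses: the first thing an SME should review."""
    return [ex for ex in examples if ex.citation not in retrieve(ex.question)[:k]]
```

Rerunning the same set after every retrieval or prompt change turns "it seems better" into a number, and the `failures` list gives SMEs a concrete queue to annotate.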

2. Tracing and observability

- Multi-hop agentic flows are fragile

- You need full-span tracing: prompt in, model out, tools called, results returned

- Debugging agent errors without tracing is impossible
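To show what full-span tracing captures, here is a minimal, standard-library-only sketch of a span recorder. It is an illustration, not OpenTelemetry's API: in production you would emit spans through an OpenTelemetry SDK or a tool such as Langfuse or Phoenix. `span`, `Span`, and `FINISHED_SPANS` are hypothetical names.

```python
import contextvars
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Iterator, Optional

# Hypothetical minimal tracer; a real system would use an OpenTelemetry SDK.


@dataclass
class Span:
    """One unit of work: an LLM call, a tool call, or a whole agent step."""
    name: str
    span_id: str
    parent_id: Optional[str]
    attributes: dict = field(default_factory=dict)
    start: float = 0.0
    end: float = 0.0
    error: Optional[str] = None


# The span currently open in this thread or task; new spans become its children.
_current_span: contextvars.ContextVar = contextvars.ContextVar("current_span", default=None)
FINISHED_SPANS: list[Span] = []  # stand-in for a trace exporter


@contextmanager
def span(name: str, **attributes) -> Iterator[Span]:
    """Open a span, nest it under the current one, and record timing and errors."""
    parent = _current_span.get()
    s = Span(
        name=name,
        span_id=uuid.uuid4().hex[:16],
        parent_id=parent.span_id if parent else None,
        attributes=dict(attributes),
        start=time.perf_counter(),
    )
    token = _current_span.set(s)
    try:
        yield s
    except Exception as exc:
        s.error = repr(exc)  # keep the failure on the span, then re-raise
        raise
    finally:
        s.end = time.perf_counter()
        _current_span.reset(token)
        FINISHED_SPANS.append(s)
```

Wrapping each agent step in `span("agent_step")` and each model or tool call inside it in its own `span(...)` yields a tree: prompt in and model out as attributes on the LLM span, arguments and results on the tool span, and the exception attached to exactly the span where it happened.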

3. Prompt engineering at scale

- Programmatic prompt generation and optimization (e.g., DSPy), with versioning

- Prompt evaluation across cost, latency, and accuracy

- Prompt regression tracking over time
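Versioning and regression tracking can be sketched without a framework: hash each template so identical text always gets the same ID, attach eval scores to each version, and flag any metric that got worse. This is a hypothetical hand-rolled registry, not DSPy's API; the metric names in `HIGHER_IS_BETTER` and the helper names are assumptions.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical prompt registry for illustration. Direction of "better" per metric:
HIGHER_IS_BETTER = {"accuracy": True, "latency_ms": False, "cost_usd": False}


@dataclass
class PromptVersion:
    template: str
    scores: dict = field(default_factory=dict)  # metric name -> value from an eval run

    @property
    def version_id(self) -> str:
        """Content hash: the same text always maps to the same version ID."""
        return hashlib.sha256(self.template.encode("utf-8")).hexdigest()[:12]


class PromptRegistry:
    """Append-only history of prompt versions per prompt name."""

    def __init__(self) -> None:
        self.history: dict[str, list[PromptVersion]] = {}

    def register(self, name: str, template: str) -> PromptVersion:
        versions = self.history.setdefault(name, [])
        for existing in versions:
            if existing.template == template:
                return existing  # unchanged text: reuse the version and its scores
        version = PromptVersion(template)
        versions.append(version)
        return version


def find_regressions(
    baseline: PromptVersion,
    candidate: PromptVersion,
    tolerances: Optional[dict] = None,
) -> list[str]:
    """Metrics on which the candidate is worse than the baseline by more than the
    per-metric tolerance (default 0, in each metric's own units)."""
    tolerances = tolerances or {}
    regressed = []
    for metric, higher_is_better in HIGHER_IS_BETTER.items():
        if metric not in baseline.scores or metric not in candidate.scores:
            continue  # only compare metrics both versions were evaluated on
        tol = tolerances.get(metric, 0.0)
        delta = candidate.scores[metric] - baseline.scores[metric]
        if (higher_is_better and delta < -tol) or (not higher_is_better and delta > tol):
            regressed.append(metric)
    return regressed
```

Tracking cost, latency, and accuracy side by side matters because a prompt change that lifts accuracy can quietly double latency; `find_regressions` surfaces that trade-off instead of hiding it behind a single score.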

4. Reranking and retrieval quality

- Hybrid search by default (semantic + keyword)

- Reranking with LLMs or lightweight models

- Multi-stage fusion, metadata filtering, and reranker cascades
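One common way to fuse semantic and keyword results is reciprocal rank fusion (RRF), which combines ranks rather than raw scores, so it sidesteps the fact that BM25 scores and cosine similarities live on different scales. The sketch below assumes both searches return ranked lists of document IDs, uses k = 60 (a commonly used default for RRF), applies a metadata filter, and only sends a short list to the more expensive reranker. `hybrid_search` and its parameters are hypothetical; `rerank_score` stands in for a cross-encoder or LLM judge.

```python
from typing import Callable, Iterable, Optional

# Hypothetical hybrid retrieval cascade for illustration.


def reciprocal_rank_fusion(rankings: Iterable[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists of doc IDs: each list adds 1 / (k + rank) to a doc's score."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.__getitem__, reverse=True)


def hybrid_search(
    query: str,
    semantic_search: Callable[[str], list[str]],
    keyword_search: Callable[[str], list[str]],
    rerank_score: Callable[[str, str], float],
    allowed: Optional[Callable[[str], bool]] = None,
    rerank_top_n: int = 20,
    final_k: int = 5,
) -> list[str]:
    """Fuse semantic and keyword results, filter on metadata, then rerank a short list."""
    fused = reciprocal_rank_fusion([semantic_search(query), keyword_search(query)])
    if allowed is not None:
        fused = [doc_id for doc_id in fused if allowed(doc_id)]  # metadata filter
    # Only the top-N fused candidates reach the (expensive) reranker.
    candidates = fused[:rerank_top_n]
    return sorted(candidates, key=lambda doc_id: rerank_score(query, doc_id), reverse=True)[:final_k]
```

The cascade keeps cost bounded: cheap retrieval casts a wide net, fusion and filtering shrink it, and the reranker only ever scores `rerank_top_n` documents per query.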

5. Chunking, ingestion, and cost controls

- Chunking strategies that preserve semantic coherence

- Parallelized ingestion with source-link preservation

- Entity extraction and doc metadata capture

- Cost-aware embeddings and tiered vector storage
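A simple step up from fixed-size windows is to pack whole sentences into chunks with a one-sentence overlap, carrying the source URL on every chunk so answers can cite it. This sketch is one coherence-preserving strategy under stated assumptions (sentences end in `.`, `!`, or `?`; size is measured in words); it is not the embedding-based semantic chunking a platform might use. `Chunk`, `chunk_by_sentences`, and `ingest` are hypothetical names.

```python
import re
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass

# Hypothetical sentence-packing chunker for illustration.


@dataclass
class Chunk:
    text: str
    source_url: str  # link back to the original document for citations
    chunk_index: int


_SENTENCE_BREAK = re.compile(r"(?<=[.!?])\s+")


def _words(sentences: list[str]) -> int:
    return sum(len(s.split()) for s in sentences)


def chunk_by_sentences(
    text: str, source_url: str, max_words: int = 120, overlap_sentences: int = 1
) -> list[Chunk]:
    """Pack whole sentences into chunks of at most `max_words` words, carrying the last
    `overlap_sentences` sentences into the next chunk. A single sentence longer than
    `max_words` becomes its own oversized chunk rather than being cut mid-sentence."""
    sentences = [s for s in _SENTENCE_BREAK.split(text.strip()) if s]
    chunks: list[Chunk] = []
    current: list[str] = []
    for sentence in sentences:
        if current and _words(current) + len(sentence.split()) > max_words:
            chunks.append(Chunk(" ".join(current), source_url, len(chunks)))
            tail = current[-overlap_sentences:] if overlap_sentences > 0 else []
            # Drop the overlap if it would not leave room for the new sentence.
            current = tail if _words(tail) + len(sentence.split()) <= max_words else []
        current.append(sentence)
    if current:
        chunks.append(Chunk(" ".join(current), source_url, len(chunks)))
    return chunks


def ingest(documents: dict[str, str], max_workers: int = 8) -> list[Chunk]:
    """Chunk many documents (url -> text) concurrently; output keeps input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        per_doc = list(pool.map(lambda item: chunk_by_sentences(item[1], item[0]), documents.items()))
    return [c for doc_chunks in per_doc for c in doc_chunks]
```

A thread pool pays off when per-document work is I/O-bound (fetching files, calling an embedding API); pure-Python chunking on its own is CPU-bound, so at real scale that stage would move to processes or a distributed framework.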

6. Agentic workflows with guardrails

- JSON schema-constrained outputs

- Human-in-the-loop options

- Step count minimization to avoid error cascades

- Sandboxing and secure tool usage (especially with MCP, the Model Context Protocol)
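Several of these guardrails fit in one small loop: validate every tool call against a schema before executing it, route action-taking tools to a human, and cap the number of steps. The sketch below is hypothetical throughout (`TOOL_SCHEMAS`, `run_agent`, the two example tools); a real system would more likely use a JSON Schema validator or a model provider's structured-output feature instead of the hand-written type checks, and sandboxing is out of scope here.

```python
import json
from typing import Any, Callable, Optional

# Hypothetical tools: name -> exact argument names and their required types.
TOOL_SCHEMAS: dict[str, dict[str, type]] = {
    "search_docs": {"query": str, "top_k": int},
    "create_ticket": {"title": str, "priority": str},
}
REQUIRES_APPROVAL = {"create_ticket"}  # action-taking tools go to a human first
MAX_STEPS = 5  # hard cap so one bad step cannot cascade indefinitely


class GuardrailError(Exception):
    pass


def parse_tool_call(raw: str) -> tuple[str, dict]:
    """Accept only {"tool": <known name>, "arguments": {...}} with exact, typed args."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise GuardrailError(f"not valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise GuardrailError("tool call must be a JSON object")
    name, args = call.get("tool"), call.get("arguments")
    if not isinstance(name, str) or name not in TOOL_SCHEMAS:
        raise GuardrailError(f"unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise GuardrailError("arguments must be a JSON object")
    schema = TOOL_SCHEMAS[name]
    if set(args) != set(schema):
        raise GuardrailError(f"{name} expects arguments {sorted(schema)}, got {sorted(args)}")
    for key, expected in schema.items():
        if type(args[key]) is not expected:  # strict: rejects true where an int is expected
            raise GuardrailError(f"{name}.{key} must be {expected.__name__}")
    return name, args


def run_agent(
    next_action: Callable[[list], Optional[str]],
    execute: Callable[[str, dict], Any],
    approve: Callable[[str, dict], bool],
) -> list:
    """Run at most MAX_STEPS validated tool calls. `next_action` returns the
    model's raw JSON for the next call, or None when the model is finished."""
    history: list = []
    for _ in range(MAX_STEPS):
        raw = next_action(history)
        if raw is None:
            return history
        name, args = parse_tool_call(raw)
        if name in REQUIRES_APPROVAL and not approve(name, args):
            raise GuardrailError(f"reviewer rejected {name}")
        history.append((name, args, execute(name, args)))
    raise GuardrailError(f"step budget of {MAX_STEPS} exhausted")
```

Failing loudly on a malformed call or an exhausted step budget is deliberate: a stopped agent is easy to debug, while one that improvises around a bad tool result compounds the error on every subsequent step.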


Scaling isn’t just about more users

Most RAG projects don’t fail in the prototype phase. They fail when it’s time to scale. A simple system that works during testing can quickly fall apart as document volume increases, inference costs grow, and agent behaviors become harder to predict. Scaling RAG means making sure every part of your stack (retrieval, prompting, orchestration, and monitoring) runs reliably under pressure from real workloads. In practice, that means:

- Handling millions of documents without reindexing disasters

- Autoscaling inference with engines like vLLM

- Choosing the right proxying and caching strategies (e.g., LiteLLM)

- Monitoring GPU memory, token throughput, and failure modes across APIs

- Canary releasing new prompt chains, agents, or retrieval flows

These aren’t nice-to-haves. They’re table stakes for real-world deployments.
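Canary releases for prompt chains and retrieval flows need sticky assignment, so a user’s conversation does not flip between versions mid-session. A minimal sketch, assuming each request carries a stable user ID: hash the ID into 10,000 buckets, send a fixed percentage to the new flow, and roll back if its error rate climbs. `assign_variant`, `should_roll_back`, and the default thresholds are hypothetical; they are not features of vLLM or LiteLLM.

```python
import hashlib

# Hypothetical canary helpers for illustration.


def assign_variant(user_id: str, canary_percent: float, salt: str = "retrieval-flow-v2") -> str:
    """Deterministically route a stable slice of users to the canary.
    The same user always lands in the same bucket for a given salt."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999, i.e. 0.01% steps
    return "canary" if bucket < canary_percent * 100 else "stable"


def should_roll_back(
    stable_errors: int,
    stable_total: int,
    canary_errors: int,
    canary_total: int,
    max_ratio: float = 1.5,
    min_requests: int = 200,
) -> bool:
    """Roll back when the canary's error rate exceeds max_ratio times the stable rate."""
    if canary_total < min_requests:
        return False  # too little canary traffic to judge yet
    stable_rate = stable_errors / max(stable_total, 1)
    canary_rate = canary_errors / canary_total
    return canary_rate > stable_rate * max_ratio
```

Changing the `salt` per experiment reshuffles which users see each new flow, so the same small group is not always the guinea pig. The same comparison works with eval scores or latency in place of error counts.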

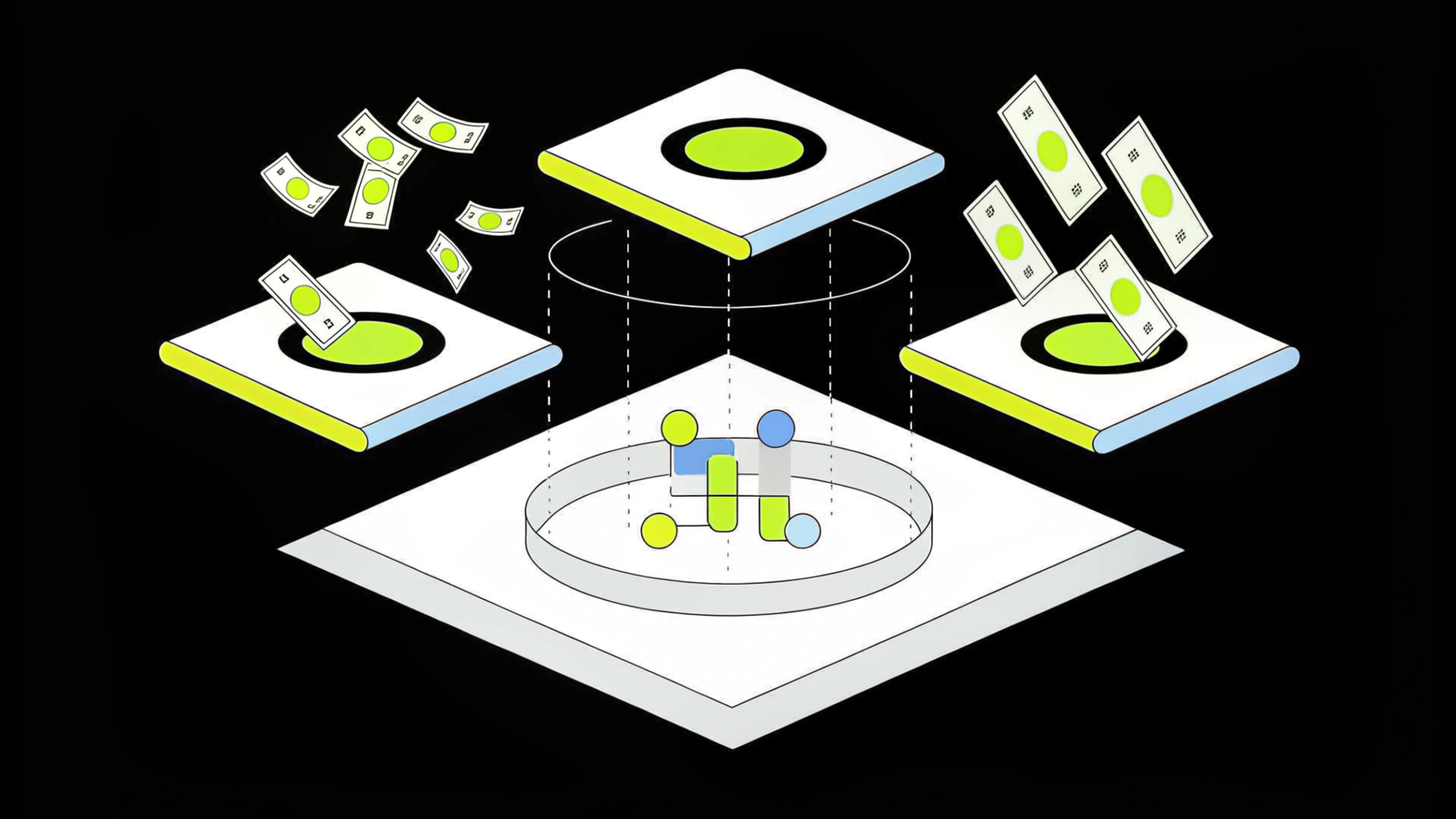

How Cake gets you to production (and keeps you there)

Most teams spend months cobbling together open-source tools to make their RAG stack work: LangChain, LangGraph, Label Studio, Langfuse, Ragas, DSPy, Phoenix, Prometheus, Ray, Kubernetes, Milvus, etc.

Cake brings all of this together in one cohesive, production-grade platform:

- Evaluation pipelines with SME workflows and versioned ground truth

- Prompt tracing, versioning, and monitoring fully integrated

- Hybrid search + intelligent reranking ready to use

- Agentic orchestration with guardrails and visual editing tools

- First-class observability via OpenTelemetry (OTel)-compatible tracing

- Scalable ingestion with semantic chunking, metadata capture, and cost optimization

- Model routing and autoscaling for self-hosted (vLLM, Ollama) or managed (Bedrock) environments

No glue code. No integration tax. No lost quarters.

Agentic RAG isn’t magic. It’s engineering.

RAG systems are software systems. They require rigor, not just intuition. At Cake, we’ve seen the same story play out again and again: teams hit a wall, then accelerate the moment they get observability, evaluation, and orchestration dialed in.

If you’re betting on RAG for your business, don’t waste time duct-taping your way to a brittle stack.

Get to production faster. Stay there longer. Build something real.

Start with Cake.

About the Author

Skyler Thomas

Skyler is Cake's CTO and co-founder. He is an expert in the architecture and design of AI, ML, and Big Data infrastructure on Kubernetes. He has over 15 years of experience building massively scaled ML systems for Fortune 100 enterprises as a CTO, Chief Architect, and Distinguished Engineer at HPE, MapR, and IBM. He is a frequently requested speaker and has presented at numerous AI and industry conferences, including KubeCon, Scale-by-the-Bay, O’Reilly AI, Strata Data, Strat AI, OOPSLA, and JavaOne.
