Cake for Enterprise RAG

Securely index, retrieve, and inject internal context into LLMs with a scalable, cloud-agnostic, composable, and compliance-ready RAG stack built on open source.

Overview

Retrieval-augmented generation (RAG) gives LLMs real context for better answers. But at the enterprise level, the context is massive, fragmented, and access-restricted. Moving beyond demos requires production-grade ingestion, fine-grained access controls, and traceable responses you can defend in audits.

Cake gives you the full RAG stack: ingest data from S3, SaaS APIs, or SQL; chunk, embed, and store it in performant vector DBs like Weaviate; and orchestrate retrieval pipelines with open tooling like LangChain and LlamaIndex. Everything is cloud-agnostic, composable, and auditable for real-world enterprises.
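To make the ingest, chunk, embed, store, and retrieve flow concrete, here is a minimal, dependency-free sketch. It is not Cake's API: `chunk`, `embed`, `InMemoryIndex`, and `build_prompt` are hypothetical names, the token-count "embedding" stands in for a real embedding model, and the in-memory list stands in for a vector database such as Weaviate.

```python
import math
import re
from collections import Counter


def chunk(text, max_words=40, overlap=10):
    """Split text into overlapping word windows (assumed chunking strategy)."""
    words = text.split()
    if not words:
        return []
    step = max_words - overlap
    stop = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + max_words]) for i in range(0, stop, step)]


def embed(text):
    """Toy embedding: lowercase token counts. A real stack would call a model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryIndex:
    """Stand-in for a vector database: stores chunks alongside their vectors."""

    def __init__(self):
        self.rows = []

    def add(self, doc_id, text):
        for n, piece in enumerate(chunk(text)):
            self.rows.append(
                {"doc_id": doc_id, "chunk": n, "text": piece, "vector": embed(piece)}
            )

    def search(self, query, k=3):
        q = embed(query)
        ranked = sorted(self.rows, key=lambda r: cosine(q, r["vector"]), reverse=True)
        return ranked[:k]


def build_prompt(question, hits):
    """Inject retrieved chunks, tagged with their source, into the LLM prompt."""
    context = "\n".join(f"[{h['doc_id']}#{h['chunk']}] {h['text']}" for h in hits)
    return f"Answer using only the context below.\n\n{context}\n\nQuestion: {question}"


index = InMemoryIndex()
index.add("vacation-policy", "Employees accrue fifteen vacation days per year.")
index.add("expense-policy", "Submit expense reports within thirty days of purchase.")

hits = index.search("how many vacation days", k=1)
print(build_prompt("How many vacation days do I get?", hits))
```

Tagging each chunk with its source document in the prompt is one simple way to keep responses traceable back to the material they were grounded in.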

From regulated industries to internal knowledge management, Cake makes it easy to move from pilot to production without duct tape, vendor lock-in, or surprise costs.

Key benefits

- Deploy faster without vendor lock-in: Use open-source components and Cake’s orchestration to build quickly and own your stack. Cake keeps the stack up to date with the latest technologies, giving you best-in-class performance.

- Secure your data: Apply fine-grained access controls, data masking, and audit-ready workflows by default.

- Adapt as you scale: Integrate with any data source or environment using a fully composable RAG stack.

- Improve performance over time: Monitor retrieval quality, identify gaps, and continuously fine-tune models and prompts for better results.

- Support real-time and batch use cases: Run low-latency RAG for chat and decision support, or schedule document processing and summarization workflows at scale.

EXAMPLE USE CASES

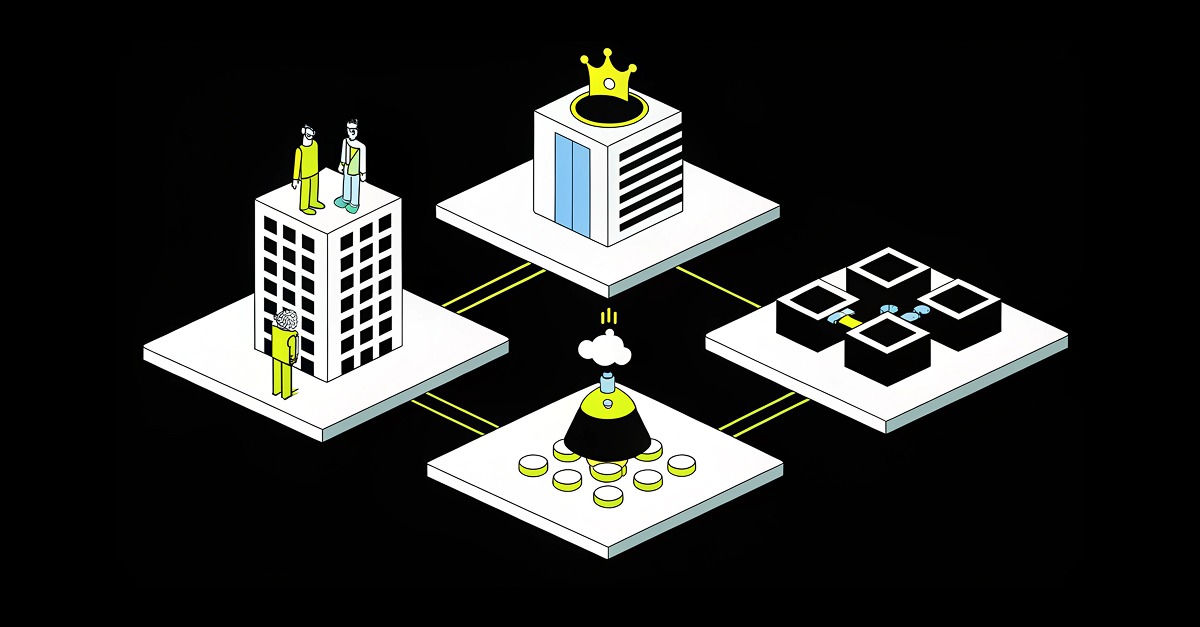

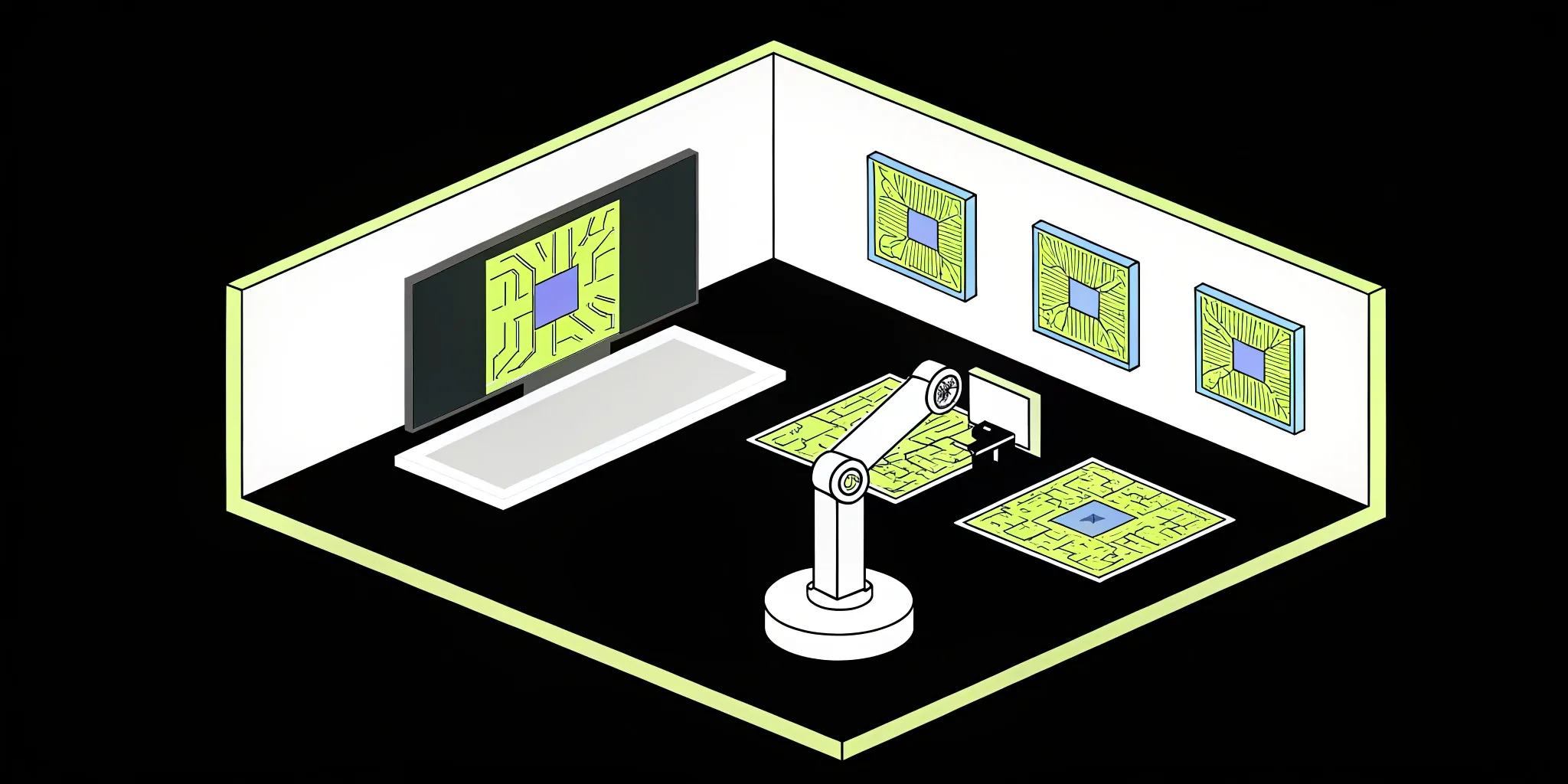

Composable RAG workflows built on your terms with Cake

Internal knowledge search

Empower employees with natural-language access to internal docs, wikis, policies, and support materials.

Customer support intelligence

Retrieve relevant product guides, contracts, and CRM records to assist support reps or fine-tune AI responses.

Regulated industry applications

Provide grounded answers in finance, legal, or healthcare, with full traceability and data controls.

AI assistants for employee workflows

Equip teams with agents that can answer questions, complete tasks, or generate content using internal knowledge, streamlining day-to-day operations.

Contract and policy analysis

Allow legal and compliance teams to search and summarize terms, clauses, or obligations across thousands of documents instantly.

Enterprise-wide search augmentation

Upgrade traditional search portals with AI-assisted answers grounded in real-time data from SharePoint, Confluence, Notion, and more.

THE CAKE DIFFERENCE

From quick demos to production-grade RAG systems

Prototype RAG

Fast to build, but not built to last: Notebook-level demos with hardcoded prompts and limited observability.

- Hardcoded prompts and simple query-to-response loops

- No access control, data isolation, or audit trails

- Fails to scale across teams, tools, or data domains

- No built-in evals or cost monitoring to improve over time

Result: Works in a demo, breaks in enterprise environments

Enterprise RAG with Cake

Secure, scalable, and observable by design: Cake gives you a modular stack to deploy goal-driven RAG systems across your org.

- Deploy with access control, logging, and full traceability

- Integrate structured and unstructured data sources

- Run evals, tune retrieval logic, and monitor cost and latency

- Scale across teams with multi-tenant pipelines and reusable workflows

Result: Production-grade RAG with enterprise security, governance, and performance
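As an illustration of what running evals and monitoring latency can look like in practice, the sketch below scores a retriever with recall@k over a small labeled set and records mean latency. The function names, the labeled queries, and the `fake_retriever` are all hypothetical; this is one common retrieval metric, not a description of Cake's built-in evaluation tooling.

```python
import time


def recall_at_k(retrieved_ids, relevant_ids, k):
    """Fraction of the relevant documents that appear in the top-k results."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    return len(set(retrieved_ids[:k]) & relevant) / len(relevant)


def evaluate(retriever, labeled_queries, k=3):
    """Run every labeled query through the retriever and average the metrics."""
    recalls, latencies = [], []
    for query, relevant_ids in labeled_queries:
        start = time.perf_counter()
        retrieved_ids = retriever(query)
        latencies.append(time.perf_counter() - start)
        recalls.append(recall_at_k(retrieved_ids, relevant_ids, k))
    n = len(labeled_queries)
    return {
        "mean_recall_at_k": sum(recalls) / n,
        "mean_latency_s": sum(latencies) / n,
    }


# Hypothetical retriever and labels, only to show the call shape.
def fake_retriever(query):
    return ["doc-a", "doc-b", "doc-c"]


labeled = [
    ("vacation policy", ["doc-a"]),
    ("expense limits", ["doc-a", "doc-d"]),
]
report = evaluate(fake_retriever, labeled, k=2)
print(report["mean_recall_at_k"])
```

Tracking a metric like this per release makes it easier to tell whether a change to chunking or retrieval logic helped or hurt.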

BLOG

How to build a scalable RAG stack in 48 hours

See how teams go from zero to production-ready retrieval with Cake’s modular infrastructure. No glue code. No vendor lock-in. Just fast, open-source orchestration that works.

IN DEPTH

Structure your data before you retrieve it

RAG is only as good as your inputs. Learn how Cake automates document parsing with LLMs and OCR, turning PDFs, emails, and forms into clean, queryable context.

"Our partnership with Cake has been a clear strategic choice – we're achieving the impact of two to three technical hires with the equivalent investment of half an FTE."

Scott Stafford

Chief Enterprise Architect at Ping

"With Cake we are conservatively saving at least half a million dollars purely on headcount."

CEO

InsureTech Company

COMPONENTS

Tools that power Cake's RAG stack

LangGraph

Agent Frameworks & Orchestration

LangGraph is a framework for building stateful, multi-agent applications with precise, graph-based control flow. Cake helps you deploy and scale LangGraph workflows with built-in state persistence, distributed execution, and observability.

LangChain

Agent Frameworks & Orchestration

LangChain is a framework for developing LLM-powered applications using tools, chains, and agent workflows.

Weaviate

Vector Databases

Weaviate is an open-source vector database and search engine built for AI-powered semantic search. Cake integrates Weaviate to support scalable retrieval, recommendation, and question answering systems.

Langflow

Agent Frameworks & Orchestration

Langflow is a visual drag-and-drop interface for building LangChain apps, enabling rapid prototyping of LLM workflows.

DSPy

LLM Optimization

DSPy is a framework for optimizing LLM pipelines using declarative programming, enabling dynamic tool selection, self-refinement, and multi-step reasoning.

Promptfoo

LLM Observability

LLM Optimization

Promptfoo is an open-source testing and evaluation framework for prompts and LLM apps, helping teams benchmark, compare, and improve outputs.

Frequently asked questions

What is Enterprise RAG?

Enterprise RAG (retrieval-augmented generation) combines large language models with your proprietary data to generate accurate, context-aware responses. Cake lets you build these systems with full control over orchestration, retrieval, inference, and compliance.

How does Cake support enterprise-grade RAG?

Cake provides a modular open-source stack for building RAG systems. You can connect to your preferred tools for retrieval, orchestration, and model inference, while keeping everything secure, observable, and production-ready.

Can I use my own vector store or model with Cake?

Yes. You can bring your own vector store (like Milvus, Weaviate, or pgvector) and run inference through LiteLLM, vLLM, or any custom or proprietary endpoint.

What kind of RAG use cases can I build with Cake?

Teams use Cake to power real-time agents, document summarization tools, contract analysis pipelines, customer support assistants, and more. Cake supports both live and scheduled RAG workflows.

How does Cake handle security and compliance for RAG?

All workloads run inside your environment with no data egress. Cake supports HIPAA and SOC 2 Type II compliance and lets you apply fine-grained access controls, redaction, and full audit logging across the stack.
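The following sketch shows one way access control, redaction, and audit logging can be layered onto retrieval results before they reach the model. It is illustrative only and does not reflect Cake's implementation: the `allowed_groups` field, the function names, and the email-only redaction rule are assumptions.

```python
import re
from datetime import datetime, timezone

# Assumed redaction rule: mask email addresses. Real deployments cover more PII types.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text):
    """Replace email addresses with a placeholder token."""
    return EMAIL.sub("[REDACTED_EMAIL]", text)


def authorized_hits(hits, user_groups):
    """Keep only chunks whose allowed groups overlap the caller's groups."""
    return [h for h in hits if h["allowed_groups"] & user_groups]


def retrieve_for_user(hits, user, user_groups, audit_log):
    """Filter by access, record an audit entry, then redact what is returned."""
    visible = authorized_hits(hits, user_groups)
    audit_log.append(
        {
            "user": user,
            "time": datetime.now(timezone.utc).isoformat(),
            "returned": [h["doc_id"] for h in visible],
            "withheld": len(hits) - len(visible),
        }
    )
    return [{**h, "text": redact(h["text"])} for h in visible]


# Hypothetical retrieval results carrying per-chunk access metadata.
raw_hits = [
    {"doc_id": "hr-1", "text": "Contact jane.doe@example.com for leave", "allowed_groups": {"hr"}},
    {"doc_id": "eng-1", "text": "Page ops@example.com during an outage", "allowed_groups": {"eng"}},
]
audit_log = []
safe_hits = retrieve_for_user(raw_hits, "alice", {"eng"}, audit_log)
print(safe_hits[0]["text"])
```

Filtering before redaction means restricted chunks never reach the prompt at all, and the audit entry records both what was returned and how much was withheld.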

Related posts

The Best Open Source AI: A Complete Guide

Find the best open source AI tools for 2025, from top LLMs to training libraries and vector search, to power your next AI project with full control.

Your Guide to the Top Open-Source MLOps Tools

Find the best open-source MLOps tools for your team. Compare top options for experiment tracking, deployment, and monitoring to build a reliable ML...

13 Open Source RAG Frameworks for Agentic AI

Find the best open source RAG framework for building agentic AI systems. Compare top tools, features, and tips to choose the right solution for your...
