ETL Pipelines for AI: Streamlining Your Data

Building a powerful AI model without a solid data strategy is like constructing a skyscraper on a weak foundation. It doesn’t matter how impressive the structure is if the base can’t support it. In the world of AI, your data pipeline is that foundation. A traditional ETL process can get your data from one place to another, but it often lacks the sophistication needed for complex AI applications. To truly accelerate your initiatives, you need a smarter approach. This is where AI-enhanced systems come into play. We’ll explore how to design, build, and maintain resilient ETL pipelines for AI, ensuring your data infrastructure is strong enough to support your most ambitious projects.

Key takeaways

- Let AI handle the grunt work: Use artificial intelligence to automate the tedious, repetitive parts of ETL, like data mapping and cleaning. This frees your engineers from manual tasks so they can focus on high-value work like architectural design and strategy.

- Prioritize data quality with ML: Your AI models are only as good as the data they train on. Embed ML directly into your transformation stage to automatically standardize formats, fix inconsistencies, and ensure your data is clean and reliable.

- Define your objective before you build: A successful pipeline starts with a clear goal. Knowing the specific business problem you want to solve will guide every decision, from which data sources to use to how you structure the final output, ensuring your efforts are focused and effective.

What is an ETL pipeline?

If you've ever tried to make sense of data from multiple sources—like your sales platform, marketing tools, and customer support logs—you know it can get messy. Each system has its own way of organizing information, and bringing it all together for a clear picture is a huge challenge. This is where an ETL pipeline comes in.

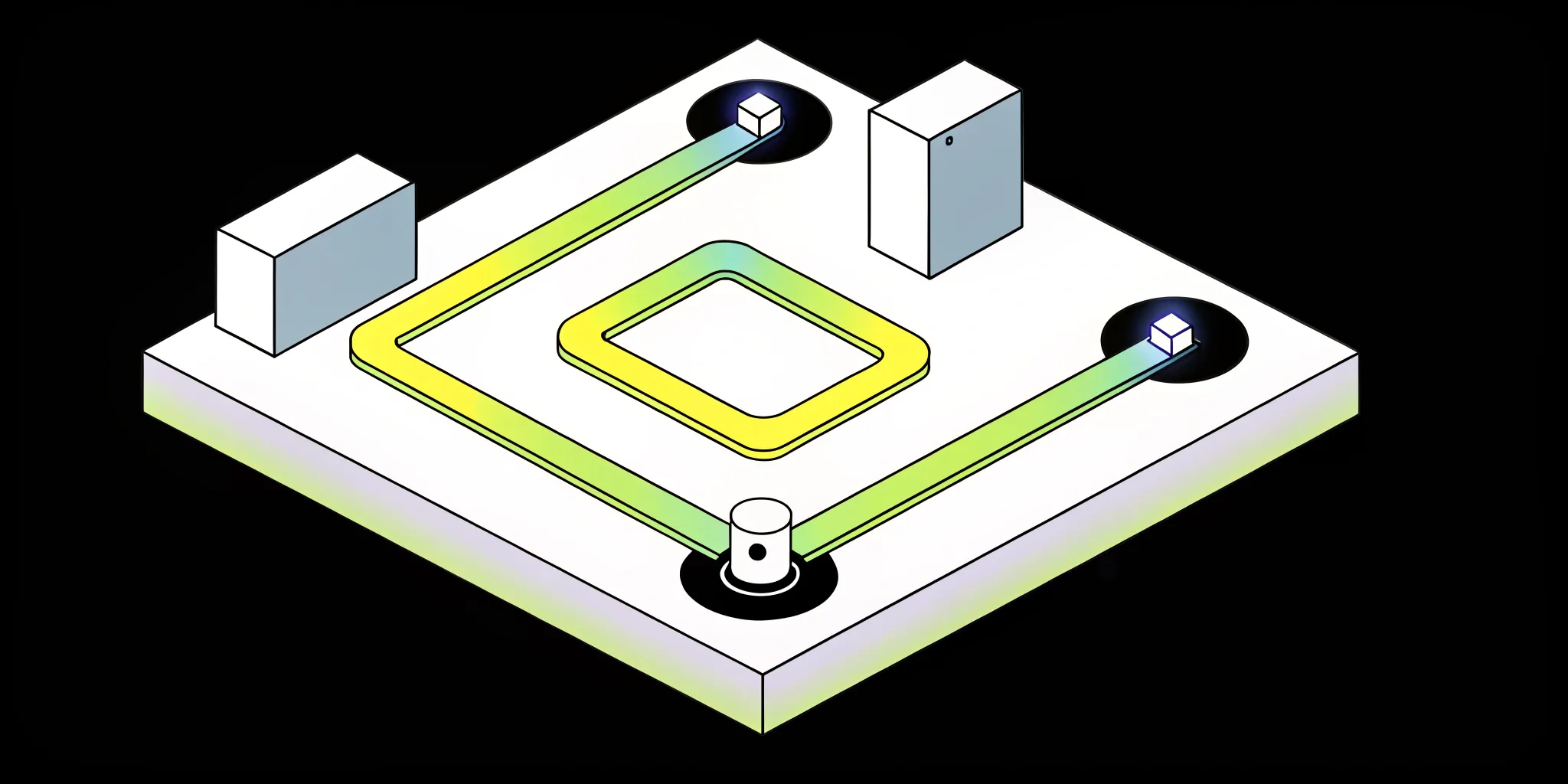

ETL stands for Extract, Transform, and Load. It’s a data integration process that pulls data from various sources, cleans it up, and moves it to a single destination, like a data warehouse. Think of it like organizing a chaotic kitchen. First, you extract all the ingredients from different cupboards and the fridge. Next, you transform them by washing, chopping, and measuring everything you need for your recipe. Finally, you load the prepared ingredients into a single bowl, ready for cooking.

An ETL pipeline automates this entire workflow, creating a reliable, repeatable process for preparing data. Instead of manually exporting spreadsheets and trying to match up columns, the pipeline does the heavy lifting for you. This ensures that the data you use for analytics and AI models is consistent, accurate, and ready for action. It’s the foundational plumbing that makes modern data analytics possible.

Why ETL is essential for AI and ML

You’ve probably heard the phrase "garbage in, garbage out." It’s especially true for AI. Machine Learning (ML) and AI models are incredibly powerful, but their performance is completely dependent on the quality of the data they are trained on. If you feed a model messy, inconsistent, or incomplete data, you’ll get unreliable predictions and flawed insights.

ETL is the critical process that ensures your data is clean, reliable, and structured correctly for AI applications. It provides a standardized framework for preparing data, which is essential for building effective models. By automating data preparation, ETL pipelines free up your data scientists and engineers from manual data wrangling so they can focus on building and refining AI solutions. This creates a stronger foundation for more advanced AI applications and ultimately drives better business outcomes.

How an AI-ready ETL pipeline works

At its core, an AI-ready ETL pipeline is a three-step process that gets your data into shape for ML. Think of it as the essential prep work before the main event. It involves extracting raw data from various sources, transforming it into a clean and consistent format, and loading it into a system where your AI models can put it to work. Let's walk through how each stage functions.

1. Extract: gather your data

The first step is to pull data from all the places it lives. This could include customer relationship management (CRM) systems, databases, cloud applications, and even IoT sensor logs. In this phase, the goal is to collect all the raw information you need without worrying about its format just yet. For an AI project to succeed, your pipeline must be able to connect to diverse data sources and handle everything from structured tables to unstructured text and images. This initial gathering is the foundation for the entire ETL process, because the quality of your AI's insights depends on the quality of the data you feed it.

2. Transform: prepare your data for analysis

This is where the real magic happens. The transform stage takes the raw, messy data you’ve extracted and gets it ready for analysis. This involves cleaning up inconsistencies, standardizing formats, removing duplicates, and enriching the data with additional context. AI is a game-changer here, as it can automate many of these tedious tasks. ML algorithms can identify patterns, classify information, and even suggest ways to improve data quality. By building ETL pipelines with AI, you can create more efficient, self-correcting systems that require less manual coding and deliver higher-quality data for your models.

3. Load: store your processed data

Once your data is cleaned and structured, it’s time to load it into its final destination. This is typically a data warehouse, data lake, or another storage system optimized for large-scale analysis. The key is to make this processed data readily accessible for your AI and ML models to train on and query against. An effective loading process ensures that your clean data is delivered efficiently to the right place, turning it into a reliable resource for generating insights. Ultimately, a well-designed AI data pipeline automates this entire flow, turning raw information into a powerful asset for making smarter business decisions.
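
To make the three stages concrete, here is a minimal sketch using only the Python standard library. Everything in it is an assumption for illustration: the sample CSV, the `customers` table, and the cleaning rules (trim and lowercase emails, drop rows without one, remove duplicates) stand in for whatever your own sources and destination require.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a CRM, API, or log file.
RAW_CSV = """customer_id,email,signup_date
1, Alice@Example.com ,2024-01-05
2,bob@example.com,2024-01-07
2,bob@example.com,2024-01-07
3,,2024-01-09
"""


def extract(raw_text):
    """Extract: read rows from the source without judging their quality."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: normalize emails, drop rows without one, remove duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        email = (row["email"] or "").strip().lower()
        if not email:
            continue  # incomplete record
        key = (row["customer_id"], email)
        if key in seen:
            continue  # duplicate record
        seen.add(key)
        cleaned.append((int(row["customer_id"]), email, row["signup_date"]))
    return cleaned


def load(records, conn):
    """Load: write the cleaned records to the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(customer_id INTEGER PRIMARY KEY, email TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", records)
    conn.commit()


# An in-memory SQLite database stands in for a real warehouse.
conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM customers ORDER BY customer_id").fetchall())
```

Keeping each stage as its own function makes it easier to test the stages separately and to swap the hand-written cleaning rules for a learned component later.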

Solve common ETL challenges for AI

An ETL pipeline is essential for any serious AI project, but it’s not always a straight shot from raw data to valuable insight. You’re likely to run into a few common roadblocks along the way. The good news is that many of these challenges—from messy data to massive scale—can be solved by strategically applying AI to the ETL process itself. Let's break down the three biggest hurdles and how to clear them.

1. Maintaining data quality and consistency

Your AI models are only as good as the data they’re trained on. Maintaining clean, consistent data is non-negotiable, but it’s often a manual, error-prone process. AI can be the solution here. Intelligent tools can automatically spot anomalies, fix inconsistencies, and validate data against your rules. This is where having a comprehensive solution that manages the entire stack can accelerate your AI initiatives. Instead of wrestling with data quality tools, your team can focus on building models. AI can also continuously monitor your pipelines and adjust to changes, ensuring your data quality remains high over time.
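
Validating data against your rules can start very simply, before any ML is involved. The sketch below assumes a hypothetical record layout (`customer_id`, `email`, `signup_date`) and three illustrative rules. It is not a substitute for a full data quality tool, only a picture of what "validate against your rules" means in code.

```python
from datetime import date


def is_iso_date(value):
    """True if value is a string in ISO format, e.g. 2024-01-05."""
    try:
        date.fromisoformat(value)
        return True
    except (TypeError, ValueError):
        return False


# Hypothetical rules: one check per field.
RULES = {
    "customer_id": lambda v: isinstance(v, int) and v > 0,
    "email": lambda v: isinstance(v, str) and "@" in v,
    "signup_date": is_iso_date,
}


def validate(record, rules=RULES):
    """Return the names of fields that are missing or fail their rule."""
    return [
        field
        for field, check in rules.items()
        if field not in record or not check(record[field])
    ]


def split_valid(records):
    """Separate clean records from rejected ones, keeping the failure reasons."""
    valid, rejected = [], []
    for record in records:
        failures = validate(record)
        if failures:
            rejected.append((record, failures))
        else:
            valid.append(record)
    return valid, rejected
```

Keeping the rejected records together with the reason they failed gives you a review queue instead of silently dropped rows.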

2. Addressing scalability and performance

As your business grows, so does your data. Manually managing ETL processes quickly becomes a significant bottleneck, slowing down your entire AI project. ML can take over many of the repetitive and time-consuming tasks, from data mapping to transformation scheduling. This automation not only makes your ETL process faster and more accurate but also allows it to become more scalable. By handing off the routine work to AI, you free up your engineers to focus on higher-value problems instead of getting bogged down in pipeline maintenance.

3. Handling complex integrations

Your data probably lives in a lot of different places and comes in all sorts of formats, from structured databases to messy, unstructured documents. Pulling all of that together is a major integration headache. AI can simplify this by assisting at every stage of the ETL workflow. For example, it excels at extracting information from unstructured text where some approximation is acceptable. While structured data requires more precision, AI can still help by suggesting data mappings and transformations. This doesn't require a complete overhaul of your process; instead, AI acts as a smart assistant, making your existing ETL workflow more efficient.
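
As a rough illustration of suggested data mappings, the sketch below proposes a target field for each source field using plain string similarity from Python's `difflib`. This is a deliberately simple stand-in for an ML-based matcher, and the field names are hypothetical. The output is a suggestion for a human to confirm, not a final mapping.

```python
from difflib import get_close_matches


def normalize(name):
    """Lowercase a field name and unify separators so similar names compare well."""
    return name.lower().replace("-", "_").replace(" ", "_")


def suggest_mappings(source_fields, target_fields, cutoff=0.6):
    """Suggest a target field for each source field, or None if nothing is close."""
    targets = {normalize(t): t for t in target_fields}
    suggestions = {}
    for field in source_fields:
        match = get_close_matches(normalize(field), list(targets), n=1, cutoff=cutoff)
        suggestions[field] = targets[match[0]] if match else None
    return suggestions


suggested = suggest_mappings(
    ["Customer-ID", "E-mail Address", "zzz"],
    ["customer_id", "email_address", "signup_date"],
)
for source, target in suggested.items():
    print(f"{source!r} -> {target!r}")
```

Fields that come back as `None` are the ones that need a person's attention, which is where the time savings come from: the obvious matches are handled and only the ambiguous ones are left.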

Use AI to improve ETL efficiency

Traditional ETL processes can be robust, but they often become a bottleneck. They can be slow, rigid, and require a lot of manual work to build and maintain. This is where AI steps in to completely change the game. By infusing your ETL pipelines with AI, you can automate tedious work, make smarter data transformations, and monitor your systems with incredible precision. It’s about moving from a reactive, manual process to a proactive, intelligent one that gets high-quality data to your AI models faster and more reliably.

Automate repetitive tasks

Think about all the time your team spends on repetitive ETL chores: mapping data fields, writing simple cleaning scripts, and fixing recurring errors. AI can take over these tasks, freeing up your engineers to focus on more strategic work. AI-powered tools can automatically scan your data sources and suggest mappings, saving hours of manual effort. They also offer intelligent suggestions to solve problems as they arise. Even better, many modern platforms use natural language processing to create no-code or low-code solutions, allowing team members to describe a task in plain English while the AI builds the necessary workflow. This makes data integration more accessible to everyone, not just developers.

Optimize data transformation with ML

The transformation stage is where your raw data gets its real value, and ML algorithms are exceptionally good at this. ML models excel at identifying patterns and classifying information within large datasets. In an ETL pipeline, this means they can automatically identify which data is usable, normalize different formats into a single standard, and flag outliers that a human might miss. For example, an ML model can standardize addresses or categorize customer feedback without manual rules. This automated approach not only speeds up the transformation process but also improves data governance by ensuring your data is consistent, clean, and ready for analysis.
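
To show what flagging outliers looks like in practice, here is a small sketch that uses a robust statistical test, the modified z-score based on the median absolute deviation, rather than a trained model. The sample values and the 3.5 threshold are assumptions for illustration. A production pipeline might use a learned anomaly detector in the same spot.

```python
from statistics import median


def flag_outliers(values, threshold=3.5):
    """Return the indexes of values whose modified z-score exceeds the threshold."""
    med = median(values)
    # Median absolute deviation: a spread measure that outliers barely affect.
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no measurable spread, so nothing can be scored
    return [
        i
        for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


# Hypothetical order totals with one obviously wrong entry.
order_totals = [102, 98, 101, 99, 100, 97, 103, 5000]
print(flag_outliers(order_totals))
```

Using the median instead of the mean matters here: a single extreme value would inflate a mean-based baseline and could hide itself.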

Implement real-time processing and monitoring

In many business scenarios, yesterday’s data is already old news. AI helps you move away from slow, batch-based processing toward real-time data pipelines. This shift to real-time processing means your AI applications always have the most up-to-date information for tasks like fraud detection or inventory management. Beyond speed, AI also provides intelligent monitoring for your pipelines. Instead of waiting for a pipeline to break and cause downstream problems, AI tools can monitor data flow, detect anomalies, and even predict potential failures before they happen. They can also automatically adjust to changes in data sources, making your entire ETL process more resilient and self-sufficient.
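
The difference between batch and real-time processing is easiest to see in code. The sketch below handles events one at a time with a Python generator and flags any amount far above the recent average. The event shape, the window size, and the "three times the recent average" rule are all assumptions, with the simple rule standing in for a real fraud model.

```python
from collections import deque


def process_stream(events, window=5, factor=3.0):
    """Yield each event as it arrives, flagged if its amount far exceeds the recent average."""
    recent = deque(maxlen=window)  # rolling window of recent normal amounts
    for event in events:
        amount = float(event["amount"])
        baseline = sum(recent) / len(recent) if recent else None
        suspicious = baseline is not None and amount > factor * baseline
        yield {**event, "amount": amount, "suspicious": suspicious}
        if not suspicious:
            # Only normal amounts update the baseline, so one spike does not mask the next.
            recent.append(amount)


# Hypothetical transaction stream; a real source might be a message queue.
events = [{"id": i, "amount": a} for i, a in enumerate([10, 12, 11, 90, 13])]
for result in process_stream(events):
    print(result)
```

Because the function is a generator, each event is transformed and emitted as soon as it is read, rather than after the whole batch has been collected.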

How to design an AI-ready ETL pipeline

Building an ETL pipeline that’s ready for artificial intelligence is less about flipping a switch and more about thoughtful design. It requires a strategic approach that aligns your data processes with your AI ambitions from the very beginning. By focusing on clear goals, data quality, and continuous monitoring, you can create a robust foundation that not only feeds your AI models but also adapts and improves over time. Here’s how you can design a pipeline that sets your AI initiatives up for success.

Define clear AI objectives

Before you move a single byte of data, you need to know why you're doing it. What specific business problem will your AI solve? Are you trying to predict customer churn, optimize inventory, or personalize user experiences? Your answer shapes the entire ETL process. Having clear goals helps you identify the exact data sources you need and the transformations that will make the data most valuable for your models. AI is revolutionizing data management by making ETL pipelines much faster to build and maintain, but this efficiency is only realized when you have a clear direction. A well-defined objective is your roadmap, guiding every decision you make while building your ETL pipeline.

Use ML for data quality

Clean, consistent data is the lifeblood of any effective AI model. Manually scrubbing massive datasets is not only tedious but also prone to error. This is where you can use ML within your transformation step. ML algorithms are brilliant at spotting patterns, so they can automatically identify and correct inconsistencies, normalize formats, and classify information. For example, an algorithm can learn to recognize and standardize different address formats from multiple sources. By embedding ML in your ETL process, you automate data governance and ensure the information loaded into your systems is reliable, consistent, and ready for analysis.

Incorporate monitoring and feedback loops

An ETL pipeline isn't a "set it and forget it" system. Data sources change, APIs get updated, and schemas can drift, all of which can break your pipeline or silently corrupt your data. That’s why continuous monitoring is essential. Modern AI tools provide powerful data observability, helping you watch for problems and automatically adjust to shifts in the data landscape. Think of it as an early warning system that flags anomalies, like a sudden drop in data volume from a key source. By creating these feedback loops, your pipeline can either self-correct or alert a developer to intervene, ensuring the integrity and performance of your data flow remains high.
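
Two of the problems described above, a sudden drop in data volume and schema drift, can be caught with a few lines of checking on every batch. This is a minimal sketch with hypothetical column names and an assumed rule that a batch below half the recent average is suspicious. Dedicated observability tools go much further.

```python
def check_batch(rows, expected_columns, recent_counts, min_ratio=0.5):
    """Return a list of human-readable alerts for one incoming batch."""
    alerts = []
    expected = set(expected_columns)

    # Volume check: compare this batch with the average of recent batches.
    if recent_counts:
        baseline = sum(recent_counts) / len(recent_counts)
        if len(rows) < min_ratio * baseline:
            alerts.append(f"volume drop: {len(rows)} rows, baseline {baseline:.0f}")

    # Schema check: compare the columns actually seen with the expected set.
    if rows:
        seen = set()
        for row in rows:
            seen.update(row.keys())
        missing = sorted(expected - seen)
        extra = sorted(seen - expected)
        if missing:
            alerts.append(f"missing columns: {missing}")
        if extra:
            alerts.append(f"unexpected columns: {extra}")
    return alerts


batch = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b@example.com", "phone": "555"},
]
for alert in check_batch(batch, ["id", "email", "signup_date"], [100, 110, 90]):
    print(alert)
```

An empty list means the batch looks healthy. Anything else can be routed to a developer or used to pause the load step before bad data reaches your models.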

Ensure compliance and explainability

While AI can automate and optimize many parts of the ETL process, it doesn’t make human experts obsolete. On the contrary, their role becomes even more strategic. An experienced developer is still essential for designing complex workflows, troubleshooting unexpected issues, and ensuring the final output meets business requirements. Furthermore, humans are critical for ensuring compliance and explainability. You need to be able to explain how your data was processed to meet regulatory standards like GDPR. ETL developers provide the oversight needed to validate that the automated processes are fair, transparent, and aligned with your company’s ethical guidelines.

How ETL is evolving with AI

The traditional ETL process has always been a workhorse for data management, but it’s getting a major upgrade. AI and ML are not just adding a new layer to ETL; they are fundamentally changing how it works. This evolution is making data pipelines smarter, faster, and more accessible than ever before. Instead of being a rigid, code-heavy process managed only by engineers, ETL is becoming an intelligent, automated system that can empower your entire team to work with data more effectively.

Key trends and technologies

One of the most significant shifts in ETL is the move toward intelligent automation. AI can now handle many of the repetitive tasks that used to consume engineering resources, from mapping data fields to identifying and correcting errors. Generative AI, in particular, is transforming how we process unstructured data like text, images, and audio. This technology is also changing who performs ETL work. Business users with deep domain knowledge but less technical expertise can now handle some tasks that were previously reserved for data engineers, closing the gap between data and decision-making.

The role of predictive analytics in ETL

Modern ETL pipelines are moving beyond simple, rule-based transformations. By incorporating predictive analytics, these pipelines can anticipate issues before they happen. ML models can analyze incoming data to predict potential quality problems, suggest the most efficient transformation logic, and even dynamically adjust resources to handle fluctuating data loads. This proactive approach results in greater efficiency, higher data quality, and improved scalability. Ultimately, using ML within your ETL process builds a stronger foundation for more advanced AI applications, ensuring the data feeding your models is clean, consistent, and reliable.
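
As a simple picture of adjusting resources to fluctuating data loads, the sketch below forecasts the next batch size with a moving average and converts it into a worker count. The moving average stands in for a real predictive model, and `rows_per_worker` and `max_workers` are hypothetical capacity figures you would measure for your own pipeline.

```python
import math


def forecast_next(volumes, window=3):
    """Forecast the next batch size as the average of the most recent batches."""
    recent = volumes[-window:]
    return sum(recent) / len(recent) if recent else 0.0


def workers_needed(volumes, rows_per_worker, window=3, max_workers=16):
    """Translate the forecast into a worker count between 1 and max_workers."""
    expected = forecast_next(volumes, window)
    return max(1, min(max_workers, math.ceil(expected / rows_per_worker)))


# Hypothetical row counts for the last five batches.
history = [1000, 1200, 5000, 5200, 5400]
print(workers_needed(history, rows_per_worker=2000))
```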

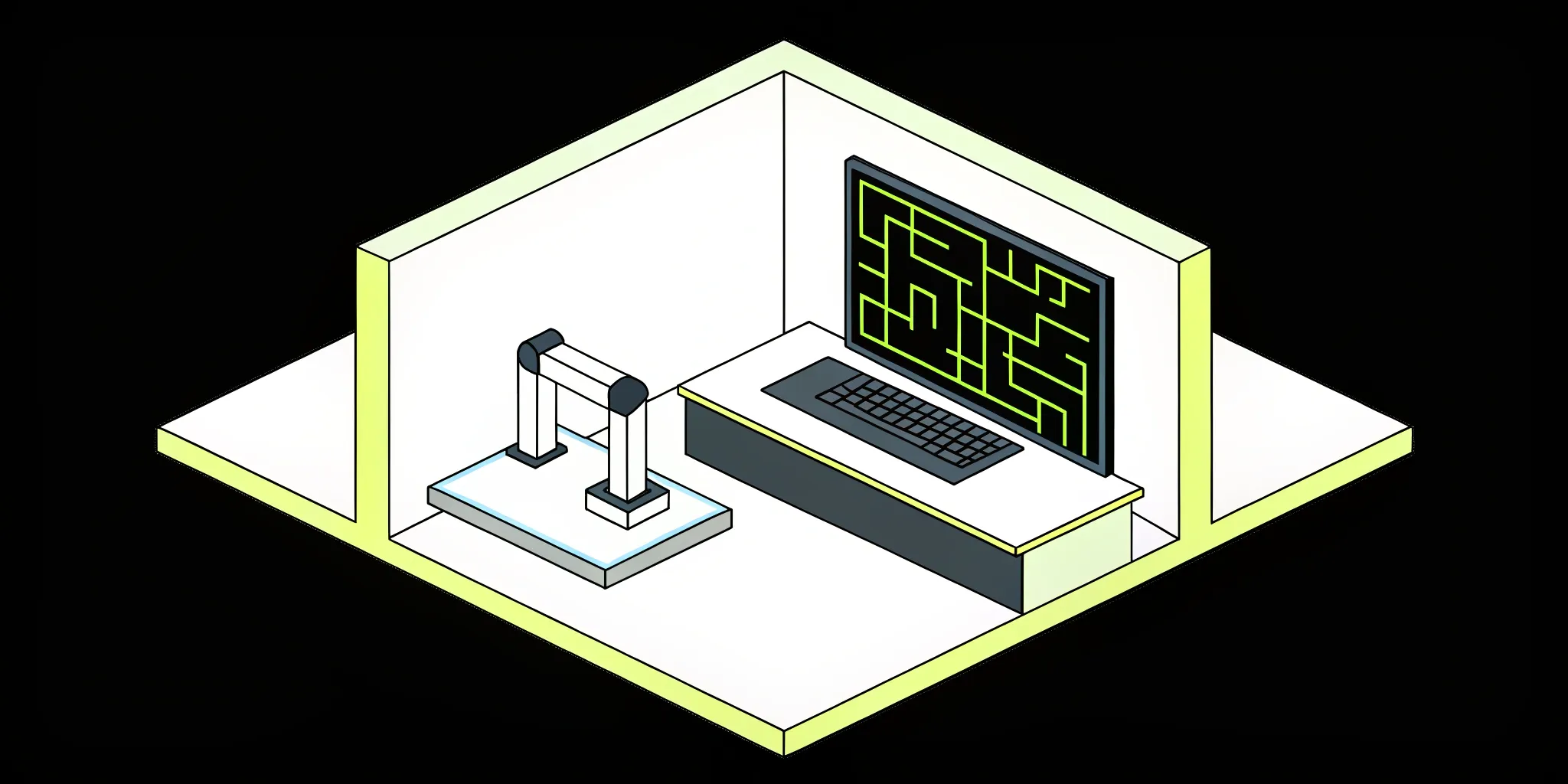

Using natural language processing for ETL

Another exciting development is the use of Natural Language Processing (NLP) to simplify pipeline creation and management. This trend is driving the adoption of no-code/low-code solutions where you can describe your data needs in plain language, and the system generates the necessary pipeline. For example, a user could simply type, "Extract customer names and purchase history from sales data, and load it into the marketing database." This dramatically reduces the reliance on manual coding, which means your team can build and modify data pipelines much faster, accelerating your entire data-to-insight lifecycle.

Get the most from your ETL process

Building an AI-ready ETL pipeline is a huge step, but the work doesn’t stop there. To truly get value from your data infrastructure, you need to continuously refine your process. This means making sure your pipeline is not just functional, but is actively and efficiently supporting your specific AI initiatives. It’s about moving from a generic data flow to a highly tuned engine for your models. By focusing on your goals and measuring performance, you can ensure your ETL process delivers the high-quality data your AI projects need to succeed.

Align your ETL strategy with AI goals

Your ETL process shouldn't exist in a vacuum. It needs to be directly tied to what you want your AI to achieve. Before you even think about data sources, ask yourself: What problem is my AI model solving? What specific insights do I need? The answers will guide every decision you make, from which data to extract to how you clean and structure it. This alignment ensures you’re not just moving data around, but are purposefully preparing the exact fuel your AI model needs to perform well.

AI itself is a powerful ally in this process, making pipelines faster and easier to maintain. By integrating ML directly into your ETL workflows, you can automate quality checks and transformations, ensuring the data that reaches your model is clean, consistent, and ready for analysis. This creates a positive feedback loop where AI helps improve the very data pipelines that feed it.

Measure and improve ETL performance

If you’re not tracking your ETL pipeline’s performance, you’re missing major opportunities for improvement. Key metrics to watch include data processing speed, error rates, and overall cost. The goal is to make your pipeline faster, more reliable, and more cost-effective over time. For instance, AI can dramatically cut down on manual effort; some teams have seen time spent on data preparation drop from 80% of their workflow to just 20%. This frees up your data engineers to focus on more strategic work.
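
Tracking speed and error rate does not need special tooling to get started. The sketch below wraps a per-row transformation and records how many rows were processed, how many failed, and how long the run took. The `RunMetrics` class and `run_with_metrics` function are hypothetical names, and cost is left out because it depends on your infrastructure.

```python
import time
from dataclasses import dataclass


@dataclass
class RunMetrics:
    rows_in: int = 0
    rows_failed: int = 0
    seconds: float = 0.0

    @property
    def error_rate(self):
        """Share of input rows that failed to transform."""
        return self.rows_failed / self.rows_in if self.rows_in else 0.0

    @property
    def rows_per_second(self):
        """Processing speed for the whole run."""
        return self.rows_in / self.seconds if self.seconds > 0 else 0.0


def run_with_metrics(rows, transform_row):
    """Apply transform_row to every row, counting failures instead of stopping."""
    metrics = RunMetrics()
    output = []
    start = time.perf_counter()
    for row in rows:
        metrics.rows_in += 1
        try:
            output.append(transform_row(row))
        except (KeyError, ValueError, TypeError):
            metrics.rows_failed += 1
    metrics.seconds = time.perf_counter() - start
    return output, metrics


cleaned, metrics = run_with_metrics(["1", "2", "x", "4"], int)
print(cleaned, metrics.rows_failed, f"{metrics.error_rate:.0%}")
```

Logging these numbers on every run gives you a baseline, so you can tell whether a change to the pipeline made it faster or more reliable.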

When you build ETL pipelines with AI, it’s smart to be strategic about where you apply it. Using AI during the design and development phases is a great way to optimize transformations and catch errors early. However, using it at runtime can be less predictable. If you do use AI components in your live pipeline, make sure you have strong validation checks in place to maintain data integrity and prevent unexpected outcomes. This careful approach helps you get the benefits of AI without introducing unnecessary risk.
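
One way to put validation checks around a runtime AI component is to treat its output as untrusted until it passes a check. In this sketch, `classify` is a placeholder for any model call, and the allowed labels are hypothetical. The wrapper accepts the answer only if it is one of the expected values and otherwise falls back to a safe default.

```python
# Hypothetical label set for categorizing customer feedback.
ALLOWED_LABELS = {"billing", "shipping", "product", "other"}


def guarded_classify(text, classify, fallback="other"):
    """Call an untrusted classifier and accept its output only if it passes validation.

    Returns (label, accepted), where accepted is False if the fallback was used.
    """
    try:
        label = classify(text)
    except Exception:
        # Any model failure is treated the same way: use the fallback and report it.
        return fallback, False
    if isinstance(label, str) and label.strip().lower() in ALLOWED_LABELS:
        return label.strip().lower(), True
    return fallback, False


def fake_model(text):
    """Stand-in for a real model call; returns a sloppy but valid label."""
    return " Shipping "


print(guarded_classify("Where is my parcel?", fake_model))
```

The `accepted` flag is worth storing with the record: a rising share of fallbacks is an early sign that the model or the incoming data has changed.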

Common myths about ETL in AI

As AI technology becomes more integrated into our data workflows, it’s easy to get tangled in the hype. The conversation around AI and ETL is filled with bold predictions and a few misunderstandings. Separating fact from fiction is the first step toward building an effective AI strategy that actually delivers results. When you understand what AI can and can’t do for your ETL pipelines, you can set realistic goals and empower your team to use these new tools effectively.

The truth is, AI isn’t here to make ETL obsolete; it’s here to make it smarter. Instead of replacing core processes, AI enhances them by automating tedious tasks and providing intelligent suggestions. This shift changes the nature of ETL work itself. It allows data engineers to move away from manual, repetitive coding and focus on more complex, high-value challenges like architectural design and data governance. At the same time, it empowers business users with less technical backgrounds to handle some data tasks themselves, thanks to AI-driven, low-code solutions. This collaborative approach is where the real potential lies—using AI to augment human expertise, not erase it. By understanding this partnership, you can build more efficient, resilient, and intelligent data pipelines for your AI initiatives.

Debunking misconceptions and clarifying facts

Let's clear the air on a few common myths. First is the idea that AI will replace ETL developers. The reality is that AI acts as a powerful co-pilot. Developers are still very much needed to design complex workflows, handle unexpected data anomalies, and ensure the entire process aligns with business requirements. Another misconception is that AI in ETL is flawless. Because AI can be non-deterministic, strong validation and error-handling protocols are crucial for maintaining data quality. AI significantly improves ETL by automating tasks and monitoring pipelines, but it requires human oversight to guide it and ensure its outputs are accurate and reliable.

What's next for ETL pipelines in AI?

The evolution of ETL is all about making your data work smarter, not just harder. As AI becomes more integrated into data processes, we're seeing a shift from manual, rigid pipelines to more intelligent, automated systems. This change brings incredible opportunities for efficiency and insight, but it also introduces new considerations for your data strategy. Let's look at what you can expect and how you can prepare your team for what's ahead.

Future predictions and advancements

The future of ETL isn't about replacing the entire framework but making it more autonomous. We're moving toward a reality where AI can handle the heavy lifting of pipeline design. Imagine an AI that assesses incoming data and your project goals to automatically build the most efficient pipeline for the job. This shift toward more dynamic and real-time pipeline creation means your team can focus on strategy instead of setup. AI will also become a key player in monitoring, using its pattern-recognition skills for anomaly detection within your data and the ETL process itself. This helps you catch errors before they become major problems, ensuring your data stays clean and reliable from start to finish.

Prepare for upcoming challenges

As we get excited about these advancements, it’s also smart to prepare for the challenges they bring. AI can sometimes be non-deterministic, meaning it might not produce the exact same result every time. This requires building robust error-handling and validation checks to ensure consistency. The most critical challenge, however, remains data quality. The old saying "garbage in, garbage out" is more relevant than ever in AI data pipelines. If your source data is messy, your AI-driven insights will be, too. Preparing for the future means doubling down on data governance and implementing rigorous validation at every stage. Getting this right is key to unlocking the cost savings and efficiencies that a well-oiled, AI-enhanced ETL process can deliver.

The benefits of an AI-enhanced ETL process

Integrating AI into your ETL pipelines isn't just a technical upgrade; it's a strategic move that delivers tangible business advantages. By moving beyond traditional, manual methods, you can create a data infrastructure that is not only more efficient but also more intelligent and responsive. This allows your organization to make faster, smarter decisions while optimizing resource allocation. Let's look at two of the most significant benefits you can expect.

Improve cost efficiency with automation

Manual ETL processes are notorious for being slow and expensive. They often require a large team of data engineers to write and maintain complex code for every data source and transformation rule. This not only drains your budget but also ties up your most skilled technical talent in repetitive work. By introducing AI, you can automate many of these tedious tasks. AI-powered tools can intelligently map data fields, suggest transformations, and even generate the necessary code with minimal human oversight. This automation can drastically speed up the process and significantly reduce operational costs. It allows you to accomplish more with a smaller, more focused team, freeing your engineers to concentrate on strategic initiatives that drive real business value.

Get immediate insights with real-time processing

In business, timing is everything. Traditional batch-based ETL processes can leave you waiting hours or even days for updated data, meaning your decisions are always based on old information. An AI-enhanced, real-time pipeline changes this. Instead of waiting for the next scheduled batch, it transforms data as it arrives, and ML models can clean and score each record in the stream. This provides a continuous flow of up-to-date information, allowing you to make decisions based on what’s happening right now. Beyond speed, AI also improves the reliability of your pipeline. It can be trained to perform anomaly detection, automatically identifying and flagging unusual data patterns or process errors that might otherwise go unnoticed, ensuring your insights are both timely and trustworthy.

Frequently asked questions

What's the main difference between a regular ETL pipeline and one that's "AI-ready"?

The biggest difference is intelligence and adaptability. A traditional ETL pipeline is a workhorse that follows a fixed set of instructions you program yourself. An AI-ready pipeline, on the other hand, uses ML to automate and optimize the process. It can learn to identify data quality issues, suggest the best ways to structure information, and even adjust to changes in your data sources, making the entire system more efficient and less brittle.

Will AI eventually replace the need for data engineers in ETL?

Not at all. Think of AI as a powerful co-pilot, not a replacement for the pilot. AI is excellent at handling the repetitive, time-consuming tasks like data cleaning and mapping. This frees up your data engineers to focus on more strategic work, like designing the overall data architecture, solving complex integration challenges, and ensuring the final output aligns with your business goals. Their role simply shifts from manual coding to high-level oversight and problem-solving.

Where should I start if I want to introduce AI into my current ETL process?

A great place to begin is with data quality. You can apply ML models during the transformation stage to automatically spot and fix inconsistencies, standardize formats, or remove duplicate entries. This is a high-impact area that delivers immediate value. Another practical first step is to use AI to automate the data mapping process, which can save your team countless hours of manual work when integrating new data sources.

My data comes from many different systems. Can AI really simplify the integration process?

Yes, this is one of the areas where AI truly shines. Manually integrating data from different CRMs, databases, and applications is a major headache. AI can act as an intelligent assistant by automatically suggesting how to map fields between different systems. It's also particularly good at extracting useful information from unstructured sources like text documents or customer feedback, which are notoriously difficult to handle with traditional methods.

Is it risky to let AI handle data transformations? How do I ensure the data is still accurate?

That's a very smart question. While AI is incredibly powerful, it shouldn't operate in a black box. The key is to implement strong validation checks and monitoring throughout your pipeline. This means you're not just trusting the AI's output blindly; you're creating rules and feedback loops to verify its accuracy and consistency. Human oversight is still critical for designing these safeguards and ensuring the final data is reliable, compliant, and ready for your models.

About Author

Cake Team
