How to Evaluate Baseten for AI Infrastructure

So, you're ready to move your AI from a cool side project to the core of your business. That's a huge step. But getting past the proof-of-concept stage is tricky. It all comes down to your foundation—a modern, scalable AI infrastructure stack built for serious workloads. You're probably weighing your options, trying to figure out the right AI infrastructure architecture. Maybe you're looking at specialists and trying to evaluate the software development applications company Baseten on AI infrastructure deployment. This guide will help you make sense of it all.

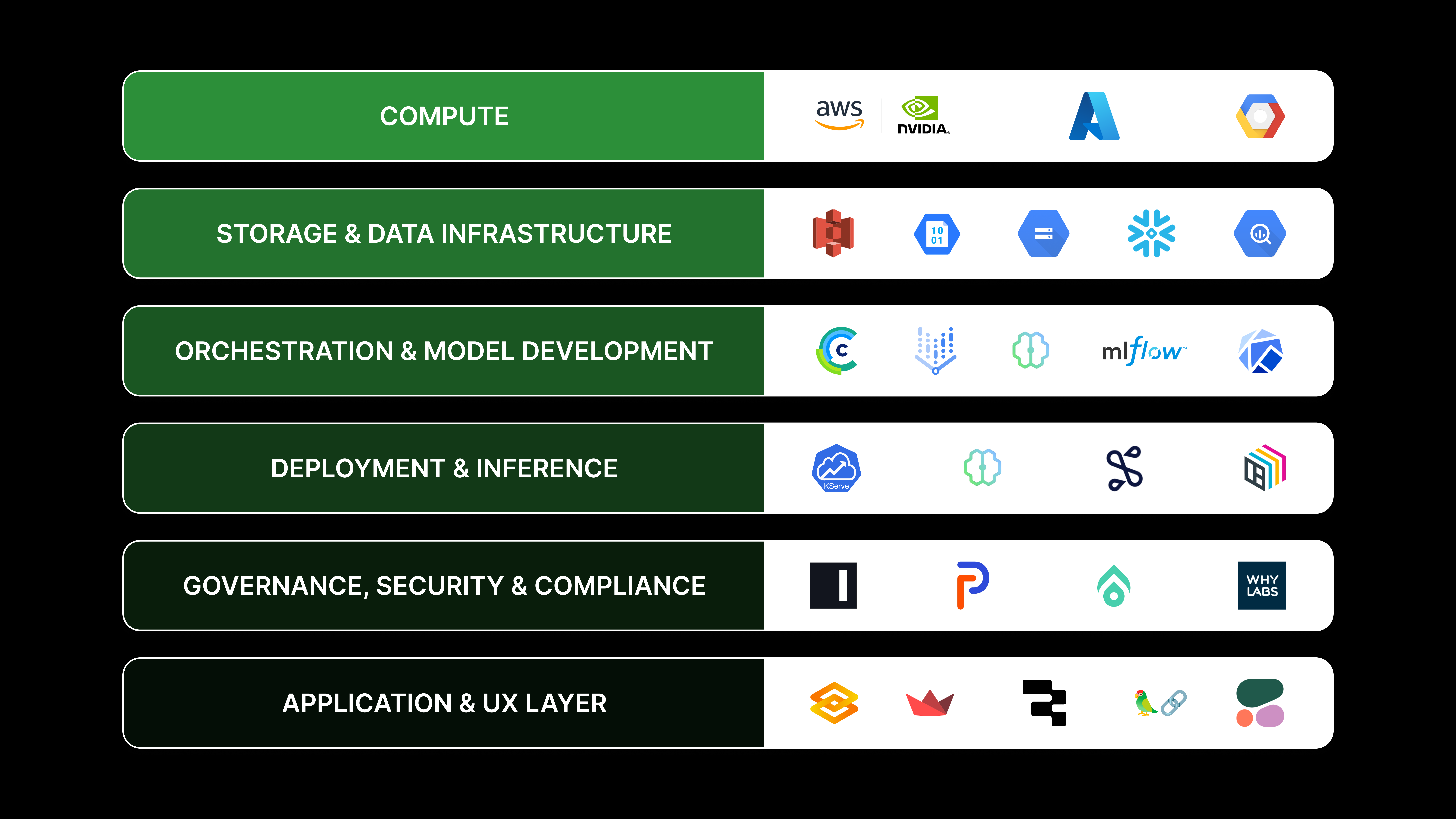

In this primer, we break down the layers of a modern AI infrastructure stack—from the bottom (compute) to the top (end-user experience)—and highlight key vendors and tools in each layer. Whether you're building from scratch or modernizing an existing pipeline, understanding this layered model helps you design for flexibility, scale, and speed.

Let's break down the compute layer

What it is: The physical and virtual hardware that powers model training and inference.

What matters: Performance, elasticity, cost, and availability of GPUs or other accelerators.

Key vendors:

AWS EC2 (with NVIDIA GPUs) – scalable cloud-based GPU compute

Azure NC-Series, ND-Series – optimized for AI workloads

GCP A3 & TPU v5e – cutting-edge AI accelerators

CoreWeave, Lambda Labs – specialized GPU cloud providers

NVIDIA DGX – on-prem enterprise GPU servers

Cake.ai – abstracts compute across clouds and vendors for flexibility and scale

Enterprises often adopt a hybrid or multi-cloud strategy, mixing on-demand cloud compute with reserved capacity or colocation for cost control. Cake.ai simplifies this by offering a unified abstraction layer across compute environments.

- LEARN: AIOps, Powered by Cake

How Baseten handles compute

Baseten is another platform that tackles the compute challenge by managing the underlying infrastructure for you. Their goal is to abstract away the complexities of hardware so your team can focus entirely on building and shipping AI models. This approach is becoming a standard for modern AI platforms because it directly solves the operational headaches that come with running AI workloads at scale. Let's look at how they specifically manage their hardware and optimize for performance.

Dedicated hardware and auto-scaling

One of the biggest hurdles in the compute layer is efficiently matching resources to demand. Baseten addresses this by providing the necessary hardware and building in auto-scaling. This means the platform automatically adjusts compute power based on your application's traffic, ensuring you have the resources for peak moments without overpaying during quiet periods. This elasticity allows your team to focus on creating AI-powered features instead of becoming infrastructure experts. The platform also supports the development lifecycle by managing model training jobs, saving progress with checkpoints, and organizing logs for easier debugging.

Performance and optimization

Performance is non-negotiable for AI applications, especially those that interact directly with users. Baseten’s infrastructure is designed for high availability, with a 99.99% uptime that ensures reliability. They’ve demonstrated impressive results, helping one customer achieve speech-to-text transcription in under 300 milliseconds. For more complex, multi-step AI workflows, their Baseten Chains product improves GPU utilization by six times while cutting latency in half. This deep focus on optimization ensures that the models you deliver are not just powerful, but also consistently fast and responsive for your end-users.

Getting your storage and data infrastructure right

What it is: Where datasets, model checkpoints, and training logs live. Plus the pipelines that move data through the system.

What matters: Throughput, latency, scalability, and governance.

Key vendors:

Amazon S3 / Azure Blob / GCS – standard object storage

Snowflake / BigQuery / Databricks Lakehouse – analytic data platforms

Delta Lake / Apache Iceberg / Hudi – data lake table formats

Fivetran, Airbyte – data ingestion pipelines

Apache Kafka / Confluent – real-time event streaming

Storage is often decoupled from compute and accessed via high-throughput networks. AI workloads benefit from structured data lakes and high-bandwidth file systems, particularly during training.

Making sense of orchestration and model development

What it is: The layer where models are trained, experiments are tracked, and pipelines are orchestrated.

What matters: Experimentation speed, reproducibility, scalability, and developer experience.

Key vendors:

Cake.ai – full-stack orchestration with built-in compliance and multi-cloud support

Kubeflow / Metaflow / MLflow – open-source MLOps frameworks

SageMaker / Vertex AI / Azure ML – managed orchestration platforms

Weights & Biases / Comet – experiment tracking and collaboration

This is where the modeling magic happens. Teams iterate on data preprocessing, model architectures, hyperparameters, and evaluation. Enterprises that standardize this layer reduce operational friction and make onboarding new team members faster.

- BLOG: A Guide to LLMOps

The developer experience on Baseten

While some platforms like Cake manage the entire AI stack from the ground up, other tools focus on specific parts of the developer workflow. Baseten is one such platform, designed to simplify the process of turning a trained model into a production-ready application. It offers a structured environment that helps developers package, deploy, and scale their models with a clear, step-by-step process. This focus on the developer experience makes it a popular choice for teams who want to get their models into production quickly without managing all the underlying infrastructure themselves. Let's look at a few key features that define its workflow.

Packaging models with Truss

Getting a model from your laptop to a live server can be tricky. Baseten addresses this with an open-source tool called Truss. Think of Truss as a standardized shipping container for your AI model. It lets you package your model's code, weights, and any other dependencies into a single, portable format. This makes the deployment process predictable and repeatable, which is exactly what you want when you're building reliable applications. Because Truss is open-source, you aren't locked into Baseten's platform; you can use it to package models for deployment anywhere, giving your team flexibility.

Streamlining workflows with Chains

Modern AI applications often involve more than just a single model. You might need to pre-process data, run it through one model, use that output as the input for another model, and then format the final result. Baseten simplifies these complex sequences with a feature called Chains. It allows you to link multiple models and custom Python code together into a single, cohesive workflow that can be called with one API request. This is incredibly useful for building sophisticated features, like an image analysis pipeline that identifies objects and then generates descriptive text, without having to write complex orchestration code from scratch.

From development to production

Safely testing and deploying new model versions is critical for any serious application. Baseten provides a standard software development lifecycle with separate environments for development, staging, and production. You can build and test your model in a dedicated development environment without affecting live users. Once you're confident it's working correctly, you can promote it to a staging environment for final checks. The last step is promoting it to production, where it will begin handling real traffic. This structured promotion process helps prevent bugs and ensures that your users always have a stable, reliable experience with your AI features.

Training new models

Beyond just deploying models, Baseten also provides the infrastructure needed to train them. You can define your training code, specify the software environment it needs to run in, and select the amount of computing power required for the job. Baseten then provisions the necessary systems and runs your training process. This abstracts away the complexity of managing GPUs and other hardware, letting your team focus on building and improving the models themselves. It’s a key piece of the MLOps puzzle, which platforms like Cake solve by integrating compute management into a unified, full-stack solution that covers the entire AI lifecycle.

What you need for successful AI infrastructure deployment

What it is: Where trained models are operationalized as real-time services or batch processing jobs.

What matters: Latency, throughput, versioning, and observability.

Key vendors:

Cake.ai – scalable, portable, and secure model deployment across environments

Seldon / BentoML / KServe – open-source model serving frameworks

AWS SageMaker Endpoints / Vertex AI Prediction – managed model hosting

OctoML / Modal / Baseten – inference optimization and deployment platforms

Enterprises need inference to be fast, reliable, and cost-efficient—whether for real-time applications or asynchronous batch jobs. Fine-grained traffic routing and rollback support are also key at this layer.

Baseten's approach to deployment and inference

Baseten is another key player specializing in the deployment and inference layer, focused on helping teams get their models into production. The platform is built for speed and reliability, making AI models accessible and performant enough for real-world applications. At its core, Baseten's approach is about providing teams with ready-to-use APIs and flexible deployment configurations that can adapt to different business needs and technical requirements. This allows data science and engineering teams to move faster from a trained model to a live, scalable service that users can interact with, without having to build the serving layer from the ground up.

Ready-to-use model APIs

At its core, Baseten helps businesses put their AI models into action. The platform is engineered to transform your trained models into high-performance, reliable APIs that can be easily integrated into your applications. The main goal is to simplify the process of operationalizing AI, so you can focus on building your product instead of managing complex serving infrastructure. This is particularly useful for teams that need to move quickly and require a stable, scalable endpoint for their models without getting bogged down in the underlying setup.

Flexible deployment options

Flexibility is a key part of Baseten’s offering. You have the option to run models on their managed cloud or host the platform in your own cloud environment for more control. Baseten is also specifically designed to handle the demanding workloads of modern generative AI applications, providing specialized tools and optimizations. You can configure how you get predictions, whether you need them in real-time, processed in batches, or delivered as a continuous stream. This allows you to fine-tune your setup for speed, high request volumes, or cost efficiency, depending on what your project requires.

Don't forget about governance, security, and compliance

What it is: Controls that ensure models are safe, compliant, and aligned with enterprise policy.

What matters: Access control, auditability, data protection, and model transparency.

Key vendors:

Cake.ai – platform-level policy enforcement, RBAC, and audit trails

Immuta / Privacera – data access governance

Aporia / WhyLabs / Arize AI – model monitoring and drift detection

Truera / Fiddler – explainability and fairness

AWS IAM / Azure AD / Vault – access and secrets management

As models impact customer decisions, compliance becomes a first-class requirement. Enterprises are expected to maintain records of data lineage, explain model decisions, and control who can access what at every level of the stack.

Key platform features for teams

Beyond the technical layers, the right AI platform should also equip your team with the tools they need to work together effectively and securely. When you’re evaluating different solutions, it’s easy to get lost in compute specs and model serving latency. But the features that enable smooth collaboration, provide clear insights, and offer expert guidance are often what make or break a project. A platform that prioritizes the developer and operator experience helps your team move faster, build more reliable systems, and ultimately drive better business outcomes. Let's look at a few critical features that every enterprise team should look for.

Observability and real-time monitoring

Once a model is deployed, your work is far from over. You need a clear view of how it’s performing in the real world. True observability goes beyond simple uptime monitoring; it means having access to real-time data, logs, and detailed traces for every request. A strong platform allows you to see exactly what’s happening with your model, including the inputs it receives, the outputs it generates, and any errors that occur along the way. This level of detail is essential for debugging issues, identifying performance bottlenecks, and detecting model drift before it impacts users. The ability to export this telemetry to other monitoring tools is also key for integrating AI workloads into your existing operational dashboards.

Collaboration and security controls

AI development is a team sport, bringing together data scientists, ML engineers, and application developers. Your infrastructure platform should be a central hub that supports this collaboration without compromising security. Look for a solution with an intuitive design that works with the tools your team already uses, like TensorFlow and PyTorch. At the same time, it must provide robust security measures. Features like role-based access control (RBAC) and encrypted storage are non-negotiable. These controls ensure that team members can work together efficiently while only accessing the data and models they are authorized to use, protecting sensitive information and maintaining a clear audit trail.

Hands-on expert support

Even the most skilled teams run into challenges that a documentation page can’t solve. This is where expert support becomes a game-changer. When evaluating platforms, consider the level of hands-on assistance available. The best partners offer more than just a support ticket system; they provide access to dedicated experts who can help guide your team from the initial setup to full production deployment. This kind of partnership is invaluable for navigating complex architectural decisions, troubleshooting tough problems, and ensuring you’re getting the most out of the platform. A solution like Cake.ai, which manages the entire stack, is designed to provide this comprehensive support, helping you accelerate your initiatives with confidence.

The final piece: your application and UX layer

What it is: The user-facing surface where AI meets real-world tasks—via apps, dashboards, APIs, or embedded experiences.

What matters: Usability, responsiveness, integration, and safety.

Key vendors:

Streamlit / Gradio / Dash – quick UI layers for models

Retool / Appsmith – internal AI-powered tools

LangChain / LlamaIndex / RAG frameworks – powering GenAI apps with enterprise data

OpenAI / Anthropic / Cohere / Mistral APIs – plug-and-play LLMs

Cake.ai – unified runtime to deploy and iterate on GenAI experiences securely

This layer is where value is realized. Whether through a chatbot, a decision support dashboard, or a personalized customer interface, delivering AI to end users in a reliable, interpretable way is where impact happens.

Building generative AI applications

So, how do all these layers come together to create a real-world generative AI application? It all starts with a solid foundation. You need the right compute power—like high-performance GPUs—and a well-organized data infrastructure to properly feed your models. This is the bedrock of your entire project. From there, the orchestration layer is where your team brings the model to life, managing everything from training experiments to complex data pipelines. This can be the most challenging part, which is why a comprehensive platform like Cake.ai that manages the entire stack is such a game-changer. Once your model is trained, you need to deploy it efficiently for inference, making sure it’s fast and reliable for your users. Finally, it all culminates in the user experience layer, where your AI delivers tangible value through an intuitive interface, whether that’s a chatbot, a dashboard, or an API.

Putting your AI infrastructure stack together

Enterprise AI infrastructure is no longer just a few GPUs and a model. It’s a full-stack system—from compute and storage to governance and UX—that must scale, comply, and evolve as fast as your business.

Teams that get this stack right don’t just build smarter models—they build durable, enterprise-grade systems that can evolve with new data, new regulations, and new opportunities.

Cake helps you unify and abstract this stack across environments—so your team can move fast without sacrificing compliance, portability, or performance. Whether you're training foundation models, deploying LLM apps, or running sensitive inference workloads, Cake is the infrastructure layer that meets you where you are and helps you scale where you're going.

Where Baseten fits in the market

Among the specialized platforms for deployment and inference, Baseten has carved out a niche for itself by focusing on developer experience and speed. It’s designed for teams who want to get their models into production quickly without wrestling with the complexities of underlying infrastructure. Baseten aims to be the bridge between a trained model and a live, scalable API endpoint. For companies that have their model development and data pipelines figured out but need a reliable and straightforward way to serve those models, Baseten presents a compelling option. It’s particularly well-suited for teams that prioritize ease of use and want to offload the operational burden of model deployment.

Ease of use compared to competitors

When you look at the AI platform landscape, you’ll find a spectrum of complexity. On one end, you have comprehensive but often intricate platforms like AWS SageMaker, which offer a vast toolkit but can come with a steep learning curve. Baseten positions itself as a more accessible alternative. It’s often cited as being easier to learn and use, allowing developers to deploy models with less initial setup and configuration. This focus on simplicity means teams can move from a Jupyter notebook to a production-ready API faster. The trade-off is that you might not get every single feature offered by the larger cloud suites, but for many use cases, Baseten’s streamlined workflow is exactly what’s needed to accelerate development.

Pricing and target audience

Baseten’s approach to pricing also makes it an attractive choice for startups and smaller businesses. With a flexible and transparent pricing plan, companies can get started without committing to a massive upfront investment or navigating the often-confusing billing structures of major cloud providers. This predictability is a huge advantage for teams with tight budgets who need to manage their burn rate carefully. By targeting companies that are scaling their AI capabilities, Baseten provides an accessible on-ramp to production-grade model serving. It allows them to pay for the resources they actually use, making it a cost-effective solution for proving out AI-powered products before scaling to massive enterprise-level traffic.

Choosing the right solution for your stack

Deciding on the right tools for your AI stack is a balancing act. A specialized platform like Baseten can be a perfect fit if your primary bottleneck is model deployment. However, it’s important to look at your entire workflow. Do you also need a unified solution for data management, experiment tracking, and multi-cloud compute orchestration? The best choice depends on your team’s existing expertise, your long-term scalability plans, and your governance requirements. For some, piecing together best-in-class tools for each layer of the stack is the right approach. For others, a more integrated platform that manages the entire lifecycle is more efficient and secure.

The value of a fully managed AI platform

The core promise of a fully managed platform is simple: let your data scientists and engineers focus on building great AI products, not on managing servers. By handling the complexities of autoscaling, dependency management, and hardware optimization, these platforms abstract away the tedious and time-consuming parts of MLOps. This allows teams to iterate faster and deliver value to the business more quickly. While Baseten excels at managing the deployment and inference layer, a comprehensive solution like Cake extends this principle to the entire AI stack. We manage everything from the compute infrastructure and open-source tooling to security and compliance, providing a single, production-ready platform that accelerates your entire AI initiative.

Frequently Asked Questions

What’s the real difference between a platform like Cake.ai and a tool like Baseten? Think of it like building a house. A specialized tool like Baseten is like hiring an expert contractor who is fantastic at framing and drywall—they excel at a specific, crucial part of the process, in this case, deploying models and creating a great developer workflow. A full-stack platform like Cake.ai is more like a design-build firm that manages the entire project from laying the foundation to handing you the keys. We handle every layer, from the compute and data pipelines to security and compliance, ensuring all the pieces work together seamlessly. Your choice depends on whether you need an expert for one part of the job or a partner to manage the whole build.

Do I need to build a custom stack, or can I just use a major cloud provider's all-in-one AI service? Using a single cloud provider's suite, like AWS SageMaker or Vertex AI, can certainly get you started. However, many teams find they quickly run into limitations or get locked into a specific ecosystem. This can make it difficult to use the best tools for a particular job or control costs effectively across different cloud environments. A platform like Cake.ai gives you the benefits of a managed solution while abstracting away the underlying providers. This gives you the flexibility to run workloads where it makes the most sense without being tied to a single vendor's roadmap.

My team is just getting started with production AI. Isn't a full infrastructure stack overkill? It's a fair question, but thinking about the full stack from the beginning is about setting a strong foundation, not over-engineering. You don't have to implement every component at once, but understanding how the layers connect helps you avoid choices that will box you in later. Starting with a platform that can scale with you means you won't have to re-architect everything when your proof-of-concept suddenly becomes a business-critical application. It’s about planning for success from day one.

Why is so much emphasis placed on the governance and security layer? In the early days of a project, it's easy to focus only on getting the model to work. But as soon as your AI starts making real decisions or handling sensitive data, governance and security become non-negotiable. This layer is what ensures you can track who did what, protect customer data, and prove to regulators that your models are fair and compliant. Building these controls in from the start saves you from massive headaches and potential crises down the road. It’s the professional-grade part of the stack that turns a cool project into a trustworthy enterprise system.

What's the most common mistake teams make when building their AI infrastructure? The most common mistake is underestimating the operational complexity of running AI in production. It’s one thing to train a model in a notebook, but it’s another thing entirely to serve it reliably to thousands of users, monitor its performance for drift, manage the underlying hardware, and keep everything secure. Teams often focus so much on the model itself that they neglect the infrastructure needed to support it. This leads to brittle, unscalable systems that are a nightmare to maintain.

Key Takeaways

- View your AI infrastructure as a full stack: A successful AI system isn't just a model; it's a series of connected layers, including compute, data, orchestration, and deployment. Building for scale means planning for how each of these layers will work together.

- Decide between an integrated platform and specialized tools: You can either adopt a unified platform that manages the entire AI lifecycle or assemble your stack with different tools for each layer. The right choice depends on whether your team needs end-to-end efficiency or granular control over specific components.

- Focus on your primary bottleneck: Pinpoint the biggest challenge in your workflow. If getting models into production is your main hurdle, a specialized deployment tool can help. If you face friction across the entire process, a fully managed platform provides a more comprehensive solution.

Related Articles

About Author

Cake Team

More articles from Cake Team

Related Post

How to Choose the Right Anomaly Detection Software

Cake Team

Top Open Source Observability Tools: Your Guide

Cake Team

AIOps Tools: A Complete Guide for Modern Teams

Cake Team

7 Best Open-Source AI Governance Tools Reviewed

Cake Team