Custom AIPC Builds for Healthcare IT: A Blueprint

You see the potential for AI in your organization, but the path from a great idea to a working tool feels overwhelming. Generic AI solutions often miss the mark, failing to understand the unique needs of your clinicians and patients. This guide provides the blueprint for creating customizable AIPC builds for healthcare IT that solve real-world problems. We'll walk you through the actionable steps, from pinpointing a specific challenge to gathering quality data and assembling the right team. You'll learn exactly how to build a custom AI solution for healthcare that truly works.

Key Takeaways

- Define your problem before you touch any technology: The most successful AI projects solve a specific, real-world challenge. Get clear on the exact problem you want to fix for your clinicians or patients before you consider which tools to use.

- Data quality and compliance are non-negotiable: Your AI solution will only be as trustworthy as the data it’s trained on. Prioritize gathering high-quality, representative data and build your entire process around strict compliance with regulations like HIPAA to protect patient privacy.

- Plan for the entire lifecycle, not just the launch: Building a great AI tool is a team sport that requires ongoing effort. Assemble a cross-functional team and plan for continuous monitoring, testing, and refinement to ensure your solution remains accurate, fair, and effective long-term.

Why your practice needs a custom AI solution

Healthcare organizations are under constant pressure, juggling growing patient loads, complex administrative tasks, and a flood of data. While general AI tools are becoming more common, healthcare has unique, high-stakes needs that a one-size-fits-all solution often can't meet. Your workflows, patient data, and compliance requirements are specific to your organization, and your technology should be too. This is where a custom approach makes a significant difference.

Instead of trying to fit a square peg into a round hole, a custom AI solution is built from the ground up to address your specific challenges. Imagine an AI agent designed to perfectly integrate with your existing electronic health record (EHR) system to streamline patient intake or one that automates billing codes based on your clinic’s specific services. By tailoring the technology to your team's daily reality, you can reduce the administrative burden and free up your skilled professionals to focus on what they do best: providing excellent patient care.

Ultimately, the goal is to improve patient outcomes. Custom AI can help you get there by enhancing diagnostic accuracy, personalizing treatment plans, and making your operations more efficient. While building a custom AI solution is a complex process that requires careful planning and the right expertise, the investment pays off. You get a tool that works for you, not the other way around, creating a more effective and sustainable system for both your staff and the patients you serve.

Custom AI is a business asset you own

When you choose a custom AI solution, you're not just renting a tool; you're building a valuable company asset. Unlike off-the-shelf software, a custom-built tool is designed to integrate perfectly with your existing systems and workflows. It’s tailored to your specific needs and can scale as your organization grows. Most importantly, you own it. This ownership gives you complete control over the technology's future, allowing you to adapt it as regulations change or new opportunities arise. It's a long-term investment that becomes more valuable over time, rather than a recurring subscription fee for a service you can't control or modify to fit your unique operational challenges.

Proven efficiency gains and market growth

The benefits of custom AI in healthcare aren't just theoretical—they're backed by real-world results. For example, some organizations have successfully cut administrative costs by 40% by deploying custom AI agents for tasks like scheduling and patient management. These tools can also dramatically improve accuracy, with AI-powered transcription and summarization achieving 98% accuracy in medical records. For clinicians, the impact is profound. By automating routine coordination tasks, doctors have been able to save up to two hours per day, freeing them to focus more on patient care and reducing the risk of burnout. These efficiency gains translate directly into better care and a stronger financial footing for your practice.

The sheer scale of health data demands AI

Modern healthcare generates an incredible amount of data from countless sources, including electronic health records, wearable devices, medical imaging, and even social media. It's simply too much information for any human team to analyze effectively. This is where AI becomes essential. It has the unique ability to process these massive datasets, identify subtle patterns, and extract actionable insights that would otherwise remain hidden. This capability is the engine behind the shift toward precision medicine, which promises to revolutionize healthcare by tailoring treatments to individual patients. By leveraging AI, you can turn your organization's data from an overwhelming challenge into a powerful tool for personalized care.

Understanding the power of AI in healthcare

Before we get into the "how," it's important to understand what AI really means in a clinical context. It’s not about futuristic robots taking over hospitals. Instead, it's about using advanced computing to find patterns and insights in complex health data that would be impossible for humans to spot on their own. This technology acts as a powerful assistant, helping medical professionals work more efficiently and make more informed decisions. The core idea is to enhance human expertise, not replace it, leading to better care, streamlined operations, and groundbreaking medical advancements that were once out of reach.

Augmented intelligence: Helping, not replacing, doctors

One of the biggest misconceptions about AI in healthcare is that it’s here to replace clinicians. The reality is far more collaborative. The goal is to achieve augmented intelligence, where AI acts as a partner to medical professionals. Think of it as a highly skilled analyst that can process millions of data points—from patient records and lab results to medical journals—in seconds. This allows doctors to see a more complete picture, validate their diagnoses, and consider treatment options they might not have otherwise, all while dedicating their time and empathy to direct patient care.

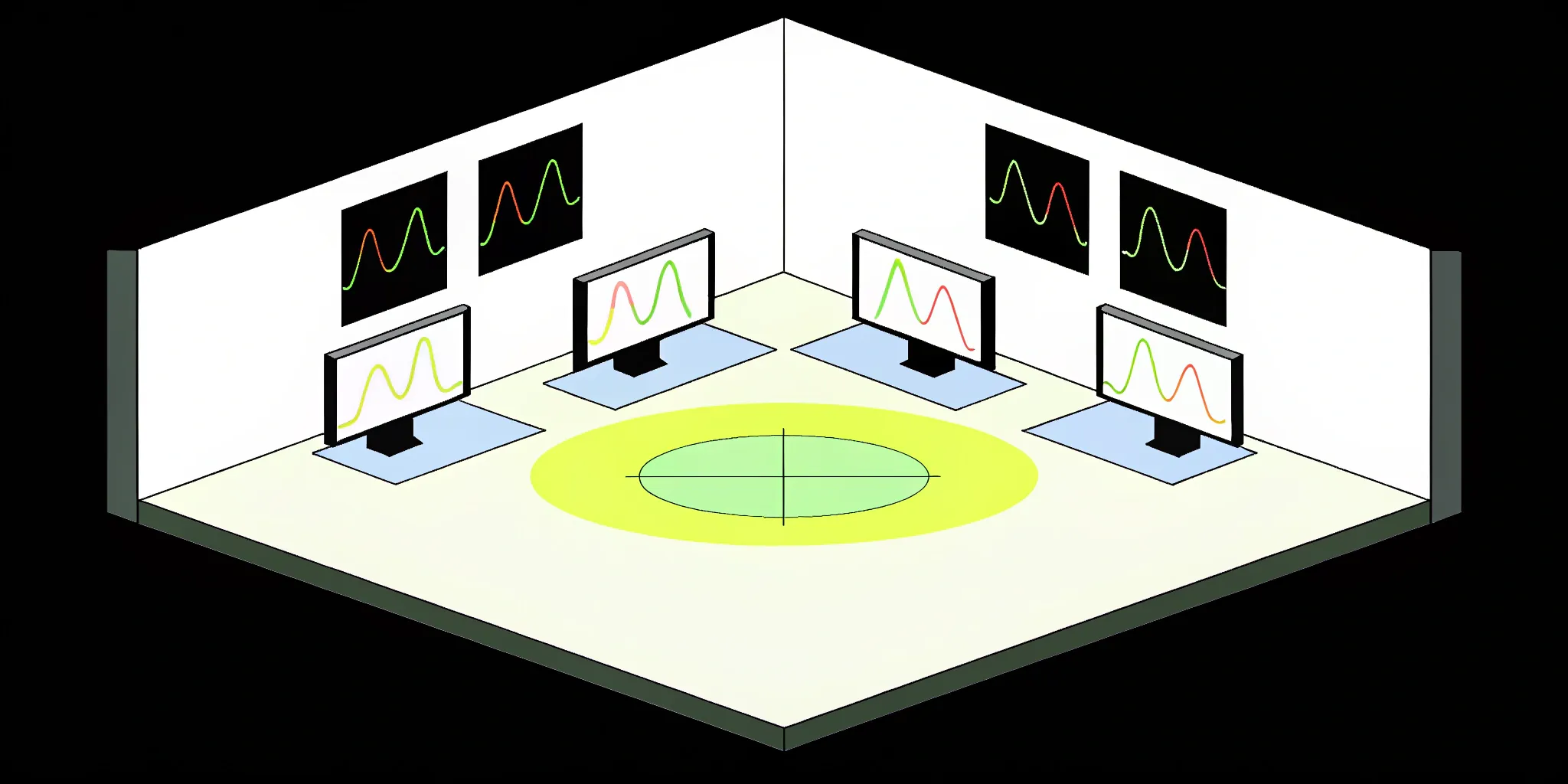

Agentic AI: Moving beyond simple commands

As AI technology matures, we're moving beyond simple tools that just follow a set of pre-programmed rules. The next frontier is Agentic AI, a more advanced system designed to understand high-level goals, create a plan, and take action across different applications to achieve them. For example, instead of just flagging an abnormal lab result, an AI agent could flag the result, check the patient’s calendar, schedule a follow-up appointment with the right specialist, and send a notification to the primary care physician. This proactive capability, which often relies on a well-managed AI stack to connect disparate systems, is what allows AI to truly streamline complex clinical workflows.

Precision medicine: Tailoring care with data

We all respond to treatments differently based on our genetics, lifestyle, and environment. Precision medicine aims to tailor healthcare to the individual, but it relies on analyzing enormous and complex datasets. This is where AI becomes essential. By processing genomic data, electronic health records, and real-world evidence, AI algorithms can help identify which treatments are most likely to be effective for a specific person. This moves us away from a one-size-fits-all approach and toward truly personalized care plans that can lead to significantly better patient outcomes.

Common use cases for custom AI in healthcare

The true value of AI comes to life when you see it in action. Across the healthcare landscape, custom AI solutions are already tackling some of the industry's most pressing challenges. From accelerating the search for new cures to making diagnostics more accurate, these tools are creating tangible improvements for both patients and providers. These applications demonstrate how tailored AI can solve specific, high-impact problems, turning data into a powerful asset for improving health and wellness on a global scale. Here are a few of the most common and effective use cases.

Speeding up medical research and drug discovery

Developing a new drug is an incredibly long and expensive process, but AI is changing the timeline. By analyzing vast biological and chemical datasets, AI can identify promising compounds for new drugs and predict their potential effectiveness, dramatically shortening the initial research phase. Furthermore, AI helps optimize clinical trials by identifying the most suitable patient candidates from large populations, ensuring that studies are more efficient and more likely to yield clear results. This acceleration helps get life-saving treatments to the people who need them faster.

Improving public health with predictive analytics

Beyond individual care, AI is a powerful tool for managing the health of entire communities. By analyzing public health data, electronic health records, and even environmental factors, predictive analytics can identify populations at high risk for certain diseases, forecast the spread of infectious outbreaks like the flu, and predict hospital readmission rates. This allows public health officials and hospitals to allocate resources more effectively, launch targeted prevention campaigns, and intervene before health issues become widespread crises, creating a more proactive and resilient healthcare system.

Powering advanced diagnostics

One of the most successful applications of AI in healthcare is in medical imaging analysis. AI models can be trained on thousands of X-rays, CT scans, and MRIs to recognize subtle signs of disease—like cancerous tumors or early indicators of diabetic retinopathy—that the human eye might miss. This doesn't replace the radiologist; it provides them with a second set of eyes, helping them prioritize urgent cases and make diagnoses with greater speed and accuracy. Earlier and more precise detection ultimately leads to better treatment outcomes for patients.

Using virtual assistants and AI-assisted surgery

AI is also transforming how patients and surgeons interact with the healthcare system. AI-powered virtual assistants and chatbots can handle routine administrative tasks like scheduling appointments, answering frequently asked questions, and providing medication reminders, freeing up staff time for more complex patient needs. In the operating room, AI-assisted robotic surgery allows surgeons to perform complex procedures with enhanced precision, control, and flexibility. These robotic systems can lead to smaller incisions, reduced blood loss, and faster recovery times for patients.

Monitoring personal wellness with wearable tech

The rise of smartwatches and other wearable devices has created a constant stream of personal health data. AI is the key to turning that raw data into actionable insights. By analyzing metrics like heart rate, sleep patterns, and activity levels, AI algorithms can detect early warning signs of chronic conditions such as atrial fibrillation or diabetes. This enables continuous, real-time health monitoring outside of the clinic, empowering individuals to take a more proactive role in managing their own wellness and allowing clinicians to intervene sooner when potential issues arise.

What do you want your healthcare AI to achieve?

Before you write a single line of code or even think about what technology to use, you need to get crystal clear on what you're trying to accomplish. This might sound obvious, but it’s the step where many ambitious AI projects go wrong. They start with a cool technology instead of a concrete problem. Building an effective AI solution begins with a deep understanding of the challenge you want to solve, because many AI projects fail when they don't align with real-world needs.

So, what does this look like in practice? It means sitting down with your team and defining the specific, measurable goals for your AI. Think less about "building an AI" and more about "solving a problem." Focus on tasks that are currently difficult, time-consuming, or require significant human effort. For example, are you trying to reduce the time clinicians spend on administrative paperwork? Or maybe you want to help radiologists detect anomalies in medical scans more quickly.

To get to the heart of your project, ask these key questions:

- What specific job will the AI perform? Will it summarize patient notes, answer billing questions, or predict patient readmission risks?

- What information will it need? Will it process text from electronic health records, analyze images like X-rays, or use numerical data from lab results?

- How will we know it’s working? What are the key performance indicators (KPIs)? This could be anything from a 20% reduction in administrative tasks to a 15% improvement in diagnostic accuracy.
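One way to keep a KPI honest is to write down exactly how it will be calculated before the project starts. The sketch below is a minimal illustration of that idea: the function names and the minute figures are hypothetical, and it assumes the target is a relative reduction measured against a baseline.

```python
def relative_change(baseline: float, current: float) -> float:
    """Return the change from baseline to current as a fraction of baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline


def kpi_met(baseline: float, current: float, target_reduction: float) -> bool:
    """True if the metric dropped by at least target_reduction (e.g. 0.20 for 20%)."""
    return relative_change(baseline, current) <= -target_reduction


# Hypothetical figures: minutes of paperwork per clinician per day,
# measured before and after a pilot.
baseline_minutes = 50.0
pilot_minutes = 38.0

change = relative_change(baseline_minutes, pilot_minutes)
print(f"Change in paperwork time: {change:.0%}")
print("20% reduction target met:", kpi_met(baseline_minutes, pilot_minutes, 0.20))
```

Agreeing on the baseline period and the formula up front avoids arguments later about whether a "20% reduction" was relative or absolute.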

Clearly defining these objectives is the foundation of your entire project. It sets the direction for everything that follows, from the data you'll need to gather to the AI technologies you'll ultimately choose. With a solid plan in place, you can move forward confidently, knowing that you're building a solution that will make a tangible difference in your healthcare setting.

How to build a custom AI solution for your healthcare needs

Building a custom AI solution can feel like a massive undertaking, but it becomes much more manageable when you break it down into a clear, step-by-step process. A structured approach helps you stay focused on the end goal and avoid common pitfalls, like building a solution that doesn’t solve a real-world problem. Think of this as your roadmap from initial idea to a functional, effective tool that can make a real difference for clinicians and patients.

Each stage builds on the last, creating a strong foundation for a successful project. It all starts with a deep understanding of the problem you want to solve and the data you have to work with. From there, you move into the more technical phases of choosing your tools, training your model, and testing its performance. While the technical details can get complex, having a partner or a comprehensive platform can streamline the entire journey. A managed solution like Cake handles the underlying infrastructure and provides production-ready components, letting your team focus on the development steps that matter most.

1. Pinpoint the problem you want to solve

Before you write a single line of code, you need to know exactly what you’re trying to achieve. An effective AI solution starts with a clearly defined problem, not a piece of technology. Many AI projects stumble because they are disconnected from the actual needs of the people they’re meant to help. Talk to clinicians, administrators, and even patients to understand their biggest pain points. Are you trying to reduce diagnostic errors, automate tedious administrative tasks, or predict patient readmission rates? Get specific. A focused goal, like "reducing appointment no-shows by 15%," is much more actionable than a vague one like "improving efficiency." This initial problem discovery phase is your most important one.

2. Gather and prepare high-quality data

High-quality data is the lifeblood of any AI model. Your solution will only be as good as the data you train it on. This stage involves gathering all the relevant information you need and then getting it ready for the model. Data preparation isn't glamorous, but it's essential. It involves cleaning the data: removing errors, filling in missing values, and getting rid of duplicate entries. You’ll also need to normalize it, which means structuring everything in a consistent format so the AI can process it effectively. Rushing this step is a recipe for an inaccurate and unreliable model, so invest the time to get your data quality right from the start.
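To make those cleaning steps concrete, here is a minimal sketch using only the Python standard library. The records, field names, and valid age range are invented for illustration. Filling gaps with the median is just one simple choice; the right imputation strategy for real clinical data should be reviewed with your clinicians.

```python
from statistics import median

# Synthetic example rows; no real patient data.
raw_records = [
    {"patient_id": "p1", "age": "54", "sex": "F", "hba1c": "6.1"},
    {"patient_id": "p1", "age": "54", "sex": "F", "hba1c": "6.1"},    # exact duplicate
    {"patient_id": "p2", "age": "47", "sex": "m", "hba1c": ""},       # missing lab value
    {"patient_id": "p3", "age": "-3", "sex": "Male", "hba1c": "7.4"},  # impossible age
    {"patient_id": "p4", "age": "63", "sex": "female", "hba1c": "5.6"},
]

# Map the spellings seen in the raw data onto one consistent code.
SEX_CODES = {"f": "F", "female": "F", "m": "M", "male": "M"}


def clean(records):
    """Deduplicate, drop impossible rows, normalize formats, fill missing labs."""
    seen = set()
    rows = []
    for rec in records:
        key = tuple(sorted(rec.items()))
        if key in seen:          # skip exact duplicates
            continue
        seen.add(key)
        age = int(rec["age"])
        if not 0 <= age <= 120:  # drop rows with impossible values
            continue
        rows.append({
            "patient_id": rec["patient_id"],
            "age": age,
            "sex": SEX_CODES[rec["sex"].strip().lower()],
            "hba1c": float(rec["hba1c"]) if rec["hba1c"] else None,
        })
    # Fill missing lab values with the median of the known ones.
    known = [r["hba1c"] for r in rows if r["hba1c"] is not None]
    fill_value = median(known)
    for r in rows:
        if r["hba1c"] is None:
            r["hba1c"] = fill_value
    return rows


cleaned = clean(raw_records)
for row in cleaned:
    print(row)
```

In a real project you would also log every row that was dropped or altered, so the cleaning decisions can be audited later.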

3. Choose the right AI technologies

With a clear problem and clean data, you can start thinking about the tools you’ll use to build your solution. The AI landscape is full of powerful open-source frameworks and libraries, and your choice will depend on the complexity of your project and the specific task at hand. Popular options include TensorFlow for large-scale models, PyTorch for its flexibility in research, and Scikit-learn for more traditional machine learning tasks. You don't need to be an expert in all of them, but it's important to select a technology stack that fits your team's skills and your project's goals. This is another area where a managed platform can help by providing pre-vetted, integrated tools that are ready for production.

4. Develop and train your AI model

This is where the magic happens. Training is the process of "teaching" your AI model to recognize patterns and make decisions based on the data you’ve prepared. You feed your cleaned data into the model, and it adjusts its internal parameters over and over again to improve its predictions and minimize errors. It’s an iterative process of feeding it information, letting it make a guess, correcting it, and repeating until its performance is strong. This phase requires significant computational power and expertise to fine-tune the model for the best results. The goal is to create a model that can generalize what it has learned to make accurate predictions on new, unseen data.
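The guess, correct, and repeat loop described above can be sketched in a few lines. This is a deliberately tiny illustration, not a production approach: it trains a one-feature logistic regression with plain gradient descent on synthetic numbers, and the feature (a standardized risk score) and label (readmitted or not) are assumptions made up for the example. In practice you would lean on a framework such as Scikit-learn, PyTorch, or TensorFlow.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(features, labels, learning_rate=0.1, epochs=2000):
    """Fit weight and bias by repeatedly nudging them to reduce prediction error."""
    weight, bias = 0.0, 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(features, labels):
            error = sigmoid(weight * x + bias) - y  # the guess minus the truth
            grad_w += error * x
            grad_b += error
        # The correction step: move parameters against the average gradient.
        weight -= learning_rate * grad_w / n
        bias -= learning_rate * grad_b / n
    return weight, bias


def predict(x: float, weight: float, bias: float) -> float:
    """Return the model's estimated probability for input x."""
    return sigmoid(weight * x + bias)


# Synthetic data: x is a standardized risk score, y is 1 if the patient was readmitted.
xs = [-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = train(xs, ys)
print("Estimated risk at score 1.0:", round(predict(1.0, w, b), 3))
```

Each pass through the loop is one round of "make a guess, measure the error, adjust." Real models have millions of parameters instead of two, which is where the computational cost comes from.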

5. Test, validate, and iterate

Once your model is trained, you need to see how well it performs in the real world. This means testing it on a fresh set of data it has never seen before. This step is crucial for ensuring your model is not just memorizing the training data but has actually learned the underlying patterns. You’ll measure its performance using key metrics like accuracy, sensitivity, and specificity to see how often it gets things right and which kinds of mistakes it makes. But testing isn't a one-time event. It’s a continuous cycle. Based on the results, you’ll likely go back to refine your data, adjust your model, and retrain it. This iterative loop of model validation and improvement is what leads to a robust, reliable, and truly helpful healthcare AI solution.
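Accuracy on its own can be misleading when the condition you're looking for is rare, so it helps to report how many true cases the model catches (sensitivity) and how many healthy cases it correctly clears (specificity). Here is a minimal sketch of that evaluation on a held-out set. The labels and predictions are synthetic, and the function name is just for illustration.

```python
def evaluate(y_true, y_pred):
    """Return accuracy, sensitivity, and specificity for binary labels (1 = condition present)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        # None when the test set has no positive (or no negative) cases.
        "sensitivity": tp / (tp + fn) if (tp + fn) else None,
        "specificity": tn / (tn + fp) if (tn + fp) else None,
    }


# Synthetic held-out set: 2 of 10 patients have the condition, and the model misses one.
held_out_truth = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
held_out_preds = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

metrics = evaluate(held_out_truth, held_out_preds)
print(metrics)
```

In this example the model scores 90% accuracy while catching only half of the true cases, which is exactly the kind of gap a single headline number can hide.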

The importance of contextual understanding

Defining your problem is more than just setting a goal—it’s about deeply understanding the real-world environment where your AI will operate. This requires stepping out of the planning meetings and into the clinic to observe your team's actual day-to-day. Spend time with the people who will ultimately use this tool. Talk to clinicians about their biggest headaches, watch how administrators manage patient information, and learn the unwritten rules of their workflow. An AI solution that seems brilliant on a whiteboard can quickly become a burden if it disrupts an established process or adds friction to a busy schedule. By truly understanding the context of the problem, you can design a tool that integrates seamlessly into your team's routine, acting as a helpful partner rather than another piece of tech they have to wrestle with.

Understanding project timelines and the validation process

Building a custom AI solution isn't a linear path with a fixed end date; it's a cycle of continuous improvement. The timeline is heavily influenced by the validation process, which is far more than a final quality check. After you've trained your initial model, you'll test it against new data to see how it performs in real-world scenarios. This is where the real work begins. Based on those results, you'll almost certainly go back to refine your data or adjust the model, then train and test it all over again. This iterative loop is what transforms a promising model into a reliable, production-ready tool. In healthcare, this process is even more critical. You’re not just validating for accuracy—you’re ensuring every step of the process is secure and compliant, protecting sensitive patient information and building trust with both clinicians and patients.

How to handle sensitive healthcare data correctly

Data is the engine of any AI solution, but in healthcare, it’s also incredibly sensitive. You’re dealing with protected health information (PHI), which means every step of your data handling process is under a microscope. Getting this right isn’t just about building an effective model; it’s about protecting patients, maintaining trust, and meeting strict legal requirements. A single misstep can have serious consequences for both your project and the people it’s meant to serve.

Before you write a single line of code, you need a solid strategy for your data. This involves more than just finding a dataset. You need to think through what kind of data you need, how you’ll ensure its quality and privacy, and what security measures you’ll put in place to protect it. Building a robust data management plan from the outset will save you from major compliance headaches and technical debt down the road. It’s the foundation upon which your entire AI solution rests. While a platform like Cake can manage the complex infrastructure for you, your team is still responsible for the data itself.

What kind of data do you need?

Your AI model is a direct reflection of the data it learns from. That’s why gathering high-quality, accurate information is the most critical step in the entire process. If you feed your model incomplete or irrelevant data, you’ll get unreliable results. For a healthcare application, that’s a risk you can’t afford to take. Focus on sourcing data that is clean, well-structured, and directly related to the problem you’re trying to solve. This could include electronic health records (EHRs), medical imaging files, or genomic data. The data preparation phase—cleaning, labeling, and organizing—is often intensive, but it’s an investment that pays off in model accuracy and reliability.

Looking beyond clinical data to the whole person

While clinical data like lab results and medical scans are the foundation, they don't tell the whole story about a patient's health. To create truly personalized care, you need to look at the bigger picture, which includes everything from a person's genetic makeup and lifestyle choices to their environment and social factors. This is where AI really shines. It can process vast and varied datasets, combining structured clinical notes with unstructured information like patient-reported outcomes or even data from wearable devices. By analyzing all this information together, AI helps create highly tailored treatment plans that are proactive rather than reactive, moving healthcare toward a future of true precision medicine.

How to ensure your data is high-quality and compliant

In healthcare, poor data quality can lead to incorrect predictions and flawed medical decisions. Beyond quality, you have a legal and ethical duty to protect patient privacy. This means your project must adhere to strict regulations. In the United States, the primary regulation is the Health Insurance Portability and Accountability Act (HIPAA), while the European Union enforces the General Data Protection Regulation (GDPR). Following these rules isn’t just about checking a box; it’s a fundamental requirement for operating in the healthcare space. Building compliance into your workflow from the start is essential for protecting patient data, avoiding steep fines, and establishing your solution as trustworthy and credible.

Create your data management and security plan

A strong security framework is non-negotiable. Your data management plan should outline exactly how you’ll protect sensitive information at every stage. Start by ensuring all data is encrypted, both when it’s stored (at rest) and when it’s being transmitted (in transit). Implement strict access controls so that only authorized individuals can access patient data, and use detailed logs to track every action taken. Security isn’t a one-time setup, either. You need to continuously monitor your systems and maintain your AI model to ensure it remains secure and effective long after deployment. This ongoing vigilance is key to long-term success.
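As a rough illustration of access controls and audit logging working together, here is a minimal sketch using only the Python standard library. The roles, permission names, and in-memory log are hypothetical; a real system would rely on your identity provider, an append-only log store, and a vetted encryption library for data at rest and in transit, none of which is shown here. The keyed hash keeps raw patient IDs out of the log, but it should not be assumed to satisfy de-identification requirements on its own.

```python
import hashlib
import hmac
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping for illustration only.
ROLE_PERMISSIONS = {
    "clinician": {"read_phi"},
    "data_scientist": {"read_deidentified"},
}

# In-memory stand-in for an append-only audit store.
audit_log = []


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Return a keyed hash so the log never contains the raw patient ID."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def access_record(user: str, role: str, action: str, patient_id: str, secret_key: bytes) -> bool:
    """Check the role's permissions and log the attempt, whether or not it is allowed."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "patient": pseudonymize(patient_id, secret_key),
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"role '{role}' may not perform '{action}'")
    return True


# Placeholder key for the demo; a real key belongs in a secrets manager.
demo_key = b"replace-with-a-managed-secret"
access_record("dr_lee", "clinician", "read_phi", "patient-001", demo_key)
print(audit_log[-1]["allowed"])
```

Note that denied attempts are logged before the error is raised. Failed access attempts are often the entries you most want to see during a security review.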

Choosing the right tech for your custom AI build

Once you have your data and your model in mind, it’s time to choose your toolkit. Selecting the right technology is about more than just picking the flashiest new platform; it’s about building a sustainable, secure, and effective solution. Your tech stack will be the foundation of your AI project, influencing everything from development speed to long-term maintenance.

This decision has two main parts. First, you’ll need to select the AI frameworks and platforms that are best suited for your specific healthcare application. Second, you have to create a solid plan for how this new solution will integrate with the complex web of systems already running in your healthcare environment. Getting this right is critical, and it’s where having a partner that manages the entire stack can make a huge difference. By handling the infrastructure and integrations, a comprehensive platform like Cake lets your team focus on what they do best: solving healthcare challenges.

How to find the best AI platforms for healthcare

The AI landscape is full of powerful tools, and your job is to find the one that fits your project’s purpose and complexity. You don’t need the most complicated tool if a simpler one will get the job done right. For many custom builds, developers turn to popular open-source libraries like PyTorch or TensorFlow. These frameworks provide the flexibility to build and train models from the ground up.

You can also look at platforms designed for certain tasks. For example, you might use a specialized tool like Microsoft Azure Health Bot for conversational AI or a more general platform like Google Vertex AI for a wider range of machine learning projects. The key is to match the tool to the task. There isn't a single "best" platform for every situation, so focus on what your solution needs to accomplish and choose the technology that gives you the clearest path to success.

Exploring powerful open-source models

You don’t have to build your AI solution from scratch. The AI community is built on powerful, flexible, and transparent open-source frameworks that give you a massive head start. For complex deep learning tasks, developers often turn to libraries like TensorFlow for its strength in building large-scale, production-ready models, or PyTorch for its flexibility in research and development. For more traditional machine learning, a tool like Scikit-learn might be the perfect fit. The advantage of these tools is that they are constantly being improved by a global community. The challenge, however, is managing the complex infrastructure needed to run them securely and at scale. This is where a managed platform can be invaluable, handling the compute, integrations, and deployment so your team can focus on the model itself.

How to integrate AI with your current systems

Your AI solution can’t operate in a silo. To be truly effective, it needs to communicate seamlessly with the systems your organization relies on every day, especially Electronic Health Records (EHRs). This is where a thoughtful integration plan becomes essential. Without it, your powerful new tool might end up being a clunky, standalone application that creates more work than it saves.

The key to smooth integration is interoperability. You’ll need to use established healthcare data standards, like FHIR (Fast Healthcare Interoperability Resources), to ensure your AI can securely access and exchange information with your EHR and other clinical systems. This connection needs to be built with security, privacy, and scalability in mind from the very beginning. Planning for this early ensures your AI solution can deliver real-time insights and automate tasks right where your team needs them most.
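To give a feel for what FHIR data looks like, here is a minimal sketch that parses a lab result shaped like a FHIR Observation resource and pulls out the fields a model might need. The JSON is a hand-written, illustrative example rather than output from any particular EHR, and `extract_lab_value` is a hypothetical helper name. A real integration would fetch resources through your EHR's authenticated FHIR API and handle the many optional fields the standard allows.

```python
import json

# Illustrative Observation for an HbA1c lab result; all values are made up.
observation_json = """
{
  "resourceType": "Observation",
  "status": "final",
  "code": {
    "coding": [
      {
        "system": "http://loinc.org",
        "code": "4548-4",
        "display": "Hemoglobin A1c/Hemoglobin.total in Blood"
      }
    ]
  },
  "subject": {"reference": "Patient/example"},
  "effectiveDateTime": "2024-01-15T09:30:00Z",
  "valueQuantity": {
    "value": 7.2,
    "unit": "%",
    "system": "http://unitsofmeasure.org",
    "code": "%"
  }
}
"""


def extract_lab_value(resource: dict) -> dict:
    """Flatten the parts of an Observation that a downstream model would consume."""
    if resource.get("resourceType") != "Observation":
        raise ValueError("expected an Observation resource")
    coding = resource["code"]["coding"][0]
    quantity = resource["valueQuantity"]
    return {
        "patient_ref": resource["subject"]["reference"],
        "code_system": coding["system"],
        "code": coding["code"],
        "value": quantity["value"],
        "unit": quantity["unit"],
        "taken_at": resource["effectiveDateTime"],
    }


lab = extract_lab_value(json.loads(observation_json))
print(lab)
```

Because the test is identified by a standard code system rather than a local name, the same parsing logic can work across systems that label the lab differently in their own interfaces.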

Who should be on your healthcare AI team?

Building a successful healthcare AI solution is a team sport, not a solo mission for your tech department. The complexity of healthcare means you need more than just brilliant coders; you need a group of experts who can bridge the gap between technology and real-world clinical practice. Think of it less like hiring a single developer and more like assembling a specialized medical team where every member brings a unique and critical perspective to the table. Your project's success depends on this blend of technical skill and deep industry knowledge.

A truly effective team brings together diverse expertise. On one side, you have your technical powerhouses: data scientists, AI and machine learning engineers, and software developers who can build and train the models. On the other, you have your indispensable domain experts: the doctors, nurses, and administrators who understand patient workflows, clinical needs, and the nuances of healthcare delivery. Collaboration between these two groups is non-negotiable. Your clinicians are the ones who can validate whether your solution actually solves a real problem for them and their patients, not just a theoretical one.

As you assemble your roster, remember to include people with a firm grasp of healthcare regulations. Their guidance will be essential for keeping your project compliant and ethically sound. You can build this team by hiring new talent with specific experience or by investing in training for your existing staff who already know your organization's culture and goals. By bringing together the right people, you create an environment where technical innovation is guided by practical, clinical wisdom, ensuring the solution you build is not only powerful but also genuinely useful and safe.

A practical guide to AI compliance and ethics in healthcare

When you’re building a tool that can impact someone’s health, getting the ethics and compliance right isn’t just a feature—it’s the foundation of your entire project. While a platform like Cake can manage the technical stack and accelerate your build, your team is ultimately responsible for creating a solution that is safe, fair, and trustworthy. This means going beyond the code to address the human side of AI.

Successfully handling these responsibilities comes down to three key areas. First, you need a deep understanding of the legal landscape, including the major regulations that govern patient data. Second, you must commit to ethical principles that ensure your AI is transparent and accountable. Finally, you have to actively work to identify and eliminate bias from your models to ensure equitable care for everyone. Tackling these challenges head-on is the only way to build a solution that clinicians will trust and patients will benefit from.

What you need to know about HIPAA and GDPR

Following rules like HIPAA and GDPR is non-negotiable. These regulations are designed to protect sensitive patient information, and compliance is essential for avoiding serious legal trouble and financial penalties. More importantly, adhering to these standards is how you build fundamental trust with both patients and providers.

Think of these regulations not as a restrictive checklist, but as a framework for responsible innovation. They guide you in setting up secure data handling practices and ensuring patient privacy is respected at every step. Your approach to HIPAA and other regulations will define your reputation and the long-term viability of your solution.

How to apply ethical AI principles

AI decisions in healthcare can’t be a “black box.” For a clinician to trust and act on a recommendation from your AI, they need to understand how the model arrived at its conclusion. This is the core idea behind transparent and explainable AI. It’s not enough for the tool to be accurate; it must also be interpretable, especially when a decision could have significant consequences for a patient’s care.

Putting ethical principles into practice means designing your system for accountability. Who is responsible if the AI makes a mistake? How can a clinician override a recommendation if their expertise suggests a different path? Building a trustworthy AI solution requires clear answers to these questions and a commitment to an AI Risk Management Framework that prioritizes patient safety and clinical judgment.

How to reduce bias in your AI models

An AI model is only as good as the data it’s trained on. If your training data is skewed, your model will be, too. For example, if an AI learns to identify a disease from data drawn primarily from one demographic group, it may be less accurate for others, leading to disparities in care. This is a critical risk, as algorithmic bias in healthcare can perpetuate and even amplify existing inequities.

To reduce bias, you must be intentional about sourcing diverse and representative datasets. Your work doesn’t stop once the model is built. You need to continuously test your AI for fairness across different patient populations, checking for any performance gaps. Regularly auditing your models for bias and refining them with more inclusive data is an ongoing process that is essential for creating a truly equitable healthcare solution.
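One way to make the fairness testing described above concrete is to compute the same metric separately for each patient group and compare the results. The sketch below is a minimal illustration in plain Python, not a complete fairness audit. The group labels, the sample records, and the function names `recall_by_group` and `largest_gap` are all hypothetical, and recall (the share of true cases the model catches) is just one of several metrics a team might choose.

```python
from collections import defaultdict

def recall_by_group(records):
    """Return recall (sensitivity) for each patient group.

    Each record is (group, y_true, y_pred), where 1 means the condition is present.
    Groups with no positive cases are left out because recall is undefined for them.
    """
    true_pos = defaultdict(int)
    actual_pos = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            actual_pos[group] += 1
            if y_pred == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / actual_pos[g] for g in actual_pos}

def largest_gap(per_group):
    """Difference between the best and worst performing group."""
    values = list(per_group.values())
    return max(values) - min(values)

# Hypothetical, made-up evaluation records: (group, actual, predicted)
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_a", 0, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_b", 0, 0),
]

per_group = recall_by_group(records)
gap = largest_gap(per_group)
print(per_group, gap)
```

A large gap between groups is a signal to revisit the training data for the underperforming group. What counts as an acceptable gap is a clinical and ethical judgment for your team, not something the code can decide.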

Common roadblocks in AI development (and how to fix them)

Even the most well-planned AI project will run into a few challenges. Thinking about these potential hurdles ahead of time is the best way to keep your project on track. While every project is unique, two of the most common issues that pop up during healthcare AI development are getting new tools to work with existing systems and, just as importantly, getting people to embrace and use the new solution. Addressing these technical and human factors from the start will make the entire process smoother and set your solution up for success.

How to make your different systems talk to each other

One of the biggest technical hurdles is interoperability: making sure your custom AI solution can communicate effectively with the systems a hospital or clinic already relies on. You aren't building in a vacuum; your tool needs to connect smoothly with existing software, especially electronic health records (EHRs). This is where a custom approach really shines, as it can be built specifically for your organization's needs. To make this connection happen, your development team will likely use established healthcare data standards. A key one to know is FHIR (Fast Healthcare Interoperability Resources), an HL7 standard that provides a common language for different health tech systems to exchange information securely and efficiently.
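To give a sense of what that common language looks like, FHIR represents each piece of data as a "resource," usually serialized as JSON. The sketch below parses a hand-written, fictional Patient resource using only Python's standard library. The sample values and the helper name `display_name` are assumptions for illustration. A real integration would retrieve resources from your EHR's FHIR endpoint with proper authorization instead of reading them from a string.

```python
import json

# A fictional FHIR Patient resource, written by hand for this example
PATIENT_JSON = """
{
  "resourceType": "Patient",
  "id": "example-123",
  "name": [{"use": "official", "family": "Rivera", "given": ["Ana", "Maria"]}],
  "birthDate": "1980-04-12"
}
"""

def display_name(resource):
    """Build 'Given Family' from the first name entry of a Patient resource."""
    if resource.get("resourceType") != "Patient":
        raise ValueError("expected a Patient resource")
    names = resource.get("name") or []
    if not names:
        return ""
    first = names[0]
    given = " ".join(first.get("given", []))
    return f"{given} {first.get('family', '')}".strip()

patient = json.loads(PATIENT_JSON)
name = display_name(patient)
print(name, patient["birthDate"])
```

Because every FHIR-capable system structures a Patient the same way, a helper like this can work across vendors without a custom parser for each one.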

Get your team on board with the new AI

A brilliant AI tool is only useful if people actually use it. Many AI projects fail not because the technology is flawed, but because they don't solve a real-world problem for the end-users. The best way to avoid this is to talk to everyone who will be affected (doctors, nurses, administrators, and even patients) before you write a single line of code. This user-centered design approach builds trust from day one. When designing the AI itself, focus on clear, empathetic communication. In healthcare, a calm and reassuring tone is far more effective than overly casual language.

Bridging the gap from the lab to the real world

A model that performs perfectly in a controlled lab setting is one thing; a tool that works in the fast-paced, complex reality of a clinic is another. The transition from development to deployment is where many projects stumble. To succeed, your solution must integrate seamlessly into existing clinical workflows, not disrupt them. This means it needs to communicate flawlessly with your EHR and other core systems. More importantly, it requires a continuous feedback loop with the clinicians who will use it every day. Their insights are what will transform a technically impressive model into a genuinely useful tool that improves their work and patient care. This iterative process of testing, gathering feedback, and refining is critical for real-world success.

What happens after your AI goes live?

Getting your AI solution up and running is a huge milestone, but the work doesn’t stop at launch. Think of it like a garden; it needs continuous care to thrive. The most successful AI initiatives are the ones that are actively monitored, measured, and improved over time. This ongoing process ensures your solution remains accurate, effective, and continues to deliver real value to your healthcare organization. Focusing on these final steps is what separates a short-term project from a long-term strategic asset. It’s all about creating a cycle of feedback and refinement that keeps your AI performing at its best.

Keep monitoring and improving your solution

Your AI model isn't static. Over time, its performance can decline in a process known as "model drift." This happens because the real-world data it encounters starts to differ from the data it was trained on. You can't stop real-world data from changing, but you can catch and correct drift if you have a plan for maintaining your AI solution from day one. This involves regularly tracking its performance metrics, retraining it with fresh data, and addressing any issues that pop up. By setting up a consistent monitoring and maintenance schedule, you ensure your AI remains a reliable and effective tool for your team, adapting as healthcare data and practices evolve.
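One common way to notice that incoming data has moved away from the training data is the Population Stability Index (PSI), which compares how a single feature is distributed in two samples. The sketch below is a minimal plain-Python version under stated assumptions: the age values, the bin edges, and the function names are hypothetical, and PSI is one option among several drift checks, not a method the text above prescribes.

```python
import math

def proportions(values, edges):
    """Share of values falling in each bin defined by sorted bin edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        idx = sum(v >= e for e in edges)  # number of edges at or below v
        counts[idx] += 1
    total = len(values)
    return [c / total for c in counts]

def psi(baseline, current, edges, eps=1e-6):
    """Population Stability Index between two samples of one feature."""
    base_p = proportions(baseline, edges)
    curr_p = proportions(current, edges)
    score = 0.0
    for b, c in zip(base_p, curr_p):
        b, c = max(b, eps), max(c, eps)  # avoid log(0) for empty bins
        score += (c - b) * math.log(c / b)
    return score

# Hypothetical patient ages at training time vs. in recent production traffic
training_ages = [34, 41, 45, 52, 58, 60, 63, 67, 71, 75]
recent_ages = [62, 66, 68, 70, 72, 74, 77, 79, 81, 85]
edges = [40, 50, 60, 70]

same = psi(training_ages, training_ages, edges)
shifted = psi(training_ages, recent_ages, edges)
print(same, shifted)
```

A PSI of zero means the two distributions match. A frequently quoted rule of thumb treats values above roughly 0.25 as a significant shift, but that threshold is a convention, so set your own alert levels with your clinical and data teams.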

Measure your results and adapt your strategy

How do you know if your AI is truly making a difference? You have to measure its performance and impact. Start by testing how well the model performs on new data it has never seen before, looking at metrics like accuracy and precision. It's also critical to check for any unintended bias to ensure fair outcomes for all patient groups. If the performance isn't quite right, don't worry; that's a normal part of the process. You can fine-tune the model by adjusting its settings (its hyperparameters) or training it with more data. Creating a feedback loop to make improvements based on input from clinicians and patients is also incredibly valuable for guiding your adjustments.
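For readers who want to see how accuracy and precision are calculated, here is a minimal sketch in plain Python. The labels are made up, the function names are hypothetical, and it assumes a simple yes/no prediction where 1 means the condition is present.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == 1 and actual == 1:
            tp += 1
        elif predicted == 1 and actual == 0:
            fp += 1
        elif predicted == 0 and actual == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn

def accuracy(tp, fp, tn, fn):
    """Share of all predictions that were correct."""
    return (tp + tn) / (tp + fp + tn + fn)

def precision(tp, fp):
    """Share of positive predictions that were actually positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0

# Made-up held-out labels and model predictions
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
acc = accuracy(tp, fp, tn, fn)
prec = precision(tp, fp)
print(acc, prec)
```

The two numbers answer different questions. When a condition is rare, accuracy alone can look strong even if the model misses many positive cases, which is why it is usually reported alongside other metrics.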

Frequently asked questions

Why can't I just use a general, off-the-shelf AI tool for my clinic?

While general AI tools are powerful, they aren't designed for the specific, high-stakes environment of healthcare. Your clinic has unique workflows, patient data requirements, and compliance needs that a one-size-fits-all solution can't address. A custom solution is built to integrate perfectly with your existing systems, like your EHR, and is tailored to solve the specific problems your staff faces every day, leading to better adoption and more meaningful results.

This sounds very technical. What's the most important non-technical step my team should focus on?

Before you even think about technology, the most critical step is to clearly define the problem you want to solve. This involves talking to the people who will actually use the tool—your clinicians, nurses, and administrators. By understanding their biggest challenges and daily frustrations, you can set a specific, measurable goal. Focusing on a real-world need ensures you build a solution that people will actually want to use.

My team is great with technology, but we're worried about the healthcare regulations. How do we start?

Navigating regulations like HIPAA is a valid concern, and it's a non-negotiable part of the process. The best way to start is by making compliance a core part of your project plan from day one, not an afterthought. This means building a team that includes someone with regulatory expertise and designing your data management plan around security principles like encryption and strict access controls. Think of these rules as a framework for building trust, not just a hurdle to clear.

Our biggest challenge is getting new software to work with our existing EHR system. How does a custom AI solution handle that?

This is a common and significant challenge, and it's one of the main reasons a custom approach is so effective. A custom AI solution is designed from the start with your specific systems in mind. It uses established healthcare data standards, like FHIR, to create a secure and reliable bridge to your EHR. This ensures the AI can access the information it needs and deliver insights directly into your team's existing workflow, rather than forcing them to use a separate, disconnected application.

Once our AI solution is built and launched, is the project finished?

Launching your AI is a major accomplishment, but the work isn't over. An AI model needs ongoing attention to remain effective. Its accuracy can degrade over time as real-world data shifts away from the data it was trained on, a process known as model drift, so you'll need to monitor its performance continuously. That means regularly retraining the model with new data and making adjustments based on feedback from users. This cycle of monitoring and improvement is what turns a good AI tool into a lasting strategic asset for your organization.

Defining and tracking success metrics

This is where everything comes full circle, connecting back to the goals you set at the very beginning. You can't know if your AI is successful if you haven't defined what success looks like. Your metrics should directly reflect the problem you wanted to solve. For example, if your goal was to reduce administrative tasks, a key metric would be the percentage of time saved for your clinical staff. If it was to improve diagnostic accuracy, you'd measure the change in accuracy against the baseline you recorded before the tool was introduced. Defining these key performance indicators (KPIs) gives you a clear benchmark to measure against. These numbers aren't just for a final report; they are your guide for continuous improvement, helping you spot issues like model drift and showing you where to focus your efforts for the next iteration.
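As a small worked example of the time-saved KPI mentioned above, the sketch below compares a baseline measurement with a post-launch one. The function name and the minute values are hypothetical; your own baseline would come from measurements taken before the tool went live.

```python
def percent_time_saved(baseline_minutes, current_minutes):
    """Percentage reduction in time spent on a task relative to the baseline."""
    if baseline_minutes <= 0:
        raise ValueError("baseline must be positive")
    return (baseline_minutes - current_minutes) / baseline_minutes * 100

# Hypothetical: daily documentation time per clinician, before and after launch
saved = percent_time_saved(baseline_minutes=120, current_minutes=90)
print(f"{saved:.1f}% time saved")
```

Tracking this number at regular intervals, and not just once, is what lets it double as an early warning when the tool's usefulness starts to slip.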

About Author

Cake Team
