Real-Time Anomaly Detection in Automation: A Full Guide

Your production environment is a constant stream of data, from sensor readings and transaction logs to user activity. Hidden within that stream are the first faint signals of trouble: a machine about to fail, an anomalous timing event, or a security threat. The challenge isn't a lack of information but finding these critical signals in time. Implementing real-time anomaly detection in your automation systems gives you an always-on watchtower that spots unusual patterns the moment they occur, which is how you shift from reacting to problems to preventing them. This guide walks you through the essentials, from choosing the right algorithms to overcoming common implementation hurdles.

Key takeaways

- Get ahead of problems before they start: The core value of real-time anomaly detection is its ability to catch unusual patterns instantly. This lets you fix issues like equipment malfunctions or supply chain hiccups before they turn into expensive failures.

- The right algorithm is only half the battle: While choosing a method like an Isolation Forest or Autoencoder is key, a truly effective system is built on a foundation of clean data and carefully calibrated alert thresholds to ensure accuracy.

- Measure what matters to build a system you can trust: An effective system finds the right anomalies without overwhelming your team with false alarms. Track metrics like response time and false positive rates to fine-tune performance and prove its value.

What is real-time anomaly detection?

Think of real-time anomaly detection as an always-on security guard for your data. Its job is to spot unusual patterns or strange behaviors in your data streams the moment they happen. Instead of finding out about a problem in a weekly report, you get an alert right away. This is crucial for things like predictive maintenance, where the goal is to fix equipment before it breaks down, saving you from costly downtime and production losses. It’s about shifting from a reactive mindset to a proactive one, giving you the power to act immediately.

This approach is essential in modern manufacturing and tech, where systems need to collect and analyze data constantly to run efficiently. By monitoring key performance indicators (KPIs) online, you can catch issues as they develop, not after they’ve already caused damage.

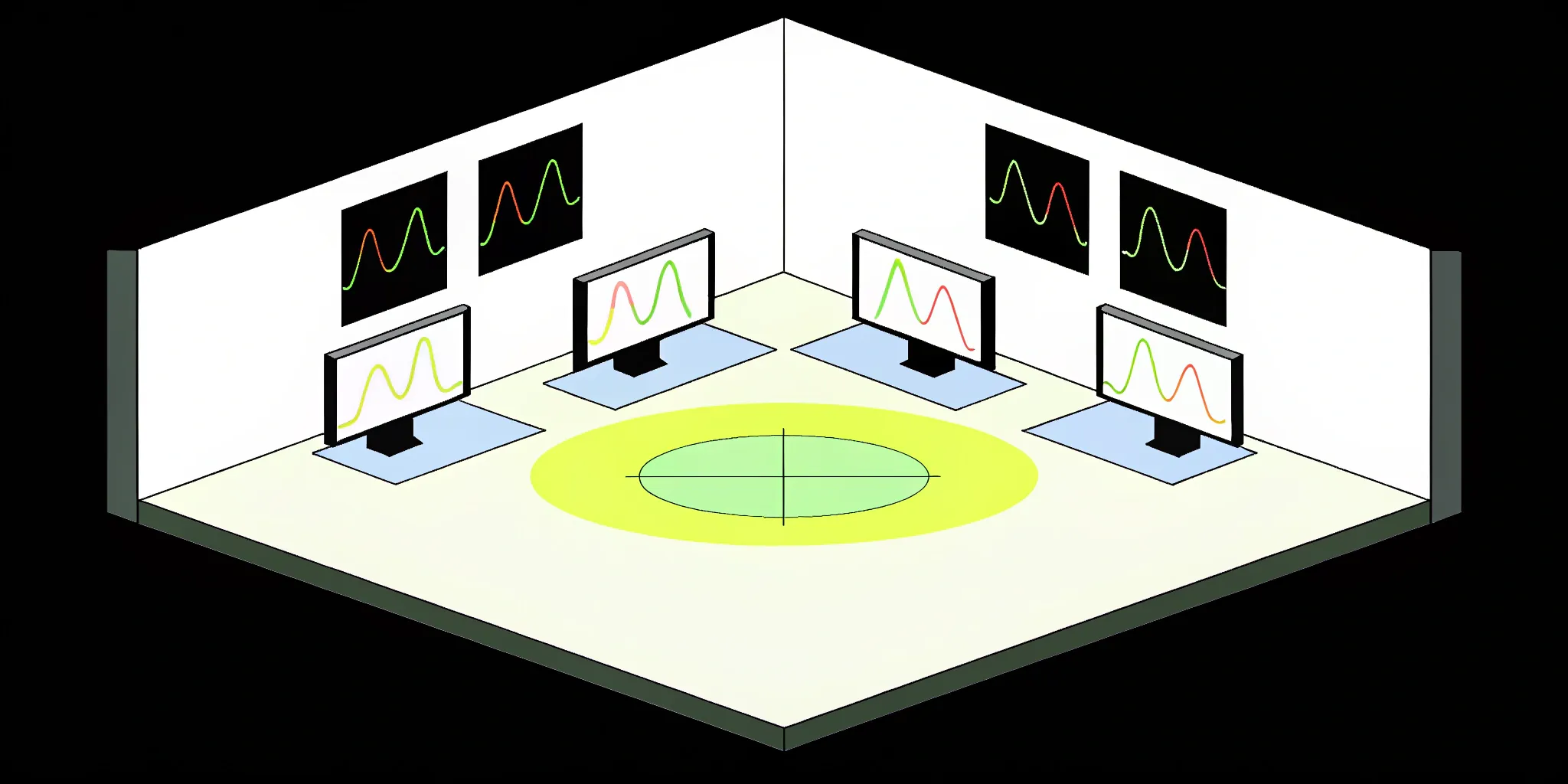

What makes up an anomaly detection system?

To get real-time anomaly detection running, a few key pieces must work together seamlessly. It all starts with a constant flow of data from your systems—think sensor readings from factory machines, transaction logs, or user activity on a website. This data feeds into a processing engine that analyzes information on the fly. The core of the system is the anomaly detection model, which is trained to understand what 'normal' looks like for your specific operations. Finally, an alerting mechanism instantly notifies the right people when the model flags something unusual, so they can investigate and take action.

How it works in the real world

In a live environment, the process is a continuous loop. As new data streams in, the detection model compares it against an established baseline of normal behavior. This baseline isn't static; it learns and adapts as your operations evolve. When the system encounters a data point that deviates significantly from this norm, it flags it as an anomaly. The real power here is that it doesn't just tell you something is wrong; it also gives you the context to narrow down the root cause quickly. By combining statistical methods with machine learning, these systems can identify real issues while keeping false alarms low.

Understanding the need for speed: what is latency?

In anomaly detection, timing is everything. Latency is the delay between when an event happens and when your system detects it. The goal is to make this delay as close to zero as possible. When you're dealing with massive, fast-moving data streams, finding problems right away, rather than hours or days later, is critical for a fast response. Think about it: if a critical machine on your assembly line starts showing signs of failure, you want to know immediately, not in tomorrow's report after production has already stopped. Reducing latency means you can react quickly to issues like quality control dips, supply chain disruptions, or security threats, preventing small problems from becoming major disasters.

The technology making real-time analysis possible

Achieving this kind of speed is difficult with traditional databases designed for batch queries. You need technology built specifically for real-time analytics. Modern data platforms are designed to ingest and query enormous datasets in milliseconds, so they can keep up with the firehose of data without slowing down. Setting up and managing this high-performance infrastructure, from the compute resources to the open-source platform elements, can be complex. This is why many teams turn to comprehensive solutions like Cake, which manage the entire stack, letting you focus on the results instead of the underlying architecture.

A field built on extensive research

While the technology feels cutting-edge, the principles behind real-time anomaly detection are built on a solid foundation of extensive research. One recent review, for example, analyzed 90 different studies to organize the various approaches into a clear framework. This body of work provides a roadmap for building effective systems and validates the methods used in production today. This means that when you implement an AI-based anomaly detection system, you're not stepping into the unknown. The results of this research guide developers in creating robust and reliable software, ensuring the solutions you deploy are based on proven, well-understood principles.

What kinds of anomalies will you find?

Not all anomalies look the same, which is why detection systems need to be sophisticated. They generally fall into three categories. First are point anomalies, or outliers—single, abrupt events like a sudden spike in server errors. Next are collective anomalies, where a group of data points together signal a problem, even if each one looks normal on its own. Think of a machine’s vibration slowly increasing over an hour. Finally, there are contextual anomalies, which are normal in one situation but not another. A surge in website traffic during a marketing campaign is expected, but the same surge at 3 a.m. is suspicious. Understanding these types of anomalies helps you choose the right detection methods for your needs.


Anomalies in complex multi-agent systems

The challenge of anomaly detection grows exponentially when you have multiple AI agents working together. In these multi-agent systems, the complexity comes not just from individual agents, but from the countless ways they can interact. As researchers at Galileo have pointed out, even a small group of agents can create millions of potential interactions, leading to behaviors you could never have predicted. This makes it much harder to spot when something is going wrong compared to a system with a single agent.

Anomalies in these environments tend to fall into a few specific categories:

- Behavioral anomalies: This is when a single agent starts acting out of character, even when it has the same information as its peers.

- Communication anomalies: The way agents talk to each other can break down. They might start sending too many messages and creating noise, or go silent and fail to respond when needed.

- Resource utilization anomalies: An agent might start hogging shared resources like memory or processing power, or not use enough, causing bottlenecks elsewhere in the system.

- Performance anomalies: The overall efficiency of the entire system drops below its expected baseline, but there isn't one obvious agent to blame.

- Emergent behavioral anomalies: These are the trickiest to handle. They are completely new and unexpected behaviors that arise from the complex group dynamic—problems that no single agent would cause on its own.

Effectively managing these systems requires a robust platform that can adapt to their constantly changing nature. The key is to continuously track what "normal" behavior looks like for each agent and for the system as a whole. By establishing and updating these baselines with machine learning, you can flag unusual patterns right away. This allows your team to intervene before a minor hiccup between a few agents cascades into a major system-wide problem.

The most effective techniques for detecting anomalies

Once you know what you’re looking for, you can choose the right technique to find it. Anomaly detection isn’t a one-size-fits-all process. The best approach depends on your data, your goals, and the specific problem you’re trying to solve. Some methods are great for spotting a single weird number, while others excel at finding strange patterns over time. Let's walk through the most common techniques you'll encounter so you can get a feel for what might work best for your production environment.

Starting with foundational methods

You don’t always need a complex machine learning model to get started with anomaly detection. In fact, some of the most reliable and interpretable methods are based on straightforward statistical rules. These foundational techniques are excellent for establishing a baseline and catching obvious problems without the overhead of training a sophisticated model. They are quick to implement, easy for your team to understand, and highly effective for a wide range of common issues. Think of them as the essential first layer of your monitoring strategy—the simple checks that can alert you to a problem long before it becomes critical.

Out-of-range detection

This is the simplest method in the playbook. It works by setting a predefined minimum and maximum value for a given metric and flagging any data point that falls outside that boundary. For example, if a pressure sensor on a manufacturing line should never exceed 100 PSI, any reading above that is an immediate anomaly. This approach is perfect for variables with known physical or logical limits. While it won't catch subtle deviations within the "normal" range, it's an incredibly effective first pass for catching impossible values and clear operational failures that need immediate attention.
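As a minimal sketch of the check described above, the snippet below flags readings outside a fixed band. The `Limits` class, the `out_of_range` helper, and the 0 to 100 PSI band are illustrative assumptions, not part of any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    """Allowed band for a metric (hypothetical helper)."""
    low: float
    high: float


def out_of_range(value: float, limits: Limits) -> bool:
    """Return True when the reading falls outside the allowed band."""
    return value < limits.low or value > limits.high


# Assumed limits for a pressure sensor, in PSI.
PRESSURE_LIMITS = Limits(low=0.0, high=100.0)

readings = [42.0, 99.5, 100.0, 103.2, -1.0]
flagged = [r for r in readings if out_of_range(r, PRESSURE_LIMITS)]
print(flagged)
```

A reading exactly at the limit is treated as valid here; whether the boundary itself counts as an anomaly is a choice to make per metric.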

Timeout detection

An anomaly isn't always a bad data point; sometimes, it's the absence of a data point altogether. Timeout detection identifies when a sensor or system fails to report data within an expected interval. Imagine a fleet of delivery drones that are required to send their location every 10 seconds. If one drone goes silent for a full minute, this method flags it as a potential issue, like a crash or a communication failure. This is critical for monitoring the health and uptime of connected devices and services, as silence is often the first indicator of a serious problem that requires investigation.
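One way to sketch timeout detection is to keep the time of each device's last report and compare it with the current time. The device IDs, the `find_silent_devices` helper, and the 30-second timeout below are assumptions chosen for illustration.

```python
def find_silent_devices(last_seen: dict, now: float, timeout_s: float) -> list:
    """Return IDs of devices whose most recent report is older than timeout_s."""
    return sorted(dev for dev, ts in last_seen.items() if now - ts > timeout_s)


# Hypothetical drone IDs mapped to the time (in seconds) of their last report.
last_seen = {"drone-a": 995.0, "drone-b": 940.0, "drone-c": 999.0}

silent = find_silent_devices(last_seen, now=1000.0, timeout_s=30.0)
print(silent)
```

In a running system this check would be called on a timer, since a silent device by definition never triggers an incoming event.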

Rate-of-change detection

This technique focuses not on the value of a data point itself, but on how quickly that value is changing. It flags an anomaly when the difference between two consecutive data points is too large. For instance, the water level in a reservoir might rise slowly during a rainstorm, which is normal. But a sudden, sharp increase could signal a flood or a broken gate, triggering an alert. This method is especially useful for systems where gradual change is expected, but abrupt spikes or drops are signs of trouble, allowing you to detect critical events based on their velocity.
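The idea can be sketched by dividing the change between consecutive samples by the elapsed time and comparing it with a limit. The `rate_of_change_alerts` function, the sample data, and the 0.005 meters-per-second limit are assumptions for illustration only.

```python
def rate_of_change_alerts(samples, max_rate):
    """samples: list of (timestamp_s, value) pairs in time order.

    Return the timestamps at which |change in value / change in time|
    exceeded max_rate.
    """
    alerts = []
    for (t0, v0), (t1, v1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        if abs(v1 - v0) / dt > max_rate:
            alerts.append(t1)
    return alerts


# Hypothetical reservoir levels in meters, sampled every 60 seconds.
levels = [(0, 10.00), (60, 10.02), (120, 10.05), (180, 10.90), (240, 10.92)]
alerts = rate_of_change_alerts(levels, max_rate=0.005)
print(alerts)
```

Dividing by the elapsed time, rather than comparing raw differences, keeps the check meaningful when samples arrive at irregular intervals.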

Interquartile range (IQR) detection

Stepping up the statistical sophistication a bit, the IQR method is excellent for identifying outliers without setting hard-coded limits. It works by looking at the middle 50% of your recent data, the range between the first and third quartiles, to define what's "typical" at that moment. A common rule flags any data point that falls more than 1.5 times the IQR below the first quartile or above the third quartile of a rolling window. This makes it adaptive to data that has changing trends or seasonality. It's a robust way to spot short-term, unusual events that might otherwise get lost if you were only looking at a fixed minimum or maximum, making it a smart choice for dynamic environments.
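A rolling IQR check might look like the sketch below. The class name, the window size, and the minimum number of points are assumptions; the multiplier `k=1.5` is the conventional choice for this rule, not a requirement.

```python
from collections import deque
from statistics import quantiles


class RollingIQRDetector:
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] of a rolling window."""

    def __init__(self, window: int = 50, k: float = 1.5, min_points: int = 8):
        self.values = deque(maxlen=window)
        self.k = k
        self.min_points = min_points

    def is_anomaly(self, x: float) -> bool:
        flagged = False
        if len(self.values) >= self.min_points:
            q1, _, q3 = quantiles(self.values, n=4)
            iqr = q3 - q1
            flagged = x < q1 - self.k * iqr or x > q3 + self.k * iqr
        if not flagged:
            # Only unflagged values update the window (a design assumption).
            self.values.append(x)
        return flagged


detector = RollingIQRDetector(window=20)
stream = [10, 11, 9, 10, 12, 11, 10, 9, 11, 10, 30, 10]
flagged_values = [x for x in stream if detector.is_anomaly(x)]
print(flagged_values)
```

Keeping flagged values out of the window stops a burst of outliers from widening the range, at the cost of adapting more slowly to a genuine level shift. Which behavior is preferable depends on the metric.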

Z-score detection

Like IQR, the Z-score method adapts to recent data, but it measures how far a data point is from the average in terms of standard deviations. In simple terms, it calculates a score that tells you how unusual a data point is compared to the recent norm. A Z-score of 3, for example, means the point is three standard deviations away from the average; for roughly normally distributed data, fewer than 0.3% of points land that far out. This technique is powerful because it automatically adapts to the volatility of your data; a wider spread of "normal" data means a point has to be much further out to be considered an anomaly.
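The sketch below computes a Z-score against a rolling window and flags values beyond a threshold. The class name, the window size, and the threshold of 3 are illustrative assumptions.

```python
from collections import deque
from statistics import mean, stdev


class RollingZScore:
    """Score each value against the mean and standard deviation of a window."""

    def __init__(self, window: int = 100, threshold: float = 3.0, min_points: int = 10):
        self.values = deque(maxlen=window)
        self.threshold = threshold
        self.min_points = min_points

    def is_anomaly(self, x: float) -> bool:
        z = 0.0
        history = list(self.values)
        if len(history) >= self.min_points:
            sigma = stdev(history)
            if sigma > 0:
                z = (x - mean(history)) / sigma
        # Every value joins the window, so the baseline keeps adapting.
        self.values.append(x)
        return abs(z) > self.threshold


detector = RollingZScore(window=50, threshold=3.0)
stream = [10.0, 10.2] * 10 + [15.0]
flags = [detector.is_anomaly(x) for x in stream]
print(flags[-1])
```

Because the score is scaled by the window's standard deviation, the same threshold can be reused across metrics with very different units and volatility.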

A look at statistical methods

Think of statistical methods as the foundation of anomaly detection. They use established mathematical principles to identify data points that just don't fit in with the rest. These techniques are excellent for finding outliers that deviate from the expected distribution of your data. One powerful method for real-time data is the Random Cut Forest (RCF), which is designed to work with streaming data. It maintains an ensemble of randomly partitioned trees over a sample of the stream to isolate anomalies quickly and efficiently. This makes it a solid choice for production systems where data is constantly flowing and you need immediate insights into unusual events.

Applying machine learning algorithms

Machine learning takes anomaly detection a step further by learning from your data. These algorithms can be supervised, meaning they learn from data that's already been labeled as "normal" or "anomalous," or unsupervised, where they find unusual patterns without any pre-existing labels. Common techniques like clustering and classification help group data and spot deviations from expected behavior. For these models to work reliably in production, it's crucial to ensure your data is complete and you have a strategy for handling any missing values, as gaps can easily throw off the results.


Why unsupervised methods are a great fit for real-time

In a live production environment, you can’t possibly predict every type of anomaly you’ll encounter. This is where unsupervised methods really shine. Instead of needing pre-labeled data to learn from, they analyze your data stream as it happens, figuring out what “normal” looks like on their own. This approach is incredibly powerful because it’s adaptable. As your systems evolve and the definition of normal behavior shifts, an unsupervised model can adjust to these changing norms without needing to be manually retrained with new labels. This makes them far more efficient and practical for real-time use cases, where you don’t have the time to stop, label new data, and redeploy a model every time something changes.

Using deep learning for complex patterns

When you're dealing with highly complex or high-dimensional data, deep learning models are incredibly effective. Models like autoencoders and recurrent neural networks (RNNs) can uncover subtle anomalies that other methods might miss. An autoencoder learns to compress and reconstruct normal data, so when it fails to accurately reconstruct a data point, that point is flagged as an anomaly. RNNs are particularly good with sequential data, like sensor readings over time, because they can recognize temporal patterns and spot when a sequence of events is out of the ordinary.

Specialized techniques for complex systems

But some anomalies aren't just subtle; they're hidden in the relationships between different parts of your system. In complex environments like multi-agent AI or distributed IoT networks, components are constantly interacting. A problem might not show up in a single data stream but in the way different parts communicate—or fail to. Standard detection methods that look at metrics in isolation can miss these relational issues entirely. To find them, you need techniques that can analyze the system as a whole, focusing on the connections that hold everything together.

Mapping system interactions with graphs

One of the most effective ways to understand a complex system is to visualize it. You can create "graphs" that map out how different agents or components communicate, share resources, and depend on one another. Think of it as a blueprint of your system's relationships. This network view helps you see the flow of information and identify bottlenecks or points of failure that wouldn't be obvious from looking at individual performance metrics. By mapping these interactions, you can spot when a normally stable connection becomes erratic or when a critical dependency is broken, allowing you to address hidden structural problems before they cause a major outage.
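To make the graph idea concrete, the sketch below stores the communication graph as per-edge message counts and compares a current interval against a baseline interval of the same length. The agent names, the counts, and the factor-of-three rule are all assumptions for illustration.

```python
def edge_anomalies(baseline: dict, current: dict, ratio: float = 3.0) -> dict:
    """Compare message counts per (sender, receiver) edge against a baseline."""
    findings = {}
    for edge in baseline.keys() | current.keys():
        expected = baseline.get(edge, 0)
        observed = current.get(edge, 0)
        if expected == 0 and observed > 0:
            findings[edge] = "new edge"
        elif observed == 0 and expected > 0:
            findings[edge] = "silent"
        elif expected > 0 and (observed > expected * ratio or observed * ratio < expected):
            findings[edge] = "rate shift"
    return findings


# Hypothetical message counts per edge over two equal-length intervals.
baseline = {
    ("planner", "executor"): 100,
    ("executor", "logger"): 50,
    ("planner", "logger"): 10,
}
current = {
    ("planner", "executor"): 420,
    ("executor", "logger"): 48,
    ("executor", "billing"): 5,
}

findings = edge_anomalies(baseline, current)
for edge, reason in sorted(findings.items()):
    print(edge, reason)
```

Each of the three findings is a relational signal: none of them would necessarily show up in any single agent's own metrics.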

Analyzing dependencies and relationships

Anomalies in multi-agent systems are notoriously difficult to find because the complexity grows exponentially with each new component. Even a system with just ten agents can have millions of potential interaction pathways, leading to all kinds of unexpected behaviors. Traditional methods often fall short here because they don't account for the intricate web of dependencies between parts. A slight dip in performance in one microservice might seem insignificant on its own, but if five other services depend on it, that small dip can trigger a cascade of failures. Analyzing these relationships is key to understanding the true health of your system and catching problems that arise from the interactions themselves.
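One simple way to reason about cascades is to compute which services sit downstream of a degraded component. The sketch below does this with a breadth-first search over a dependency map; the service names and the `downstream_of` helper are hypothetical.

```python
from collections import deque


def downstream_of(service: str, depends_on: dict) -> set:
    """Return every service that directly or transitively depends on `service`."""
    # Invert the map: for each dependency, who relies on it?
    dependents = {}
    for svc, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(svc)

    seen, queue = set(), deque([service])
    while queue:
        current = queue.popleft()
        for nxt in dependents.get(current, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


# Hypothetical dependency map: each service lists what it calls.
depends_on = {
    "checkout": ["payments", "inventory"],
    "payments": ["auth"],
    "inventory": ["db"],
    "auth": ["db"],
    "db": [],
}

affected = downstream_of("db", depends_on)
print(sorted(affected))
```

Weighting an anomaly by the size of this downstream set is one way to prioritize a small dip in a heavily depended-on service over a larger dip in an isolated one.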

Why time series analysis matters

In most production environments, data is collected sequentially over time, which is where time series analysis shines. This technique focuses on analyzing data points recorded in chronological order to identify trends, seasonal patterns, and, most importantly, anomalies. By monitoring KPIs over time, you can spot sudden spikes or dips that signal a problem. This proactive approach allows you to address potential issues in your manufacturing or operational processes before they become critical, helping you maintain smooth operations and prevent costly downtime.

How to choose the correct algorithm

Picking the right algorithm for real-time anomaly detection can feel like searching for a needle in a haystack, but it’s really about matching the tool to your specific problem. There isn’t a single "best" algorithm; the ideal choice depends entirely on your data and what you’re trying to achieve. Before you can select a method, you need to understand the characteristics of your data. Are you working with a massive, high-dimensional dataset? Is your data seasonal or trending over time? Do you have examples of anomalies, or are you flying blind with unlabeled data?

Answering these questions will point you toward the right family of algorithms. Some methods are built for speed and efficiency, making them perfect for real-time applications where a quick response is critical. Others offer deep, nuanced insights at the cost of more computational power, which might be better for offline analysis or less time-sensitive tasks. The goal is to find a balance that fits your operational constraints and business goals. We’ll walk through a few of the most effective and popular options. Think of this as a field guide to help you identify which approach will work best for your unique environment, ensuring your system is both accurate and efficient.


When to use isolation forests

If you’re dealing with a large dataset with many variables, the Isolation Forest algorithm is a fantastic starting point. It’s an unsupervised learning method that works by, quite literally, isolating anomalies. Instead of building a complex profile of what "normal" data looks like, it randomly partitions the data until every single point is isolated. The logic is simple: anomalies are few and different, so they are typically much easier to isolate than normal points. This makes the algorithm incredibly efficient, especially when you need to find outliers in high-dimensional data without having pre-labeled examples of what an anomaly looks like.
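The isolation idea can be illustrated with a small pure-Python sketch. This is a simplified teaching version, not a production implementation; in practice a library implementation would usually be preferable. The tree count, sample size, and synthetic data are assumptions.

```python
import math
import random


def c_factor(n: int) -> float:
    """Average path length of an unsuccessful binary search tree lookup over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + 0.5772156649) - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Recursively split on a random dimension at a random value."""
    if depth >= max_depth or len(points) <= 1:
        return ("leaf", len(points))
    dim = rng.randrange(len(points[0]))
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:
        return ("leaf", len(points))
    split = rng.uniform(lo, hi)
    left = [p for p in points if p[dim] < split]
    right = [p for p in points if p[dim] >= split]
    return ("node", dim, split,
            build_tree(left, depth + 1, max_depth, rng),
            build_tree(right, depth + 1, max_depth, rng))


def path_length(tree, x, depth=0):
    """Depth at which x lands, plus an adjustment for unsplit leaf points."""
    if tree[0] == "leaf":
        return depth + c_factor(tree[1])
    _, dim, split, left, right = tree
    return path_length(left if x[dim] < split else right, x, depth + 1)


def anomaly_scores(data, queries, n_trees=100, sample_size=64, seed=0):
    """Return a score in (0, 1] per query; higher means easier to isolate."""
    if len(data) < 2:
        raise ValueError("need at least two training points")
    rng = random.Random(seed)
    sample_size = min(sample_size, len(data))
    max_depth = math.ceil(math.log2(sample_size))
    trees = [build_tree(rng.sample(data, sample_size), 0, max_depth, rng)
             for _ in range(n_trees)]
    norm = c_factor(sample_size)
    return [2 ** (-sum(path_length(t, x) for t in trees) / n_trees / norm)
            for x in queries]


# Synthetic "normal" data: 256 points around the origin.
data_rng = random.Random(1)
train = [(data_rng.gauss(0, 1), data_rng.gauss(0, 1)) for _ in range(256)]

inlier_score, outlier_score = anomaly_scores(train, [(0.0, 0.0), (8.0, 8.0)])
print(inlier_score < outlier_score)
```

The point far from the training data reaches a leaf after fewer splits on average, which translates into a higher score than the point at the center.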

When one-class SVM is your best bet

The One-Class Support Vector Machine (SVM) is another powerful tool, especially when you have a clean dataset that mostly consists of normal behavior. This algorithm works by learning a boundary that encloses the majority of your data points. Think of it as drawing a circle around the "normal" cluster. Any new data point that falls outside of this learned boundary is flagged as an anomaly. It's particularly useful in situations like fraud detection or network intrusion, where you have plenty of examples of normal activity but very few, if any, examples of the anomalies you want to catch.

How autoencoders spot outliers

When your data is highly complex, like images or intricate sensor readings, autoencoders are an excellent choice. An autoencoder is a type of neural network that learns to compress and then reconstruct data. It’s trained exclusively on normal data, becoming an expert at recreating it. When a new data point comes in, the autoencoder tries to reconstruct it. If the point is normal, the reconstruction will be very accurate. But if it’s an anomaly, the network will struggle, resulting in a high reconstruction error. This error is your signal that you’ve found something unusual.

The role of random forests

While often used for classification, the Random Forest algorithm can be adapted for anomaly detection. A random forest is an ensemble of many individual decision trees. One way to find anomalies is to measure how close a new data point is to the rest of the data, for example by counting how often it lands in the same leaf as other points. If a point is consistently isolated or lands in a sparse region across many trees in the forest, it's likely an outlier. This method is robust and performs well across a variety of datasets. It leverages the "wisdom of the crowd" by combining the output of multiple trees to make a more accurate and stable judgment.

A simple checklist for choosing your algorithm

So, how do you make the final call? Start by looking at your data. Is it high-dimensional? Isolation Forests and Autoencoders are strong contenders. Do you have mostly normal data to train on? A One-Class SVM might be perfect. Next, consider your resources. Some algorithms, like deep learning models, require more computational power. Finally, think about your specific use case and operational needs. The best approach is often to experiment with a couple of different models to see which one performs best on your data. Your operational requirements will ultimately guide you to the algorithm that provides the right balance of accuracy, speed, and resource efficiency.

Your guide to a smooth implementation

Putting a real-time anomaly detection system into production isn't just about picking the right algorithm. A successful launch depends on thoughtful planning and a commitment to ongoing refinement. Think of it as building a strong foundation before you put up the walls. By focusing on data quality, smart alerting, and continuous learning from the start, you can create a system that not only works but also delivers real value to your team without causing unnecessary headaches. Let's walk through the key steps to get it right.

1. Get your data ready for analysis

Your model is only as good as the data you feed it, and real-time systems are especially sensitive to data issues. Incomplete or messy data can lead to inaccurate results and missed anomalies. One of the biggest hurdles is handling missing data, which can throw off your model's predictions and reduce its reliability. Before you even think about deploying, establish a process for cleaning and preparing your data streams. This means ensuring data is complete, consistent, and correctly formatted. A solid data preparation pipeline is the first and most critical step toward building an anomaly detection system you can trust.

2. Finding the sweet spot for detection thresholds

Setting the right threshold is a balancing act. If your threshold is too sensitive, your team will be flooded with false positives, leading to alert fatigue. If it’s not sensitive enough, you’ll miss critical anomalies, defeating the purpose of the system. There’s no magic number here; the ideal setting depends on your specific use case and tolerance for risk. Start with a baseline and plan to fine-tune it as you gather more data. A good deployment checklist will always include a plan for adjusting thresholds to minimize both false positives and negatives, ensuring your system is effective without being disruptive.
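The trade-off can be made concrete by computing precision and recall at several candidate thresholds on a small labeled sample. The scores and labels below are made up for illustration, and `precision_recall` is a hypothetical helper.

```python
def precision_recall(scores, labels, threshold):
    """Treat score >= threshold as 'flagged' and compare with ground truth."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if s >= threshold and y)
    fp = sum(1 for s, y in pairs if s >= threshold and not y)
    fn = sum(1 for s, y in pairs if s < threshold and y)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


# Hypothetical anomaly scores with hand-labeled truth (True = real anomaly).
scores = [0.10, 0.20, 0.35, 0.40, 0.55, 0.60, 0.80, 0.90]
labels = [False, False, False, True, False, True, True, True]

for threshold in (0.3, 0.5, 0.7):
    p, r = precision_recall(scores, labels, threshold)
    print(f"threshold={threshold:.1f} precision={p:.2f} recall={r:.2f}")
```

On this sample, raising the threshold from 0.3 to 0.7 removes every false positive but misses half of the real anomalies, which is the balancing act in miniature.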

3. A smart way to manage alerts

An alert is only useful if it leads to action. When your system detects an anomaly, it needs to notify the right people in a way that makes sense. This means choosing the right metrics to monitor so you’re not just creating noise. To protect against emerging threats, you need to ensure every alert is both relevant and actionable. Think about how alerts will be delivered—via email, Slack, or a dashboard—and what information they should contain. The goal is to give your team the context they need to investigate and resolve issues quickly, turning data points into decisive action.
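One common way to keep alerts actionable is to suppress repeats of the same alert within a cooldown period. The sketch below assumes a hypothetical `AlertThrottle` class and an alert key made of a device and a metric; the 300-second cooldown is arbitrary.

```python
class AlertThrottle:
    """Suppress repeat alerts for the same key inside a cooldown window."""

    def __init__(self, cooldown_s: float):
        self.cooldown_s = cooldown_s
        self._last_sent = {}  # alert key -> time the last alert was sent

    def should_send(self, key: str, now: float) -> bool:
        last = self._last_sent.get(key)
        if last is not None and now - last < self.cooldown_s:
            return False
        self._last_sent[key] = now
        return True


throttle = AlertThrottle(cooldown_s=300.0)

# Hypothetical alert keys: "<device>:<metric>".
decisions = [
    throttle.should_send("pump-7:vibration", now=0.0),
    throttle.should_send("pump-7:vibration", now=120.0),
    throttle.should_send("pump-9:vibration", now=120.0),
    throttle.should_send("pump-7:vibration", now=301.0),
]
print(decisions)
```

Choosing the key is the main design decision: too coarse and distinct problems hide behind one alert, too fine and the throttle suppresses nothing.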


4. Making it work with your existing systems

Your anomaly detection system shouldn't operate in a silo. To be truly effective, it needs to connect with the tools and workflows your team already uses. Whether it's a data visualization dashboard, a ticketing system, or an automated response platform, seamless integration is key. This allows your team to see alerts in context and act on them without having to switch between a dozen different screens.

5. Set up your model for continuous learning

The world isn't static, and neither is your data. New patterns will emerge, and what’s considered "normal" today might be an anomaly tomorrow. The most effective systems are designed to adapt. This means your model needs to be retrained regularly with new data to stay accurate. Combining machine learning with domain expertise from your team is a powerful approach. This ensures your model not only learns from the data but also incorporates real-world knowledge, helping it find the right signals and adapt to new patterns over time. Continuous learning turns your system from a static tool into a dynamic, intelligent partner.
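Full retraining depends on the model, but the underlying idea of a baseline that follows the data can be sketched with an exponentially weighted mean and variance. This is a lightweight stand-in for periodic retraining, not a replacement for it; the class name and the `alpha` value are assumptions.

```python
class EwmaBaseline:
    """Exponentially weighted running mean and variance of a stream."""

    def __init__(self, alpha: float = 0.1):
        self.alpha = alpha  # higher alpha forgets old data faster
        self.mean = None
        self.var = 0.0

    def update(self, x: float) -> None:
        if self.mean is None:
            self.mean = x
            return
        diff = x - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)


baseline = EwmaBaseline(alpha=0.1)

# Hypothetical metric whose normal level shifts from 10 to 20.
for x in [10.0] * 50 + [20.0] * 200:
    baseline.update(x)

print(round(baseline.mean, 3))
```

After the shift, the baseline settles at the new level without any manual intervention. The same property means a slow, genuine degradation can also be absorbed as "normal", which is one reason to pair automatic adaptation with review by domain experts.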

Where anomaly detection makes a difference

Anomaly detection is much more than a tool for spotting credit card fraud or network intrusions. Its real power lies in its versatility across various industries, especially in production environments where efficiency and reliability are everything. By continuously monitoring data streams, you can catch small issues before they become major problems, saving time, money, and headaches. From the factory floor to your global supply chain, real-time anomaly detection provides the insights you need to operate more intelligently.

Implementing these systems requires a solid foundation to handle the data and run the models effectively. A comprehensive platform like Cake can manage the entire AI stack, from the compute infrastructure to the pre-built project components, making it easier to get these powerful applications up and running. Let’s look at some of the most impactful ways you can put anomaly detection to work.

1. Predictive maintenance

Imagine being able to fix a critical piece of machinery shortly before it would have broken down. That's the goal of predictive maintenance. Industrial equipment is often fitted with sensors that generate constant streams of data about temperature, vibration, and performance. Anomaly detection models can analyze this data in real time to find subtle patterns that signal an impending failure. This allows you to schedule repairs proactively, minimizing unexpected downtime and extending the life of your equipment. It's a shift from reacting to problems to preventing them.

2. Quality control systems

In manufacturing, maintaining product quality is non-negotiable. Anomaly detection can be integrated directly into your production line to monitor quality control. By analyzing images or sensor data from products as they are being made, the system can instantly flag items that don't meet quality standards. This real-time feedback helps you find problems and their root causes right away, so you can make adjustments on the fly. This not only reduces waste but also ensures that only top-quality products reach your customers, protecting your brand's reputation.

3. Supply chain monitoring

A modern supply chain is a complex web of logistics, and a single disruption can have a ripple effect. Anomaly detection helps you keep a close eye on every moving part. By monitoring data related to shipping times, inventory levels, and carrier performance, you can spot deviations from the norm. For example, the system could alert you to a shipment that’s unexpectedly delayed or a warehouse that’s running low on a key item. This allows you to identify potential disruptions early and take action to keep your operations running smoothly.

4. Energy consumption

For businesses with large physical footprints like factories or data centers, energy costs can be substantial. Anomaly detection offers a smart way to manage and reduce this expense. By analyzing real-time energy usage data, you can identify unusual spikes or patterns that indicate inefficiency, such as equipment running unnecessarily or a faulty HVAC system. This allows you to make immediate adjustments to optimize usage and reduce costs. Over time, these small optimizations can add up to significant savings and a more sustainable operation.

5. IoT device monitoring

The Internet of Things (IoT) has connected everything from industrial sensors to consumer gadgets, all generating massive amounts of data. Anomaly detection is essential for managing these device networks. It can monitor the health and performance of each device, flagging any that go offline or start behaving erratically. Any system that creates a continuous stream of data can benefit from this kind of monitoring. This ensures your IoT ecosystem is reliable, secure, and functioning as intended, whether you’re managing a smart factory or a fleet of delivery drones.


6. Fraud detection

When it comes to finance, every second counts. Real-time anomaly detection acts as a critical line of defense against fraud by constantly analyzing transaction data. These systems get to know an individual’s normal spending habits—what they buy, where they shop, and how much they typically spend. If a transaction suddenly deviates from that established pattern, like a large purchase in a different country, the system instantly flags it as suspicious. This allows financial institutions to prevent fraudulent transactions before they’re even completed, protecting both the customer and the business from potential losses.

7. Cybersecurity

Think of anomaly detection as a vigilant security guard for your computer networks. In cybersecurity, the system continuously monitors network traffic and user behavior to build a baseline of what’s normal. It’s always on the lookout for unusual activity that could signal a threat—like an employee trying to access sensitive files at an odd hour or a sudden surge of data being sent to an external server. By catching these anomalies the moment they happen, organizations can address potential security breaches before significant damage is done. This proactive approach is essential for protecting sensitive data and keeping your digital infrastructure secure.

How to solve common implementation challenges

Putting a real-time anomaly detection system into production is a huge step, but it’s not without its hurdles. You’re dealing with live data, complex models, and the pressure of delivering immediate value. It’s completely normal to run into a few bumps along the way. The key is to anticipate these challenges so you can build a system that’s not just powerful, but also resilient and efficient.

From handling massive data streams to making sure your model doesn’t become obsolete, each stage has its own set of problems to solve. Think of it less as a roadblock and more as a puzzle. With the right strategy, you can get through these common issues and create a system that runs smoothly. Let’s walk through some of the most frequent challenges and the practical steps you can take to overcome them.

How to handle high-speed data streams

Real-time data doesn’t wait for you to catch up. In a production environment, data flows in at an incredible speed, and your system needs to process it instantly to detect anomalies as they happen. If your architecture can't keep up, you risk missing critical events. The solution is to use frameworks designed specifically for this kind of velocity. You need a system that offers scalable, intelligent monitoring for time series data. This ensures you can analyze every data point without creating a bottleneck, maintaining both speed and accuracy.
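Whatever framework you choose, the per-point work has to stay cheap. As a rough sketch of what that can look like, the detector below keeps a running mean and variance with Welford's online algorithm, so each reading costs constant time and memory. The class name, the threshold of 3 standard deviations, and the warm-up of 10 points are assumptions for this example.

```python
import math


class StreamingZScore:
    """Constant-time, constant-memory z-score check over a stream."""

    def __init__(self, threshold=3.0, warmup=10):
        self.threshold = threshold
        self.warmup = warmup  # points to observe before flagging anything
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        """Score x against the points seen so far, then absorb it."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                is_anomaly = True
        # Welford update: no history is stored, only three numbers.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return is_anomaly


detector = StreamingZScore()
readings = [10.0, 10.2, 9.8, 10.1, 9.9] * 4 + [25.0]
flags = [detector.update(x) for x in readings]
```

Note that this sketch folds anomalous points into the running statistics too; a production system might choose to exclude them.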

Keeping your data clean and reliable

Your anomaly detection model is only as good as the data you feed it. In the real world, data is often messy—it can be incomplete, inconsistent, or full of errors. Trying to find anomalies in poor-quality data is like looking for a needle in a haystack full of other needles. Handling missing data is one of the biggest challenges you'll face. Before you even think about training a model, establish a robust data preparation pipeline. This means cleaning, validating, and standardizing your data to ensure its integrity. A reliable system is built on a foundation of clean data.
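A data preparation step can start very small. The sketch below validates a list of sensor readings and forward-fills gaps with the last valid value. The function name, the valid range, and the sample readings are hypothetical, and forward-filling is only one of several ways to handle missing data.

```python
import math


def clean_readings(readings, lo, hi):
    """Replace missing or out-of-range values with the last valid reading."""
    cleaned, repaired, last_valid = [], 0, None
    for value in readings:
        valid = (
            isinstance(value, (int, float))
            and not math.isnan(value)
            and lo <= value <= hi
        )
        if valid:
            last_valid = float(value)
            cleaned.append(last_valid)
        elif last_valid is not None:
            cleaned.append(last_valid)  # forward-fill the gap
            repaired += 1
        # Leading invalid values have nothing to fill from, so they are dropped.
    return cleaned, repaired


# Assumed physical range for a temperature sensor: -40 to 85 degrees.
raw = [None, 21.5, float("nan"), 22.0, -999.0, 22.4]
cleaned, repaired = clean_readings(raw, lo=-40.0, hi=85.0)
```

Keep the `repaired` count: a sensor that suddenly needs many repairs may itself be the anomaly worth alerting on.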

How to build an adaptable model

The patterns in your data will change over time. What’s considered normal today might be an anomaly tomorrow. If your model is static, its performance will degrade as it becomes less relevant. This is why your system needs to be adaptive. You need a model that can learn continuously and adjust to new patterns as they emerge. A data-driven approach allows for the online detection of anomalies, which means your system can proactively spot deviations and even help identify their root causes. This keeps your model sharp and your detections relevant.
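One lightweight way to get this kind of adaptivity is an exponentially weighted baseline, where recent points count more than old ones. The sketch below is a simplified stand-in for a continuously learning model, not a full one; the class name and the values of `alpha`, `threshold`, and `warmup` are assumptions.

```python
import math


class AdaptiveBaseline:
    """Exponentially weighted mean and variance that drift with the data."""

    def __init__(self, alpha=0.3, threshold=3.0, warmup=5):
        self.alpha = alpha  # higher alpha forgets old behavior faster
        self.threshold = threshold
        self.warmup = warmup
        self.mean = None
        self.var = 0.0
        self.seen = 0

    def update(self, x):
        if self.mean is None:
            # The first point only initializes the baseline.
            self.mean, self.seen = x, 1
            return False
        deviation = x - self.mean
        std = math.sqrt(self.var)
        is_anomaly = (
            self.seen >= self.warmup
            and std > 0
            and abs(deviation) > self.threshold * std
        )
        # Move the baseline toward the new point so "normal" can shift.
        self.mean += self.alpha * deviation
        self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
        self.seen += 1
        return is_anomaly


baseline = AdaptiveBaseline()
stream = [10.0, 10.5, 9.5] * 10 + [20.0, 20.5, 19.5] * 10  # level shifts at index 30
flags = [baseline.update(x) for x in stream]
```

In this toy stream the jump at index 30 is flagged once, and after a few dozen points the new level is treated as normal. How quickly that should happen is a judgment call encoded in `alpha`.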

How to scale your system as you grow

A system that works perfectly with a small dataset might fail when faced with a full production load. Scalability isn't an afterthought—it's something you need to plan for from day one. As your business grows, your data volume will, too. Your anomaly detection system must be able to handle this increase without a drop in performance. Following a structured deployment checklist that covers everything from data preparation to algorithm selection can help you build a scalable architecture. This ensures your system is ready for future growth.

Making the most of your resources

Running sophisticated machine learning models in real time can be computationally expensive, which translates to higher costs. The goal is to find the sweet spot between detection accuracy and resource consumption. You don’t always need the most complex model to get the job done. Often, the most effective systems use a hybrid approach, combining statistical methods with machine learning and domain expertise. This strategy leads to more efficient resource utilization, allowing you to maintain high accuracy without breaking the bank on infrastructure.
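A hybrid setup can be sketched as a pipeline of increasingly expensive checks: a domain rule first, then a cheap statistical gate, and only then the costly model. Everything named here is hypothetical, including `expensive_score`, which stands in for whatever machine learning model you run.

```python
def detect(readings, mean, std, expensive_score,
           in_maintenance=lambda i: False, gate_z=2.0, score_cutoff=0.5):
    """Return alert indices and the number of expensive model calls made."""
    alerts, model_calls = [], 0
    for i, value in enumerate(readings):
        if in_maintenance(i):
            continue  # domain rule: planned downtime is not an anomaly
        if abs(value - mean) <= gate_z * std:
            continue  # statistical gate: clearly normal, skip the model
        model_calls += 1
        if expensive_score(value) > score_cutoff:
            alerts.append(i)
    return alerts, model_calls


def toy_model(value):
    # Stand-in for a costly ML model; returns a score between 0 and 1.
    return min(1.0, abs(value - 50) / 40)


readings = [50, 52, 49, 95, 51, 58, 48, 5]
alerts, model_calls = detect(
    readings, mean=50, std=3, expensive_score=toy_model,
    in_maintenance=lambda i: i == 7,  # assume index 7 is a maintenance window
)
```

Here the model runs on only two of the eight readings, which is where the resource savings come from.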

How to know if your system is effective

Once your real-time anomaly detection system is up and running, the work isn’t over. The next crucial step is to figure out if it’s actually doing its job well. An effective system isn't just about catching oddities; it's about catching the right oddities, quickly, without crying wolf every five minutes. So, how do you know if all your hard work is paying off? It comes down to continuous monitoring and measurement.

Think of it like a health checkup for your system. You need to look at a few key vital signs to understand its performance and value. This means going beyond a simple "it's working" and digging into specific metrics that tell the full story. You’ll want to assess its accuracy to ensure it’s reliable, check its speed to confirm it’s truly "real-time," and monitor its alert quality to make sure it’s not overwhelming your team. On top of that, you need to be sure it’s providing a solid return on investment and running efficiently. By regularly evaluating these areas, you can fine-tune your models, justify the resources, and build trust in the system’s outputs.

Focus on the right accuracy metrics

When we talk about accuracy, it’s not as simple as a single percentage. Because anomalies are rare by nature, raw accuracy is misleading: if only one event in a thousand is anomalous, a model that never flags anything is 99.9% "accurate" but completely useless. Instead, track precision (what percentage of alerts are actual anomalies?) and recall (what percentage of actual anomalies did you catch?). Balancing these two is often the main goal. The F1-score, the harmonic mean of precision and recall, captures this balance in a single number, giving you a more holistic view of your model's effectiveness.
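These three metrics are straightforward to compute from labeled outcomes. The sketch below uses made-up labels (1 for anomaly, 0 for normal); the function name is an assumption.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1 from binary labels and predictions."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)      # real anomalies we flagged
    fp = sum(1 for t, p in pairs if not t and p)  # false alarms
    fn = sum(1 for t, p in pairs if t and not p)  # anomalies we missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 1, 0, 1, 1, 1, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)

# A detector that never flags anything scores zero on all three metrics.
silent = precision_recall_f1(y_true, [0] * len(y_true))
```

With these sample labels there are three true positives, one false positive, and one missed anomaly, so precision, recall, and F1 all come out to 0.75.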

Why response time is a key metric

The "real-time" in real-time anomaly detection is its biggest selling point. If your system takes too long to spot and flag an issue, you lose the opportunity to act on it before it causes damage. A slow system can be the difference between preventing a major outage and just reporting on it after the fact. You should measure the latency from the moment data enters the system to the moment an alert is generated. This immediate identification of unusual patterns is what allows you to respond before problems escalate. Consistently monitoring this response time ensures your system is living up to its promise of speed and giving your team enough time to react.
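If each alert records when its data arrived and when the alert fired, you can summarize latency with percentiles rather than an average, which hides slow outliers. This is a minimal sketch; the timestamp pairs are invented, and the nearest-rank percentile is just one common convention.

```python
import math


def percentile(sorted_values, p):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = math.ceil(p * len(sorted_values) / 100)
    return sorted_values[max(rank, 1) - 1]


def latency_summary(events):
    """events: (ingested_ms, alerted_ms) pairs, both in integer milliseconds."""
    latencies = sorted(alerted - ingested for ingested, alerted in events)
    return {
        "p50": percentile(latencies, 50),
        "p95": percentile(latencies, 95),
        "max": latencies[-1],
    }


# Hypothetical timestamps for ten alerts.
events = [
    (0, 12), (100, 115), (200, 211), (300, 540), (400, 414),
    (500, 513), (600, 616), (700, 712), (800, 818), (900, 914),
]
summary = latency_summary(events)
```

In this sample the median looks healthy at 14 ms, while the 95th percentile exposes a 240 ms straggler that an average would blur.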

How to keep false positives in check

Nothing will cause your team to ignore a system faster than a constant stream of false alarms. A false positive is when the system flags normal activity as an anomaly. While you want a sensitive system, one that’s too sensitive creates "alert fatigue," and important notifications get lost in the noise. It’s a tricky balance, because tightening the rules to reduce false positives might cause you to miss real threats (false negatives). Evaluating anomaly detection algorithms for your specific purpose is a complex task, and managing this trade-off is a continuous process. Regularly review flagged events with your team to fine-tune your detection thresholds and keep the alerts meaningful.
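A review session becomes more productive when you can see the trade-off in numbers. The sketch below counts false positives and false negatives at several candidate thresholds, assuming your team has labeled a sample of past events. The scores, labels, and thresholds are invented for illustration.

```python
def sweep_thresholds(scores, labels, thresholds):
    """Return (threshold, false_positives, false_negatives) for each candidate."""
    rows = []
    for t in thresholds:
        # An event is flagged when its anomaly score reaches the threshold.
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and not y)
        fn = sum(1 for s, y in zip(scores, labels) if s < t and y)
        rows.append((t, fp, fn))
    return rows


scores = [0.1, 0.2, 0.35, 0.4, 0.55, 0.6, 0.8, 0.9]  # model anomaly scores
labels = [0, 0, 0, 1, 0, 1, 1, 1]                    # 1 = confirmed anomaly
rows = sweep_thresholds(scores, labels, [0.3, 0.5, 0.7])
```

On this sample, raising the threshold from 0.3 to 0.7 removes both false alarms but misses two real anomalies, which is exactly the trade-off described above.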

Is your system actually saving you money?

An anomaly detection system is an investment, and you need to know if it's paying off. The goal is for the value it provides to outweigh its operational costs. This value can be measured in several ways. On one hand, it’s about preventing losses, such as stopping fraudulent transactions, avoiding equipment failures, or preventing security breaches. On the other hand, it can help you seize revenue opportunities, such as identifying a sudden spike in product demand. To estimate its return on investment, compare the costs of running the system (infrastructure, maintenance, team hours) against the tangible financial benefits it delivers. A clear number makes it easier to justify continued development and resource allocation.
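The comparison itself is simple arithmetic. Here is a sketch with entirely hypothetical annual figures; the function and parameter names are assumptions, and the hard part in practice is estimating the benefit side honestly.

```python
def return_on_investment(prevented_losses, captured_revenue,
                         infrastructure, maintenance, team_hours, hourly_rate):
    """Net benefit divided by total cost, over the same time period."""
    cost = infrastructure + maintenance + team_hours * hourly_rate
    benefit = prevented_losses + captured_revenue
    return (benefit - cost) / cost


roi = return_on_investment(
    prevented_losses=120_000,  # e.g. avoided downtime and blocked fraud
    captured_revenue=30_000,   # e.g. demand spikes acted on in time
    infrastructure=40_000,
    maintenance=10_000,
    team_hours=500,
    hourly_rate=100,
)
```

With these made-up numbers the system costs 100,000 and delivers 150,000, for a return of 0.5, or 50%.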

Fine-tuning your system for better performance

Beyond the quality of its detections, your system needs to run efficiently. This means keeping an eye on its technical performance, including CPU and memory usage, data processing throughput, and model inference speed. A system that consumes too many resources can become expensive and create bottlenecks that slow down other critical processes. As your data volume grows, you need to ensure your system can scale with it without a drop in performance. Implementing scalable, intelligent monitoring for your anomaly detection system itself is key to long-term success. Regular performance tuning will help you maintain a responsive and cost-effective solution that’s ready for future challenges.

What's next for anomaly detection?

Anomaly detection isn't standing still. As technology evolves, so do the methods we use to spot irregularities in our data. The systems we rely on are becoming more complex, generating data at a pace we've never seen before. This means the future of anomaly detection is all about becoming smarter, faster, and more autonomous. It’s moving from simply flagging a problem to predicting it and even responding to it automatically. For businesses, staying ahead of these trends is key to maintaining security, efficiency, and a competitive edge.

The next wave of innovation is focused on a few key areas. We're seeing more advanced AI that can understand incredibly complex patterns, a major shift toward processing data directly on devices instead of in the cloud, and a relentless push for faster, more immediate processing. On top of that, the goal is no longer just to send an alert but to trigger an intelligent, automated response. These advancements are changing what’s possible, turning anomaly detection into a proactive and essential part of any modern operation. Companies like Cake are helping businesses manage this entire stack, making it easier to adopt these next-generation capabilities.

Smarter detection with advanced AI

The next generation of anomaly detection is powered by far more sophisticated AI. We're moving beyond traditional statistical methods and into the realm of deep learning. As one report notes, "Implementing deep learning models like autoencoders and RNNs enhances the capability to detect anomalies in complex and high-dimensional data." In simple terms, this means AI can now learn the normal operational patterns of a system in incredible detail, even when dealing with thousands of variables. This allows it to spot subtle deviations that a human or a simpler algorithm would likely miss, making it well suited to identifying sophisticated cyber threats or faint signals of equipment failure.

Why detection is moving to the edge

Another major shift is happening in where data gets processed. Instead of sending every piece of data to a central cloud server for analysis, more processing is happening at the "edge"—directly on or near the devices where the data is generated. This is crucial for systems that can't afford any delays. For an autonomous vehicle or a critical piece of factory machinery, detecting an anomaly instantly can be the difference between a minor adjustment and a major failure. Edge computing makes that immediate response possible.

Getting insights even faster

The sheer volume and velocity of data today demand incredible speed. The future of anomaly detection lies in systems that can ingest and analyze massive data streams without delay. This isn't just about having powerful hardware; it's about designing efficient algorithms and infrastructure that can keep up. This is especially critical in fields like cybersecurity, where new threats can emerge in seconds. The goal is to close the gap between when an event happens and when you detect it, making your systems more secure and resilient.

Beyond detection: smarter automated responses

Finding an anomaly is only half the battle. The real evolution is in what happens next. Future systems won't just send an alert that a human needs to investigate; they'll trigger an immediate and intelligent response. This is a move from passive detection to active remediation. For example, if a system detects unusual network traffic, it could automatically isolate the affected device to prevent a potential threat from spreading. This allows organizations to "detect anomalies and respond to threats faster than traditional security systems." By automating the initial response, you can contain problems instantly and free up your team to focus on strategic analysis rather than constant firefighting.
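As a rough illustration of active remediation, the sketch below isolates a device automatically only when the anomaly score is very high and otherwise escalates to a human. The `isolate` and `notify` callbacks, the 0.9 cutoff, and the device names are all hypothetical; in a real deployment they would wrap your network and alerting tools.

```python
def respond(anomaly, isolate, notify, auto_threshold=0.9):
    """Contain high-confidence anomalies automatically; escalate the rest."""
    device = anomaly["device_id"]
    score = anomaly["score"]
    if score >= auto_threshold:
        isolate(device)  # hypothetical hook, e.g. quarantine the device
        notify(f"Isolated {device} automatically (score {score:.2f})")
        return "isolated"
    notify(f"Review {device} (score {score:.2f})")
    return "escalated"


isolated, messages = [], []
outcome_high = respond({"device_id": "cam-12", "score": 0.97},
                       isolated.append, messages.append)
outcome_low = respond({"device_id": "cam-07", "score": 0.62},
                      isolated.append, messages.append)
```

Gating automation on confidence is a design choice: it limits the damage a false positive can do while still containing the clearest threats immediately.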

Frequently asked questions

What’s the real difference between this and just looking at weekly reports?

Think of it as the difference between a smoke detector and a fire investigation report. A weekly report tells you what already happened, forcing you to react to problems after the fact. Real-time anomaly detection is your smoke detector—it alerts you the moment something unusual occurs, giving you the chance to prevent a small issue from becoming a major crisis. It’s about being proactive and solving problems as they happen, not days later.

Do I need a team of data scientists to build an anomaly detection system?

Not necessarily. While the underlying technology is complex, you don't have to build everything from scratch. Many platforms and tools are designed to handle the heavy lifting, from managing the data infrastructure to providing pre-built models. Your team's domain expertise is actually the most critical ingredient, as they understand what "normal" looks like for your business. The key is to find a solution that lets you focus on your business logic, not on managing complex AI stacks.

How do I keep my team from being overwhelmed by false alarms?

This is a huge and very valid concern. The goal is to find the right balance between sensitivity and noise. You can achieve this by starting with a conservative alert threshold and fine-tuning it over time based on feedback from your team. It's also crucial to create smart alerts that provide context, so your team can quickly decide if something needs immediate attention. The system should learn and adapt, becoming more accurate as it processes more of your data.

Is this kind of system only useful for tech companies or finance?

Absolutely not. While it’s famous for fraud detection, some of the most powerful applications are in industries like manufacturing, logistics, and energy. Any business that has a continuous stream of operational data can benefit. It can be used to predict when a machine on the factory floor will fail, spot a quality control issue on a production line, or identify an inefficiency in your supply chain.

How long does it take for a new system to become effective?

An anomaly detection system gets smarter over time, so it won't be perfect on day one. It needs a period to learn the unique patterns of your data and establish a reliable baseline of what's normal. You can expect an initial tuning phase where you and your team work with the system to refine its thresholds and reduce false positives. The real value emerges as the model continuously learns, adapting to the natural rhythm of your operations.
