Predicting Patient Outcomes Using the Industry’s Largest Cancer Imaging Dataset

Cake supports a leading computational imaging company that uses AI trained on the industry’s largest cancer imaging dataset to advance precision medicine. Its models help predict patient outcomes, measure treatment effects more accurately, and bring new therapies to market sooner.

Key takeaways

- Launched a production system in 3 weeks using Cake’s managed open-source AI stack

- Hundreds of terabytes of sensitive data orchestrated with federated learning across cloud and on-prem environments

- Saved the equivalent of 3–4 AI engineering FTEs, avoiding months of hiring and onboarding

Accelerating oncology research with distributed AI

This company’s ML teams (data engineers, ML scientists, and MLOps engineers) partner with major health systems to produce cutting-edge oncology research. Their AI models are already used in clinical trials. Cake serves as the company’s MLOps platform, enabling secure, distributed development across highly sensitive environments.

“Cake is a great way for us to scale our MLOps and has been a source of significant cost savings, time savings – and frankly – headache savings,” said their Head of AI.

Federated learning and AI with sensitive healthcare data

“Our solution is probably one of the hardest implementations, with many different cloud environments and Slurm clusters in addition to Kubernetes. If Cake can do a good job here, you know they can do it anywhere.”

—Head of AI, Medical Imaging Company

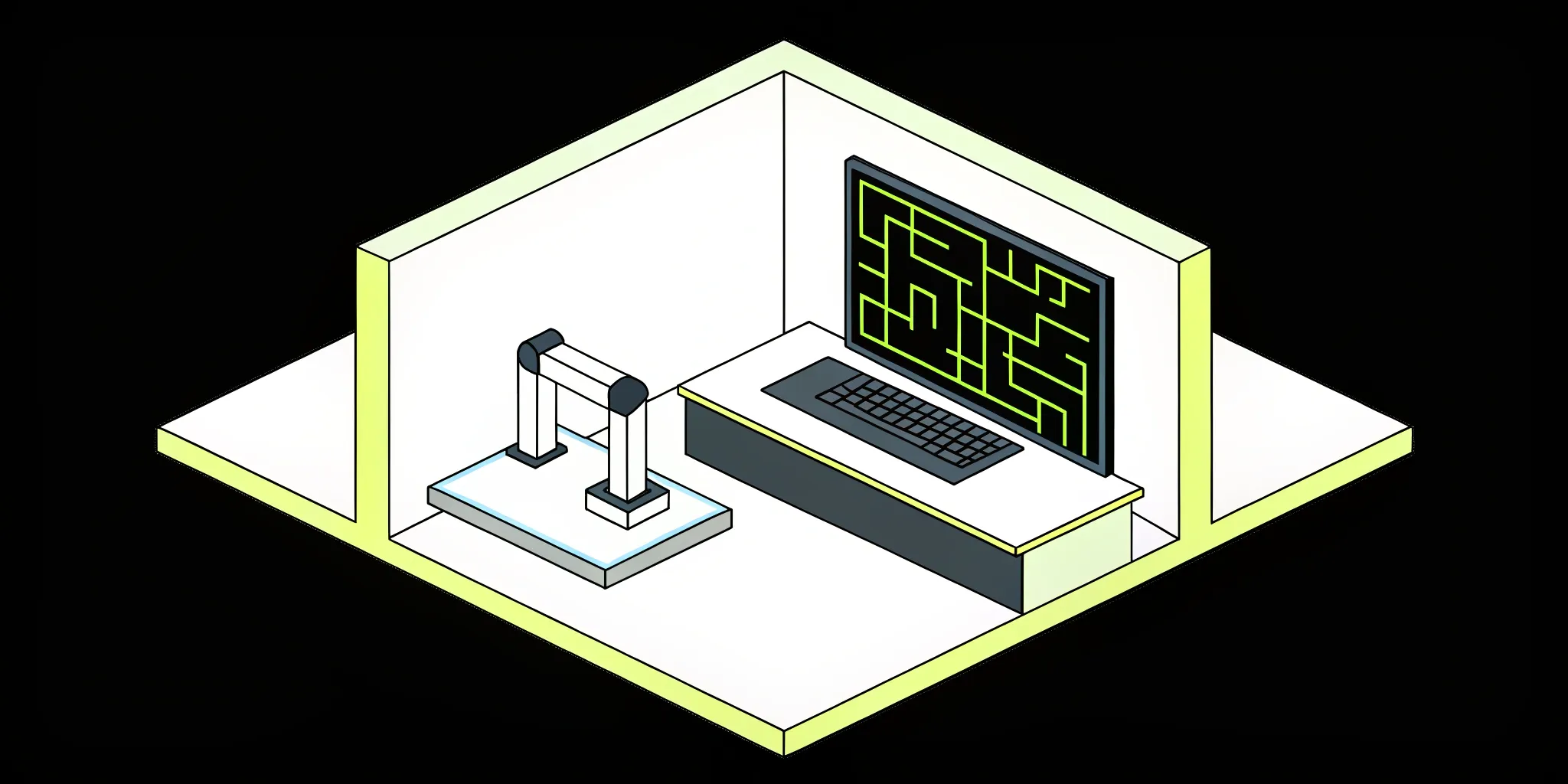

Training state-of-the-art models in healthcare requires extremely specific datasets and patient cohorts. In oncology, this often means working with tens of millions of images across multiple health systems. The company prioritizes security: data never leaves partner sites, and only de-identified data is used.

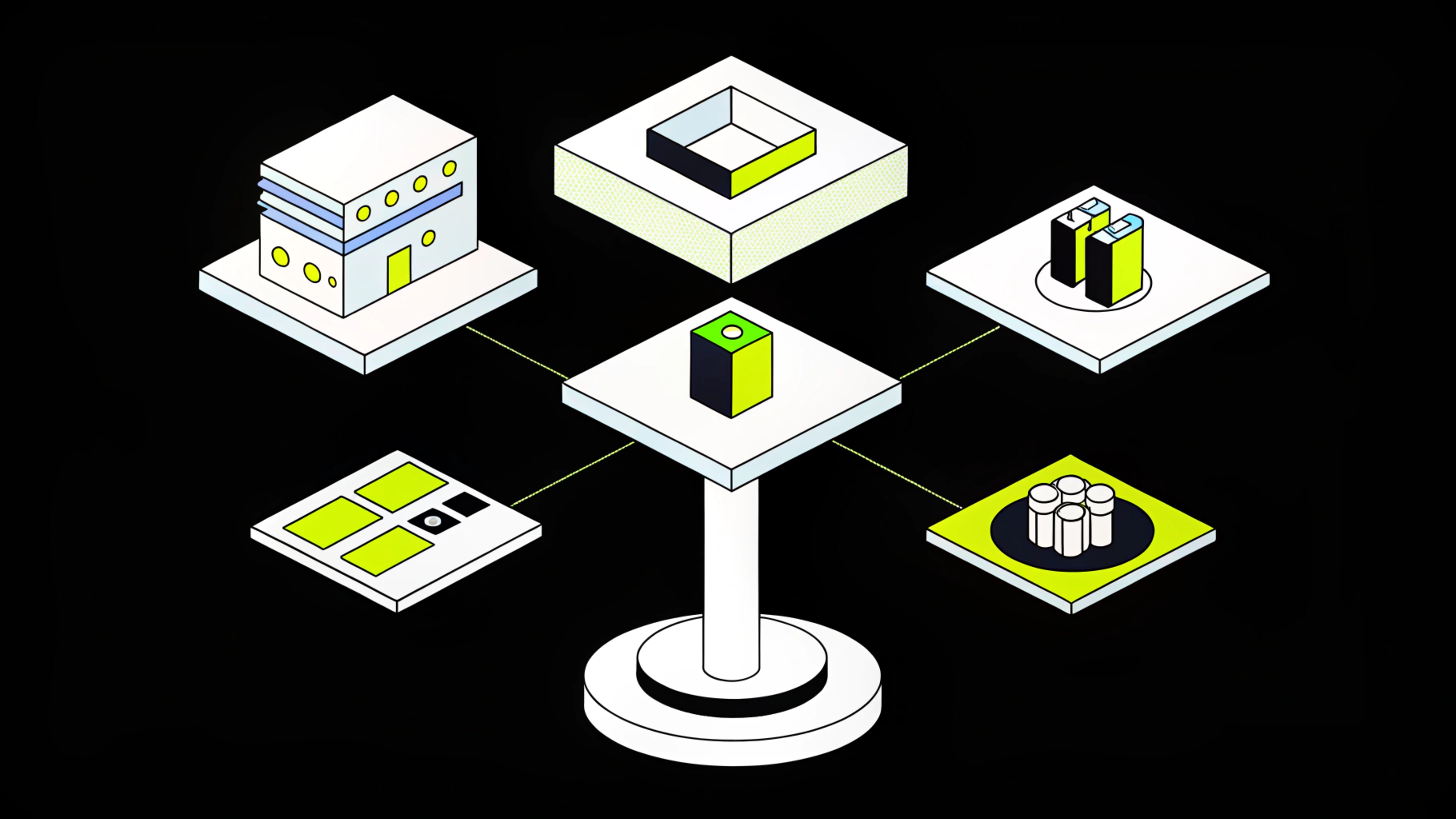

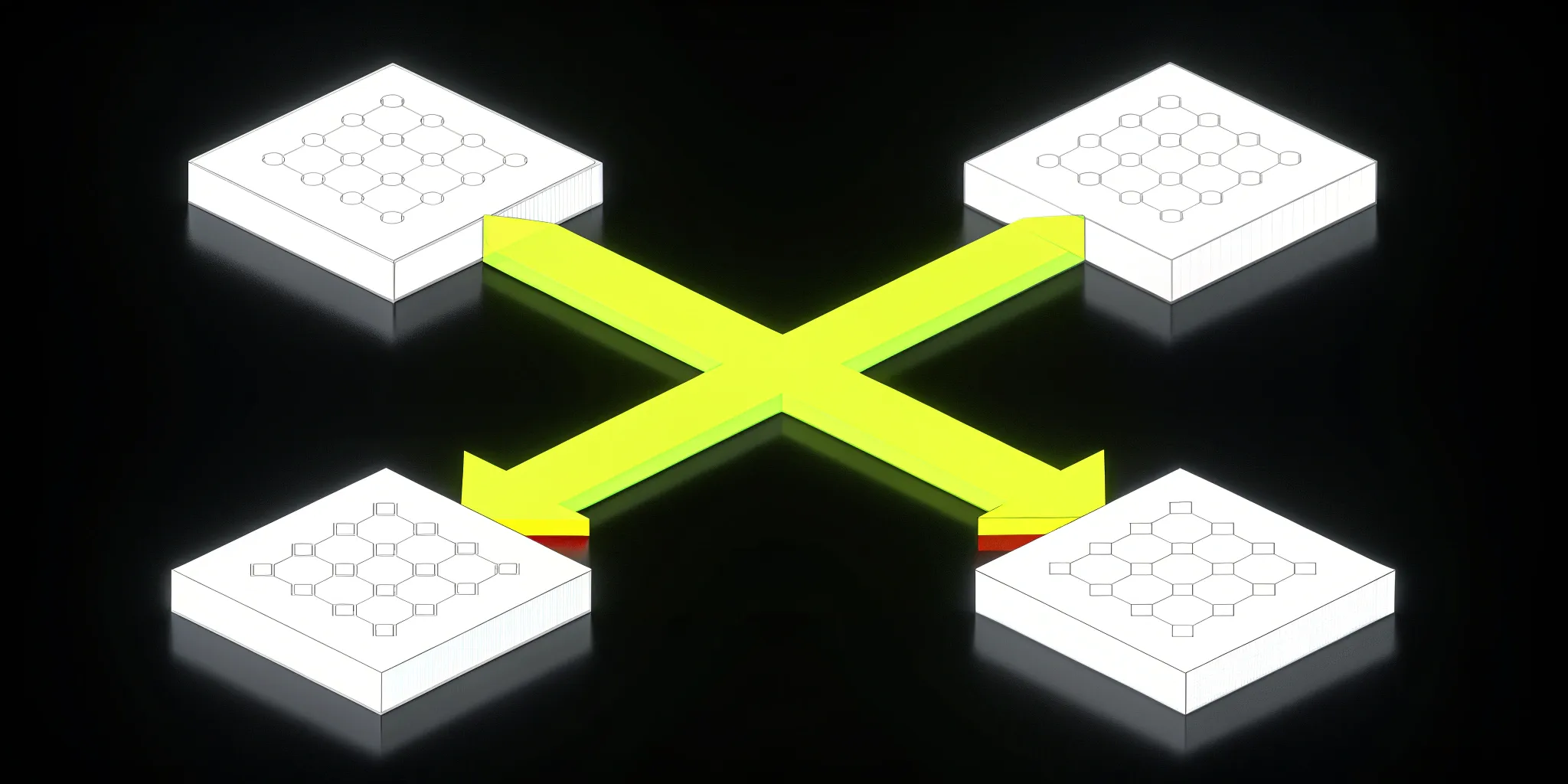

This effort required a federated learning setup in which no data leaves hospital environments, and only aggregate statistics and gradients are shared. This provides strong security guarantees but introduces significant engineering complexity. The infrastructure must be cloud-agnostic and support a mix of Kubernetes, Slurm clusters, and multiple cloud providers, while also managing hundreds of terabytes of imaging data.
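To make the "only gradients are shared" idea concrete, here is a minimal sketch of federated gradient averaging (often called FedSGD) on a toy linear model. It is not the company’s or Cake’s implementation: the `Site` class, `federated_round`, `train`, and the synthetic "hospital" data are all hypothetical. Each site computes a gradient over its own records and returns only that gradient plus a sample count; the coordinator combines them without ever receiving a raw record.

```python
"""Hypothetical federated gradient-averaging (FedSGD-style) sketch.

Each site holds private (x, y) pairs for a 1-D linear model y ~ w*x + b.
Sites return only a gradient and a sample count; the coordinator never
reads another site's records. In this toy, all "sites" live in one process.
"""
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Site:
    name: str
    # In a real deployment these records would stay on-site; here they are
    # simply only read inside this object's own methods.
    data: List[Tuple[float, float]]

    def local_gradient(self, w: float, b: float) -> Tuple[float, float, int]:
        """Mean-squared-error gradient computed over this site's data only."""
        n = len(self.data)
        gw = sum(2 * (w * x + b - y) * x for x, y in self.data) / n
        gb = sum(2 * (w * x + b - y) for x, y in self.data) / n
        return gw, gb, n  # aggregates only, no raw records


def federated_round(sites: List[Site], w: float, b: float, lr: float) -> Tuple[float, float]:
    """One round: collect per-site gradients, weight by sample count, take a step."""
    updates = [site.local_gradient(w, b) for site in sites]
    total = sum(n for _, _, n in updates)
    gw = sum(g * n for g, _, n in updates) / total
    gb = sum(g * n for _, g, n in updates) / total
    return w - lr * gw, b - lr * gb


def train(sites: List[Site], rounds: int = 1000, lr: float = 0.05) -> Tuple[float, float]:
    """Run repeated federated rounds starting from w = b = 0."""
    w, b = 0.0, 0.0
    for _ in range(rounds):
        w, b = federated_round(sites, w, b, lr)
    return w, b


if __name__ == "__main__":
    # Synthetic data drawn from y = 3x + 1, split across two hypothetical hospitals.
    site_a = Site("hospital_a", [(x / 10, 3 * (x / 10) + 1) for x in range(0, 10)])
    site_b = Site("hospital_b", [(x / 10, 3 * (x / 10) + 1) for x in range(10, 30)])
    w, b = train([site_a, site_b])
    print(f"w={w:.3f}, b={b:.3f}")
```

Weighting each site’s gradient by its sample count makes every round equivalent to one gradient step on the pooled data; an unweighted average would overweight small sites.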

After evaluating several vendors, the company found that Cake was the only platform capable of supporting a large-scale federated learning deployment across both cloud and on-prem systems.

From zero to production in three weeks

This company selected Cake as its MLOps platform to tackle hybrid cloud/on-prem federated learning. As a first step in the partnership, Cake advised on which open-source technologies to include in the implementation, greatly reducing the time needed to test a series of alternative solutions.

For example, the team initially scoped one project around uptime and system redundancy. Cake recommended a set of technologies suited to a B2B healthcare use case, whose requirements differ from those of a typical B2C application. “The recommended approach has made it much easier for us to iterate quickly while shipping into a distributed environment.”

“It's one thing to stand up a collection of software components. It’s another thing to integrate the stack into what we're currently doing,” said their Head of AI. “Trusting and relying on Cake’s expertise has served us extremely well.”

The team used the Cake-managed open-source AI stack to build its systems from zero to one, deploying seamlessly into new environments across its partner healthcare systems.

“We went from having no inference pipelines, no orchestration – nothing – to having a full production pipeline in less than three weeks.”

— Head of AI, Medical Imaging Company

Building for the future with a small team

The team partners with Cake to offload AI/ML infrastructure management, freeing up internal resources for pipeline development and other work. As their Head of AI detailed, “Cake provides capacity that feels like at least three or four senior technical staff running our ML infrastructure – for less than the cost of a single engineer.”

Given the complexity of their multi-site deployment, the breadth of their open-source stack, and the sophistication of their models, hiring the right engineers is not just a cost question; it also means significant delays. As their Head of AI describes, “Without Cake, I would have had to hire people with the right experience, get them on board, trained, and up-to-speed on our product and where we are, and then get stuff spun up. We’re talking months – many months.”

From initial deployments across healthcare systems, to groundbreaking published research, to faster development of new treatments, this company and Cake look forward to deepening their partnership and pushing forward the frontier of AI-enabled precision medicine.

About Author

Cake Team
