Cake for Federated Learning

Train AI models across distributed datasets without centralizing sensitive data. Cake provides a modular, open-source stack for orchestrating federated learning across edge, partner, or multi-tenant environments.

Overview

In healthcare, finance, and multi-tenant SaaS, the data you need to train great models is often fragmented or protected by regulation. Federated learning solves this by training models where the data lives, without ever sharing raw inputs. But orchestrating federated workflows is complex without the right infrastructure.

Cake provides a modular, cloud-agnostic platform that simplifies federated learning at scale. Use open-source libraries like Flower or FedML to coordinate model updates, manage training jobs with Kubeflow Pipelines, and track performance centrally with MLflow and Evidently. You control the orchestration and observability, without compromising on security or compliance.

Because Cake is built from composable, open-source components, you can integrate the latest federated learning frameworks and adapt to evolving data-sharing agreements, while avoiding vendor lock-in and reducing infrastructure spend.

Key benefits

- Train on distributed data: Run training across edge devices, partners, or tenants without centralizing raw data.

- Maintain compliance and privacy: Meet data residency and governance requirements across all regions and clients.

- Use open-source FL frameworks: Integrate Flower, FedML, or custom aggregation logic seamlessly.

- Deploy across clouds or hybrid setups: Coordinate learning across on-prem, cloud, or multi-region environments.

- Track and improve global performance: Aggregate metrics, evaluate drift, and optimize collaboration over time.
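Aggregating metrics across participants generally means weighting each site's locally computed score by the number of examples it evaluated. That way a small site does not count as much as a large one. The plain-Python sketch below illustrates the idea under that assumption. `ClientReport` and `aggregate_metrics` are hypothetical names, not part of Cake, Flower, or FedML. Only summary numbers, never raw records, reach the aggregation step.

```python
from dataclasses import dataclass


@dataclass
class ClientReport:
    """Hypothetical summary a site sends back: metrics and counts, never raw records."""
    client_id: str
    num_examples: int
    accuracy: float


def aggregate_metrics(reports: list[ClientReport]) -> float:
    """Return accuracy averaged across sites, weighted by each site's example count."""
    total = sum(r.num_examples for r in reports)
    if total == 0:
        raise ValueError("no evaluation examples were reported")
    return sum(r.accuracy * r.num_examples for r in reports) / total


if __name__ == "__main__":
    reports = [
        ClientReport("site-a", num_examples=800, accuracy=0.90),
        ClientReport("site-b", num_examples=200, accuracy=0.70),
    ]
    print(f"global accuracy: {aggregate_metrics(reports):.2f}")  # global accuracy: 0.86
```

With these illustrative numbers, a plain unweighted mean would report 0.80. That would let the 200-example site pull the global figure as hard as the 800-example site.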

THE CAKE DIFFERENCE

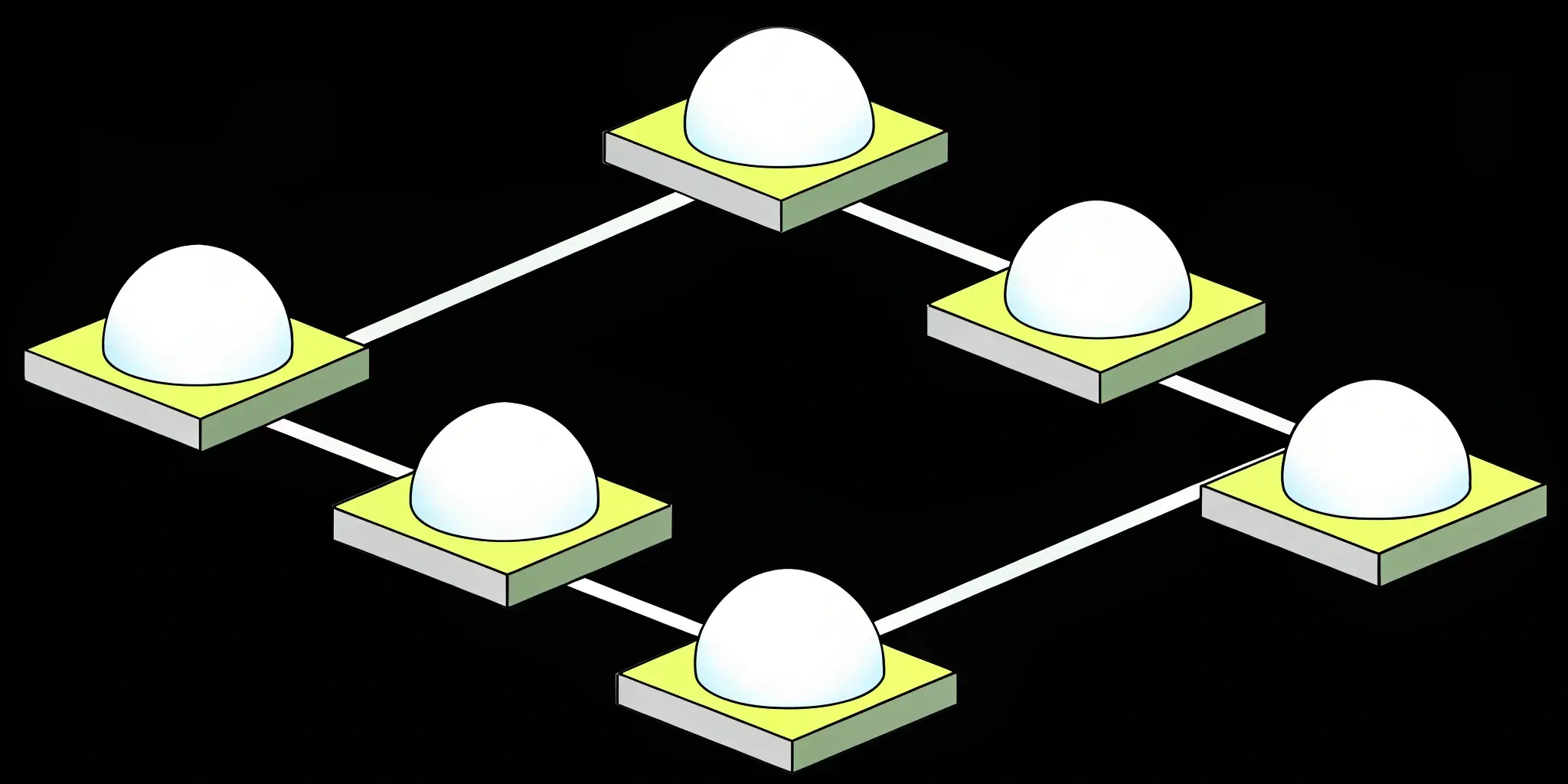

![]()

From centralized data risks to decentralized intelligence

Centralized training

High risk, high friction: Moving data into one place for training creates privacy concerns and logistical headaches.

- Requires transferring raw data across systems or orgs

- High compliance and regulatory risk in sensitive domains

- Difficult to scale across partners, hospitals, or edge locations

- No native support for collaboration without exposing data

Result:

Slow progress, security risk, and limited access to diverse data sources

Federated learning with Cake

Train smarter across distributed environments: Cake lets you build privacy-preserving learning pipelines with full control and observability.

- Train on distributed nodes without moving raw data

- Built-in support for model aggregation, drift detection, and evals

- Supports healthcare, finance, and edge deployment scenarios

- Secure, auditable, and compatible with open FL frameworks like Flower and FedML

Result:

Faster model development, stronger privacy, and access to more representative data

EXAMPLE USE CASES

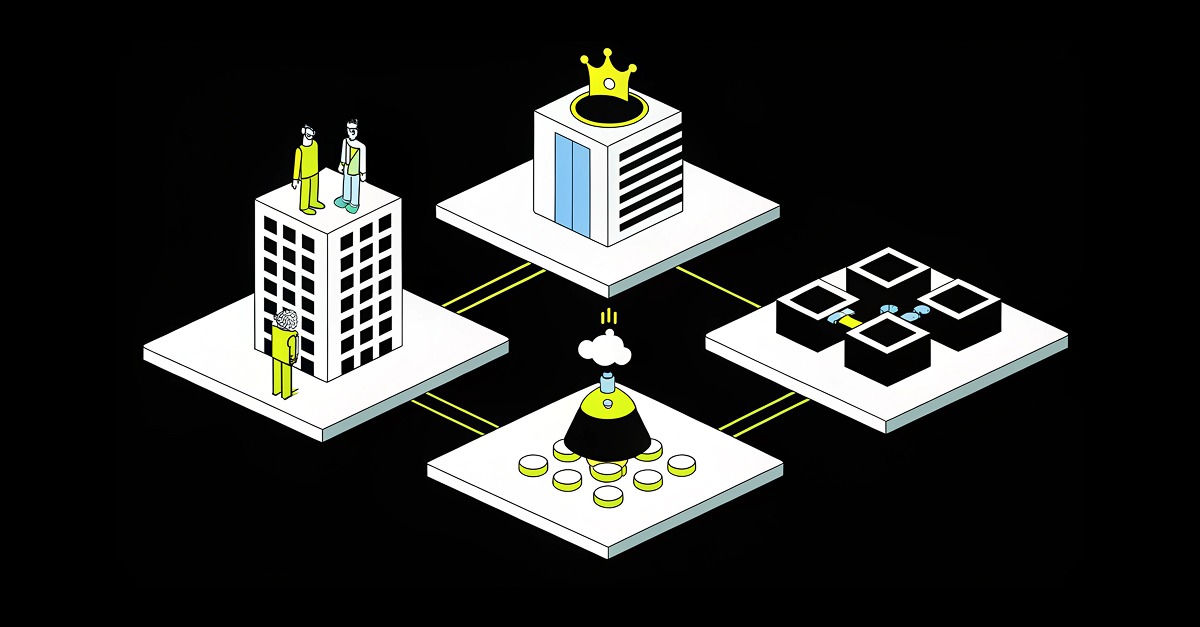

![]()

Teams use Cake’s federated learning stack to collaborate across silos while protecting sensitive data

Cross-hospital model training

Enable hospitals to collaboratively train diagnostic models without sharing patient records.

Multi-tenant SaaS analytics

Train personalization models per client without extracting or co-mingling datasets.

IoT intelligence

Train models directly on edge devices to improve performance and privacy without massive data transfer.

Pharmaceutical research across global trial sites

Enable drug companies to train models on trial data from multiple countries or institutions—without moving sensitive patient records across borders.

Cross-branch fraud detection in financial institutions

Allow regional banks or subsidiaries to contribute to fraud models without centralizing sensitive customer transaction data.

Personalized experiences on edge devices

Train models locally on user devices (e.g., phones, wearables) to support smart features like predictive text or health tracking without uploading personal data to the cloud.

HEALTHCARE AI

Protect patient privacy while advancing AI in healthcare

See how leading healthcare teams use federated learning to build smarter models without moving sensitive patient data. Cake provides the tools to collaborate across hospitals, labs, and research partners while staying secure and compliant.

BLOG

Top AI use cases reshaping financial services

From risk modeling to customer insights, AI is helping financial institutions move faster, stay compliant, and outpace the competition. Explore the most impactful applications in banking, insurance, and fintech.

"Our partnership with Cake has been a clear strategic choice – we're achieving the impact of two to three technical hires with the equivalent investment of half an FTE."

Scott Stafford

Chief Enterprise Architect at Ping

"With Cake we are conservatively saving at least half a million dollars purely on headcount."

CEO

InsureTech Company

COMPONENTS

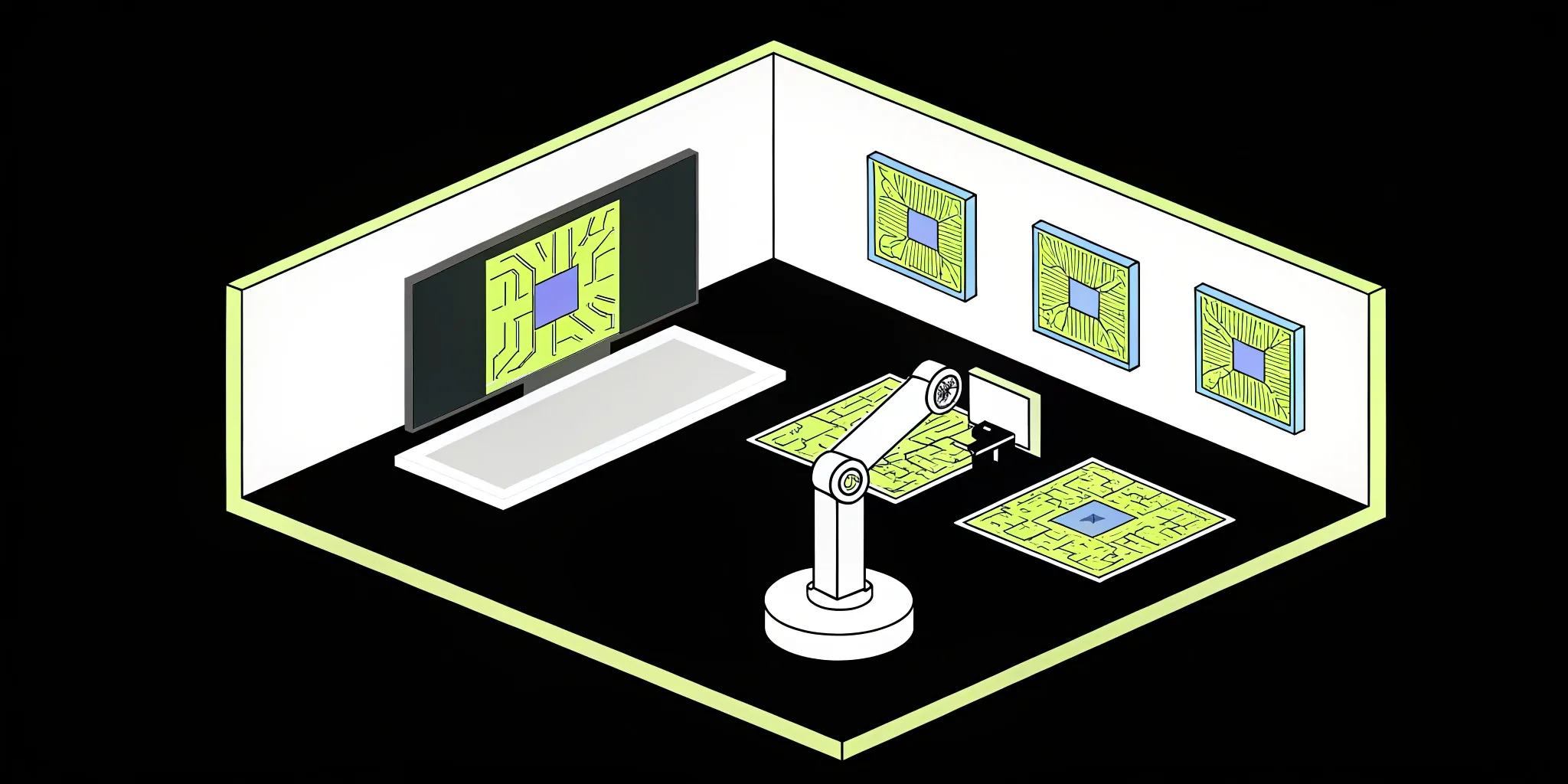

![]()

Tools that power Cake's federated learning stack

Ray Tune

Distributed Model Training & Model Formats

Pipelines and Workflows

Ray Tune is a Python library for distributed hyperparameter optimization, built on Ray’s scalable compute framework. With Cake, you can run Ray Tune experiments across any cloud or hybrid environment while automating orchestration, tracking results, and optimizing resource usage with minimal setup.

MLflow

Pipelines and Workflows

MLflow is an open-source platform for managing the machine learning lifecycle. Track ML experiments and manage your model registry at scale with Cake’s automated MLflow setup and integration.

Slurm

Cloud Compute & Storage

Slurm is an open-source job scheduler for high-performance computing environments. Cake integrates Slurm-managed compute clusters into AI workflows, automating resource allocation, scaling, and observability.

Kubeflow

Orchestration & Pipelines

Kubeflow is an open-source machine learning platform built on Kubernetes. Cake operationalizes Kubeflow deployments, automating model training, tuning, and serving while adding governance and observability.

PyTorch

ML Model Libraries

PyTorch is a widely used open-source machine learning framework known for its flexibility, dynamic computation graphs, and deep learning support.

Frequently asked questions

What is federated learning?

Federated learning is a machine learning approach where models are trained across multiple decentralized datasets without moving the raw data. Instead, model updates are shared and aggregated, keeping sensitive information local and secure.
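The plain-Python sketch below makes the "shared and aggregated" step concrete using federated averaging (FedAvg), one common aggregation scheme. It is not Cake's, Flower's, or FedML's implementation. It assumes a toy one-feature linear model and two hypothetical sites. The names `local_train`, `federated_average`, and `run_fedavg` are illustrative. Only parameter lists and example counts reach the aggregation step; each site's `(x, y)` pairs stay local.

```python
Params = list[float]  # [weight, bias] for a toy model y = w * x + b
Dataset = list[tuple[float, float]]  # (x, y) pairs that never leave a site


def local_train(params: Params, data: Dataset, lr: float = 0.05, epochs: int = 5) -> Params:
    """Run a few epochs of full-batch gradient descent on one site's private data."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return [w, b]


def federated_average(updates: list[tuple[Params, int]]) -> Params:
    """Average parameter vectors, weighting each site by its number of examples."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(p[i] * n for p, n in updates) / total for i in range(dim)]


def run_fedavg(sites: list[Dataset], rounds: int = 300) -> Params:
    """Repeat: broadcast global params, train locally at each site, aggregate the updates."""
    global_params: Params = [0.0, 0.0]
    for _ in range(rounds):
        updates = [(local_train(global_params, data), len(data)) for data in sites]
        global_params = federated_average(updates)
    return global_params


if __name__ == "__main__":
    # Two hypothetical sites whose private data both follow y = 2x + 1.
    site_a = [(x, 2 * x + 1) for x in (0.0, 0.5, 1.0)]
    site_b = [(x, 2 * x + 1) for x in (1.5, 2.0)]
    w, b = run_fedavg([site_a, site_b])
    print(f"w={w:.2f}, b={b:.2f}")  # w=2.00, b=1.00
```

Weighting by example count is the usual FedAvg choice. A different aggregation rule only requires replacing `federated_average`. The sketch leaves out networking, client scheduling, and security, which is the infrastructure a platform would need to supply.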

How does Cake support federated learning?

Cake provides the infrastructure and orchestration needed to run federated learning at scale. It integrates open-source frameworks with secure networking, compliance controls, and observability so teams can collaborate on AI without exposing raw data.

What are the benefits of federated learning for enterprises?

Federated learning enables organizations to collaborate across data silos, protect sensitive information, and meet compliance requirements while still training high-performance models. It is especially valuable in regulated industries like healthcare and financial services.

Can federated learning models perform as well as centralized models?

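Often, yes. With the right setup and sufficient data across participants, federated learning can reach accuracy comparable to centralized training while keeping raw data private and secure. How close it gets depends on factors such as how differently data is distributed across participants and how many local training steps run between aggregations.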
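One idealized case shows why comparable accuracy is achievable. Suppose every site takes a single full-batch gradient step from the same global parameters, and the server averages the results weighted by example count. The new global model then equals one step of centralized gradient descent on the pooled data; this variant is sometimes called FedSGD. The reason is that the weighted average of θ − η·g_k with weights n_k/N is θ − η·Σ(n_k/N)·g_k, and that sum is exactly the pooled mean gradient.

The plain-Python check below illustrates the identity on a toy linear model with hypothetical site data. The function names are illustrative. The identity stops holding exactly once sites run several local steps per round.

```python
Params = list[float]  # [weight, bias] for a toy model y = w * x + b
Dataset = list[tuple[float, float]]


def mse_gradient(params: Params, data: Dataset) -> Params:
    """Gradient of mean squared error for y = w * x + b over the given examples."""
    w, b = params
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return [grad_w, grad_b]


def gradient_step(params: Params, data: Dataset, lr: float) -> Params:
    """One full-batch gradient-descent step."""
    grad = mse_gradient(params, data)
    return [p - lr * g for p, g in zip(params, grad)]


def fedsgd_round(params: Params, sites: list[Dataset], lr: float) -> Params:
    """Each site takes one local step; the server averages, weighted by site size."""
    total = sum(len(d) for d in sites)
    local = [gradient_step(params, d, lr) for d in sites]
    return [
        sum(p[i] * len(d) for p, d in zip(local, sites)) / total
        for i in range(len(params))
    ]


if __name__ == "__main__":
    # Hypothetical sites with different x ranges and slightly noisy labels.
    sites = [[(0.0, 1.2), (1.0, 2.9)], [(2.0, 5.1), (3.0, 6.8), (4.0, 9.3)]]
    pooled = [example for site in sites for example in site]
    start = [0.5, -0.5]
    federated = fedsgd_round(start, sites, lr=0.01)
    centralized = gradient_step(start, pooled, lr=0.01)
    print(federated, centralized)  # equal up to floating-point rounding
```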

What industries benefit most from federated learning with Cake?

Industries that manage sensitive or regulated data—such as healthcare, finance, insurance, and government—see the greatest impact. Federated learning allows them to innovate with AI while meeting strict compliance standards.

Related posts

The Best Open Source AI: A Complete Guide

Find the best open source AI tools for 2025, from top LLMs to training libraries and vector search, to power your next AI project with full control.

Your Guide to the Top Open-Source MLOps Tools

Find the best open-source MLOps tools for your team. Compare top options for experiment tracking, deployment, and monitoring to build a reliable ML...

13 Open Source RAG Frameworks for Agentic AI

Find the best open source RAG framework for building agentic AI systems. Compare top tools, features, and tips to choose the right solution for your...
